Stephen Wu

for the RadonPy consortium

Omics-scale polymer computational database transferable to real-world artificial intelligence applications

Nov 07, 2025

Abstract:Developing large-scale foundational datasets is a critical milestone in advancing artificial intelligence (AI)-driven scientific innovation. However, unlike AI-mature fields such as natural language processing, materials science, particularly polymer research, has significantly lagged in developing extensive open datasets. This lag is primarily due to the high costs of polymer synthesis and property measurements, along with the vastness and complexity of the chemical space. This study presents PolyOmics, an omics-scale computational database generated through fully automated molecular dynamics simulation pipelines that provide diverse physical properties for over $10^5$ polymeric materials. The PolyOmics database is collaboratively developed by approximately 260 researchers from 48 institutions to bridge the gap between academia and industry. Machine learning models pretrained on PolyOmics can be efficiently fine-tuned for a wide range of real-world downstream tasks, even when only limited experimental data are available. Notably, the generalisation capability of these simulation-to-real transfer models improves significantly as the size of the PolyOmics database increases, exhibiting power-law scaling. The emergence of scaling laws supports the "more is better" principle, highlighting the significance of ultralarge-scale computational materials data for improving real-world prediction performance. This unprecedented omics-scale database reveals vast unexplored regions of polymer materials, providing a foundation for AI-driven polymer science.

Scaling Law of Sim2Real Transfer Learning in Expanding Computational Materials Databases for Real-World Predictions

Aug 07, 2024

Abstract:To address the challenge of limited experimental materials data, extensive physical property databases are being developed based on high-throughput computational experiments, such as molecular dynamics simulations. Previous studies have shown that fine-tuning a predictor pretrained on a computational database to a real system can result in models with outstanding generalization capabilities compared to learning from scratch. This study demonstrates the scaling law of simulation-to-real (Sim2Real) transfer learning for several machine learning tasks in materials science. Case studies of three prediction tasks for polymers and inorganic materials reveal that the prediction error on real systems decreases according to a power law as the size of the computational data increases. Observing the scaling behavior offers various insights for database development, such as determining the sample size necessary to achieve a desired performance, identifying equivalent sample sizes for physical and computational experiments, and guiding the design of data production protocols for downstream real-world tasks.
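
As a rough illustration of how a power-law scaling curve can be used to estimate a required sample size, the sketch below fits $E(n) \approx D\,n^{-\alpha}$ by linear regression in log-log space. The pure power-law form (with no irreducible-error offset), the function names, and the synthetic numbers are all assumptions made for this example; they are not taken from the paper.

```python
import numpy as np

def fit_power_law(n, err):
    """Fit err ≈ D * n**(-alpha) by least squares on log(err) vs. log(n).

    Assumes a pure power law without an offset term (illustrative choice).
    Returns (D, alpha).
    """
    slope, intercept = np.polyfit(np.log(n), np.log(err), 1)
    return float(np.exp(intercept)), float(-slope)

def samples_for_target(D, alpha, target_err):
    """Invert err = D * n**(-alpha) to get the n that reaches target_err."""
    return (D / target_err) ** (1.0 / alpha)

# Synthetic, noise-free data standing in for (database size, real-system error).
n = np.array([1e2, 1e3, 1e4, 1e5])
err = 2.0 * n ** -0.3

D, alpha = fit_power_law(n, err)
n_needed = samples_for_target(D, alpha, target_err=0.05)
print(f"D={D:.3f}, alpha={alpha:.3f}, n for err 0.05 ≈ {n_needed:.3g}")
```

With measured errors in place of the synthetic ones, the fitted exponent indicates how quickly additional computational data pays off, under the assumption that the power-law trend continues to hold.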

Future-proofing geotechnics workflows: accelerating problem-solving with large language models

Dec 14, 2023

Abstract:The integration of Large Language Models (LLMs) like ChatGPT into the workflows of geotechnical engineering has a high potential to transform how the discipline approaches problem-solving and decision-making. This paper delves into the innovative application of LLMs in geotechnical engineering, as explored in a hands-on workshop held in Tokyo, Japan. The event brought together a diverse group of 20 participants, including students, researchers, and professionals from academia, industry, and government sectors, to investigate practical uses of LLMs in addressing specific geotechnical challenges. The workshop facilitated the creation of solutions for four different practical geotechnical problems as illustrative examples, culminating in the development of an academic paper. The paper discusses the potential of LLMs to transform geotechnical engineering practices, highlighting their proficiency in handling a range of tasks from basic data analysis to complex, multimodal problem-solving. It also addresses the challenges in implementing LLMs, particularly in achieving high precision and accuracy in specialized tasks, and underscores the need for expert oversight. The findings demonstrate LLMs' effectiveness in enhancing efficiency, data processing, and decision-making in geotechnical engineering, suggesting a paradigm shift towards more integrated, data-driven approaches in this field. This study not only showcases the potential of LLMs in a specific engineering domain, but also sets a precedent for their broader application in interdisciplinary research and practice, where the synergy of human expertise and artificial intelligence redefines the boundaries of problem-solving.

Pathway to a fully data-driven geotechnics: lessons from materials informatics

Dec 01, 2023

Abstract:This paper elucidates the challenges and opportunities inherent in integrating data-driven methodologies into geotechnics, drawing inspiration from the success of materials informatics. Highlighting the intricacies of soil complexity, heterogeneity, and the lack of comprehensive data, the discussion underscores the pressing need for community-driven database initiatives and open science movements. By leveraging the transformative power of deep learning, particularly in feature extraction from high-dimensional data and the potential of transfer learning, we envision a paradigm shift towards a more collaborative and innovative geotechnics field. The paper concludes with a forward-looking stance, emphasizing the revolutionary potential brought about by advanced computational tools like large language models in reshaping geotechnics informatics.

Bayesian Sequential Stacking Algorithm for Concurrently Designing Molecules and Synthetic Reaction Networks

Mar 01, 2022

Abstract:In the last few years, de novo molecular design using machine learning has made great technical progress but its practical deployment has not been as successful. This is mostly owing to the cost and technical difficulty of synthesizing such computationally designed molecules. To overcome such barriers, various methods for synthetic route design using deep neural networks have been studied intensively in recent years. However, little progress has been made in designing molecules and their synthetic routes simultaneously. Here, we formulate the problem of simultaneously designing molecules with the desired set of properties and their synthetic routes within the framework of Bayesian inference. The design variables consist of a set of reactants in a reaction network and its network topology. The design space is extremely large because it consists of all combinations of purchasable reactants, often in the order of millions or more. In addition, the designed reaction networks can adopt any topology beyond simple multistep linear reaction routes. To solve this hard combinatorial problem, we present a powerful sequential Monte Carlo algorithm that recursively designs a synthetic reaction network by sequentially building up single-step reactions. In a case study of designing drug-like molecules based on commercially available compounds, the proposed method substantially outperforms heuristic combinatorial search methods in computational efficiency, coverage, and novelty with respect to existing compounds.

A General Class of Transfer Learning Regression without Implementation Cost

Jun 23, 2020

Abstract:We propose a novel framework that unifies and extends existing methods of transfer learning (TL) for regression. To bridge a pretrained source model to the model on a target task, we introduce a density-ratio reweighting function, which is estimated through the Bayesian framework with a specific prior distribution. By changing two intrinsic hyperparameters and the choice of the density-ratio model, the proposed method can integrate three popular methods of TL: TL based on cross-domain similarity regularization, a probabilistic TL using the density-ratio estimation, and fine-tuning of pretrained neural networks. Moreover, the proposed method can benefit from its simple implementation without any additional cost; the model can be fully trained using off-the-shelf libraries for supervised learning in which the original output variable is simply transformed to a new output. We demonstrate its simplicity, generality, and applicability using various real data applications.

A Bayesian algorithm for retrosynthesis

Mar 06, 2020

Abstract:The identification of synthetic routes that end with a desired product has been an inherently time-consuming process that is largely dependent on expert knowledge regarding a limited fraction of the entire reaction space. At present, emerging machine-learning technologies are overturning the process of retrosynthetic planning. The objective of this study is to discover synthetic routes backwardly from a given desired molecule to commercially available compounds. The problem is reduced to a combinatorial optimization task with the solution space subject to the combinatorial complexity of all possible pairs of purchasable reactants. We address this issue within the framework of Bayesian inference and computation. The workflow consists of two steps: a deep neural network is trained that forwardly predicts a product of the given reactants with a high level of accuracy, following which this forward model is inverted into the backward one via Bayes' law of conditional probability. Using the backward model, a diverse set of highly probable reaction sequences ending with a given synthetic target is exhaustively explored using a Monte Carlo search algorithm. The Bayesian retrosynthesis algorithm could successfully rediscover 80.3% and 50.0% of known synthetic routes of single-step and two-step reactions within top-10 accuracy, respectively, thereby outperforming state-of-the-art algorithms in terms of the overall accuracy. Remarkably, the Monte Carlo method, which was specifically designed for the presence of diverse multiple routes, often revealed a ranked list of hundreds of reaction routes to the same synthetic target. We investigated the potential applicability of such diverse candidates based on expert knowledge from synthetic organic chemistry.
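
The inversion step described above can be written out as follows. The notation is an assumption introduced here for illustration: $S$ denotes a candidate set of reactants, $Y$ the desired product, $p(Y \mid S)$ the forward model given by the trained neural network, and $p(S)$ a prior over purchasable reactants.

$$
p(S \mid Y) \;=\; \frac{p(Y \mid S)\, p(S)}{\sum_{S'} p(Y \mid S')\, p(S')} \;\propto\; p(Y \mid S)\, p(S)
$$

Because the sum in the denominator ranges over all combinations of purchasable reactants, it is generally not computable directly, which is consistent with the abstract's use of a Monte Carlo search over the backward model rather than exhaustive enumeration.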

Recovering compressed images for automatic crack segmentation using generative models

Mar 06, 2020

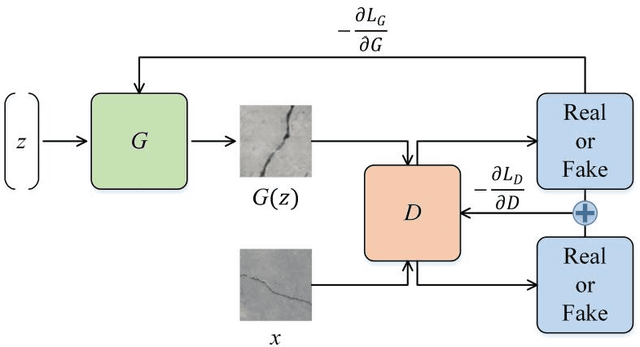

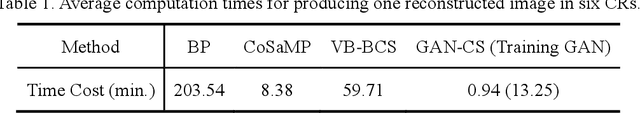

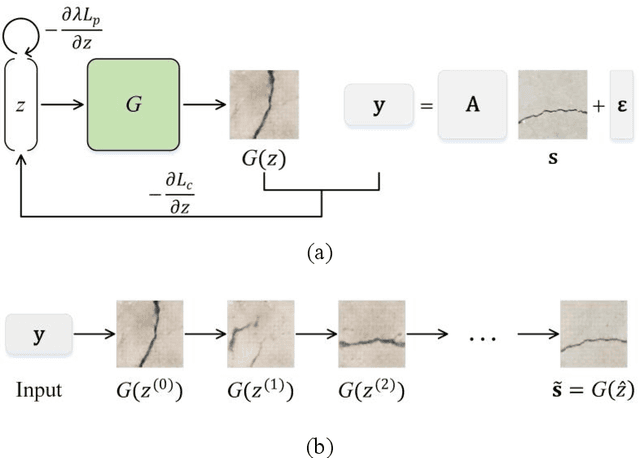

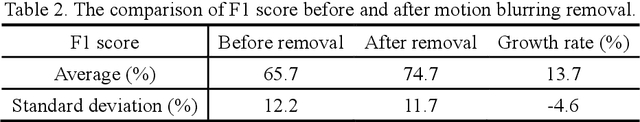

Abstract:In a structural health monitoring (SHM) system that uses digital cameras to monitor cracks on structural surfaces, techniques for reliable and effective data compression are essential to ensure stable and energy-efficient transmission of crack images in wireless devices, e.g., drones and robots with high-definition cameras installed. Compressive sensing (CS) is a signal processing technique that allows accurate recovery of a signal at a sampling rate far below the limit set by the Nyquist sampling theorem. The conventional CS method is based on the principle that, through a regularized optimization, the sparsity property of the original signals in some domain can be exploited to get the exact reconstruction with a high probability. However, the strong assumption that the signals are highly sparse in an invertible space is hard to satisfy for real crack images. In this paper, we present a new approach of CS that replaces the sparsity regularization with a generative model that is able to effectively capture a low-dimensional representation of targeted images. We develop a recovery framework for automatic crack segmentation of compressed crack images based on this new CS method and demonstrate the remarkable performance of the method, which takes advantage of the strong capability of generative models to capture the features required in the crack segmentation task even when the backgrounds of the generated images are not well reconstructed. The superior performance of our recovery framework is illustrated by comparing with three existing CS algorithms. Furthermore, we show that our framework is extensible to other common problems in automatic crack segmentation, such as defect recovery from motion blurring and occlusion.
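
For readers unfamiliar with the conventional, sparsity-regularized form of CS that the abstract contrasts against, the following is a minimal sketch using the iterative shrinkage-thresholding algorithm (ISTA) for an L1-regularized least-squares problem. It is not the generative-model method of the paper; the Gaussian measurement matrix, the problem sizes, and the value of `lam` are assumptions chosen only to make the example run.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (element-wise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, y, lam=0.01, n_iter=2000):
    """Minimize 0.5 * ||A x - y||^2 + lam * ||x||_1 by ISTA."""
    L = np.linalg.norm(A, 2) ** 2      # Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)       # gradient of the data-fit term
        x = soft_threshold(x - grad / L, lam / L)
    return x

# Synthetic k-sparse signal observed through m < n random measurements.
rng = np.random.default_rng(0)
n, m, k = 200, 80, 5
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
A = rng.standard_normal((m, n)) / np.sqrt(m)
y = A @ x_true

x_hat = ista(A, y)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"relative reconstruction error: {rel_err:.4f}")
```

Recovery in this sketch depends on the signal being exactly sparse, which is the assumption the abstract identifies as hard to meet for real crack images.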

Robust Bayesian compressive sensing with data loss recovery for structural health monitoring signals

Mar 28, 2015

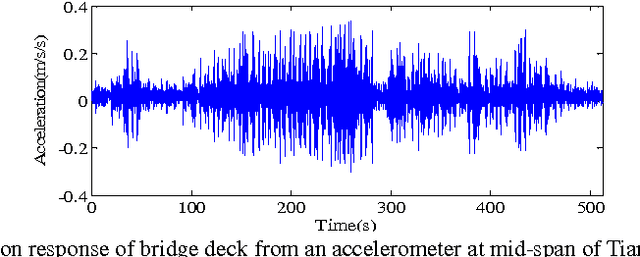

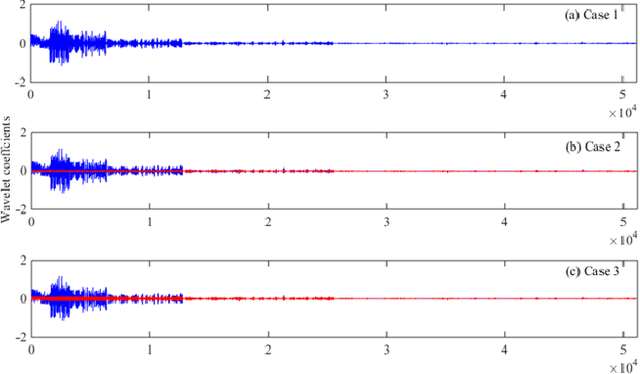

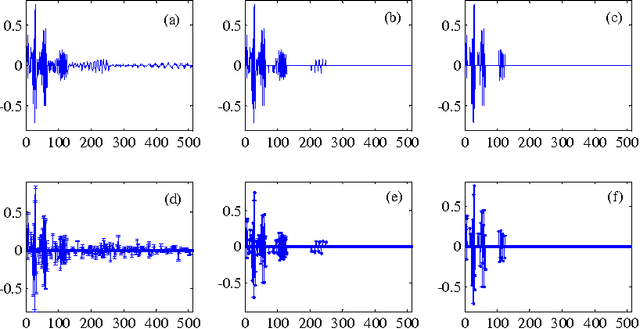

Abstract:The application of compressive sensing (CS) to structural health monitoring is an emerging research topic. The basic idea in CS is to use a specially-designed wireless sensor to sample signals that are sparse in some basis (e.g. wavelet basis) directly in a compressed form, and then to reconstruct (decompress) these signals accurately using some inversion algorithm after transmission to a central processing unit. However, most signals in structural health monitoring are only approximately sparse, i.e. only a relatively small number of the signal coefficients in some basis are significant, but the other coefficients are usually not exactly zero. In this case, perfect reconstruction from compressed measurements is not expected. A new Bayesian CS algorithm is proposed in which robust treatment of the uncertain parameters is explored, including integration over the prediction-error precision parameter to remove it as a "nuisance" parameter. The performance of the new CS algorithm is investigated using compressed data from accelerometers installed on a space-frame structure and on a cable-stayed bridge. Compared with other state-of-the-art CS methods including our previously-published Bayesian method which uses MAP (maximum a posteriori) estimation of the prediction-error precision parameter, the new algorithm shows superior performance in reconstruction robustness and posterior uncertainty quantification. Furthermore, our method can be utilized for recovery of lost data during wireless transmission, regardless of the level of sparseness in the signal.
