Stefano Melacci

Department of Information Engineering and Mathematics, University of Siena, Siena, Italy

LTLZinc: a Benchmarking Framework for Continual Learning and Neuro-Symbolic Temporal Reasoning

Jul 23, 2025

Abstract: Neuro-symbolic artificial intelligence aims to combine neural architectures with symbolic approaches that can represent knowledge in a human-interpretable formalism. Continual learning concerns agents that expand their knowledge over time, improving their skills while avoiding forgetting previously learned concepts. Most existing approaches to neuro-symbolic artificial intelligence are applied only to static scenarios, and the challenging setting in which reasoning along the temporal dimension is necessary has seldom been explored. In this work we introduce LTLZinc, a benchmarking framework that can be used to generate datasets covering a variety of problems, against which neuro-symbolic and continual learning methods can be evaluated along the temporal and constraint-driven dimensions. Our framework generates expressive temporal reasoning and continual learning tasks from a linear temporal logic specification over MiniZinc constraints, together with arbitrary image classification datasets. Fine-grained annotations allow multiple neural and neuro-symbolic training settings on the same generated datasets. Experiments on six neuro-symbolic sequence classification tasks and four class-continual learning tasks generated by LTLZinc demonstrate the challenging nature of temporal learning and reasoning, and highlight limitations of current state-of-the-art methods. We release the LTLZinc generator and ten ready-to-use tasks to the neuro-symbolic and continual learning communities, in the hope of fostering research towards unified temporal learning and reasoning frameworks.
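To make the idea of "a linear temporal logic specification over constraints" concrete, here is a minimal sketch of LTL evaluated over a finite trace (finite-trace semantics, with a strong "next" that is false at the last step). It assumes plain Python predicates standing in for MiniZinc constraints, and the tuple-based formula encoding and the `holds` function are hypothetical illustrations, not the LTLZinc API.

```python
# Hypothetical finite-trace LTL evaluator; atoms are Python predicates that
# stand in for MiniZinc constraints (an assumption, not LTLZinc's encoding).

def holds(phi, trace, i=0):
    """Return True if formula `phi` holds on `trace` starting at position i."""
    op = phi[0]
    if op == "atom":
        return phi[1](trace[i])
    if op == "not":
        return not holds(phi[1], trace, i)
    if op == "and":
        return holds(phi[1], trace, i) and holds(phi[2], trace, i)
    if op == "or":
        return holds(phi[1], trace, i) or holds(phi[2], trace, i)
    if op == "next":  # strong next: false when there is no next position
        return i + 1 < len(trace) and holds(phi[1], trace, i + 1)
    if op == "eventually":
        return any(holds(phi[1], trace, j) for j in range(i, len(trace)))
    if op == "always":
        return all(holds(phi[1], trace, j) for j in range(i, len(trace)))
    if op == "until":  # phi[2] must hold at some j >= i, phi[1] at every step before j
        for j in range(i, len(trace)):
            if holds(phi[2], trace, j):
                return True
            if not holds(phi[1], trace, j):
                return False
        return False
    raise ValueError(f"unknown operator: {op}")


# Each state holds symbolic labels (e.g. digits predicted from two images).
trace = [{"x": 3, "y": 7}, {"x": 1, "y": 2}, {"x": 5, "y": 5}]
sum_is_10 = ("atom", lambda s: s["x"] + s["y"] == 10)
x_smaller = ("atom", lambda s: s["x"] < s["y"])
x_equal_y = ("atom", lambda s: s["x"] == s["y"])

print(holds(("eventually", sum_is_10), trace))           # True
print(holds(("always", sum_is_10), trace))               # False (step 1 sums to 3)
print(holds(("until", x_smaller, x_equal_y), trace))     # True
print(holds(("next", ("next", sum_is_10)), trace))       # True
```

In a sequence classification setting of this kind, a model would have to predict the symbolic labels of each step from images before such a formula could be checked, which is where perception and temporal reasoning interact.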

A Neuro-Symbolic Framework for Sequence Classification with Relational and Temporal Knowledge

May 08, 2025

Abstract: One of the goals of neuro-symbolic artificial intelligence is to exploit background knowledge to improve the performance of learning tasks. However, most existing frameworks focus on the simplified scenario in which knowledge does not change over time and does not cover the temporal dimension. In this work we consider the much more challenging problem of knowledge-driven sequence classification, where different portions of knowledge must be employed at different timesteps and temporal relations are available. Our experimental evaluation compares multi-stage neuro-symbolic and neural-only architectures, and it is conducted on a newly introduced benchmarking framework. Results demonstrate the challenging nature of this novel setting and highlight under-explored shortcomings of neuro-symbolic methods, providing a valuable reference for future research.

Generative System Dynamics in Recurrent Neural Networks

Apr 16, 2025

Abstract: In this study, we investigate the continuous-time dynamics of Recurrent Neural Networks (RNNs), focusing on systems with nonlinear activation functions. The objective of this work is to identify conditions under which RNNs exhibit perpetual oscillatory behavior without converging to static fixed points. We establish that skew-symmetric weight matrices are fundamental to enabling stable limit cycles in both linear and nonlinear configurations. We further demonstrate that hyperbolic tangent-like activation functions (odd, bounded, and continuous) preserve these oscillatory dynamics by ensuring motion invariants in state space. Numerical simulations show that nonlinear activation functions not only maintain limit cycles but also enhance the numerical stability of the system integration process, mitigating the instabilities commonly associated with the forward Euler method. The experimental results of this analysis highlight practical considerations for designing neural architectures capable of capturing complex temporal dependencies, i.e., strategies for enhancing memorization skills in recurrent models.
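The role of skew-symmetry can be illustrated with a minimal sketch. It assumes the model dx/dt = W tanh(x) with W skew-symmetric (our own choice of formulation, not necessarily the paper's). For this model H(x) = sum_i log cosh(x_i) satisfies dH/dt = tanh(x)^T W tanh(x) = 0, so H is a motion invariant. For the linear system dx/dt = W x, forward Euler gives ||x + hWx||^2 = ||x||^2 + h^2 ||Wx||^2, so the discrete norm can only grow. All function names below are hypothetical.

```python
import numpy as np


def random_skew_symmetric(n, seed=0):
    """Random n x n matrix W with W.T == -W, scaled to unit spectral norm."""
    rng = np.random.default_rng(seed)
    m = rng.standard_normal((n, n))
    w = m - m.T
    return w / np.linalg.norm(w, 2)


def invariant(x):
    """H(x) = sum_i log cosh(x_i); its gradient is tanh(x)."""
    return float(np.sum(np.log(np.cosh(x))))


def euler_step(f, x, h):
    """One forward Euler step."""
    return x + h * f(x)


def rk4_step(f, x, h):
    """One classical fourth-order Runge-Kutta step."""
    k1 = f(x)
    k2 = f(x + 0.5 * h * k1)
    k3 = f(x + 0.5 * h * k2)
    k4 = f(x + h * k3)
    return x + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


n, h, steps = 4, 0.01, 2000
W = random_skew_symmetric(n)
x0 = np.ones(n)


def linear(x):
    return W @ x  # dx/dt = W x


def nonlinear(x):
    return W @ np.tanh(x)  # dx/dt = W tanh(x)


# Continuous time: dH/dt = tanh(x)^T W tanh(x), which vanishes for skew-symmetric W.
dH_dt = float(np.tanh(x0) @ W @ np.tanh(x0))

# Forward Euler on the linear system: x^T W x = 0, so each step adds h^2 ||W x||^2.
x_lin = x0.copy()
for _ in range(steps):
    x_lin = euler_step(linear, x_lin, h)

# A higher-order integrator keeps H(x) nearly constant along the nonlinear flow.
x_nl = x0.copy()
for _ in range(steps):
    x_nl = rk4_step(nonlinear, x_nl, h)

print(dH_dt)
print(np.linalg.norm(x0), np.linalg.norm(x_lin))
print(invariant(x0), invariant(x_nl))
```

Swapping `rk4_step` for `euler_step` in the second loop is a quick way to inspect how forward Euler behaves on the nonlinear system with these (arbitrary) step-size settings.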

Pirates of the RAG: Adaptively Attacking LLMs to Leak Knowledge Bases

Dec 24, 2024

Abstract: The growing ubiquity of Retrieval-Augmented Generation (RAG) systems in several real-world services raises severe concerns about their security. A RAG system improves the generative capabilities of a Large Language Model (LLM) through a retrieval mechanism that operates on a private knowledge base, whose unintended exposure could lead to severe consequences, including breaches of private and sensitive information. This paper presents a black-box attack that forces a RAG system to leak its private knowledge base and that, unlike existing approaches, is adaptive and automatic. A relevance-based mechanism and an attacker-side open-source LLM favor the generation of effective queries to leak most of the (hidden) knowledge base. Extensive experimentation demonstrates the quality of the proposed algorithm across different RAG pipelines and domains, in comparison with very recent related approaches, which turn out to be either not fully black-box, not adaptive, or not based on open-source models. The findings of our study underscore the urgent need for more robust privacy safeguards in the design and deployment of RAG systems.

A Unified Framework for Neural Computation and Learning Over Time

Sep 18, 2024

Abstract: This paper proposes Hamiltonian Learning, a novel unified framework for learning with neural networks "over time", i.e., from a possibly infinite stream of data, in an online manner, without access to future information. Existing works focus on the simplified setting in which the stream has a known finite length or is segmented into smaller sequences, leveraging well-established learning strategies from statistical machine learning. In this paper, the problem of learning over time is rethought from scratch, leveraging tools from optimal control theory, which yield a unifying view of the temporal dynamics of neural computations and learning. Hamiltonian Learning is based on differential equations that: (i) can be integrated without the need for external software solvers; (ii) generalize the well-established notion of gradient-based learning in feed-forward and recurrent networks; (iii) open up novel perspectives. The proposed framework is showcased by experimentally showing how it can recover gradient-based learning, comparing it to out-of-the-box optimizers, and describing how it is flexible enough to switch from fully-local to partially/non-local computational schemes, possibly distributed over multiple devices, and to BackPropagation without storing activations. Hamiltonian Learning is easy to implement and can help researchers approach the problem of learning over time in a principled and innovative manner.

Continual Learning of Conjugated Visual Representations through Higher-order Motion Flows

Sep 16, 2024

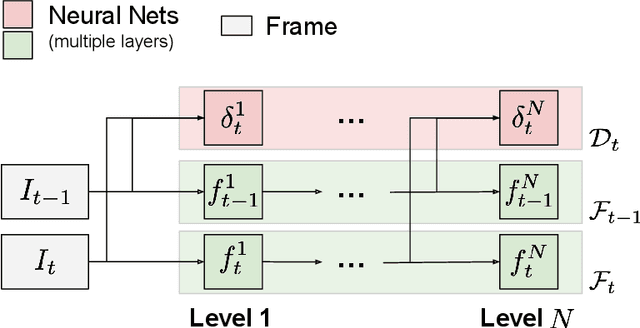

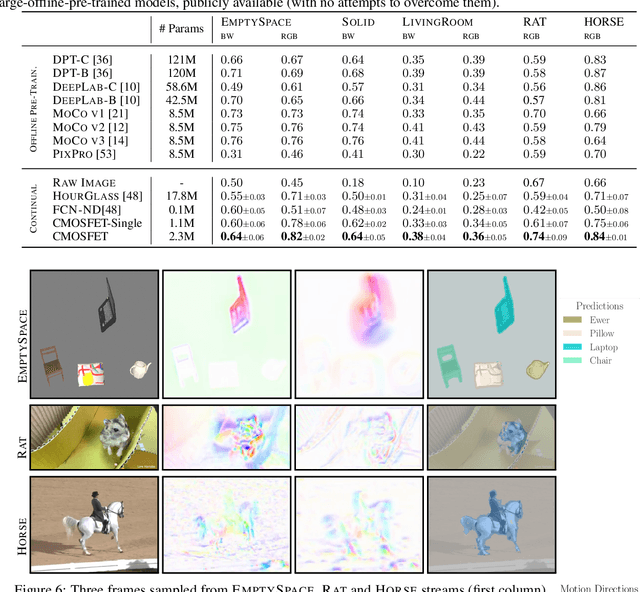

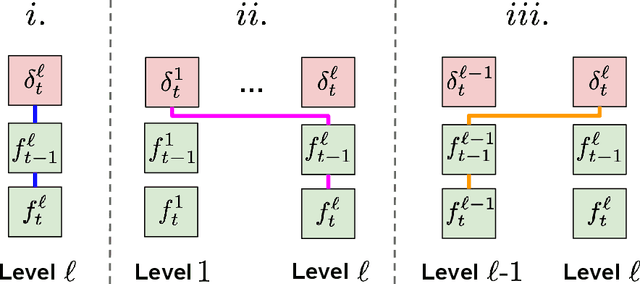

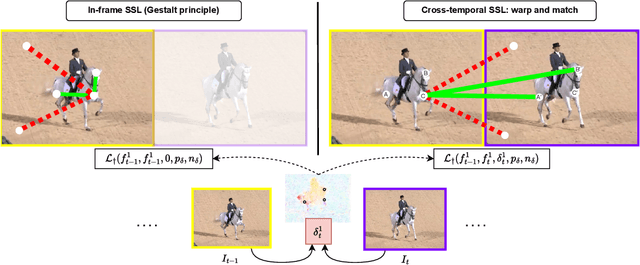

Abstract: Learning with neural networks from a continuous stream of visual information presents several challenges due to the non-i.i.d. nature of the data. However, it also offers novel opportunities to develop representations that are consistent with the information flow. In this paper we investigate the case of unsupervised continual learning of pixel-wise features subject to multiple motion-induced constraints, which we therefore call motion-conjugated feature representations. Unlike in existing approaches, motion is not a given signal (either ground-truth or estimated by external modules), but the outcome of a progressive and autonomous learning process, occurring at various levels of the feature hierarchy. Multiple motion flows are estimated with neural networks and characterized by different levels of abstraction, spanning from traditional optical flow to other latent signals originating from higher-level features, hence called higher-order motions. Continuously learning to develop consistent multi-order flows and representations is prone to trivial solutions, which we counteract by introducing a self-supervised contrastive loss that is spatially aware and based on flow-induced similarity. We assess our model on photorealistic synthetic streams and real-world videos, comparing it to pre-trained state-of-the-art feature extractors (including Transformer-based ones) and to recent unsupervised learning models, and it significantly outperforms these alternatives.

Dynamic Decoupling of Placid Terminal Attractor-based Gradient Descent Algorithm

Sep 10, 2024

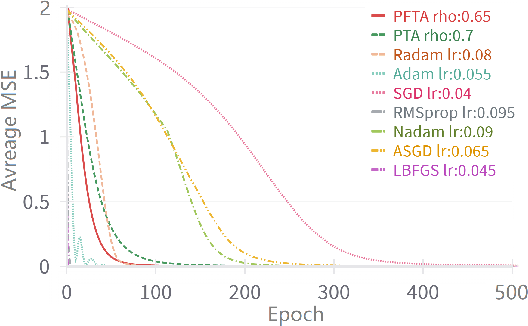

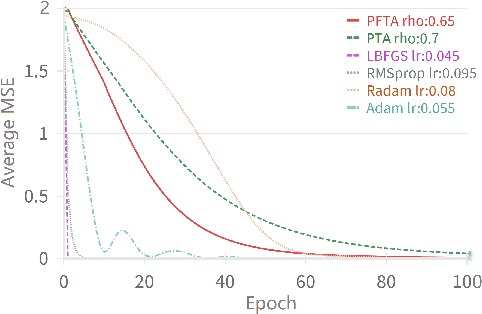

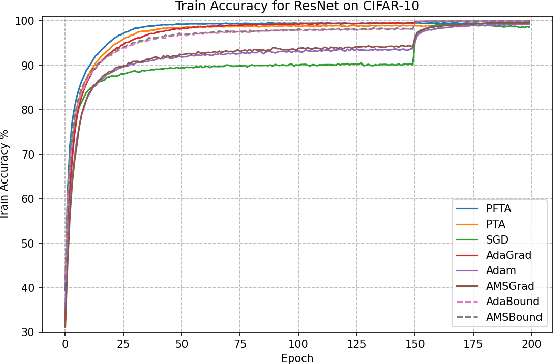

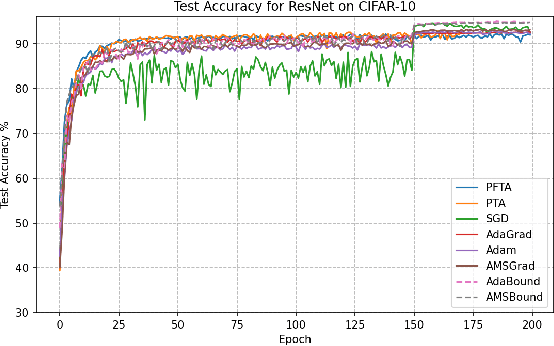

Abstract: Gradient descent (GD) and stochastic gradient descent (SGD) have been widely used in a large number of application domains. Therefore, understanding the dynamics of GD and improving its convergence speed remains of great importance. This paper carefully analyzes the dynamics of GD based on the terminal attractor at different stages of its gradient flow. On the basis of terminal sliding mode theory and terminal attractor theory, four adaptive learning rates are designed. Their performance is examined through a detailed theoretical analysis, and the running times of the learning procedures are evaluated and compared. The total times of their learning processes are also studied in detail. To evaluate their effectiveness, simulation results are reported on a function approximation problem and an image classification problem.

State-Space Modeling in Long Sequence Processing: A Survey on Recurrence in the Transformer Era

Jun 13, 2024

Abstract: Effectively learning from sequential data is a longstanding goal of Artificial Intelligence, especially in the case of long sequences. Since the dawn of Machine Learning, several researchers have engaged in the search for algorithms and architectures capable of processing sequences of patterns, retaining information about past inputs while still leveraging upcoming data, without losing precious long-term dependencies and correlations. While this ultimate goal is inspired by the human hallmark of continuous real-time processing of sensory information, several solutions simplified the learning paradigm by artificially limiting the processed context or by dealing with sequences of limited length, given in advance. These solutions were further emphasized by the ubiquity of Transformers, which initially overshadowed the role of Recurrent Neural Nets. However, recurrent networks are experiencing a strong recent revival due to the growing popularity of (deep) State-Space models and novel instances of large-context Transformers, which are both based on recurrent computations to overcome several limits of currently ubiquitous technologies. In fact, the fast development of Large Language Models has increased interest in efficient solutions to process data over time. This survey provides an in-depth summary of the latest approaches based on recurrent models for sequential data processing. A complete taxonomy of the latest trends in architectural and algorithmic solutions is reported and discussed, guiding researchers in this appealing research field. The emerging picture suggests that there is room for novel routes, constituted by learning algorithms that depart from standard Backpropagation Through Time, towards a more realistic scenario where patterns are effectively processed online, leveraging local-forward computations and opening further research on this topic.
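Many deep state-space models build on a linear recurrence that can be stepped online, one input at a time, or unrolled into a convolution over the whole sequence. The minimal sketch below assumes a diagonal complex state matrix `a` with real output taken as the real part. The names `linear_recurrence` and `convolution_form` are hypothetical and not taken from the survey. It checks that both views produce the same outputs, since h_t = sum_j a^(t-j) b u_j implies y_t = sum_k K_k u_(t-k) with K_k = Re(sum_i c_i a_i^k b_i).

```python
import numpy as np


def linear_recurrence(a, b, c, u):
    """Online form: h_t = a * h_{t-1} + b * u_t, y_t = Re(c . h_t), diagonal a."""
    h = np.zeros_like(a)
    ys = []
    for u_t in u:
        h = a * h + b * u_t          # constant memory per step, no look-ahead
        ys.append(np.real(np.dot(c, h)))
    return np.array(ys)


def convolution_form(a, b, c, u):
    """Unrolled form: y_t = sum_{k=0..t} K_k u_{t-k}, K_k = Re(sum_i c_i a_i^k b_i)."""
    T = len(u)
    K = np.array([np.real(np.sum(c * a**k * b)) for k in range(T)])
    return np.array([np.dot(K[: t + 1], u[t::-1]) for t in range(T)])


rng = np.random.default_rng(0)
n, T = 8, 64
a = 0.95 * np.exp(1j * rng.uniform(0.0, np.pi, n))  # |a_i| < 1 keeps the state bounded
b = rng.standard_normal(n) + 1j * rng.standard_normal(n)
c = rng.standard_normal(n) + 1j * rng.standard_normal(n)
u = rng.standard_normal(T)

y_rec = linear_recurrence(a, b, c, u)
y_conv = convolution_form(a, b, c, u)
print(np.max(np.abs(y_rec - y_conv)))
```

The recurrent form needs only the current state to process the next input, which is what makes it attractive for the online setting discussed above, while the convolutional form needs the whole sequence in advance.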

The KANDY Benchmark: Incremental Neuro-Symbolic Learning and Reasoning with Kandinsky Patterns

Feb 27, 2024

Abstract: Artificial intelligence is continuously seeking novel challenges and benchmarks to effectively measure performance and to advance the state of the art. In this paper we introduce KANDY, a benchmarking framework that can be used to generate a variety of learning and reasoning tasks inspired by Kandinsky patterns. By creating curricula of binary classification tasks with increasing complexity and with sparse supervision, KANDY can be used to implement benchmarks for continual and semi-supervised learning, with a specific focus on symbol compositionality. Classification rules are also provided in the ground truth to enable analysis of interpretable solutions. Together with the benchmark generation pipeline, we release two curricula, an easier one and a harder one, that we propose as new challenges for the research community. With a thorough experimental evaluation, we show how both state-of-the-art neural models and purely symbolic approaches struggle to solve most of the tasks, thus calling for the application of advanced neuro-symbolic methods trained over time.

Enhancing Modern Supervised Word Sense Disambiguation Models by Semantic Lexical Resources

Feb 20, 2024

Abstract: Supervised models for Word Sense Disambiguation (WSD) currently yield state-of-the-art results on the most popular benchmarks. Despite the recent introduction of Word Embeddings and Recurrent Neural Networks to design powerful context-related features, interest in improving WSD models using Semantic Lexical Resources (SLRs) is mostly restricted to knowledge-based approaches. In this paper, we enhance "modern" supervised WSD models by exploiting two popular SLRs: WordNet and WordNet Domains. We propose an effective way to introduce semantic features into the classifiers, and we consider using the SLR structure to augment the training data. We study the effect of different types of semantic features, investigating their interaction with local contexts encoded by means of mixtures of Word Embeddings or Recurrent Neural Networks, and we extend the proposed model into a novel multi-layer architecture for WSD. A detailed experimental comparison in the recent Unified Evaluation Framework (Raganato et al., 2017) shows that the proposed approach leads to supervised models that compare favourably with the state of the art.

* The 11th International Conference on Language Resources and Evaluation (LREC 2018)