Sri Garimella

DuRep: Dual-Mode Speech Representation Learning via ASR-Aware Distillation

May 26, 2025

Abstract:Recent advancements in speech encoders have drawn attention due to their integration with Large Language Models for various speech tasks. While most research has focused on either causal or full-context speech encoders, there's limited exploration to effectively handle both streaming and non-streaming applications, while achieving state-of-the-art performance. We introduce DuRep, a Dual-mode Speech Representation learning setup, which enables a single speech encoder to function efficiently in both offline and online modes without additional parameters or mode-specific adjustments, across downstream tasks. DuRep-200M, our 200M parameter dual-mode encoder, achieves 12% and 11.6% improvements in streaming and non-streaming modes, over baseline encoders on Multilingual ASR. Scaling this approach to 2B parameters, DuRep-2B sets new performance benchmarks across ASR and non-ASR tasks. Our analysis reveals interesting trade-offs between acoustic and semantic information across encoder layers.

An Efficient Self-Learning Framework For Interactive Spoken Dialog Systems

Sep 16, 2024

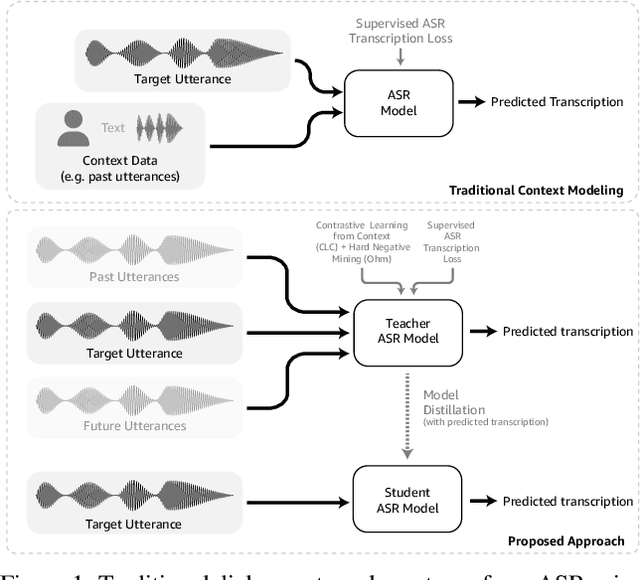

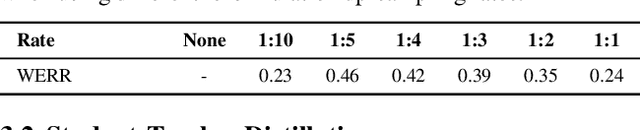

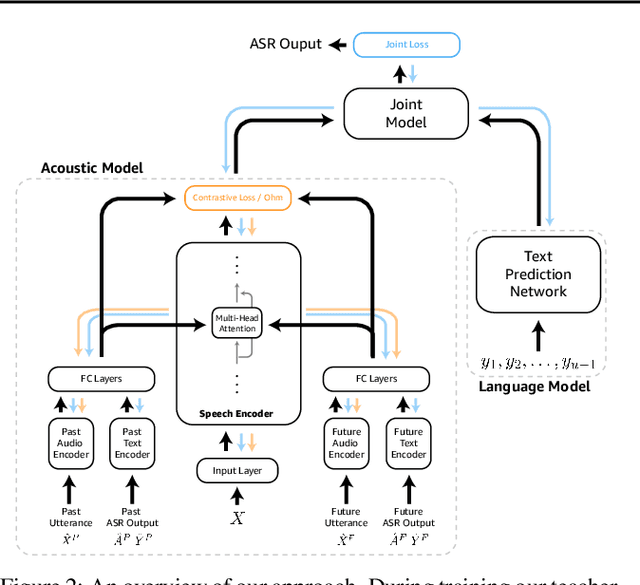

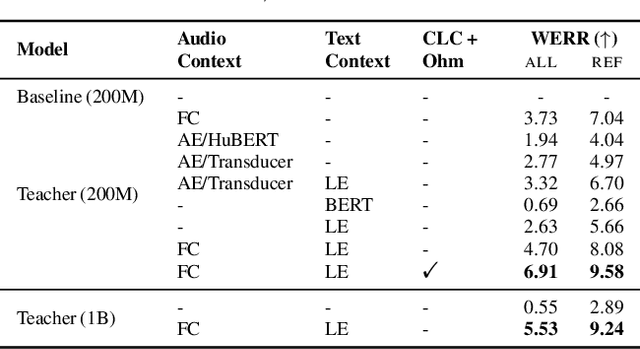

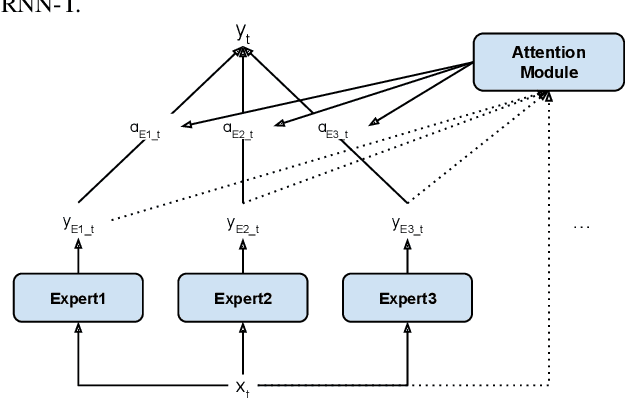

Abstract:Dialog systems, such as voice assistants, are expected to engage with users in complex, evolving conversations. Unfortunately, traditional automatic speech recognition (ASR) systems deployed in such applications are usually trained to recognize each turn independently and lack the ability to adapt to the conversational context or incorporate user feedback. In this work, we introduce a general framework for ASR in dialog systems that can go beyond learning from single-turn utterances and learn over time how to adapt to both explicit supervision and implicit user feedback present in multi-turn conversations. We accomplish that by leveraging advances in student-teacher learning and context-aware dialog processing, and designing contrastive self-supervision approaches with Ohm, a new online hard-negative mining approach. We show that leveraging our new framework compared to traditional training leads to relative WER reductions of close to 10% in real-world dialog systems, and up to 26% on public synthetic data.

Unified Modeling of Multi-Domain Multi-Device ASR Systems

May 13, 2022

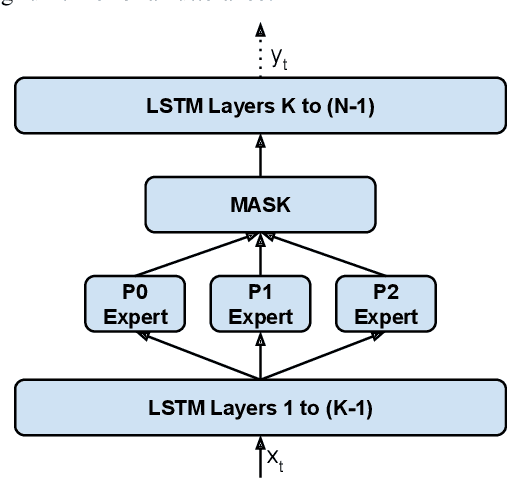

Abstract:Modern Automatic Speech Recognition (ASR) systems often use a portfolio of domain-specific models in order to get high accuracy for distinct user utterance types across different devices. In this paper, we propose an innovative approach that integrates the different per-domain per-device models into a unified model, using a combination of domain embedding, domain experts, mixture of experts and adversarial training. We run careful ablation studies to show the benefit of each of these innovations in contributing to the accuracy of the overall unified model. Experiments show that our proposed unified modeling approach actually outperforms the carefully tuned per-domain models, giving relative gains of up to 10% over a baseline model with negligible increase in the number of parameters.

Improving RNN-T ASR Performance with Date-Time and Location Awareness

Jun 16, 2021

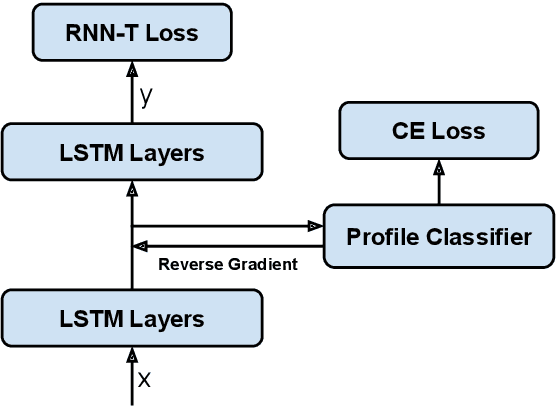

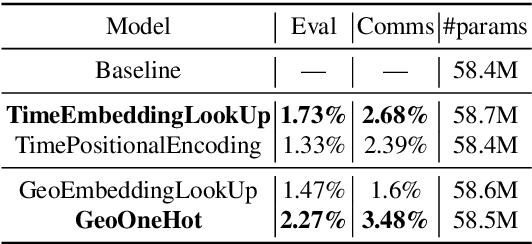

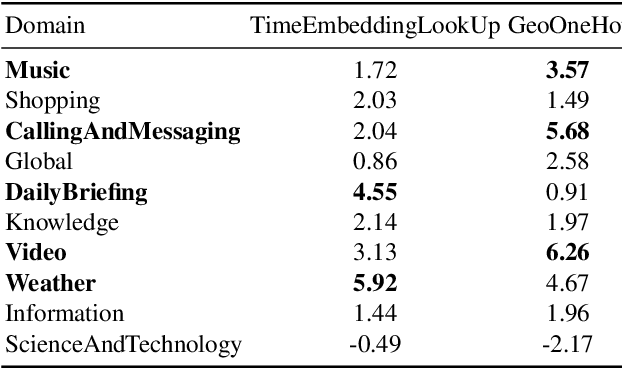

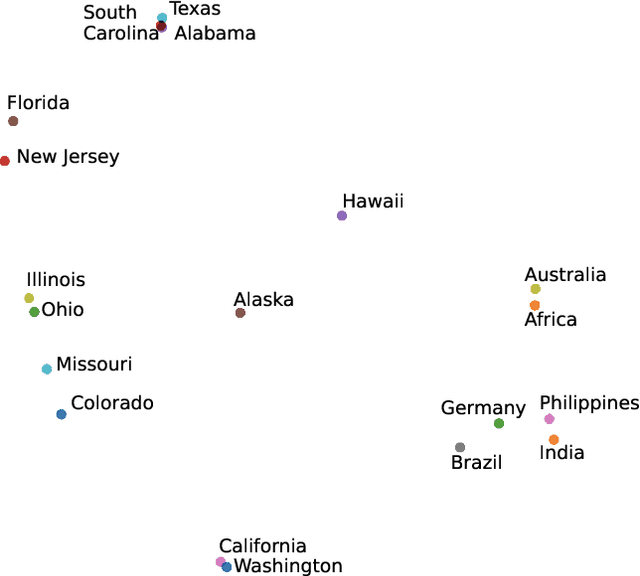

Abstract:In this paper, we explore the benefits of incorporating context into a Recurrent Neural Network Transducer (RNN-T) based Automatic Speech Recognition (ASR) model to improve speech recognition for virtual assistants. Specifically, we use meta information extracted from the time at which the utterance is spoken and the approximate location information to make ASR context aware. We show that these contextual signals, when used individually, improve overall performance by as much as 3.48% relative to the baseline, and that when the contexts are combined, the model learns complementary features and recognition improves by 4.62%. On specific domains, these contextual signals show improvements as high as 11.5%, without any significant degradation on others. We ran experiments with models trained on 30K hours and 10K hours of data. We show that the improvement with the 10K-hour dataset is much larger than the one obtained with the 30K-hour dataset. Our results indicate that with limited data to train the ASR model, contextual signals can improve performance significantly.

Knowledge Distillation and Data Selection for Semi-Supervised Learning in CTC Acoustic Models

Aug 10, 2020

Abstract:Semi-supervised learning (SSL) is an active area of research which aims to utilize unlabelled data in order to improve the accuracy of speech recognition systems. The current study proposes a methodology for integrating two key ideas: 1) SSL using the connectionist temporal classification (CTC) objective and teacher-student based learning, and 2) designing effective data-selection mechanisms for leveraging unlabelled data to boost the performance of student models. Our aim is to establish the importance of good criteria in selecting samples from a large pool of unlabelled data based on attributes like confidence measure, speaker and content variability. The question we try to answer is: Is it possible to design a data selection mechanism which reduces dependence on a large set of randomly selected unlabelled samples without compromising on Word Error Rate (WER)? We perform empirical investigations of different data selection methods to answer this question and quantify the effect of different sampling strategies. In a semi-supervised ASR setting with 40000 hours of carefully selected unlabelled data, our CTC-SSL approach gives a 17% relative WER improvement over a baseline CTC system trained with labelled data. It also achieves on-par performance with a CTC-SSL system trained on an order of magnitude more unlabelled data selected by random sampling.

Streaming End-to-End Bilingual ASR Systems with Joint Language Identification

Jul 08, 2020

Abstract:Multilingual ASR technology simplifies model training and deployment, but its accuracy is known to depend on the availability of language information at runtime. Since language identity is seldom known beforehand in real-world scenarios, it must be inferred on-the-fly with minimum latency. Furthermore, in voice-activated smart assistant systems, language identity is also required for downstream processing of ASR output. In this paper, we introduce streaming, end-to-end, bilingual systems that perform both ASR and language identification (LID) using the recurrent neural network transducer (RNN-T) architecture. On the input side, embeddings from pretrained acoustic-only LID classifiers are used to guide RNN-T training and inference, while on the output side, language targets are jointly modeled with ASR targets. The proposed method is applied to two language pairs: English-Spanish as spoken in the United States, and English-Hindi as spoken in India. Experiments show that for English-Spanish, the bilingual joint ASR-LID architecture matches monolingual ASR and acoustic-only LID accuracies. For the more challenging (owing to within-utterance code switching) case of English-Hindi, English ASR and LID metrics show degradation. Overall, in scenarios where users switch dynamically between languages, the proposed architecture offers a promising simplification over running multiple monolingual ASR models and an LID classifier in parallel.

Language Model Bootstrapping Using Neural Machine Translation For Conversational Speech Recognition

Dec 02, 2019

Abstract:Building conversational speech recognition systems for new languages is constrained by the availability of utterances that capture user-device interactions. Data collection is both expensive and limited by the speed of manual transcription. In order to address this, we advocate the use of neural machine translation as a data augmentation technique for bootstrapping language models. Machine translation (MT) offers a systematic way of incorporating collections from mature, resource-rich conversational systems that may be available for a different language. However, ingesting raw translations from a general purpose MT system may not be effective owing to the presence of named entities, intra sentential code-switching and the domain mismatch between the conversational data being translated and the parallel text used for MT training. To circumvent this, we explore the following domain adaptation techniques: (a) sentence embedding based data selection for MT training, (b) model finetuning, and (c) rescoring and filtering translated hypotheses. Using Hindi as the experimental testbed, we translate US English utterances to supplement the transcribed collections. We observe a relative word error rate reduction of 7.8-15.6%, depending on the bootstrapping phase. Fine grained analysis reveals that translation particularly aids the interaction scenarios which are underrepresented in the transcribed data.
