Souma Chowdhury

Learning When to Jump for Off-road Navigation

Jan 31, 2026

Abstract:Low speed does not always guarantee safety in off-road driving. For instance, crossing a ditch may be risky at low speed because the vehicle can get stuck, yet safe at a higher speed with a controlled, accelerated jump. Achieving such behavior requires path planning that explicitly models complex motion dynamics, whereas existing methods often neglect this aspect and plan solely based on positions or a fixed velocity. To address this gap, we introduce the Motion-aware Traversability (MAT) representation, which explicitly models terrain cost conditioned on actual robot motion. Instead of assigning a single scalar score for traversability, MAT models each terrain region as a Gaussian function of velocity. During online planning, we decompose the terrain cost computation into two stages: (1) predict terrain-dependent Gaussian parameters from perception in a single forward pass, and (2) efficiently update terrain costs for new velocities inferred from current dynamics by evaluating these functions without repeated inference. We develop a system that integrates MAT to enable agile off-road navigation and evaluate it in both simulated and real-world environments with various obstacles. Results show that MAT achieves real-time efficiency and enhances the performance of off-road navigation, reducing path detours by 75% while maintaining safety across challenging terrains.
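The two-stage cost computation described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the parameter names (`amplitude`, `mean`, `sigma`), the example terrain cells, and the choice that cost peaks at `mean` are all assumptions made for illustration. Stage 1 is replaced by a hard-coded dictionary standing in for the output of a perception network; stage 2 re-evaluates the Gaussian for each new velocity without any further inference.

```python
import math

def gaussian_cost(v, amplitude, mean, sigma):
    """Terrain cost as a Gaussian function of velocity v (assumed functional form)."""
    return amplitude * math.exp(-0.5 * ((v - mean) / sigma) ** 2)

# Stage 1 (stand-in): per-cell Gaussian parameters that a perception model
# would predict once. These values are hypothetical.
cell_params = {
    "ditch": {"amplitude": 1.0, "mean": 0.5, "sigma": 1.0},
    "flat": {"amplitude": 0.1, "mean": 0.0, "sigma": 5.0},
}

def update_costs(params, v):
    """Stage 2: evaluate every cell's cost at velocity v, with no re-inference."""
    return {name: gaussian_cost(v, **p) for name, p in params.items()}

slow = update_costs(cell_params, 0.5)  # costs at a low velocity
fast = update_costs(cell_params, 4.0)  # costs at a higher velocity
```

With these hypothetical parameters the ditch cell is costly at low velocity and cheap at high velocity, which mirrors the ditch-crossing example in the abstract.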

CLEAR: A Semantic-Geometric Terrain Abstraction for Large-Scale Unstructured Environments

Jan 19, 2026

Abstract:Long-horizon navigation in unstructured environments demands terrain abstractions that scale to tens of km$^2$ while preserving semantic and geometric structure, a combination existing methods fail to achieve. Grids scale poorly; quadtrees misalign with terrain boundaries; neither encodes landcover semantics essential for traversability-aware planning. This yields infeasible or unreliable paths for autonomous ground vehicles operating over 10+ km$^2$ under real-time constraints. CLEAR (Connected Landcover Elevation Abstract Representation) couples boundary-aware spatial decomposition with recursive plane fitting to produce convex, semantically aligned regions encoded as a terrain-aware graph. Evaluated on maps spanning 9-100 km$^2$ using a physics-based simulator, CLEAR achieves up to 10x faster planning than raw grids with only 6.7% cost overhead and delivers 6-9% shorter, more reliable paths than other abstraction baselines. These results highlight CLEAR's scalability and utility for long-range navigation in applications such as disaster response, defense, and planetary exploration.

Learning-aided Bigraph Matching Approach to Multi-Crew Restoration of Damaged Power Networks Coupled with Road Transportation Networks

Jun 24, 2025

Abstract:The resilience of critical infrastructure networks (CINs) after disruptions, such as those caused by natural hazards, depends on both the speed of restoration and the extent to which operational functionality can be regained. Allocating resources for restoration is a combinatorial optimal planning problem that involves determining which crews will repair specific network nodes and in what order. This paper presents a novel graph-based formulation that merges two interconnected graphs, representing crew and transportation nodes and power grid nodes, into a single heterogeneous graph. To enable efficient planning, graph reinforcement learning (GRL) is integrated with bigraph matching. GRL is utilized to design the incentive function for assigning crews to repair tasks based on the graph-abstracted state of the environment, ensuring generalization across damage scenarios. Two learning techniques are employed: a graph neural network trained using Proximal Policy Optimization and another trained via Neuroevolution. The learned incentive functions inform a bipartite graph that links crews to repair tasks, enabling weighted maximum matching for crew-to-task allocations. An efficient simulation environment that pre-computes optimal node-to-node path plans is used to train the proposed restoration planning methods. An IEEE 8500-bus power distribution test network coupled with a 21 square km transportation network is used as the case study, with scenarios varying in terms of numbers of damaged nodes, depots, and crews. Results demonstrate the approach's generalizability and scalability across scenarios, with learned policies providing 3-fold better performance than random policies, while also outperforming optimization-based solutions in both computation time (by several orders of magnitude) and power restored.
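The crew-to-task allocation step, weighted maximum matching on a bipartite graph, can be sketched on a toy instance. This is only an illustration under stated assumptions: the incentive values are made up rather than produced by a learned policy, and the matching is found by brute-force enumeration, which is a stand-in suitable only for very small instances and not the solver used in the paper. The function name `max_weight_matching` is hypothetical.

```python
from itertools import permutations

def max_weight_matching(weights):
    """Return the crew-to-task assignment with the largest total incentive.

    weights[i][j] is the incentive for crew i taking task j.
    Brute force: assumes the number of crews does not exceed the number of tasks.
    """
    n_crews = len(weights)
    n_tasks = len(weights[0])
    best_total, best_tasks = float("-inf"), None
    # Enumerate every way of giving each crew a distinct task.
    for tasks in permutations(range(n_tasks), n_crews):
        total = sum(weights[crew][task] for crew, task in enumerate(tasks))
        if total > best_total:
            best_total, best_tasks = total, tasks
    return dict(enumerate(best_tasks)), best_total

# Hypothetical incentives for 2 crews and 3 repair tasks.
incentives = [
    [4.0, 1.0, 3.0],
    [2.0, 0.5, 5.0],
]
assignment, total = max_weight_matching(incentives)
```

Here `assignment` maps each crew index to a task index. For realistic problem sizes a polynomial-time assignment solver would replace the enumeration.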

A Talent-infused Policy-gradient Approach to Efficient Co-Design of Morphology and Task Allocation Behavior of Multi-Robot Systems

Nov 27, 2024

Abstract:Interesting and efficient collective behavior observed in multi-robot or swarm systems emerges from the individual behavior of the robots. The functional space of individual robot behaviors is in turn shaped or constrained by the robot's morphology or physical design. Thus the full potential of multi-robot systems can be realized by concurrently optimizing the morphology and behavior of individual robots, informed by the environment's feedback about their collective performance, as opposed to treating morphology and behavior choices disparately or in sequence (the classical approach). This paper presents an efficient concurrent design or co-design method to explore this potential and understand how morphology choices impact collective behavior, particularly in a multi-robot task allocation (MRTA) problem focused on a flood response scenario, where the individual behavior is designed via graph reinforcement learning. Computational efficiency in this case is attributed to a new near-exact decomposition of the co-design problem into a series of simpler optimization and learning problems. This is achieved through i) the identification and use of the Pareto front of Talent metrics that represent morphology-dependent robot capabilities, and ii) learning the selection of the best Talent trade-offs and the individual robot policy that jointly maximize the MRTA performance. Applied to a multi-unmanned aerial vehicle flood response use case, the co-design outcomes are shown to readily outperform sequential design baselines. Significant differences in morphology and learned behavior are also observed when comparing co-designed single robot vs. co-designed multi-robot systems for similar operations.

Optimizing Design and Control of Running Robots Abstracted as Torque Driven Spring Loaded Inverted Pendulum (TD-SLIP)

Jul 16, 2024

Abstract:Legged locomotion shows promise for running in complex, unstructured environments. Designing such legged robots requires considering heterogeneous, multi-domain constraints and variables, from mechanical hardware and geometry choices to controller profiles. However, very few formal or systematic (as opposed to ad hoc) design formulations and frameworks exist to identify feasible and robust running platforms, especially at the small (sub-500 g) scale. This critical gap in running legged robot design is addressed here by abstracting the motion of legged robots through a torque-driven spring-loaded inverted pendulum (TD-SLIP) model, and deriving constraints that result in stable cyclic forward locomotion in the presence of system noise. Synthetic noise is added to the initial state in candidate design evaluation to simulate accumulated errors in open-loop control. The design space was defined in terms of morphological parameters, such as the leg properties and system mass, actuator selection, and an open-loop voltage profile. These attributes were optimized with a well-known particle swarm optimization solver that can handle mixed-discrete variables. Two separate case studies minimized the difference in touchdown angle from stride to stride and the actuation energy, respectively. Both cases resulted in legged robot designs with relatively repeatable and stable dynamics, while presenting distinct geometry and controller profile choices.

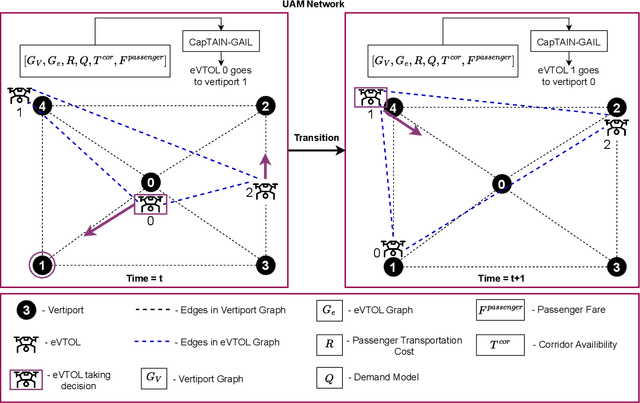

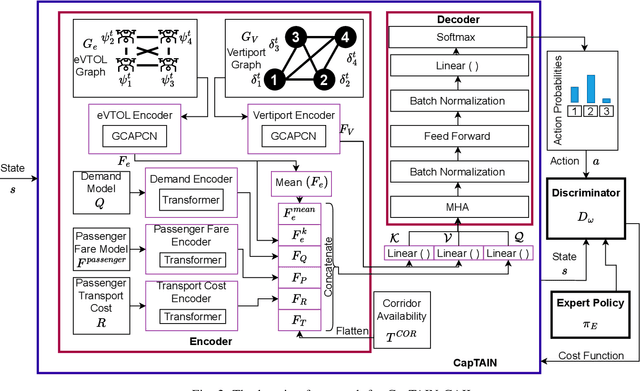

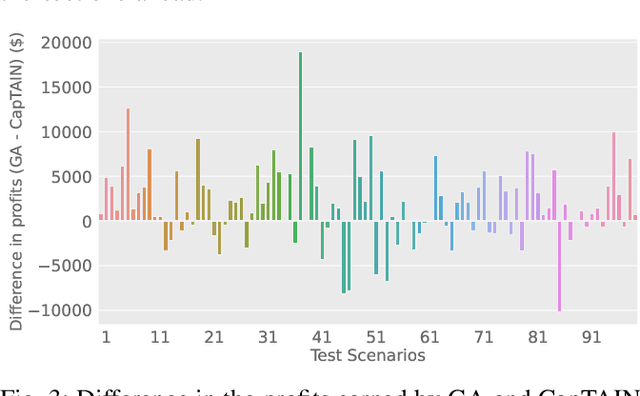

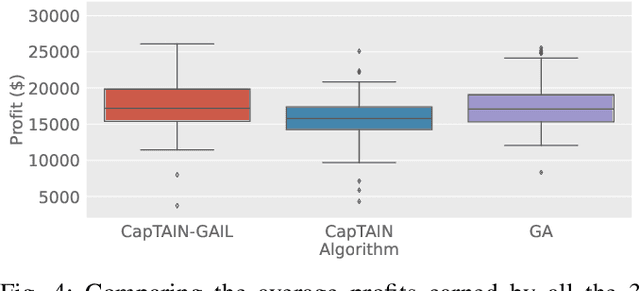

A Graph-based Adversarial Imitation Learning Framework for Reliable & Realtime Fleet Scheduling in Urban Air Mobility

Jul 16, 2024

Abstract:The advent of Urban Air Mobility (UAM) presents the scope for a transformative shift in the domain of urban transportation. However, its widespread adoption and economic viability depend in part on the ability to optimally schedule the fleet of aircraft across vertiports in a UAM network, under uncertainties attributed to airspace congestion, changing weather conditions, and varying demands. This paper presents a comprehensive optimization formulation of the fleet scheduling problem, while also identifying the need for alternate solution approaches, since directly solving the resulting integer nonlinear programming problem is computationally prohibitive for daily fleet scheduling. Previous work has shown the effectiveness of using (graph) reinforcement learning (RL) approaches to train real-time executable policy models for fleet scheduling. However, such policies can often be brittle on out-of-distribution scenarios or edge cases. Moreover, training performance also deteriorates as the complexity (e.g., number of constraints) of the problem increases. To address these issues, this paper presents an imitation learning approach where the RL-based policy exploits expert demonstrations yielded by solving the exact optimization using a Genetic Algorithm. The policy model comprises Graph Neural Network (GNN) based encoders that embed the space of vertiports and aircraft, Transformer networks to encode demand, passenger fare, and transport cost profiles, and a Multi-head attention (MHA) based decoder. Expert demonstrations are used through the Generative Adversarial Imitation Learning (GAIL) algorithm. Interfaced with a UAM simulation environment involving 8 vertiports and 40 aircraft, and measured by the daily-profit reward, the new imitative approach achieves better mean performance and remarkable improvement in the case of unseen worst-case scenarios, compared to pure RL results.

Physics-Informed Machine Learning Towards A Real-Time Spacecraft Thermal Simulator

Jul 08, 2024

Abstract:Modeling thermal states for complex space missions, such as the surface exploration of airless bodies, is computationally expensive, whether used in ground-based analysis for spacecraft design or during onboard reasoning for autonomous operations. For example, a finite-element thermal model with hundreds of elements can take significant time to simulate, which makes it unsuitable for onboard reasoning during time-sensitive scenarios such as descent and landing, proximity operations, or in-space assembly. Further, the lack of fast and accurate thermal modeling drives thermal designs to be more conservative and leads to spacecraft with larger mass and higher power budgets. The emerging paradigm of physics-informed machine learning (PIML) presents a class of hybrid modeling architectures that address this challenge by combining simplified physics models with machine learning (ML) models, yielding models that maintain both interpretability and robustness. Such techniques enable designs with reduced mass and power through onboard thermal-state estimation and control and may lead to improved onboard handling of off-nominal states, including unplanned down-time. The PIML model or hybrid model presented here consists of a neural network which predicts reduced nodalizations (distribution and size of coarse mesh) given on-orbit thermal load conditions, and subsequently a (relatively coarse) finite-difference model operates on this mesh to predict thermal states. We compare the computational performance and accuracy of the hybrid model to a data-driven neural net model, and a high-fidelity finite-difference model of a prototype Earth-orbiting small spacecraft. The PIML based active nodalization approach provides significantly better generalization than the neural net model and coarse mesh model, while reducing computing cost by up to 1.7x compared to the high-fidelity model.

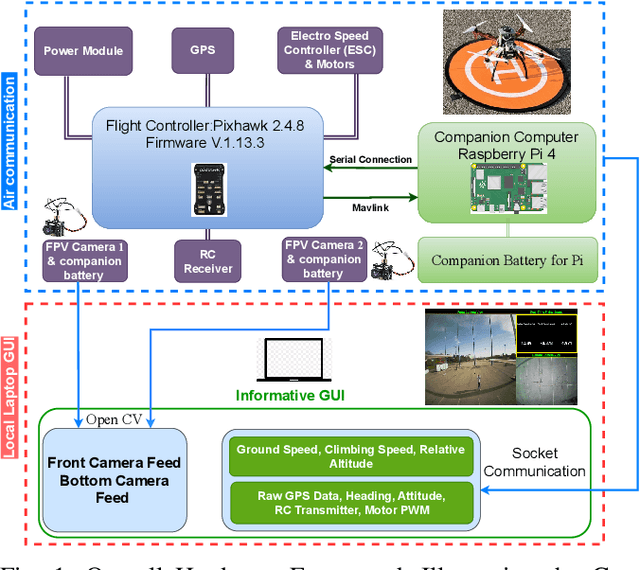

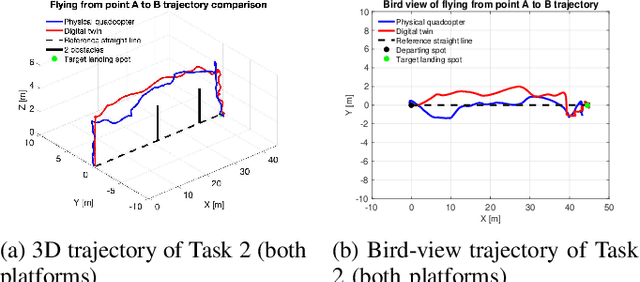

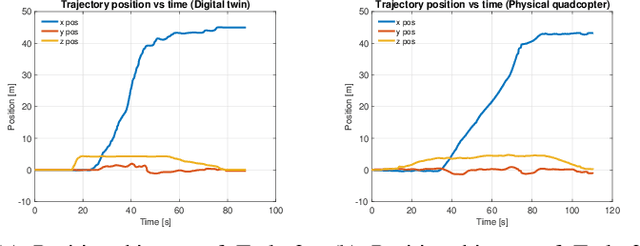

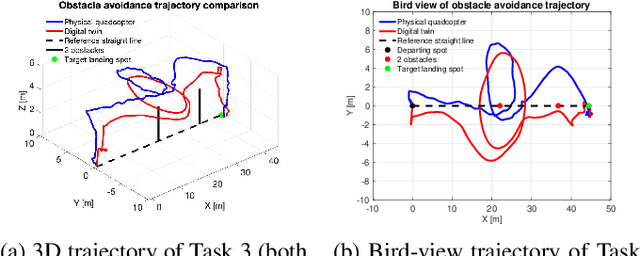

An Open-source Hardware/Software Architecture and Supporting Simulation Environment to Perform Human FPV Flight Demonstrations for Unmanned Aerial Vehicle Autonomy

Jul 08, 2024

Abstract:Small multi-rotor unmanned aerial vehicles (UAVs), mainly quadcopters, are nowadays ubiquitous in research on aerial autonomy, including serving as scaled-down models for much larger aircraft such as vertical take-off and landing vehicles for urban air mobility. Among the various research use cases, first-person-view (FPV) RC flight experiments allow for collecting data on how human pilots fly such aircraft, which could then be used to compare, contrast, validate, or train autonomous flight agents. While this could be uniquely beneficial, especially for studying UAV operation in contextually complex and safety-critical environments such as in human-UAV shared spaces, inexpensive and open-source hardware/software platforms that offer this capability along with low-level access to the underlying control software and data remain scarce. To address this gap and significantly reduce barriers to human-guided autonomy research with UAVs, this paper presents an open-source software architecture implemented with an inexpensive in-house built quadcopter platform based on the F450 Quadcopter Frame. This setup uses two cameras to provide a dual-view FPV and an open-source flight controller, Pixhawk. The underlying software architecture, developed using the Python-based Kivy library, allows logging telemetry, GPS, control inputs, and camera frame data in a synchronized manner on the ground station computer. Since cost, time, and weather constraints typically limit the number of physical outdoor flight experiments, this paper also presents a unique AirSim/Unreal Engine based simulation environment and graphical user interface, i.e., a digital twin, that provides a hardware-in-the-loop setup via the Pixhawk flight controller. We demonstrate the usability and reliability of the overall framework through a set of diverse physical FPV flight experiments and corresponding flight tests in the digital twin.

Towards Physically Talented Aerial Robots with Tactically Smart Swarm Behavior thereof: An Efficient Co-design Approach

Jun 24, 2024

Abstract:The collective performance or capacity of collaborative autonomous systems such as a swarm of robots is jointly influenced by the morphology and the behavior of individual systems in that collective. In that context, this paper explores how morphology impacts the learned tactical behavior of unmanned aerial/ground robots performing reconnaissance and search & rescue. This is achieved by presenting a computationally efficient framework to solve this otherwise challenging problem of jointly optimizing the morphology and tactical behavior of swarm robots. Key novel developments to this end include the use of physical talent metrics and modification of graph reinforcement learning architectures to allow joint learning of the swarm tactical policy and the talent metrics (search speed, flight range, and cruising speed) that constrain mobility and object/victim search capabilities of the aerial robots executing these tactics. Implementation of this co-design approach is supported by advancements to an open-source Pybullet-based swarm simulator that allows the use of variable aerial asset capabilities. The results of the co-design are observed to outperform those of tactics learning with a fixed Pareto design, when compared in terms of mission performance metrics. Significant differences in morphology and learned behavior are also observed by comparing the baseline design and the co-design outcomes.

Bigraph Matching Weighted with Learnt Incentive Function for Multi-Robot Task Allocation

Mar 11, 2024

Abstract:Most real-world Multi-Robot Task Allocation (MRTA) problems require fast and efficient decision-making, which is often achieved using heuristics-aided methods such as genetic algorithms, auction-based methods, and bipartite graph matching methods. These methods often assume a form that lends better explainability compared to an end-to-end (learnt) neural network based policy for MRTA. However, deriving suitable heuristics can be tedious, risky, and in some cases impractical if problems are too complex. This raises the question: can these heuristics be learned? To this end, this paper develops a Graph Reinforcement Learning (GRL) framework to learn the heuristics or incentives for a bipartite graph matching approach to MRTA. Specifically, a Capsule Attention policy model is used to learn how to weight task/robot pairings (edges) in the bipartite graph that connects the set of tasks to the set of robots. The original capsule attention network architecture is fundamentally modified by adding an encoding of the robots' state graph, and two Multihead Attention based decoders whose outputs are used to construct a LogNormal distribution matrix from which positive bigraph weights can be drawn. The performance of this new bigraph matching approach augmented with a GRL-derived incentive is found to be on par with the original bigraph matching approach that used expert-specified heuristics, with the former offering notable robustness benefits. During training, the learned incentive policy is found to initially move closer to the expert-specified incentive and then deviate slightly from its trend.
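The idea of drawing positive bigraph weights from a matrix of LogNormal distributions can be sketched as follows. This is a minimal illustration, not the paper's model: it assumes the two decoders supply one location parameter (`mu`) and one scale parameter (`sigma`) per robot/task edge, which the abstract does not state, and the numeric values and the function name `sample_bigraph_weights` are hypothetical. A LogNormal sample is always strictly positive, which is why it suits edge weights that must be positive.

```python
import random

def sample_bigraph_weights(mu, sigma, rng):
    """Draw one positive weight per robot/task edge from LogNormal(mu, sigma).

    mu and sigma are matrices (lists of rows) of the same shape;
    every sigma entry must be greater than zero.
    """
    return [
        [rng.lognormvariate(m, s) for m, s in zip(mu_row, sigma_row)]
        for mu_row, sigma_row in zip(mu, sigma)
    ]

# Hypothetical decoder outputs for 2 robots and 2 tasks.
mu = [[0.0, 1.0], [-1.0, 0.5]]
sigma = [[0.5, 0.5], [0.5, 0.5]]

rng = random.Random(0)  # seeded only to make the sketch repeatable
weights = sample_bigraph_weights(mu, sigma, rng)
```

The sampled `weights` matrix would then serve as the edge weights of the robot-to-task bipartite graph passed to a maximum weight matching routine.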
