Sophie Fellenz

Superstudent intelligence in thermodynamics

Jun 11, 2025

Abstract:In this short note, we report and analyze a striking event: OpenAI's large language model o3 has outwitted all students in a university exam on thermodynamics. The thermodynamics exam is a difficult hurdle for most students, where they must show that they have mastered the fundamentals of this important topic. Consequently, the failure rates are very high, A-grades are rare - and they are considered proof of the students' exceptional intellectual abilities. This is because pattern learning does not help in the exam. The problems can only be solved by knowledgeably and creatively combining principles of thermodynamics. We have given our latest thermodynamics exam not only to the students but also to OpenAI's most powerful reasoning model, o3, and have assessed the answers of o3 exactly the same way as those of the students. In zero-shot mode, the model o3 solved all problems correctly, better than all students who took the exam; its overall score was in the range of the best scores we have seen in more than 10,000 similar exams since 1985. This is a turning point: machines now excel in complex tasks, usually taken as proof of human intellectual capabilities. We discuss the consequences this has for the work of engineers and the education of future engineers.

Multi-level Supervised Contrastive Learning

Feb 05, 2025

Abstract:Contrastive learning is a well-established paradigm in representation learning. The standard framework of contrastive learning minimizes the distance between "similar" instances and maximizes the distance between dissimilar ones in the projection space, disregarding the various aspects of similarity that can exist between two samples. Current methods rely on a single projection head, which fails to capture the full complexity of different aspects of a sample, leading to suboptimal performance, especially in scenarios with limited training data. In this paper, we present a novel supervised contrastive learning method in a unified framework called multilevel contrastive learning (MLCL) that can be applied to both multi-label and hierarchical classification tasks. The key strength of the proposed method is the ability to capture similarities between samples across different labels and/or hierarchies using multiple projection heads. Extensive experiments on text and image datasets demonstrate that the proposed approach outperforms state-of-the-art contrastive learning methods.
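The idea of one projection head per label level can be illustrated with a minimal sketch. This is not the MLCL implementation: it assumes the widely used supervised contrastive loss as the per-level objective, and the names `supcon_loss`, `multilevel_loss`, the temperature, and the per-level weights are hypothetical. Each level receives the output of its own projection head together with the labels of that level, and the per-level losses are combined as a weighted sum.

```python
import math


def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss on one projection space (illustrative).

    Embeddings are L2-normalised; samples sharing a label are positives.
    """
    def normalise(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    z = [normalise(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Scaled cosine similarity of anchor i to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n) if j != i
        }
        log_denom = math.log(sum(math.exp(s) for s in sims.values()))
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def multilevel_loss(per_level_embeddings, per_level_labels, weights=None):
    """Weighted sum of per-level losses, one projection head per level.

    The weighting scheme is an assumption made for this sketch.
    """
    if weights is None:
        weights = [1.0] * len(per_level_embeddings)
    return sum(
        w * supcon_loss(emb, lab)
        for w, emb, lab in zip(weights, per_level_embeddings, per_level_labels)
    )


# Toy usage: the same four samples seen through two hypothetical heads,
# one trained against coarse labels and one against fine labels.
coarse_head = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
fine_head = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
loss = multilevel_loss(
    [coarse_head, fine_head],
    [[0, 0, 1, 1], [0, 1, 2, 3]],
)
```

In this toy setup the fine level has no two samples with the same label, so only the coarse head contributes to the loss.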

Sparse Data Generation Using Diffusion Models

Feb 04, 2025

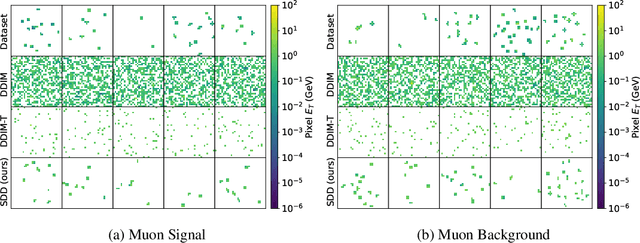

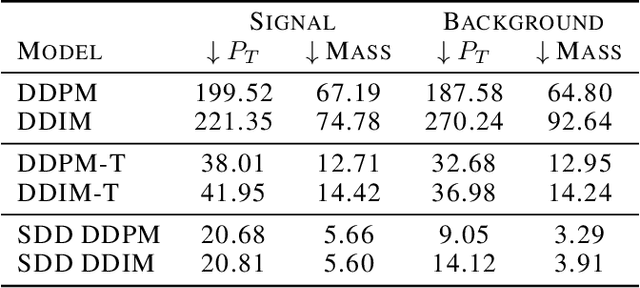

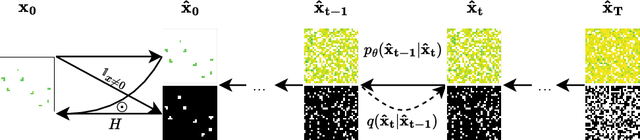

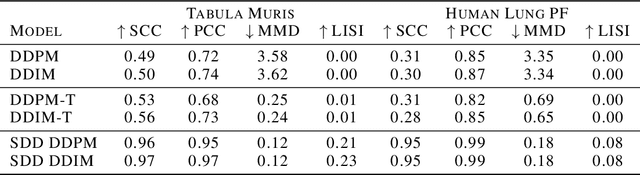

Abstract:Sparse data is ubiquitous, appearing in numerous domains, from economics and recommender systems to astronomy and biomedical sciences. However, efficiently and realistically generating sparse data remains a significant challenge. We introduce Sparse Data Diffusion (SDD), a novel method for generating sparse data. SDD extends continuous state-space diffusion models by explicitly modeling sparsity through the introduction of Sparsity Bits. Empirical validation on image data from various domains (including two scientific applications, physics and biology) demonstrates that SDD achieves high fidelity in representing data sparsity while preserving the quality of the generated data.
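The abstract does not spell out how Sparsity Bits are defined, so the following is only a rough sketch of one way such a bit could work, not the SDD method itself. It assumes each value is paired with an extra continuous channel that is 1.0 for exact zeros and 0.0 otherwise, and that generated (noisy) bits are thresholded to restore exact zeros. The function names, the bit convention, and the threshold are all hypothetical.

```python
def encode_with_sparsity_bits(values):
    """Pair each value with a bit: 1.0 marks an exact zero (assumed convention)."""
    return [(v, 1.0 if v == 0 else 0.0) for v in values]


def decode_with_sparsity_bits(pairs, threshold=0.5):
    """Set entries to exactly zero where the possibly noisy bit exceeds the threshold."""
    return [0.0 if bit > threshold else v for v, bit in pairs]


# A continuous generative model rarely outputs exact zeros; thresholding the
# extra channel recovers them. These "generated" pairs are made up.
generated = [(0.03, 0.9), (1.2, 0.1), (-0.01, 0.8)]
restored = decode_with_sparsity_bits(generated)
```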

Challenging Assumptions in Learning Generic Text Style Embeddings

Jan 27, 2025

Abstract:Recent advancements in language representation learning primarily emphasize language modeling for deriving meaningful representations, often neglecting style-specific considerations. This study addresses this gap by creating generic, sentence-level style embeddings crucial for style-centric tasks. Our approach is grounded on the premise that low-level text style changes can compose any high-level style. We hypothesize that applying this concept to representation learning enables the development of versatile text style embeddings. By fine-tuning a general-purpose text encoder using contrastive learning and standard cross-entropy loss, we aim to capture these low-level style shifts, anticipating that they offer insights applicable to high-level text styles. The outcomes prompt us to reconsider the underlying assumptions as the results do not always show that the learned style representations capture high-level text styles.

BBPOS: BERT-based Part-of-Speech Tagging for Uzbek

Jan 17, 2025

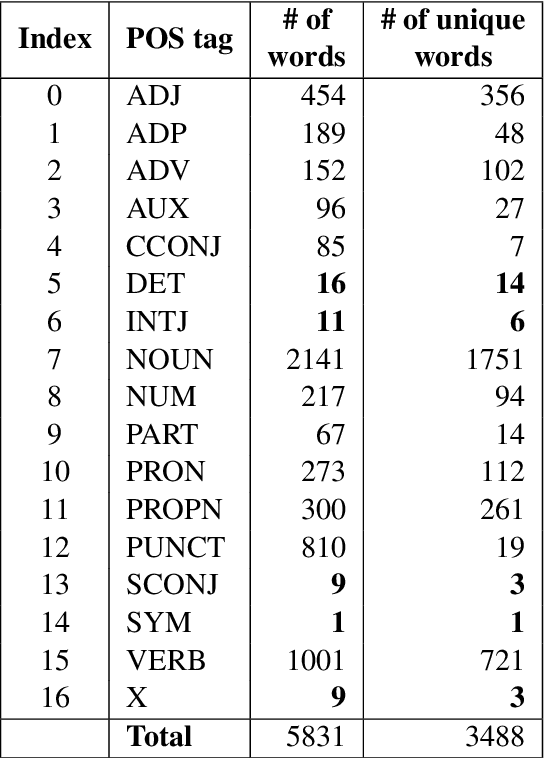

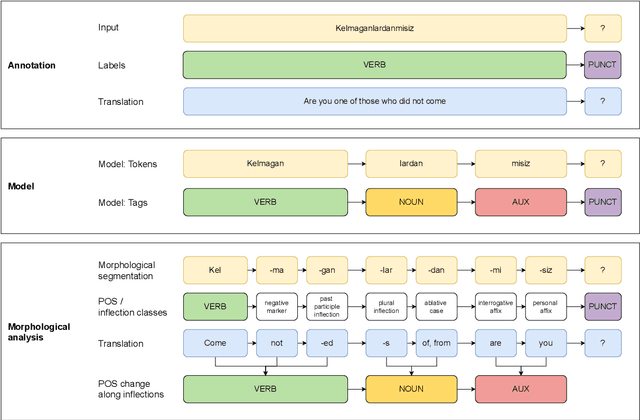

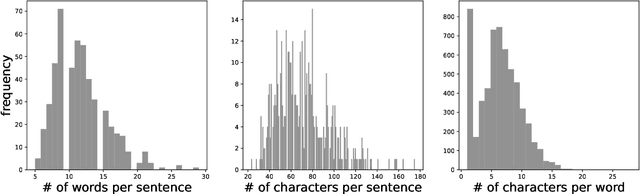

Abstract:This paper advances NLP research for the low-resource Uzbek language by evaluating two previously untested monolingual Uzbek BERT models on the part-of-speech (POS) tagging task and introducing the first publicly available UPOS-tagged benchmark dataset for Uzbek. Our fine-tuned models achieve 91% average accuracy, outperforming the baseline multi-lingual BERT as well as the rule-based tagger. Notably, these models capture intermediate POS changes through affixes and demonstrate context sensitivity, unlike existing rule-based taggers.

Towards Graph Foundation Models: A Study on the Generalization of Positional and Structural Encodings

Dec 10, 2024

Abstract:Recent advances in integrating positional and structural encodings (PSEs) into graph neural networks (GNNs) have significantly enhanced their performance across various graph learning tasks. However, the general applicability of these encodings and their potential to serve as foundational representations for graphs remain uncertain. This paper investigates the fine-tuning efficiency, scalability with sample size, and generalization capability of learnable PSEs across diverse graph datasets. Specifically, we evaluate their potential as universal pre-trained models that can be easily adapted to new tasks with minimal fine-tuning and limited data. Furthermore, we assess the expressivity of the learned representations, particularly, when used to augment downstream GNNs. We demonstrate through extensive benchmarking and empirical analysis that PSEs generally enhance downstream models. However, some datasets may require specific PSE-augmentations to achieve optimal performance. Nevertheless, our findings highlight their significant potential to become integral components of future graph foundation models. We provide new insights into the strengths and limitations of PSEs, contributing to the broader discourse on foundation models in graph learning.

Tethering Broken Themes: Aligning Neural Topic Models with Labels and Authors

Oct 22, 2024

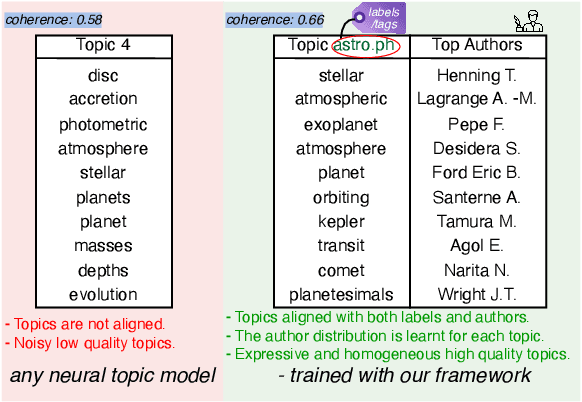

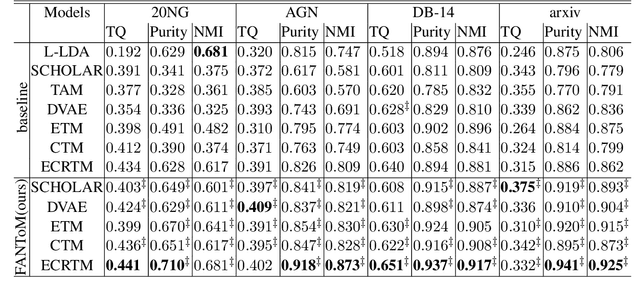

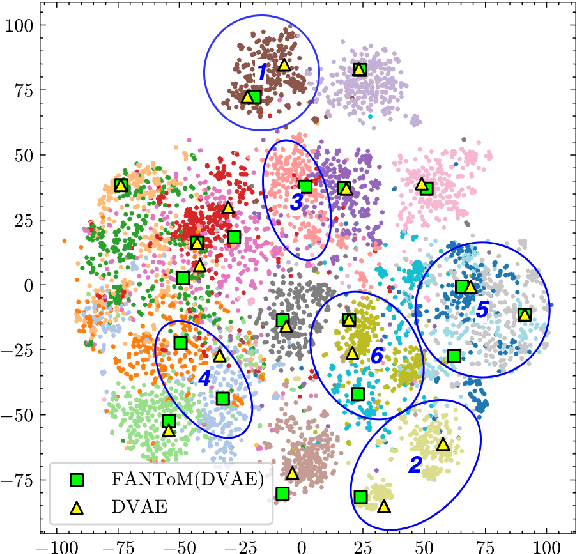

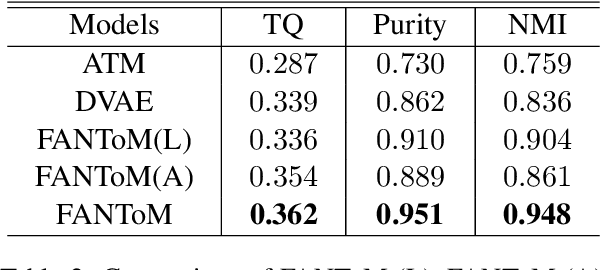

Abstract:Topic models are a popular approach for extracting semantic information from large document collections. However, recent studies suggest that the topics generated by these models often do not align well with human intentions. While metadata such as labels and authorship information is available, it has not yet been effectively incorporated into neural topic models. To address this gap, we introduce FANToM, a novel method for aligning neural topic models with both labels and authorship information. FANToM allows for the inclusion of this metadata when available, producing interpretable topics and author distributions for each topic. Our approach demonstrates greater expressiveness than conventional topic models by learning the alignment between labels, topics, and authors. Experimental results show that FANToM improves upon existing models in terms of both topic quality and alignment. Additionally, it identifies author interests and similarities.

SetPINNs: Set-based Physics-informed Neural Networks

Sep 30, 2024

Abstract:Physics-Informed Neural Networks (PINNs) have emerged as a promising method for approximating solutions to partial differential equations (PDEs) using deep learning. However, PINNs, based on multilayer perceptrons (MLP), often employ point-wise predictions, overlooking the implicit dependencies within the physical system such as temporal or spatial dependencies. These dependencies can be captured using more complex network architectures, for example CNNs or Transformers. However, these architectures conventionally do not allow for incorporating physical constraints, as advancements in integrating such constraints within these frameworks are still lacking. Relying on point-wise predictions often results in trivial solutions. To address this limitation, we propose SetPINNs, a novel approach inspired by Finite Element Methods from the field of Numerical Analysis. SetPINNs allow for incorporating the dependencies inherent in the physical system while at the same time allowing for incorporating the physical constraints. They accurately approximate PDE solutions of a region, thereby modeling the inherent dependencies between multiple neighboring points in that region. Our experiments show that SetPINNs demonstrate superior generalization performance and accuracy across diverse physical systems, showing that they mitigate failure modes and converge faster in comparison to existing approaches. Furthermore, we demonstrate the utility of SetPINNs on two real-world physical systems.

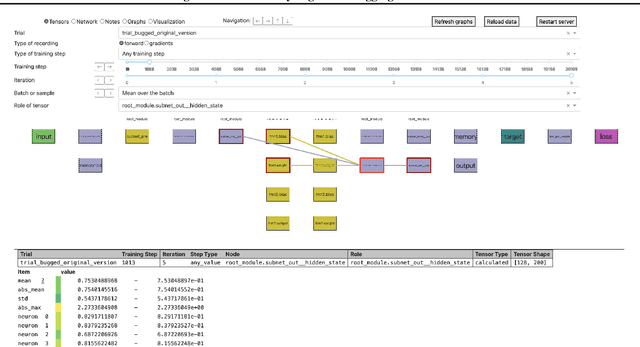

Comgra: A Tool for Analyzing and Debugging Neural Networks

Jul 31, 2024

Abstract:Neural networks are notoriously difficult to inspect. We introduce comgra, an open-source Python library for use with PyTorch. Comgra extracts data about the internal activations of a model and organizes it in a GUI (graphical user interface). It can show both summary statistics and individual data points, compare early and late stages of training, focus on individual samples of interest, and visualize the flow of the gradient through the network. This makes it possible to inspect the model's behavior from many different angles and save time by rapidly testing different hypotheses without having to rerun it. Comgra has applications for debugging, neural architecture design, and mechanistic interpretability. We publish our library through the Python Package Index (PyPI) and provide code, documentation, and tutorials at https://github.com/FlorianDietz/comgra.

HANNA: Hard-constraint Neural Network for Consistent Activity Coefficient Prediction

Jul 25, 2024

Abstract:We present the first hard-constraint neural network for predicting activity coefficients (HANNA), a thermodynamic mixture property that is the basis for many applications in science and engineering. Unlike traditional neural networks, which ignore physical laws and result in inconsistent predictions, our model is designed to strictly adhere to all thermodynamic consistency criteria. By leveraging deep-set neural networks, HANNA maintains symmetry under the permutation of the components. Furthermore, by hard-coding physical constraints in the network architecture, we ensure consistency with the Gibbs-Duhem equation and in modeling the pure components. The model was trained and evaluated on 317,421 data points for activity coefficients in binary mixtures from the Dortmund Data Bank, achieving significantly higher prediction accuracies than the current state-of-the-art model UNIFAC. Moreover, HANNA only requires the SMILES of the components as input, making it applicable to any binary mixture of interest. HANNA is fully open-source and available for free use.
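For readers unfamiliar with the consistency criteria named in the abstract, a small numerical check may help. For a binary mixture at constant temperature and pressure, the Gibbs-Duhem equation requires x1 d(ln γ1)/dx1 + x2 d(ln γ2)/dx1 = 0, and each activity coefficient must equal one (ln γ = 0) for the pure component. The sketch below does not use HANNA; it assumes the textbook two-suffix Margules model as a stand-in for a consistent predictor, and the function names, the parameter value, and the deliberately inconsistent counterexample are made up for illustration.

```python
def margules_ln_gamma(x1, a=1.5):
    """Two-suffix Margules model: ln(gamma1) = A*x2^2, ln(gamma2) = A*x1^2."""
    x2 = 1.0 - x1
    return a * x2 * x2, a * x1 * x1


def inconsistent_ln_gamma(x1):
    """Counterexample with mismatched parameters; violates Gibbs-Duhem."""
    x2 = 1.0 - x1
    return 1.5 * x2 * x2, 0.5 * x1 * x1


def gibbs_duhem_residual(ln_gamma, x1, h=1e-6):
    """x1*dln(gamma1)/dx1 + x2*dln(gamma2)/dx1 via central differences."""
    lo1, lo2 = ln_gamma(x1 - h)
    hi1, hi2 = ln_gamma(x1 + h)
    d1 = (hi1 - lo1) / (2.0 * h)
    d2 = (hi2 - lo2) / (2.0 * h)
    return x1 * d1 + (1.0 - x1) * d2


# The residual should vanish (up to round-off) for a consistent model.
consistent = gibbs_duhem_residual(margules_ln_gamma, 0.3)
violating = gibbs_duhem_residual(inconsistent_ln_gamma, 0.3)
```

A post-hoc check like this only detects violations; the abstract describes a model whose architecture rules them out by construction.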
