Soohyun Lee

GhostUI: Unveiling Hidden Interactions in Mobile UI

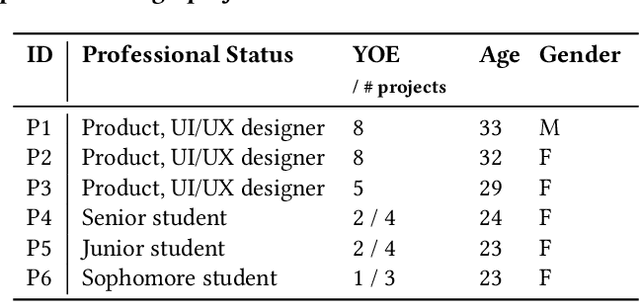

Jan 27, 2026

Abstract:Modern mobile applications rely on hidden interactions--gestures without visual cues like long presses and swipes--to provide functionality without cluttering interfaces. While experienced users may discover these interactions through prior use or onboarding tutorials, their implicit nature makes them difficult for most users to uncover. Similarly, mobile agents--systems designed to automate tasks on mobile user interfaces, powered by vision language models (VLMs)--struggle to detect veiled interactions or determine actions for completing tasks. To address this challenge, we present GhostUI, a new dataset designed to enable the detection of hidden interactions in mobile applications. GhostUI provides before-and-after screenshots, simplified view hierarchies, gesture metadata, and task descriptions, allowing VLMs to better recognize concealed gestures and anticipate post-interaction states. Quantitative evaluations with VLMs show that models fine-tuned on GhostUI outperform baseline VLMs, particularly in predicting hidden interactions and inferring post-interaction screens, underscoring GhostUI's potential as a foundation for advancing mobile task automation.

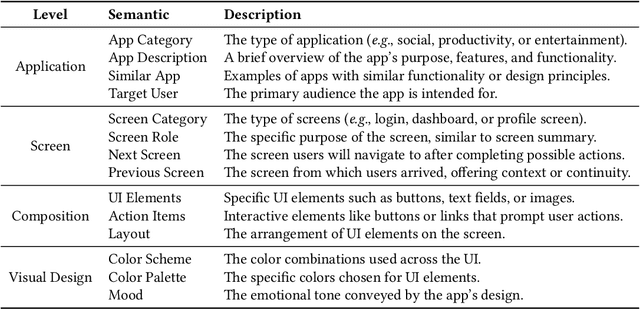

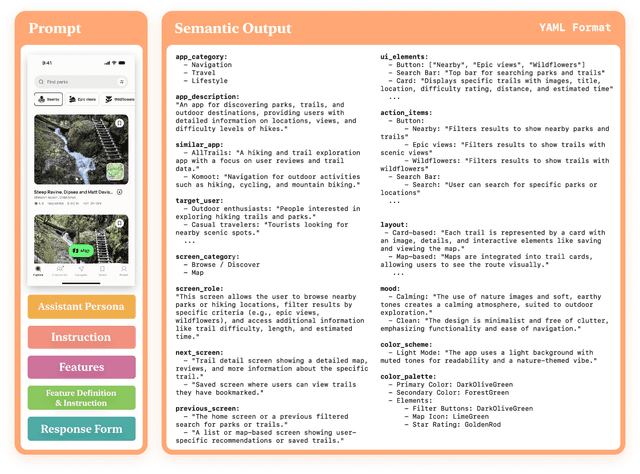

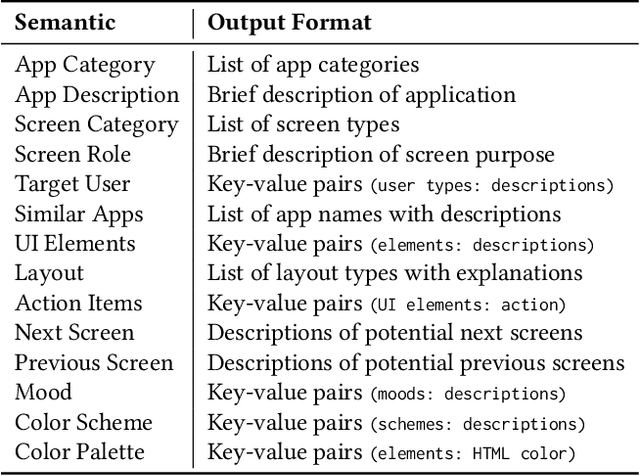

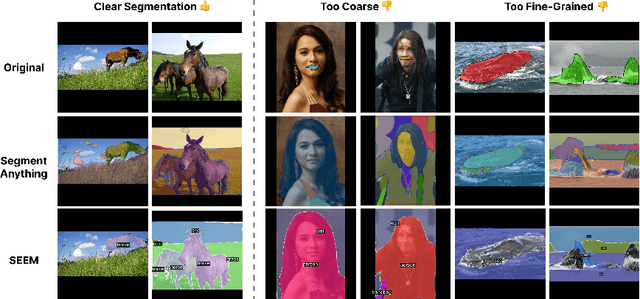

Bridging Gulfs in UI Generation through Semantic Guidance

Jan 27, 2026

Abstract:While generative AI enables high-fidelity UI generation from text prompts, users struggle to articulate design intent and to evaluate or refine results, creating gulfs of execution and evaluation. To understand the information needed for UI generation, we conducted a thematic analysis of UI prompting guidelines, identifying key design semantics and discovering that they are hierarchical and interdependent. Leveraging these findings, we developed a system that enables users to specify semantics, visualize relationships, and extract how semantics are reflected in generated UIs. By making semantics serve as an intermediate representation between human intent and AI output, our system bridges both gulfs by making requirements explicit and outcomes interpretable. A comparative user study suggests that our approach enhances users' perceived control over intent expression and outcome interpretation, and facilitates more predictable, iterative refinement. Our work demonstrates how explicit semantic representation enables systematic and explainable exploration of design possibilities in AI-driven UI design.

InFerActive: Towards Scalable Human Evaluation of Large Language Models through Interactive Inference

Dec 11, 2025

Abstract:Human evaluation remains the gold standard for evaluating outputs of Large Language Models (LLMs). The current evaluation paradigm reviews numerous individual responses, leading to significant scalability challenges. LLM outputs can be more efficiently represented as a tree structure, reflecting their autoregressive generation process and stochastic token selection. However, conventional tree visualization cannot scale to the exponentially large trees generated by modern sampling methods of LLMs. To address this problem, we present InFerActive, an interactive inference system for scalable human evaluation. InFerActive enables on-demand exploration through probability-based filtering and evaluation features, while bridging the semantic gap between computational tokens and human-readable text through adaptive visualization techniques. Through a technical evaluation and user study (N=12), we demonstrate that InFerActive significantly improves evaluation efficiency and enables more comprehensive assessment of model behavior. We further conduct expert case studies that demonstrate InFerActive's practical applicability and potential for transforming LLM evaluation workflows.
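To make the tree view of LLM outputs concrete, here is a minimal sketch of a token tree with probability-based filtering. It is not InFerActive's implementation: the `TokenNode` class, the `expand` function, the `min_prob` threshold, and the toy probabilities are all hypothetical names and values chosen for illustration. Each node stores the conditional probability of its token, and a branch is pruned as soon as its cumulative probability falls below the threshold.

```python
from dataclasses import dataclass, field


@dataclass
class TokenNode:
    """One sampled token; `prob` is its probability given the parent prefix."""
    token: str
    prob: float
    children: list = field(default_factory=list)


def expand(node, prefix_prob=1.0, prefix="", min_prob=0.05):
    """Yield (text, cumulative probability) for every complete sequence
    whose cumulative probability stays at or above `min_prob`."""
    cumulative = prefix_prob * node.prob
    if cumulative < min_prob:
        return  # prune this whole subtree
    text = prefix + node.token
    if not node.children:
        yield text, cumulative
        return
    for child in node.children:
        yield from expand(child, cumulative, text, min_prob)


# Toy tree (hypothetical probabilities): two first tokens, three continuations.
root = TokenNode("", 1.0, [
    TokenNode("The", 0.6, [TokenNode(" cat", 0.5), TokenNode(" dog", 0.05)]),
    TokenNode("A", 0.4, [TokenNode(" bird", 1.0)]),
])

kept = list(expand(root, min_prob=0.1))
for text, p in kept:
    print(f"{p:.2f}  {text}")
```

With the threshold at 0.1, the low-probability "The dog" branch is dropped while the other two sequences remain, which is the kind of on-demand narrowing the abstract describes at a much larger scale.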

SUCCESS-GS: Survey of Compactness and Compression for Efficient Static and Dynamic Gaussian Splatting

Dec 08, 2025

Abstract:3D Gaussian Splatting (3DGS) has emerged as a powerful explicit representation enabling real-time, high-fidelity 3D reconstruction and novel view synthesis. However, its practical use is hindered by the massive memory and computational demands required to store and render millions of Gaussians. These challenges become even more severe in 4D dynamic scenes. To address these issues, the field of Efficient Gaussian Splatting has rapidly evolved, proposing methods that reduce redundancy while preserving reconstruction quality. This survey provides the first unified overview of efficient 3D and 4D Gaussian Splatting techniques. For both 3D and 4D settings, we systematically categorize existing methods into two major directions, Parameter Compression and Restructuring Compression, and comprehensively summarize the core ideas and methodological trends within each category. We further cover widely used datasets, evaluation metrics, and representative benchmark comparisons. Finally, we discuss current limitations and outline promising research directions toward scalable, compact, and real-time Gaussian Splatting for both static and dynamic 3D scene representation.

Dataset-Adaptive Dimensionality Reduction

Jul 16, 2025

Abstract:Selecting the appropriate dimensionality reduction (DR) technique and determining its optimal hyperparameter settings that maximize the accuracy of the output projections typically involves extensive trial and error, often resulting in unnecessary computational overhead. To address this challenge, we propose a dataset-adaptive approach to DR optimization guided by structural complexity metrics. These metrics quantify the intrinsic complexity of a dataset, predicting whether higher-dimensional spaces are necessary to represent it accurately. Since complex datasets are often inaccurately represented in two-dimensional projections, leveraging these metrics enables us to predict the maximum achievable accuracy of DR techniques for a given dataset, eliminating redundant trials in optimizing DR. We introduce the design and theoretical foundations of these structural complexity metrics. We quantitatively verify that our metrics effectively approximate the ground truth complexity of datasets and confirm their suitability for guiding dataset-adaptive DR workflow. Finally, we empirically show that our dataset-adaptive workflow significantly enhances the efficiency of DR optimization without compromising accuracy.

Leveraging Multimodal LLM for Inspirational User Interface Search

Jan 30, 2025

Abstract:Inspirational search, the process of exploring designs to inform and inspire new creative work, is pivotal in mobile user interface (UI) design. However, exploring the vast space of UI references remains a challenge. Existing AI-based UI search methods often miss crucial semantics like target users or the mood of apps. Additionally, these models typically require metadata like view hierarchies, limiting their practical use. We used a multimodal large language model (MLLM) to extract and interpret semantics from mobile UI images. We identified key UI semantics through a formative study and developed a semantic-based UI search system. Through computational and human evaluations, we demonstrate that our approach significantly outperforms existing UI retrieval methods, offering UI designers a more enriched and contextually relevant search experience. We enhance the understanding of mobile UI design semantics and highlight MLLMs' potential in inspirational search, providing a rich dataset of UI semantics for future studies.

Assessing Graphical Perception of Image Embedding Models using Channel Effectiveness

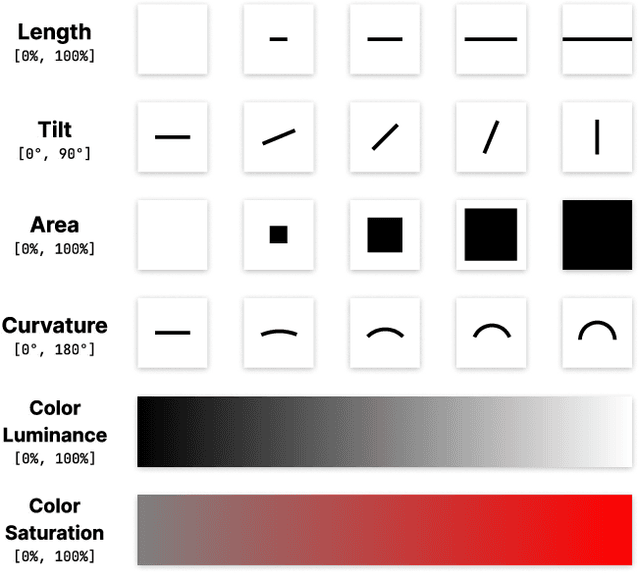

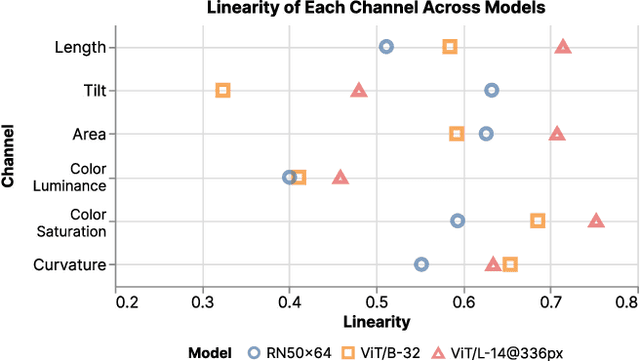

Jul 30, 2024

Abstract:Recent advancements in vision models have greatly improved their ability to handle complex chart understanding tasks, like chart captioning and question answering. However, it remains challenging to assess how these models process charts. Existing benchmarks only roughly evaluate model performance without evaluating the underlying mechanisms, such as how models extract image embeddings. This limits our understanding of the model's ability to perceive fundamental graphical components. To address this, we introduce a novel evaluation framework to assess the graphical perception of image embedding models. For chart comprehension, we examine two main aspects of channel effectiveness: accuracy and discriminability of various visual channels. Channel accuracy is assessed through the linearity of embeddings, measuring how well the perceived magnitude aligns with the size of the stimulus. Discriminability is evaluated based on the distances between embeddings, indicating their distinctness. Our experiments with the CLIP model show that it perceives channel accuracy differently from humans and shows unique discriminability in channels like length, tilt, and curvature. We aim to develop this work into a broader benchmark for reliable visual encoders, enhancing models for precise chart comprehension and human-like perception in future applications.
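The two channel-effectiveness aspects can be illustrated with a small sketch. It rests on assumptions that are not taken from the paper: "perceived magnitude" is approximated here as the Euclidean distance of each embedding from the embedding of the smallest stimulus, linearity is measured with a Pearson correlation, and discriminability is the mean pairwise distance. The function names and toy embeddings are hypothetical.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def channel_accuracy(magnitudes, embeddings):
    """Assumed proxy: correlate stimulus magnitude with the embedding's
    distance from the first (smallest-stimulus) embedding."""
    reference = embeddings[0]
    perceived = [math.dist(reference, e) for e in embeddings]
    return pearson(magnitudes, perceived)


def discriminability(embeddings):
    """Assumed proxy: mean pairwise Euclidean distance between embeddings."""
    pairs = list(combinations(embeddings, 2))
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


# Toy data: four stimuli of increasing length with made-up 2-D embeddings.
magnitudes = [1, 2, 3, 4]
embeddings = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]

accuracy = channel_accuracy(magnitudes, embeddings)
spread = discriminability(embeddings)
print(accuracy, spread)
```

In this toy case the embeddings move in a straight line as the stimulus grows, so the accuracy proxy is close to 1; embeddings from a real encoder would be high-dimensional and would generally score lower.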

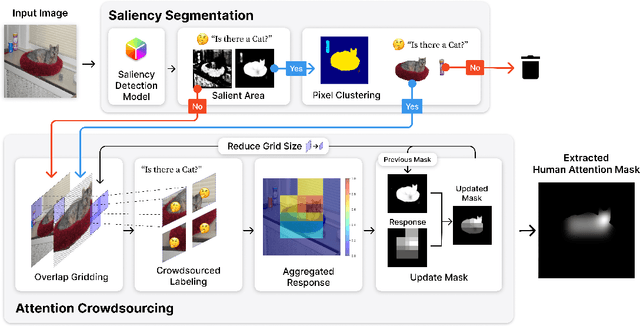

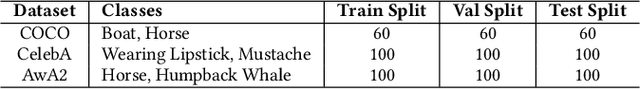

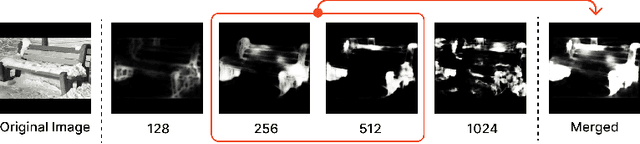

Extracting Human Attention through Crowdsourced Patch Labeling

Mar 22, 2024

Abstract:In image classification, a significant problem arises from bias in the datasets. When a dataset contains only specific types of images, the classifier begins to rely on shortcuts - simplistic and erroneous rules for decision-making. This leads to high performance on the training dataset but inferior results on new, varied images, as the classifier's generalization capability is reduced. For example, if the images labeled as mustache consist solely of male figures, the model may inadvertently learn to classify images by gender rather than the presence of a mustache. One approach to mitigate such biases is to direct the model's attention toward the target object's location, usually marked using bounding boxes or polygons for annotation. However, collecting such annotations requires substantial time and human effort. Therefore, we propose a novel patch-labeling method that integrates AI assistance with crowdsourcing to capture human attention from images, which can be a viable solution for mitigating bias. Our method consists of two steps. First, we extract the approximate location of a target using a pre-trained saliency detection model supplemented by human verification for accuracy. Then, we determine the human-attentive area in the image by iteratively dividing the image into smaller patches and employing crowdsourcing to ascertain whether each patch can be classified as the target object. We demonstrated the effectiveness of our method in mitigating bias through improved classification accuracy and the refined focus of the model. Also, crowdsourced experiments validate that our method collects human annotation up to 3.4 times faster than annotating object locations with polygons, significantly reducing the need for human resources. We conclude the paper by discussing the advantages of our method in a crowdsourcing context, mainly focusing on aspects of human errors and accessibility.
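The second step, iteratively dividing the image and asking whether each patch shows the target, can be sketched as a quadtree-style subdivision. This is an illustrative assumption rather than the paper's procedure: the four-way split, the `min_size` stopping rule, and the `contains_target` callback (a stand-in for a crowd worker's yes/no answer) are all hypothetical.

```python
def split(patch):
    """Split an (x, y, width, height) patch into four quadrants."""
    x, y, w, h = patch
    hw, hh = w // 2, h // 2
    return [
        (x, y, hw, hh),
        (x + hw, y, w - hw, hh),
        (x, y + hh, hw, h - hh),
        (x + hw, y + hh, w - hw, h - hh),
    ]


def label_patches(region, contains_target, min_size=16):
    """Keep subdividing patches judged to contain the target until they
    reach `min_size`; return the positive leaf patches."""
    kept = []
    pending = [region]
    while pending:
        patch = pending.pop()
        if not contains_target(patch):
            continue  # a "no" answer discards the whole patch
        _, _, w, h = patch
        if w <= min_size or h <= min_size:
            kept.append(patch)
        else:
            pending.extend(split(patch))
    return kept


# Stand-in for crowd answers: "yes" if the patch overlaps a known target box.
TARGET = (20, 20, 10, 10)  # hypothetical ground-truth box (x, y, w, h)


def simulated_worker(patch):
    px, py, pw, ph = patch
    tx, ty, tw, th = TARGET
    return px < tx + tw and tx < px + pw and py < ty + th and ty < py + ph


attended = label_patches((0, 0, 64, 64), simulated_worker, min_size=16)
print(attended)
```

Because negative patches are never subdivided, the number of questions grows with the size of the attended area rather than with the size of the image, which is one plausible reason such a scheme needs less annotation effort than drawing polygons.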

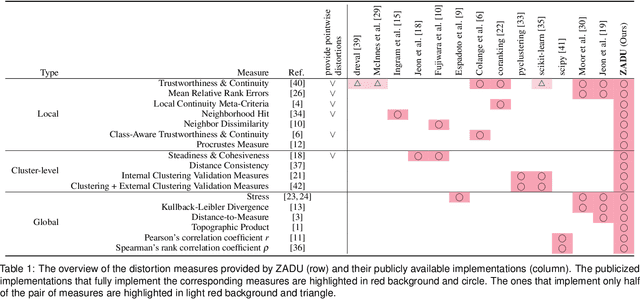

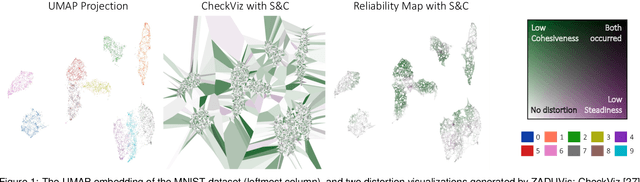

ZADU: A Python Library for Evaluating the Reliability of Dimensionality Reduction Embeddings

Aug 11, 2023

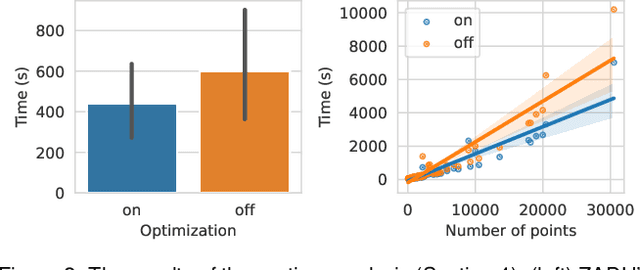

Abstract:Dimensionality reduction (DR) techniques inherently distort the original structure of input high-dimensional data, producing imperfect low-dimensional embeddings. Diverse distortion measures have thus been proposed to evaluate the reliability of DR embeddings. However, implementing and executing distortion measures in practice has so far been time-consuming and tedious. To address this issue, we present ZADU, a Python library that provides distortion measures. ZADU is not only easy to install and execute but also enables comprehensive evaluation of DR embeddings through three key features. First, the library covers a wide range of distortion measures. Second, it automatically optimizes the execution of distortion measures, substantially reducing the running time required to execute multiple measures. Last, the library informs how individual points contribute to the overall distortions, facilitating the detailed analysis of DR embeddings. By simulating a real-world scenario of optimizing DR embeddings, we verify that our optimization scheme substantially reduces the time required to execute distortion measures. Finally, as an application of ZADU, we present another library called ZADUVis that allows users to easily create distortion visualizations that depict the extent to which each region of an embedding suffers from distortions.
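As a rough illustration of what a distortion measure with per-point contributions looks like, the sketch below hand-rolls a simple k-nearest-neighbor overlap score. It does not use or reproduce ZADU's API, and the measure itself is chosen only for brevity; `knn`, `knn_overlap`, and the toy data are hypothetical.

```python
import math


def knn(points, i, k):
    """Indices of the k nearest neighbors of point i (excluding i itself)."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return set(others[:k])


def knn_overlap(high_dim, low_dim, k):
    """Return (global score, per-point scores), where each per-point score is
    the fraction of a point's k high-dimensional neighbors that are also
    among its k neighbors in the embedding."""
    local = [
        len(knn(high_dim, i, k) & knn(low_dim, i, k)) / k
        for i in range(len(high_dim))
    ]
    return sum(local) / len(local), local


# Toy data: five 3-D points and two candidate 1-D embeddings.
hd = [(0, 0, 0), (1, 0, 0), (3, 0, 0), (10, 0, 0), (11, 0, 0)]
ld_faithful = [(0,), (1,), (3,), (10,), (11,)]
ld_scrambled = [(0,), (10,), (3,), (1,), (11,)]

good_score, good_local = knn_overlap(hd, ld_faithful, k=1)
bad_score, bad_local = knn_overlap(hd, ld_scrambled, k=1)
print(good_score, bad_score)
```

The per-point list is what makes local analysis possible: points with low scores mark the regions of the embedding where neighborhoods were distorted.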

Uniform Manifold Approximation with Two-phase Optimization

May 01, 2022

Abstract:We introduce Uniform Manifold Approximation with Two-phase Optimization (UMATO), a dimensionality reduction (DR) technique that improves UMAP to capture the global structure of high-dimensional data more accurately. In UMATO, optimization is divided into two phases so that the resulting embeddings can depict the global structure reliably while preserving the local structure with sufficient accuracy. As the first phase, hub points are identified and projected to construct a skeletal layout for the global structure. In the second phase, the remaining points are added to the embedding preserving the regional characteristics of local areas. Through quantitative experiments, we found that UMATO (1) outperformed widely used DR techniques in preserving the global structure while (2) producing competitive accuracy in representing the local structure. We also verified that UMATO is preferable in terms of robustness over diverse initialization methods, number of epochs, and subsampling techniques.
