Somaya Al-Maadeed

CASR-Net: An Image Processing-focused Deep Learning-based Coronary Artery Segmentation and Refinement Network for X-ray Coronary Angiogram

Oct 31, 2025

Abstract:Early detection of coronary artery disease (CAD) is critical for reducing mortality and improving patient treatment planning. While angiographic image analysis from X-rays is a common and cost-effective method for identifying cardiac abnormalities, including stenotic coronary arteries, poor image quality can significantly impede clinical diagnosis. We present the Coronary Artery Segmentation and Refinement Network (CASR-Net), a three-stage pipeline comprising image preprocessing, segmentation, and refinement. A novel multichannel preprocessing strategy combining CLAHE and an improved Ben Graham method provides incremental gains, increasing Dice Score Coefficient (DSC) by 0.31-0.89% and Intersection over Union (IoU) by 0.40-1.16% compared with using the techniques individually. The core innovation is a segmentation network built on a UNet with a DenseNet121 encoder and a Self-organized Operational Neural Network (Self-ONN) based decoder, which preserves the continuity of narrow and stenotic vessel branches. A final contour refinement module further suppresses false positives. Evaluated with 5-fold cross-validation on a combination of two public datasets that contain both healthy and stenotic arteries, CASR-Net outperformed several state-of-the-art models, achieving an IoU of 61.43%, a DSC of 76.10%, and clDice of 79.36%. These results highlight a robust approach to automated coronary artery segmentation, offering a valuable tool to support clinicians in diagnosis and treatment planning.
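
The abstract reports overlap metrics (DSC and IoU) without defining them. As a minimal sketch, assuming binary masks flattened to sequences of 0/1, the two metrics can be computed as below. The function name `dice_and_iou` and the convention of returning 1.0 for two empty masks are illustrative choices, not taken from the paper, and clDice is not covered here.

```python
def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two equal-length binary masks given as 0/1 sequences."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    tp = sum(1 for p, t in zip(pred, target) if p and t)        # predicted vessel, true vessel
    fp = sum(1 for p, t in zip(pred, target) if p and not t)    # predicted vessel, true background
    fn = sum(1 for p, t in zip(pred, target) if not p and t)    # missed vessel pixels
    if tp + fp + fn == 0:
        # Assumption: two empty masks count as a perfect match.
        return 1.0, 1.0
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return dice, iou


dice, iou = dice_and_iou([1, 1, 0, 0], [1, 0, 1, 0])
print(dice, iou)
```

For a single pair of masks the two metrics are tied by DSC = 2·IoU / (1 + IoU); averages over many images, such as the figures quoted above, need not satisfy this identity exactly.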

A Hybrid CNN-VSSM model for Multi-View, Multi-Task Mammography Analysis: Robust Diagnosis with Attention-Based Fusion

Jul 22, 2025

Abstract:Early and accurate interpretation of screening mammograms is essential for effective breast cancer detection, yet it remains a complex challenge due to subtle imaging findings and diagnostic ambiguity. Many existing AI approaches fall short by focusing on single-view inputs or single-task outputs, limiting their clinical utility. To address these limitations, we propose a novel multi-view, multi-task hybrid deep learning framework that processes all four standard mammography views and jointly predicts diagnostic labels and BI-RADS scores for each breast. Our architecture integrates a hybrid CNN-VSSM backbone, combining convolutional encoders for rich local feature extraction with Visual State Space Models (VSSMs) to capture global contextual dependencies. To improve robustness and interpretability, we incorporate a gated attention-based fusion module that dynamically weights information across views, effectively handling cases with missing data. We conduct extensive experiments across diagnostic tasks of varying complexity, benchmarking our proposed hybrid models against baseline CNN architectures and VSSM models in both single-task and multi-task learning settings. Across all tasks, the hybrid models consistently outperform the baselines. In the binary BI-RADS 1 vs. 5 classification task, the shared hybrid model achieves an AUC of 0.9967 and an F1 score of 0.9830. For the more challenging ternary classification, it attains an F1 score of 0.7790, while in the five-class BI-RADS task, the best F1 score reaches 0.4904. These results highlight the effectiveness of the proposed hybrid framework and underscore both the potential and limitations of multi-task learning for improving diagnostic performance and enabling clinically meaningful mammography analysis.
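
The idea of attention-based fusion that tolerates missing views can be illustrated with a small sketch. This is not the paper's module: it assumes each view already has a feature vector and a scalar attention score (in a real model the score would come from a learned gating layer), and it simply masks absent views before a softmax. The names `fuse_views`, `features`, and `scores` are hypothetical.

```python
import math


def fuse_views(features, scores):
    """Fuse per-view feature vectors with softmax attention over the views that are present.

    features: list of feature vectors (lists of floats), with None for a missing view.
    scores:   one attention score per view; scores of missing views are ignored.
    Returns (fused_vector, weights), where missing views get weight 0.0.
    """
    present = [i for i, f in enumerate(features) if f is not None]
    if not present:
        raise ValueError("at least one view is required")
    # Numerically stable softmax restricted to the present views.
    top = max(scores[i] for i in present)
    exps = {i: math.exp(scores[i] - top) for i in present}
    total = sum(exps.values())
    weights = [exps[i] / total if i in exps else 0.0 for i in range(len(features))]
    dim = len(features[present[0]])
    fused = [sum(weights[i] * features[i][d] for i in present) for d in range(dim)]
    return fused, weights


# Four mammography views, two of them missing.
fused, weights = fuse_views([[1.0, 0.0], None, [0.0, 1.0], None], [0.0, 5.0, 0.0, 0.0])
print(fused, weights)
```

Because the softmax is taken only over available views, the weights still sum to one when a view is absent, which is one simple way to keep the fused representation on a consistent scale.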

PDC-ViT : Source Camera Identification using Pixel Difference Convolution and Vision Transformer

Jan 27, 2025

Abstract:Source camera identification has emerged as a vital tool for investigating critical cases such as terrorism, violence, and other criminal activities. The ability to trace the origin of an image or video can aid law enforcement agencies in gathering evidence and constructing the timeline of events. Moreover, identifying the owner of a certain device narrows down the area of search in a criminal investigation where smartphone devices are involved. This paper proposes a new pixel-based method for source camera identification, named PDC-ViT, that integrates Pixel Difference Convolution (PDC) with a Vision Transformer (ViT) network. The PDC acts as the backbone for feature extraction by exploiting Angular PDC (APDC) and Radial PDC (RPDC). These techniques enhance the capability to capture subtle variations in pixel information, which are crucial for distinguishing between different source cameras. The second part of the methodology focuses on classification, which is based on a Vision Transformer network. Unlike traditional methods that utilize image patches directly for training the classification network, the proposed approach uniquely inputs PDC features into the Vision Transformer network. To demonstrate the effectiveness of the PDC-ViT approach, it has been assessed on five different datasets, which include various image contents and video scenes. The method has also been compared with state-of-the-art source camera identification methods. Experimental results demonstrate the effectiveness and superiority of the proposed system in terms of accuracy and robustness when compared to its competitors. For example, our proposed PDC-ViT has achieved an accuracy of 94.30%, 84%, 94.22% and 92.29% using the Vision dataset, Daxing dataset, Socrates dataset and QUFVD dataset, respectively.
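
To make the notion of a pixel difference convolution concrete, here is a minimal sketch of one common angular formulation on a 3x3 neighbourhood: the kernel weights are applied to differences between neighbouring pixels taken clockwise around the centre, rather than to the raw intensities. The exact pairing used in PDC-ViT may differ, and the function name and the `RING` ordering are assumptions made for illustration.

```python
# Clockwise ring of the 8 neighbours around a 3x3 patch centre, as (row, col) offsets.
RING = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def angular_pixel_difference(image, weights):
    """Apply an angular pixel-difference filter to a 2D list of numbers.

    Each output value is sum_i weights[i] * (ring[i] - ring[i + 1]), where ring holds
    the 8 neighbours of the pixel in clockwise order. Only the valid interior region
    is returned, so the output has shape (H - 2) x (W - 2).
    """
    if len(weights) != 8:
        raise ValueError("expected 8 weights, one per neighbour pair")
    height, width = len(image), len(image[0])
    out = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            ring = [image[r + dr][c + dc] for dr, dc in RING]
            diffs = [ring[i] - ring[(i + 1) % 8] for i in range(8)]
            row.append(sum(w * d for w, d in zip(weights, diffs)))
        out.append(row)
    return out


patch = [[1, 2, 3],
         [8, 0, 4],
         [7, 6, 5]]
print(angular_pixel_difference(patch, [1, 0, 0, 0, 0, 0, 0, 0]))
```

Because the filter sees only differences, a perfectly flat region produces zero response whatever the weights are, which is why such operators emphasize subtle pixel-level variation over image content.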

Multimodal Ensemble with Conditional Feature Fusion for Dysgraphia Diagnosis in Children from Handwriting Samples

Aug 25, 2024

Abstract:Developmental dysgraphia is a neurological disorder that hinders children's writing skills. In recent years, researchers have increasingly explored machine learning methods to support the diagnosis of dysgraphia based on offline and online handwriting. In most previous studies, the two types of handwriting have been analysed separately, which does not necessarily lead to promising results and leaves the relationship between online and offline data unexplored. To address this limitation, we propose a novel multimodal machine learning approach utilizing both online and offline handwriting data. We created a new dataset by transforming an existing online handwritten dataset, generating corresponding offline handwriting images. We considered only different types of word data (simple word, pseudoword & difficult word) in our multimodal analysis. We trained SVM and XGBoost classifiers separately on online and offline features and also implemented multimodal feature fusion and a soft-voted ensemble. Furthermore, we proposed a novel ensemble method with conditional feature fusion, which intelligently combines predictions from online and offline classifiers, selectively incorporating feature fusion when confidence scores fall below a threshold. Our novel approach achieves an accuracy of 88.8%, outperforming SVMs for single modalities by 12-14%, existing methods by 8-9%, and traditional multimodal approaches (soft-vote ensemble and feature fusion) by 3% and 5%, respectively. Our methodology contributes to the development of accurate and efficient dysgraphia diagnosis tools, requiring only a single instance of multimodal word/pseudoword data to determine the handwriting impairment. This work highlights the potential of multimodal learning in enhancing dysgraphia diagnosis, paving the way for accessible and practical diagnostic tools.
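
The conditional rule described above can be sketched in a few lines. This is one plausible reading, not the authors' implementation: it assumes each classifier outputs a probability of dysgraphia, soft-votes the online and offline probabilities, and defers to a feature-fusion classifier only when the vote is not confident enough. The function name, the 0.7 threshold, and the choice to replace (rather than blend with) the vote are all assumptions.

```python
def conditional_fusion_predict(p_online, p_offline, p_fused, threshold=0.7):
    """Return (label, source) for one handwriting sample.

    p_online, p_offline: positive-class probabilities from the single-modality classifiers.
    p_fused:             positive-class probability from a classifier on fused features.
    threshold:           hypothetical confidence cut-off below which fusion is used.
    """
    p_vote = (p_online + p_offline) / 2          # soft vote over the two modalities
    confidence = max(p_vote, 1.0 - p_vote)       # confidence in the voted class
    if confidence < threshold:
        p_final, source = p_fused, "feature_fusion"
    else:
        p_final, source = p_vote, "soft_vote"
    return int(p_final >= 0.5), source


print(conditional_fusion_predict(0.9, 0.8, 0.2))    # confident vote is kept
print(conditional_fusion_predict(0.6, 0.45, 0.2))   # uncertain vote defers to fusion
```

The appeal of such a design is that the fused model is consulted only for ambiguous samples, so confident single-modality agreement is never overridden.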

Advancing Histopathology-Based Breast Cancer Diagnosis: Insights into Multi-Modality and Explainability

Jun 07, 2024

Abstract:It is imperative that breast cancer is detected accurately and in a timely manner to improve patient outcomes. Diagnostic methodologies have traditionally relied on unimodal approaches; however, medical data analytics is integrating diverse data sources beyond conventional imaging. Using multi-modal techniques, integrating both image and non-image data, marks a transformative advancement in breast cancer diagnosis. The purpose of this review is to explore the burgeoning field of multimodal techniques, particularly the fusion of histopathology images with non-image data. Further, Explainable AI (XAI) will be used to elucidate the decision-making processes of complex algorithms, emphasizing the necessity of explainability in diagnostic processes. This review utilizes multi-modal data and emphasizes explainability to enhance diagnostic accuracy, clinician confidence, and patient engagement, ultimately fostering more personalized treatment strategies for breast cancer, while also identifying research gaps in multi-modality and explainability, guiding future studies, and contributing to the strategic direction of the field.

Classification of Dysarthria based on the Levels of Severity. A Systematic Review

Oct 11, 2023

Abstract:Dysarthria is a neurological speech disorder that can significantly impact affected individuals' communication abilities and overall quality of life. The accurate and objective classification of dysarthria and the determination of its severity are crucial for effective therapeutic intervention. While traditional assessments by speech-language pathologists (SLPs) are common, they are often subjective, time-consuming, and can vary between practitioners. Emerging machine learning-based models have shown the potential to provide a more objective dysarthria assessment, enhancing diagnostic accuracy and reliability. This systematic review aims to comprehensively analyze current methodologies for classifying dysarthria based on severity levels. Specifically, this review will focus on determining the most effective set and type of features that can be used for automatic patient classification and evaluating the best AI techniques for this purpose. We will systematically review the literature on the automatic classification of dysarthria severity levels. Sources of information will include electronic databases and grey literature. Selection criteria will be established based on relevance to the research questions. Data extraction will include methodologies used, the type of features extracted for classification, and AI techniques employed. The findings of this systematic review will contribute to the current understanding of dysarthria classification, inform future research, and support the development of improved diagnostic tools. The implications of these findings could be significant in advancing patient care and improving therapeutic outcomes for individuals affected by dysarthria.

3D objects and scenes classification, recognition, segmentation, and reconstruction using 3D point cloud data: A review

Jun 09, 2023

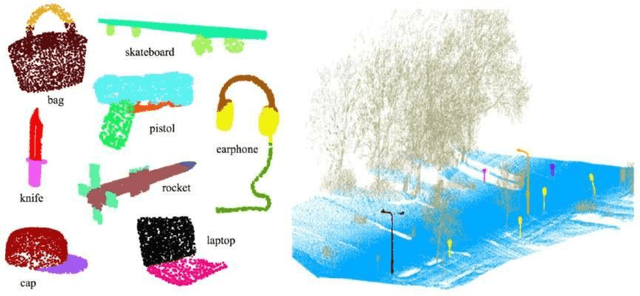

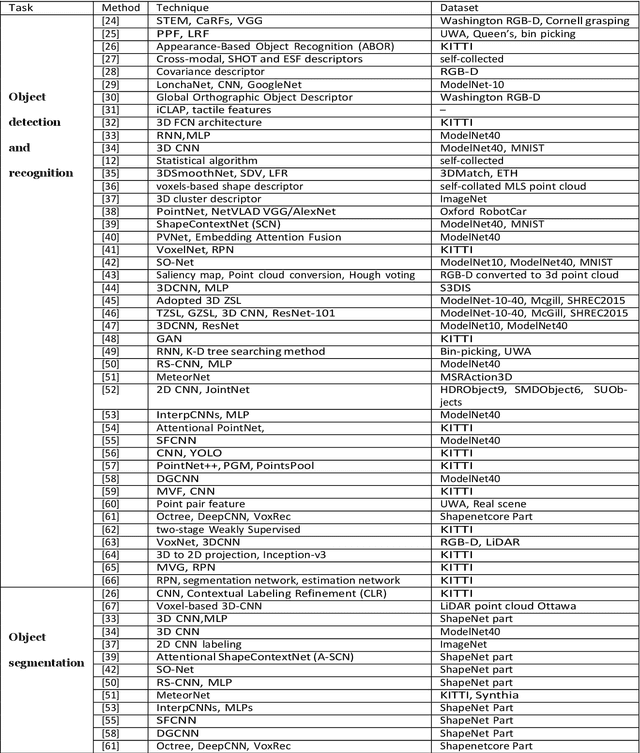

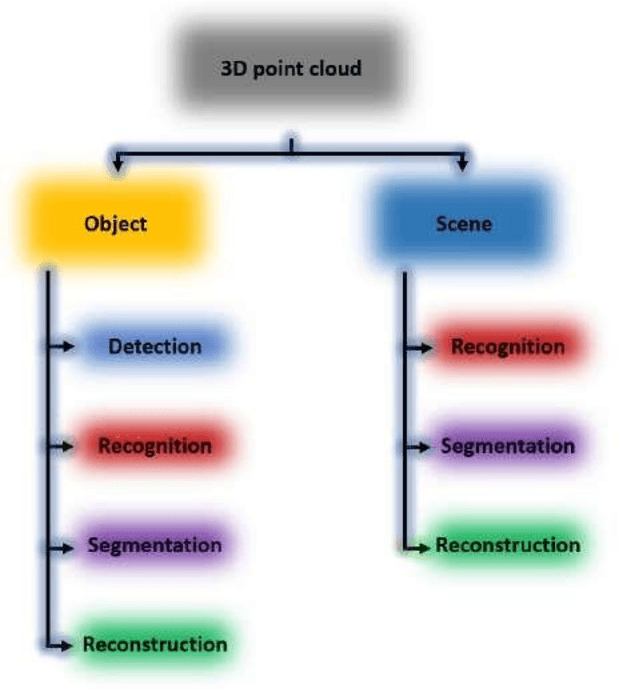

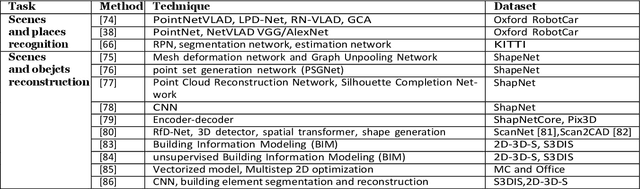

Abstract:Three-dimensional (3D) point cloud analysis has become an attractive subject in realistic imaging and machine vision due to its simplicity, flexibility and powerful capacity for visualization. Indeed, representing scenes and buildings using 3D shapes and formats has enabled many applications, including autonomous driving and scene and object reconstruction. Nevertheless, working with this emerging type of data has been a challenging task for object representation, scene recognition, segmentation, and reconstruction. In this regard, a significant effort has recently been devoted to developing novel strategies, using different techniques such as deep learning models. To that end, we present in this paper a comprehensive review of existing tasks on 3D point clouds: a well-defined taxonomy of existing techniques is presented based on the nature of the adopted algorithms, application scenarios, and main objectives. Various tasks performed on 3D point cloud data are investigated, including object and scene detection, recognition, segmentation and reconstruction. In addition, we introduce a list of commonly used datasets, discuss the respective evaluation metrics, and compare the performance of existing solutions to better inform the state-of-the-art and identify their limitations and strengths. Lastly, we elaborate on current challenges facing the technology and on future trends attracting considerable interest, which could be a starting point for upcoming research studies.

Deep Transfer Learning for Automatic Speech Recognition: Towards Better Generalization

Apr 27, 2023

Abstract:Automatic speech recognition (ASR) has recently become an important challenge when using deep learning (DL). It requires large-scale training datasets and high computational and storage resources. Moreover, DL techniques, and machine learning (ML) approaches in general, assume that training and testing data come from the same domain, with the same input feature space and data distribution characteristics. This assumption, however, does not hold in some real-world artificial intelligence (AI) applications. Moreover, there are situations where real data are challenging or expensive to gather, or occur rarely, so the data requirements of DL models cannot be met. Deep transfer learning (DTL) has been introduced to overcome these issues; it helps develop high-performing models using real datasets that are small or slightly different but related to the training data. This paper presents a comprehensive survey of DTL-based ASR frameworks to shed light on the latest developments and help academics and professionals understand current challenges. Specifically, after presenting the DTL background, a well-designed taxonomy is adopted to inform the state-of-the-art. A critical analysis is then conducted to identify the limitations and advantages of each framework. Moving on, a comparative study is introduced to highlight the current challenges before deriving opportunities for future research.

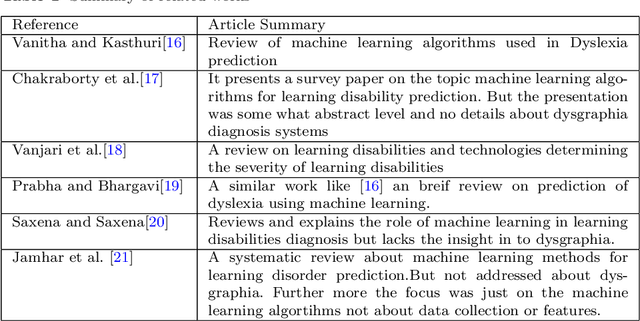

Automated Systems For Diagnosis of Dysgraphia in Children: A Survey and Novel Framework

Jun 27, 2022

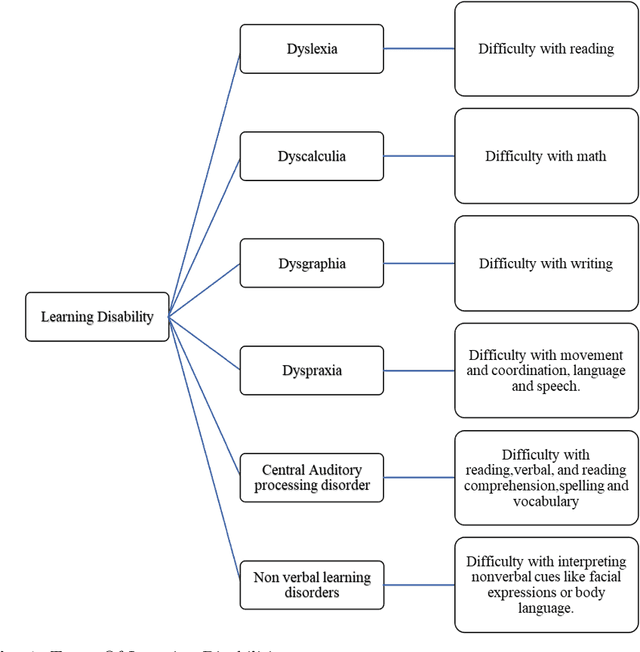

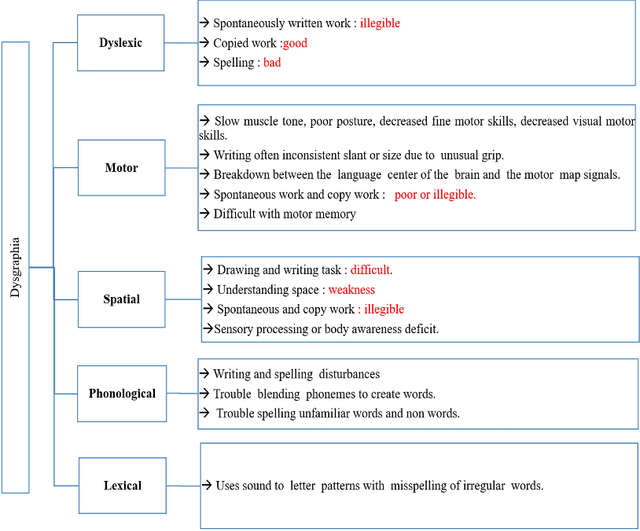

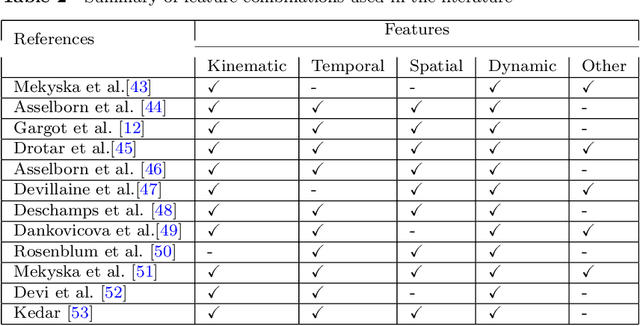

Abstract:Learning disabilities, which primarily interfere with basic learning skills such as reading, writing and math, are known to affect around 10% of children in the world. Poor motor skills and motor coordination, as part of a neurodevelopmental disorder, can become a causative factor for difficulty in learning to write (dysgraphia), hindering the academic track of an individual. The signs and symptoms of dysgraphia include but are not limited to irregular handwriting, improper handling of the writing medium, slow or labored writing, unusual hand position, etc. The widely accepted assessment criterion for all types of learning disabilities is the examination performed by medical experts. The few available artificial intelligence-powered screening systems for dysgraphia rely on the distinctive features of handwriting from the corresponding images. This work presents a review of the existing automated dysgraphia diagnosis systems for children in the literature. The main focus of the work is to review artificial intelligence-based systems for dysgraphia diagnosis in children. This work discusses the data collection methods, important handwriting features, and machine learning algorithms employed in the literature for the diagnosis of dysgraphia. Apart from that, this article also discusses some non-artificial intelligence-based automated systems. Furthermore, this article discusses the drawbacks of existing systems and proposes a novel framework for dysgraphia diagnosis.

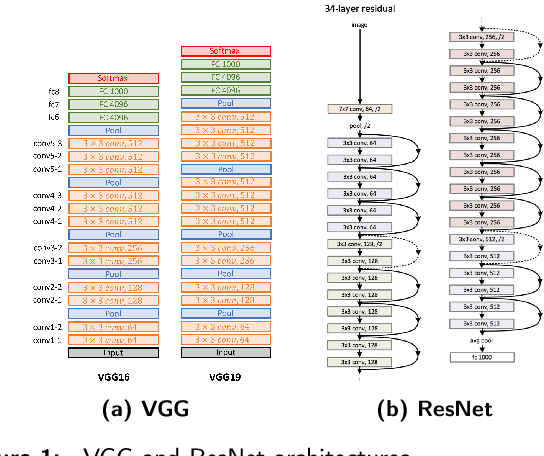

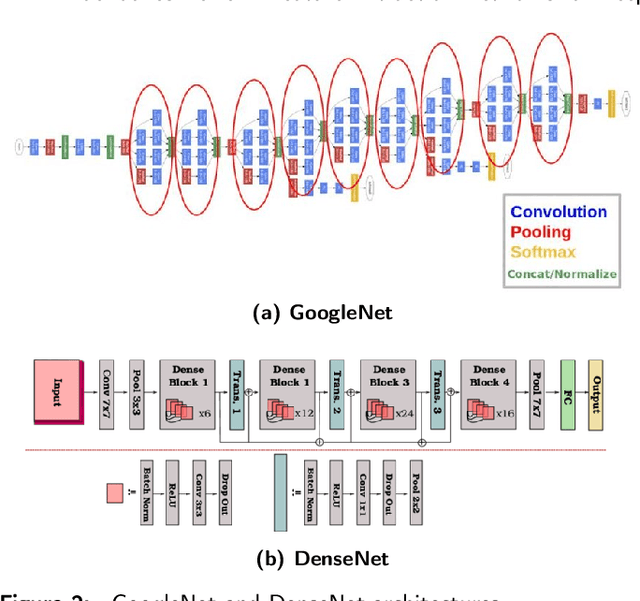

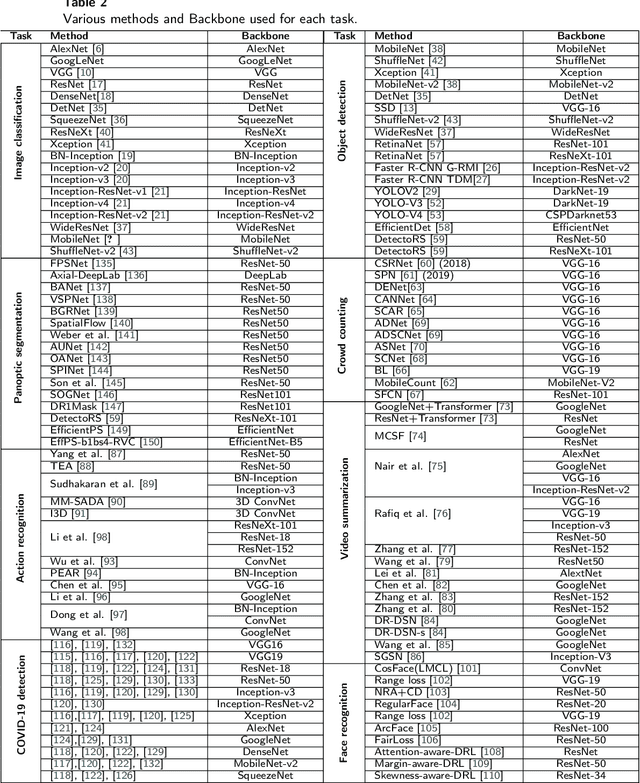

Backbones-Review: Feature Extraction Networks for Deep Learning and Deep Reinforcement Learning Approaches

Jun 16, 2022

Abstract:Artificial Intelligence (AI) is currently the most widely used technique for understanding the real world from various types of data, and finding patterns within the analyzed data is its main task. This is done through a feature extraction step, traditionally performed with statistical algorithms or specific filters. However, selecting useful features from large-scale data has been a crucial challenge. With the development of convolutional neural networks (CNNs), feature extraction has become more automatic and easier. CNNs make it possible to work on large-scale data and to cover different scenarios for a specific task. In computer vision, convolutional networks are used to extract features and also serve in other parts of a deep learning (DL) model. Selecting a suitable network for feature extraction or for the other parts of a DL model is not arbitrary: it depends on the target task as well as on computational complexity. Many networks have been proposed and have become widely used across DL models and AI tasks. When such networks are used for feature extraction at the beginning of a DL model, they are called backbones. A backbone is a known network that has been trained on many other tasks and has demonstrated its effectiveness. In this paper, an overview of the existing backbones, e.g. VGGs, ResNets, DenseNet, etc., is given with a detailed description. A couple of computer vision tasks are also discussed, with a review of each task in terms of the backbones used. In addition, a performance comparison is provided, based on the backbone used for each task.
