Fouad Khelifi

Robust Palm-Vein Recognition Using the MMD Filter: Improving SIFT-Based Feature Matching

Mar 03, 2025

Abstract: A major challenge with palm vein images is that slight movements of the fingers and thumb, or variations in hand posture, can stretch the skin in different areas and alter the vein patterns. This can result in an infinite number of variations in palm vein images for a given individual. This paper introduces a novel filtering technique for SIFT-based feature matching, known as the Mean and Median Distance (MMD) Filter. This method evaluates the differences in keypoint coordinates and computes the mean and median in each direction to eliminate incorrect matches. Experiments conducted on the 850 nm subset of the CASIA dataset indicate that the proposed MMD filter effectively preserves correct points while reducing false positives detected by other filtering methods. A comparison with existing SIFT-based palm vein recognition systems demonstrates that the proposed MMD filter delivers outstanding performance, achieving lower Equal Error Rate (EER) values. This article presents an extended author's version based on our previous work, "A Keypoint Filtering Method for SIFT based Palm-Vein Recognition".
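The abstract describes the MMD filter only at a high level, so the following is a minimal sketch of the general idea rather than the authors' implementation. It assumes that a match is kept when its coordinate displacement stays within a tolerance of both the mean and the median displacement along each axis. The function name `mmd_filter` and the `tol` parameter are hypothetical and do not come from the paper.

```python
from statistics import mean, median


def mmd_filter(matches, tol=10.0):
    """Keep matches whose displacement is close to the mean AND median displacement.

    matches: list of ((x1, y1), (x2, y2)) keypoint coordinate pairs.
    tol: hypothetical pixel tolerance (an assumption, not taken from the paper).
    """
    if not matches:
        return []

    # Coordinate differences of every match in each direction.
    dx = [q[0] - p[0] for p, q in matches]
    dy = [q[1] - p[1] for p, q in matches]

    # Mean and median displacement per direction.
    mean_dx, med_dx = mean(dx), median(dx)
    mean_dy, med_dy = mean(dy), median(dy)

    kept = []
    for match, ddx, ddy in zip(matches, dx, dy):
        close_in_x = abs(ddx - mean_dx) <= tol and abs(ddx - med_dx) <= tol
        close_in_y = abs(ddy - mean_dy) <= tol and abs(ddy - med_dy) <= tol
        if close_in_x and close_in_y:
            kept.append(match)
    return kept


# Four consistent matches and one match with a very different displacement.
matches = [
    ((10, 10), (15, 13)),
    ((20, 30), (26, 32)),
    ((40, 15), (44, 18)),
    ((55, 60), (60, 64)),
    ((30, 30), (90, -10)),
]
kept = mmd_filter(matches, tol=15.0)
```

In this toy input the last match moves by (60, -40) while the others move by roughly (5, 3), so it falls outside the tolerance around the mean and is discarded. Requiring agreement with the median as well makes the test less sensitive to a mean that has been pulled toward outliers.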

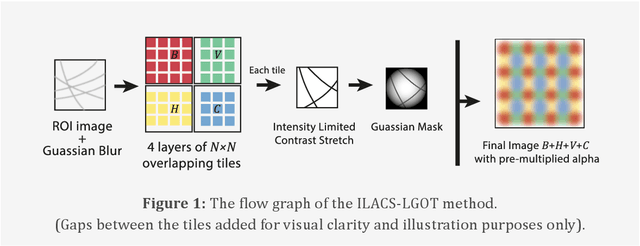

ILACS-LGOT: A Multi-Layer Contrast Enhancement Approach for Palm-Vein Images

Feb 26, 2025

Abstract: This article presents an extended author's version based on our previous work, where we introduced the Multiple Overlapping Tiles (MOT) method for palm vein image enhancement. To better reflect the specific operations involved, we rename MOT to ILACS-LGOT (Intensity-Limited Adaptive Contrast Stretching with Layered Gaussian-weighted Overlapping Tiles). This revised terminology more accurately represents the method's approach to contrast enhancement and blocky effect mitigation. Additionally, this article provides a more detailed analysis, including expanded evaluations, graphical representations, and sample-based comparisons, demonstrating the effectiveness of ILACS-LGOT over existing methods.

PDC-ViT: Source Camera Identification using Pixel Difference Convolution and Vision Transformer

Jan 27, 2025

Abstract: Source camera identification has emerged as a vital tool for resolving incidents involving critical cases such as terrorism, violence, and other criminal activities. The ability to trace the origin of an image or video can aid law enforcement agencies in gathering evidence and constructing the timeline of events. Moreover, identifying the owner of a certain device narrows down the area of search in a criminal investigation where smartphone devices are involved. This paper proposes a new pixel-based method for source camera identification, named PDC-ViT, which integrates Pixel Difference Convolution (PDC) with a Vision Transformer (ViT) network. The PDC acts as the backbone for feature extraction by exploiting Angular PDC (APDC) and Radial PDC (RPDC). These techniques enhance the capability to capture subtle variations in pixel information, which are crucial for distinguishing between different source cameras. The second part of the methodology focuses on classification, which is based on a Vision Transformer network. Unlike traditional methods that use image patches directly for training the classification network, the proposed approach feeds PDC features into the Vision Transformer network. To demonstrate the effectiveness of the PDC-ViT approach, it has been assessed on five different datasets, which include various image contents and video scenes. The method has also been compared with state-of-the-art source camera identification methods. Experimental results demonstrate the effectiveness and superiority of the proposed system in terms of accuracy and robustness when compared to its competitors. For example, the proposed PDC-ViT achieved an accuracy of 94.30%, 84%, 94.22% and 92.29% on the Vision, Daxing, Socrates and QUFVD datasets, respectively.
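To make the angular variant more concrete, here is a small sketch of one common formulation of angular pixel difference convolution on a 3x3 window, where each weight multiplies the difference between two neighbouring pixels on the ring around the centre instead of a raw pixel value. This is an assumption about the general technique, not the PDC-ViT implementation: the abstract does not give the exact definition, and the names `RING` and `angular_pdc` are hypothetical.

```python
# Offsets of the 8 neighbours in a 3x3 window, clockwise from the top-left corner.
RING = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def angular_pdc(image, weights):
    """Apply an angular pixel-difference filter to a 2D list of numbers.

    image: list of equal-length rows.
    weights: 8 values, one per neighbouring pair on the ring (assumed layout).
    Returns the 'valid' output, i.e. without the one-pixel border.
    """
    height, width = len(image), len(image[0])
    output = []
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            ring = [image[r + dr][c + dc] for dr, dc in RING]
            # Each weight multiplies the difference between a ring pixel
            # and its clockwise successor, not the pixel intensity itself.
            value = sum(
                weights[k] * (ring[k] - ring[(k + 1) % 8]) for k in range(8)
            )
            row.append(value)
        output.append(row)
    return output


flat = [[7] * 4 for _ in range(4)]
ramp = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
flat_response = angular_pdc(flat, [1] * 8)
ramp_response = angular_pdc(ramp, [1, 0, 0, 0, 0, 0, 0, 0])
```

Because the filter operates on differences, a constant region produces a zero response regardless of the weights, which is one reason difference-based features emphasise subtle local variations rather than image content.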

Convolutional Neural Network-Based Automatic Classification of Colorectal and Prostate Tumor Biopsies Using Multispectral Imagery: System Development Study

Jan 30, 2023

Abstract: Colorectal and prostate cancers are among the most common types of cancer in men worldwide. To diagnose colorectal and prostate cancer, a pathologist performs a histological analysis on needle biopsy samples. This manual process is time-consuming and error-prone, resulting in high intra- and interobserver variability, which affects diagnosis reliability. This study aims to develop an automatic computerized system for diagnosing colorectal and prostate tumors by using images of biopsy samples to reduce time and diagnosis error rates associated with human analysis. We propose a CNN model for classifying colorectal and prostate tumors from multispectral images of biopsy samples. The key idea was to remove the last block of the convolutional layers and halve the number of filters per layer. Our results showed excellent performance, with an average test accuracy of 99.8% and 99.5% for the prostate and colorectal data sets, respectively. The system showed excellent performance when compared with pretrained CNNs and other classification methods, as it avoids the preprocessing phase while using a single CNN model for classification. The proposed CNN was detailed and compared with previously trained network models used as feature extractors. These CNNs were also compared with other classification techniques. As opposed to pretrained CNNs and other classification approaches, the proposed CNN yielded excellent results. The computational complexity of the CNNs was also investigated, and it was shown that the proposed CNN is better at classifying images than pretrained networks because it does not require preprocessing. Thus, the overall analysis was that the proposed CNN architecture was globally the best-performing system for classifying colorectal and prostate tumor images.
