Hamza Kheddar

A Robust Cross-Domain IDS using BiGRU-LSTM-Attention for Medical and Industrial IoT Security

Aug 17, 2025

Abstract:The increased interconnectivity of the Internet of Medical Things (IoMT) and the Industrial Internet of Things (IIoT) has introduced complex cybersecurity challenges, exposing sensitive data, patient safety, and industrial operations to advanced cyber threats. To mitigate these risks, this paper introduces a novel transformer-based intrusion detection system (IDS), termed BiGAT-ID, a hybrid model that combines bidirectional gated recurrent units (BiGRU), long short-term memory (LSTM) networks, and multi-head attention (MHA). The proposed architecture is designed to capture bidirectional temporal dependencies, model sequential patterns, and enhance contextual feature representation. Extensive experiments on two benchmark datasets, CICIoMT2024 (medical IoT) and EdgeIIoTset (industrial IoT), demonstrate the model's cross-domain robustness, with detection accuracies of 99.13% and 99.34%, respectively. The model is also efficient at runtime, with inference times as low as 0.0002 seconds per instance in the IoMT scenario and 0.0001 seconds in the IIoT scenario. Combined with a low false positive rate, these results indicate that BiGAT-ID is a reliable and efficient IDS for deployment in real-world heterogeneous IoT environments.

Transfer Learning-Based Deep Residual Learning for Speech Recognition in Clean and Noisy Environments

May 02, 2025

Abstract:Mitigating the detrimental impact of non-stationary environmental noise on automatic speech recognition (ASR) has long been a significant research focus, and despite recent advances, it remains a major concern. Data-driven supervised approaches, such as deep neural networks, have recently emerged as promising alternatives to traditional unsupervised methods; given extensive training, they have the potential to cope with the diverse acoustic environments of real life. In this light, this paper introduces a novel neural framework that incorporates a robust frontend into ASR systems for both clean and noisy environments. Using the Aurora-2 speech database, the authors evaluate the effectiveness of a Mel-frequency acoustic feature set combined with transfer learning based on a residual neural network (ResNet). The experimental results show a significant improvement in recognition accuracy over convolutional neural networks (CNN) and long short-term memory (LSTM) networks, with accuracies of 98.94% in clean conditions and 91.21% in noisy conditions.
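
The abstract does not detail how the Mel-frequency features are computed. As a rough illustration only, the sketch below shows the common HTK-style Hz-to-mel mapping, m = 2595 * log10(1 + f / 700), and how the centre frequencies of a triangular mel filterbank can be spaced uniformly on that scale. The function names, the 26-filter count, and the 4 kHz upper limit (the Nyquist frequency for 8 kHz audio) are illustrative assumptions, not parameters taken from the paper.

```python
import math
from typing import List


def hz_to_mel(f_hz: float) -> float:
    """Map a frequency in Hz to the mel scale (HTK-style formula)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(n_filters: int, f_min: float, f_max: float) -> List[float]:
    """Centre frequencies (Hz) of n_filters triangles spaced uniformly in mel.

    n_filters + 2 points are spread evenly between hz_to_mel(f_min) and
    hz_to_mel(f_max); the two outer points only serve as triangle edges.
    """
    m_lo, m_hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_hi - m_lo) / (n_filters + 1)
    edges = [mel_to_hz(m_lo + i * step) for i in range(n_filters + 2)]
    return edges[1:-1]


def triangular_weight(f_hz: float, left: float, center: float, right: float) -> float:
    """Weight of one triangular filter at frequency f_hz (peak of 1.0 at center)."""
    if f_hz <= left or f_hz >= right:
        return 0.0
    if f_hz <= center:
        return (f_hz - left) / (center - left)
    return (right - f_hz) / (right - center)


if __name__ == "__main__":
    # Hypothetical setup: 26 filters between 0 Hz and 4 kHz.
    centres = mel_center_frequencies(26, 0.0, 4000.0)
    print([round(c) for c in centres[:5]])
```

Because the centres are uniform in mel rather than in Hz, the filters are narrow at low frequencies and progressively wider toward f_max.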

Enhancing Cochlear Implant Signal Coding with Scaled Dot-Product Attention

Apr 26, 2025

Abstract:Cochlear implants (CIs) play a vital role in restoring hearing for individuals with severe to profound sensorineural hearing loss by directly stimulating the auditory nerve with electrical signals. While traditional coding strategies, such as the advanced combination encoder (ACE), have proven effective, they are limited in adaptability and precision. This paper investigates the use of deep learning (DL) techniques to generate electrodograms for CIs and presents our model as an advanced alternative. We compared the performance of our model with the ACE strategy by evaluating the intelligibility of reconstructed audio signals using the short-time objective intelligibility (STOI) metric. The results indicate that our model achieves a STOI score of 0.6031, close to the 0.6126 score of the ACE strategy, while offering potential advantages in flexibility and adaptability. This study underscores the benefits of incorporating artificial intelligence (AI) into CI technology, such as enhanced personalization and efficiency.
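
The title names scaled dot-product attention, but the abstract does not say where it sits in the model. For reference, a minimal pure-Python sketch of the standard operation, Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, is given below. Treating rows as, for example, frames of audio features is an assumption for illustration; this is not the authors' architecture.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for row-major lists of lists.

    q: n_queries x d_k, k: n_keys x d_k, v: n_keys x d_v.
    """
    d_k = len(k[0])
    scale = 1.0 / math.sqrt(d_k)
    out: Matrix = []
    for q_row in q:
        # Similarity of this query with every key, scaled to keep softmax well-conditioned.
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        weights = softmax(scores)
        # Weighted sum of value rows, one output column at a time.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


if __name__ == "__main__":
    # Identical keys give uniform weights, so the output is the mean of V's rows: [[3.0, 1.0]]
    print(scaled_dot_product_attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [4.0, 2.0]]))
```

The 1/sqrt(d_k) factor keeps dot products from growing with the feature dimension, which would otherwise push the softmax toward near one-hot weights.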

Improving Pretrained YAMNet for Enhanced Speech Command Detection via Transfer Learning

Apr 26, 2025

Abstract:This work addresses the need for greater accuracy and efficiency in speech command recognition systems, a critical component for improving user interaction in various smart applications. Leveraging the pretrained YAMNet model and transfer learning, this study develops a method that significantly improves speech command recognition. We adapt and train a YAMNet deep learning model to detect and interpret speech commands from audio signals. Using the extensively annotated Speech Commands dataset (speech_commands_v0.01), our approach demonstrates the practical application of transfer learning to accurately recognize a predefined set of speech commands. The dataset is augmented, and features are extracted to boost model performance. As a result, the final model achieved a recognition accuracy of 95.28%, underscoring the impact of advanced machine learning techniques on speech command recognition. This achievement marks substantial progress in audio processing technologies and establishes a new benchmark for future research in the field.
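
The abstract does not specify which augmentations were applied. One common option for speech data is mixing background noise at a controlled signal-to-noise ratio, SNR_dB = 10 * log10(P_speech / P_noise). The sketch below assumes that technique for illustration only; the function names and the synthetic tone used as "speech" are hypothetical.

```python
import math
import random
from typing import List, Sequence


def mean_power(x: Sequence[float]) -> float:
    """Average power (mean squared amplitude) of a signal."""
    return sum(s * s for s in x) / len(x)


def add_noise_at_snr(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Mix noise into speech so that 10*log10(P_speech / P_noise) equals snr_db."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[: len(speech)]
    p_speech = mean_power(speech)
    p_noise = mean_power(noise)
    if p_noise == 0.0:
        raise ValueError("noise has zero power")
    # Noise power required for the target SNR, then the amplitude gain that reaches it.
    target_p_noise = p_speech / (10.0 ** (snr_db / 10.0))
    gain = math.sqrt(target_p_noise / p_noise)
    return [s + gain * n for s, n in zip(speech, noise)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical 1 s, 440 Hz tone at 16 kHz standing in for a speech clip.
    speech = [0.5 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(16000)]
    noisy = add_noise_at_snr(speech, noise, snr_db=10.0)
    residual = [y - s for y, s in zip(noisy, speech)]
    print(round(10 * math.log10(mean_power(speech) / mean_power(residual)), 3))
```

Measuring the SNR of the added component should recover a value very close to the requested 10 dB; sweeping snr_db over several values yields progressively harder training examples.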

Automatic Speech Recognition with BERT and CTC Transformers: A Review

Oct 12, 2024

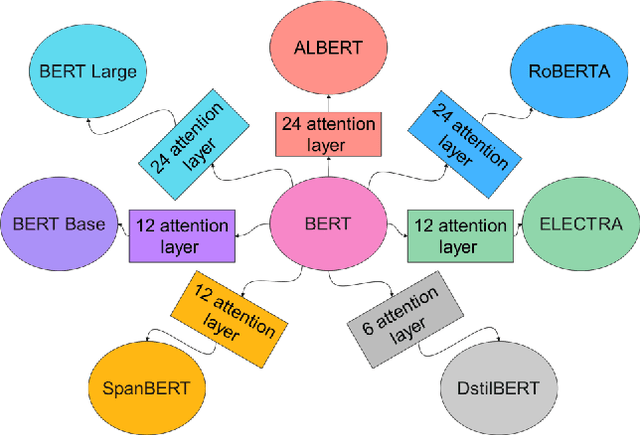

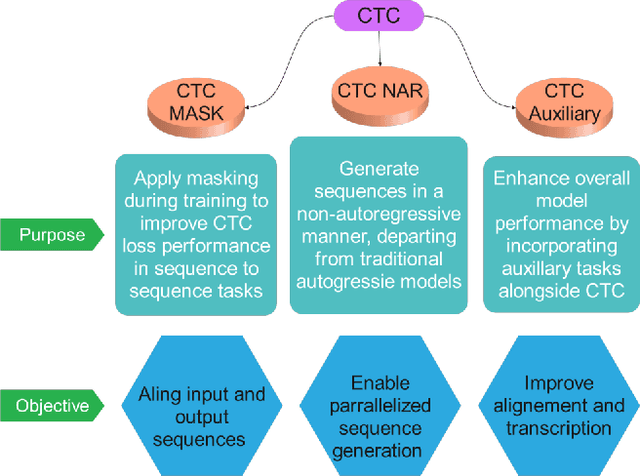

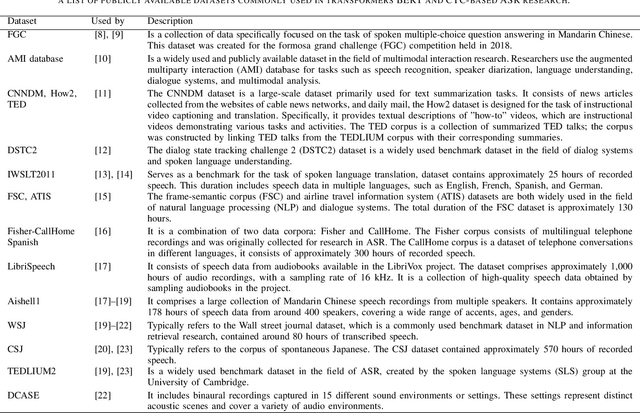

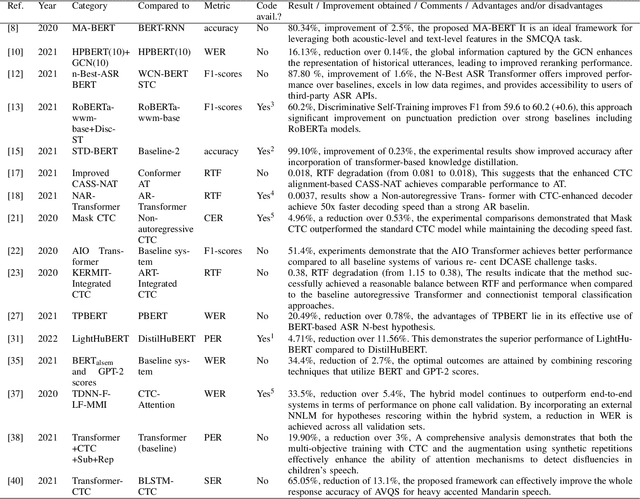

Abstract:This review paper provides a comprehensive analysis of recent advances in automatic speech recognition (ASR) with bidirectional encoder representations from transformers (BERT) and connectionist temporal classification (CTC) transformers. The paper first introduces the fundamental concepts of ASR and discusses its associated challenges. It then explains the architectures of BERT and CTC transformers and their potential applications in ASR. The paper reviews several studies that have used these models for speech recognition tasks and discusses the results obtained. Additionally, it highlights the limitations of these models and outlines potential areas for further research. Overall, this review provides insights for researchers and practitioners interested in ASR with BERT and CTC transformers.
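
As background to the CTC part of the review, here is a minimal sketch of greedy (best-path) CTC decoding: take the most likely label per frame, merge consecutive repeats, then drop the blank symbol. Using index 0 as the blank and representing model outputs as per-frame probability lists are assumptions for illustration; beam search and language-model fusion are not shown.

```python
from typing import List, Optional, Sequence


def collapse_ctc_path(path: Sequence[int], blank: int = 0) -> List[int]:
    """Merge consecutive repeated labels, then remove blanks (the CTC collapse rule)."""
    decoded: List[int] = []
    prev: Optional[int] = None
    for label in path:
        # A label is emitted only when it differs from the previous frame and is not blank.
        if label != prev and label != blank:
            decoded.append(label)
        prev = label
    return decoded


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], blank: int = 0) -> List[int]:
    """Best-path decoding: argmax label per frame, then apply the collapse rule."""
    best_path = [max(range(len(p)), key=lambda i: p[i]) for p in frame_probs]
    return collapse_ctc_path(best_path, blank)


if __name__ == "__main__":
    # The blank between the two 1s keeps them as separate outputs: [1, 1, 2]
    print(collapse_ctc_path([1, 1, 0, 1, 2, 2, 0]))
```

The blank symbol is what lets CTC represent genuinely repeated characters (as in "ll"), since plain repeat-merging alone would fuse them.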

Deep Learning Techniques for Hand Vein Biometrics: A Comprehensive Review

Sep 11, 2024

Abstract:Biometric authentication has garnered significant attention as a secure and efficient method of identity verification. Among the various modalities, hand vein biometrics, including finger vein, palm vein, and dorsal hand vein recognition, offer unique advantages due to their high accuracy, low susceptibility to forgery, and non-intrusiveness. The vein patterns within the hand are highly complex and distinct for each individual, making them an ideal biometric identifier. Additionally, hand vein recognition is contactless, enhancing user convenience and hygiene compared to other modalities such as fingerprint or iris recognition. Furthermore, because the veins lie beneath the skin, they are less susceptible to damage or alteration, which enhances the security and reliability of the biometric system. Together, these factors make hand vein biometrics a highly effective and secure method for identity verification. This review paper examines the latest advances in deep learning techniques applied to finger vein, palm vein, and dorsal hand vein recognition. It covers the essential fundamentals of hand vein biometrics, summarizes publicly available datasets, and discusses the state-of-the-art metrics used to evaluate the three modalities. Moreover, it provides a comprehensive overview of the approaches proposed for finger, palm, dorsal, and multimodal vein recognition, offering insights into the best reported performance, data augmentation techniques, and effective transfer learning methods, along with the associated pretrained deep learning models. Finally, the review addresses open research challenges and outlines future directions and perspectives, encouraging researchers to enhance existing methods and propose innovative techniques.
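
The abstract does not list the metrics it surveys. The equal error rate (EER), the operating point where the false acceptance rate (FAR) equals the false rejection rate (FRR), is one that is widely reported for vein verification. The sketch below approximates the EER by scanning the observed scores as thresholds, assuming that higher scores mean a better match; the function names and score values are hypothetical.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float], threshold: float) -> Tuple[float, float]:
    """FAR and FRR when a comparison is accepted if score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors wrongly accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # genuine users wrongly rejected
    return far, frr


def equal_error_rate(genuine: Sequence[float], impostor: Sequence[float]) -> Tuple[float, float]:
    """Approximate EER by choosing the observed threshold that minimises |FAR - FRR|.

    Returns (eer, threshold), with eer taken as the mean of FAR and FRR at that threshold.
    """
    best_gap, best_eer, best_t = float("inf"), 1.0, 0.0
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        if abs(far - frr) < best_gap:
            best_gap, best_eer, best_t = abs(far - frr), (far + frr) / 2.0, t
    return best_eer, best_t


if __name__ == "__main__":
    genuine = [0.6, 0.7, 0.8, 0.9]     # hypothetical same-person match scores
    impostor = [0.1, 0.2, 0.65, 0.75]  # hypothetical different-person match scores
    print(equal_error_rate(genuine, impostor))
```

Raising the threshold trades false acceptances for false rejections; the EER summarises that trade-off in a single number, with lower being better.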

Deep Transfer Learning for Kidney Cancer Diagnosis

Aug 08, 2024

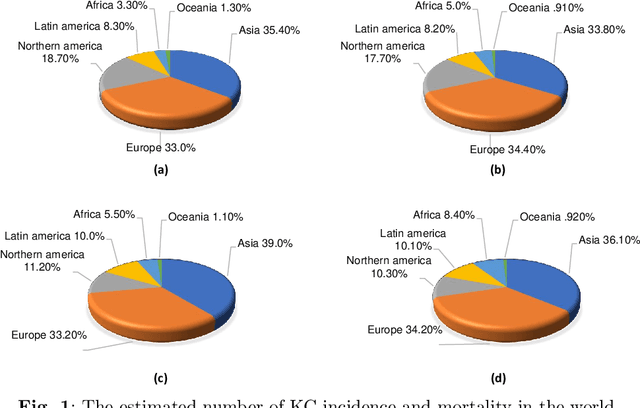

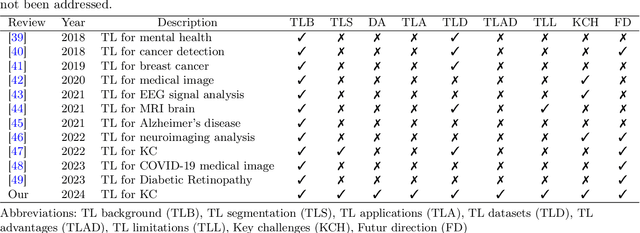

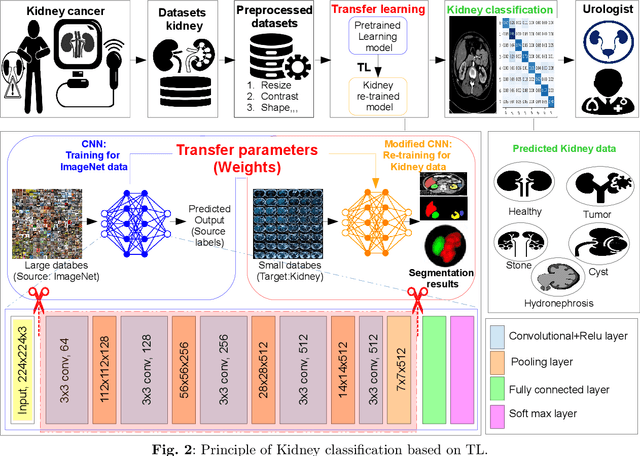

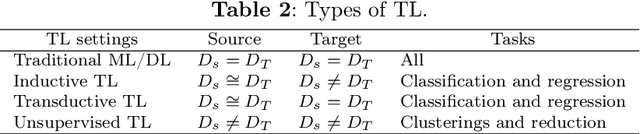

Abstract:Many incurable diseases prevalent across global societies stem from various influences, including lifestyle choices, economic conditions, social factors, and genetics. Research focuses heavily on these diseases because of their widespread nature, aiming to decrease mortality, enhance treatment options, and improve healthcare standards. Among them, kidney disease stands out as a particularly severe condition affecting men and women worldwide, and there remains a pressing need for research into innovative early diagnostic methods that can lead to more effective treatments. Automatic diagnosis of kidney cancer with deep learning (DL) has recently become an important challenge, largely because DL depends on medical training datasets, which are in most cases difficult and expensive to obtain. Furthermore, such algorithms usually require data from the same domain and a powerful computer with ample storage capacity. To overcome these issues, transfer learning (TL), which reuses knowledge from models pretrained on other data, has been proposed and can produce impressive results. This paper presents, to the best of the authors' knowledge, the first comprehensive survey of DL-based TL frameworks for kidney cancer diagnosis, with the aim of helping researchers understand the current challenges and perspectives of this topic. The main advantages and limitations of each framework are identified, and detailed critical analyses are provided. Looking ahead, the article identifies promising directions for future research. Finally, the discussion concludes by reflecting on the pivotal role of TL in the development of precision medicine and its effects on clinical practice and research in oncology.

Advancing 3D Point Cloud Understanding through Deep Transfer Learning: A Comprehensive Survey

Jul 25, 2024

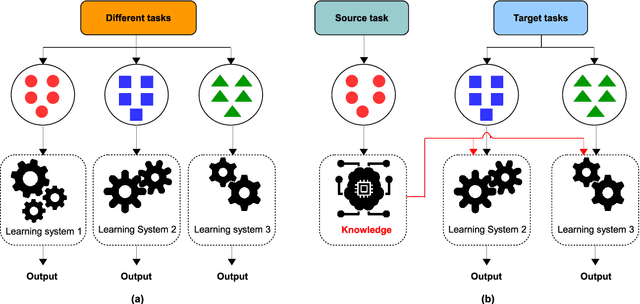

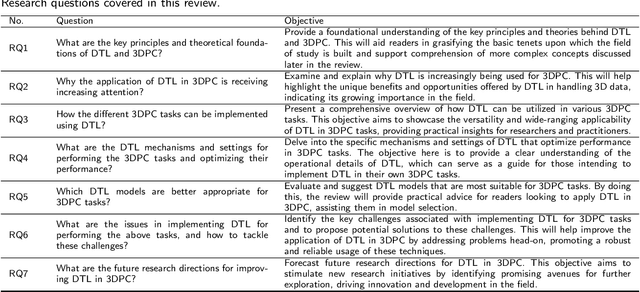

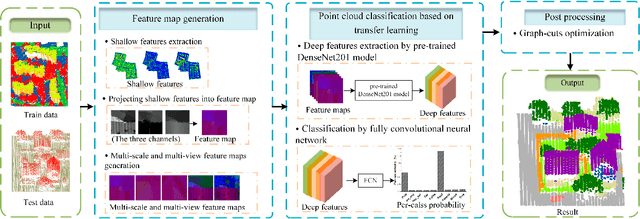

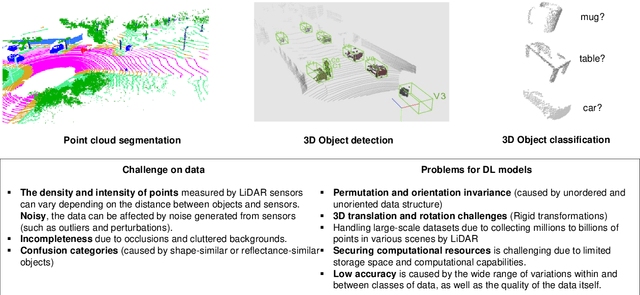

Abstract:3D point cloud (3DPC) processing has evolved significantly and benefited from advances in deep learning (DL). However, DL faces various issues, including the lack of data or annotated data, a significant gap between training and test data, and the requirement for high computational resources. To address this, deep transfer learning (DTL), which reduces dependency and costs by using knowledge gained from a source data/task to train a target data/task, has been widely investigated. Numerous DTL frameworks have been proposed for aligning point clouds obtained from several scans of the same scene. Additionally, domain adaptation (DA), a subset of DTL, has been adapted to enhance point cloud data quality by dealing with noise and missing points. Overall, fine-tuning and DA approaches have demonstrated their effectiveness in addressing the distinct difficulties inherent in point cloud data. This paper presents the first review shedding light on this aspect: it provides a comprehensive overview of the latest techniques for understanding 3DPC using DTL and DA. Accordingly, the background of DTL is first presented along with the datasets and evaluation metrics. A well-defined taxonomy is introduced, and detailed comparisons are presented, considering aspects such as knowledge transfer strategies and performance. The paper covers various applications, such as 3DPC object detection, semantic labeling, segmentation, classification, registration, downsampling/upsampling, and denoising. Furthermore, the article discusses the advantages and limitations of the presented frameworks, identifies open challenges, and suggests potential research directions.
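
Of the applications listed, downsampling is the simplest to illustrate. Farthest point sampling (FPS), used for example in PointNet++, greedily picks the point farthest from those already chosen, which tends to preserve coverage of the shape better than uniform random sampling. The sketch below is a plain O(N·k) illustration assuming points are (x, y, z) tuples; it is a generic technique, not a method taken from the survey.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def farthest_point_sampling(points: Sequence[Point], k: int, start: int = 0) -> List[int]:
    """Return indices of k points chosen by greedy farthest point sampling.

    Assumes the cloud contains at least k distinct points.
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be in 1..len(points)")
    selected = [start]
    # Distance from every point to its nearest already-selected point.
    nearest = [math.dist(p, points[start]) for p in points]
    while len(selected) < k:
        # Pick the point that is currently farthest from the selected set.
        nxt = max(range(len(points)), key=lambda i: nearest[i])
        selected.append(nxt)
        # Update nearest-selected distances with the newly added point.
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < nearest[i]:
                nearest[i] = d
    return selected


if __name__ == "__main__":
    # Hypothetical points on a line: the far outlier is picked before the middle points.
    cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    print(farthest_point_sampling(cloud, 3))
```

Production pipelines usually vectorise this loop on the GPU, but the greedy selection rule is the same.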

Enhancing IoT Security with CNN and LSTM-Based Intrusion Detection Systems

May 28, 2024

Abstract:Protecting Internet of Things (IoT) devices against cyber attacks is imperative owing to their inherent security vulnerabilities. These vulnerabilities can be exploited by a spectrum of sophisticated attacks that cause significant damage to both individuals and organizations. Employing robust security measures such as intrusion detection systems (IDSs) is essential to address these problems and protect IoT systems from such attacks. In this context, our proposed IDS model combines convolutional neural network (CNN) and long short-term memory (LSTM) deep learning (DL) models. This fusion classifies IoT traffic into two categories, benign and malicious, by leveraging the spatial feature extraction capabilities of CNN for pattern recognition and the sequential memory retention of LSTM for discerning complex temporal dependencies, thereby improving accuracy and efficiency. To assess the performance of the proposed model, the authors used the new CICIoT2023 dataset for both training and final testing, and further validated the model in a final testing phase on the CICIDS2017 dataset. The proposed model achieves an accuracy of 98.42% with a loss of 0.0275. The false positive rate (FPR), an equally important metric, is 9.17%, and the F1-score is 98.57%. These results demonstrate the effectiveness of the proposed CNN-LSTM IDS model in fortifying IoT environments against potential cyber threats.
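
For readers comparing the reported figures, the sketch below shows the standard definitions behind accuracy, FPR, and F1-score for a binary benign/malicious IDS, treating "malicious" as the positive class. The confusion-matrix counts are made up for illustration and are not the paper's results.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # malicious flows flagged as malicious
    fp: int  # benign flows flagged as malicious (false alarms)
    tn: int  # benign flows passed as benign
    fn: int  # malicious flows missed

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    def false_positive_rate(self) -> float:
        # Fraction of benign traffic wrongly raised as an alert.
        return self.fp / (self.fp + self.tn)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def f1(self) -> float:
        # Harmonic mean of precision and recall.
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)


if __name__ == "__main__":
    cm = Confusion(tp=90, fp=5, tn=95, fn=10)  # hypothetical counts
    print(f"acc={cm.accuracy():.3f} fpr={cm.false_positive_rate():.3f} f1={cm.f1():.3f}")
```

Because FPR is normalised by benign traffic only, a model can show high accuracy and F1 while still having a noticeable FPR when benign samples are a small share of the test set.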

High-energy physics image classification: A Survey of Jet Applications

Mar 18, 2024

Abstract:In recent years, high-energy physics (HEP) experimentation and phenomenological studies have increasingly integrated machine learning (ML) and its specialized branch, deep learning (DL). This survey offers a comprehensive assessment of these applications across various DL approaches. The first part of the paper introduces the fundamentals of diverse particle physics types and establishes criteria for evaluating particle physics in tandem with learning models. Next, a comprehensive taxonomy for representing HEP images is presented, covering accessible datasets, details of preprocessing techniques, and methods of feature extraction and selection. The focus then shifts to the artificial intelligence (AI) models available for HEP images, with a concentrated examination of HEP image classification for jet particles. The review investigates in depth the state-of-the-art (SOTA) ML and DL techniques that have been proposed, underscoring their implications for HEP research. The discussion covers specific applications in substantial detail, including jet tagging, jet tracking, particle classification, and more. The survey concludes with an analysis of the present status of DL-based HEP research, including inherent challenges and prospective avenues for future research.
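
Jet images are commonly built by pixelating a jet's constituents on an (η, φ) grid, with pixel intensities given by transverse momentum (pT). The sketch below shows one simple version of that preprocessing (centering on the pT-weighted centroid, pixelation, normalising by total pT); the 25×25 grid, the ±1.0 window, and all names are assumptions for illustration, not choices taken from the survey.

```python
import math
from typing import List, Sequence, Tuple

Constituent = Tuple[float, float, float]  # (pt, eta, phi)


def wrap_phi(dphi: float) -> float:
    """Wrap an azimuthal difference into [-pi, pi)."""
    return (dphi + math.pi) % (2.0 * math.pi) - math.pi


def jet_image(constituents: Sequence[Constituent], n_pixels: int = 25,
              half_width: float = 1.0) -> List[List[float]]:
    """Return an n_pixels x n_pixels grid of pT fractions, indexed [phi][eta]."""
    total_pt = sum(c[0] for c in constituents)
    _, lead_eta, lead_phi = max(constituents)  # highest-pT constituent as reference
    # Offsets from the leading constituent, with phi wrapped around 2*pi.
    offsets = [(pt, eta - lead_eta, wrap_phi(phi - lead_phi)) for pt, eta, phi in constituents]
    # pT-weighted centroid, used to centre the image.
    eta_c = sum(pt * de for pt, de, _ in offsets) / total_pt
    phi_c = sum(pt * dp for pt, _, dp in offsets) / total_pt
    pixel = 2.0 * half_width / n_pixels
    image = [[0.0] * n_pixels for _ in range(n_pixels)]
    for pt, de, dp in offsets:
        i = math.floor((de - eta_c + half_width) / pixel)  # eta column
        j = math.floor((dp - phi_c + half_width) / pixel)  # phi row
        if 0 <= i < n_pixels and 0 <= j < n_pixels:
            image[j][i] += pt / total_pt  # constituents outside the window are dropped
    return image


if __name__ == "__main__":
    # Hypothetical two-constituent jet straddling the phi = +/-pi boundary.
    jet = [(10.0, 0.0, math.pi - 0.05), (10.0, 0.0, -math.pi + 0.05)]
    img = jet_image(jet)
    print(sum(sum(row) for row in img))
```

Wrapping φ matters because a jet near φ = ±π would otherwise be split across opposite edges of the image.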
