Automatic Speech Recognition with BERT and CTC Transformers: A Review

Paper and Code

Oct 12, 2024

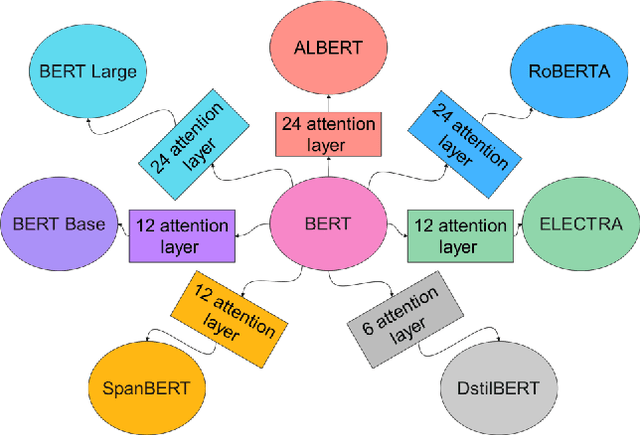

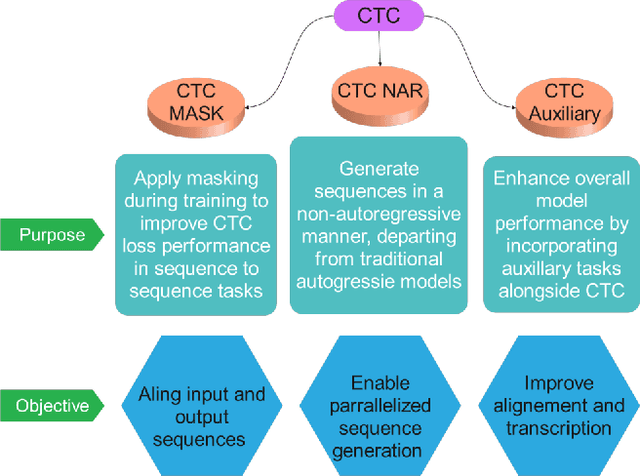

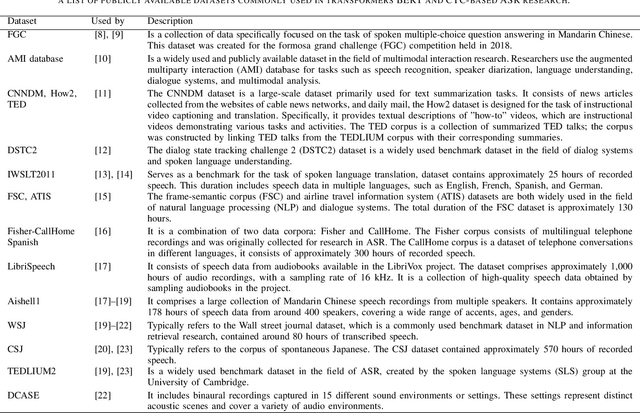

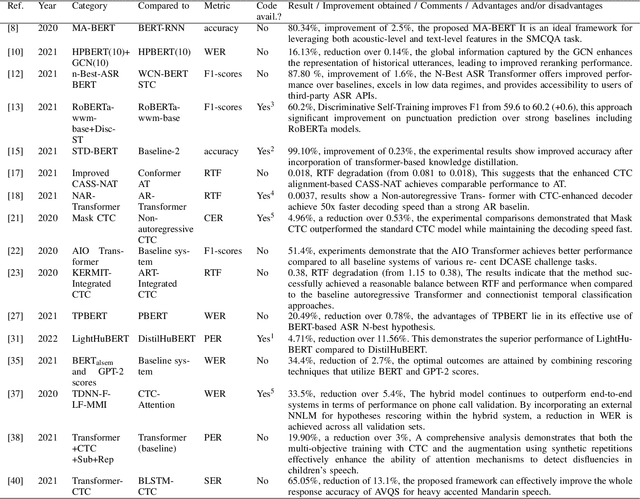

This review paper provides a comprehensive analysis of recent advances in automatic speech recognition (ASR) with bidirectional encoder representations from transformers BERT and connectionist temporal classification (CTC) transformers. The paper first introduces the fundamental concepts of ASR and discusses the challenges associated with it. It then explains the architecture of BERT and CTC transformers and their potential applications in ASR. The paper reviews several studies that have used these models for speech recognition tasks and discusses the results obtained. Additionally, the paper highlights the limitations of these models and outlines potential areas for further research. All in all, this review provides valuable insights for researchers and practitioners who are interested in ASR with BERT and CTC transformers.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge