Siteng Ma

Entropy-Guided Agreement-Diversity: A Semi-Supervised Active Learning Framework for Fetal Head Segmentation in Ultrasound

Jan 24, 2026

Abstract:Fetal ultrasound (US) data is often limited due to privacy and regulatory restrictions, posing challenges for training deep learning (DL) models. While semi-supervised learning (SSL) is commonly used for fetal US image analysis, existing SSL methods typically rely on random limited selection, which can lead to suboptimal model performance by overfitting to homogeneous labeled data. To address this, we propose a two-stage Active Learning (AL) sampler, Entropy-Guided Agreement-Diversity (EGAD), for fetal head segmentation. Our method first selects the most uncertain samples using predictive entropy, and then refines the final selection using the agreement-diversity score combining cosine similarity and mutual information. Additionally, our SSL framework employs a consistency learning strategy with feature downsampling to further enhance segmentation performance. In experiments, SSL-EGAD achieves an average Dice score of 94.57% and 96.32% on two public datasets for fetal head segmentation, using 5% and 10% labeled data for training, respectively. Our method outperforms current SSL models and showcases consistent robustness across diverse pregnancy stage data. The code is available on GitHub at https://github.com/13204942/Semi-supervised-EGAD.
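The two-stage selection described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the flat-list data layout, and the parameters `n_candidates` and `budget` are assumptions made for illustration. In particular, stage 2 below uses only a greedy cosine-similarity diversity rule as a stand-in, because the abstract does not specify how the agreement-diversity score combines cosine similarity with mutual information.

```python
import math


def predictive_entropy(probs):
    """Mean per-pixel binary entropy of a flat list of foreground probabilities."""
    eps = 1e-12
    total = 0.0
    for p in probs:
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))
    return total / len(probs)


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0


def two_stage_select(prob_maps, features, n_candidates, budget):
    """Return indices of samples to label (hypothetical helper).

    Stage 1: keep the n_candidates samples with the highest predictive entropy.
    Stage 2 (stand-in): greedily add the candidate whose maximum cosine
    similarity to the already selected samples is lowest.
    """
    entropies = [predictive_entropy(p) for p in prob_maps]
    ranked = sorted(range(len(prob_maps)), key=lambda i: entropies[i], reverse=True)
    candidates = ranked[:n_candidates]
    if not candidates or budget <= 0:
        return []

    selected = [candidates[0]]  # start from the most uncertain sample
    while len(selected) < min(budget, len(candidates)):
        best, best_score = None, None
        for i in candidates:
            if i in selected:
                continue
            score = max(cosine_similarity(features[i], features[j]) for j in selected)
            if best_score is None or score < best_score:
                best, best_score = i, score
        selected.append(best)
    return selected
```

In this sketch, stage 1 narrows the unlabeled pool to the samples the model is least sure about, and stage 2 avoids spending the labeling budget on near-duplicate candidates.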

Deep Learning Approaches for Medical Imaging Under Varying Degrees of Label Availability: A Comprehensive Survey

Apr 15, 2025

Abstract:Deep learning has achieved significant breakthroughs in medical imaging, but these advancements are often dependent on large, well-annotated datasets. However, obtaining such datasets poses a significant challenge, as it requires time-consuming and labor-intensive annotations from medical experts. Consequently, there is growing interest in learning paradigms such as incomplete, inexact, and absent supervision, which are designed to operate under limited, inexact, or missing labels. This survey categorizes and reviews the evolving research in these areas, analyzing around 600 notable contributions since 2018. It covers tasks such as image classification, segmentation, and detection across various medical application areas, including but not limited to brain, chest, and cardiac imaging. We attempt to establish the relationships among existing research studies in related areas. We provide formal definitions of different learning paradigms and offer a comprehensive summary and interpretation of various learning mechanisms and strategies, aiding readers in better understanding the current research landscape and ideas. We also discuss potential future research challenges.

Masked Angle-Aware Autoencoder for Remote Sensing Images

Aug 04, 2024

Abstract:To overcome the inherent domain gap between remote sensing (RS) images and natural images, some self-supervised representation learning methods have made promising progress. However, they have overlooked the diverse angles present in RS objects. This paper proposes the Masked Angle-Aware Autoencoder (MA3E) to perceive and learn angles during pre-training. We design a *scaling center crop* operation to create a rotated crop with random orientation on each original image, introducing explicit angle variation. MA3E takes this composite image as input while reconstructing the original image, aiming to effectively learn rotation-invariant representations by restoring the angle variation introduced on the rotated crop. To avoid biases caused by directly reconstructing the rotated crop, we propose an Optimal Transport (OT) loss that automatically assigns similar original image patches to each rotated crop patch for reconstruction. MA3E demonstrates more competitive performance than existing pre-training methods on seven different RS image datasets in three downstream tasks.

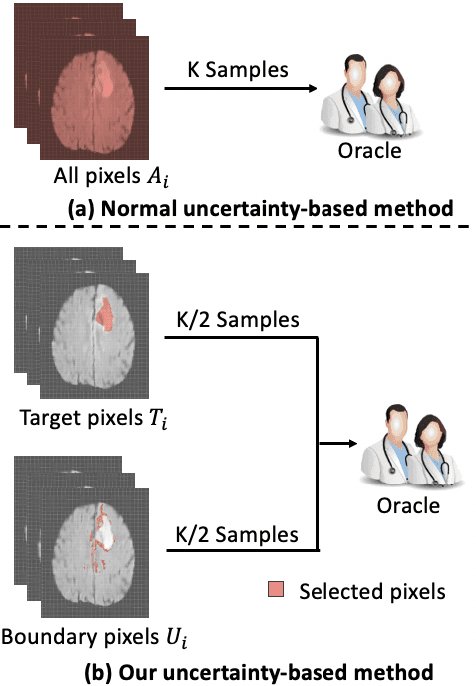

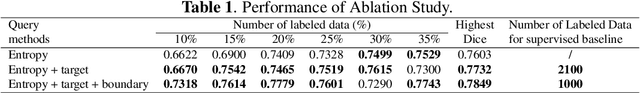

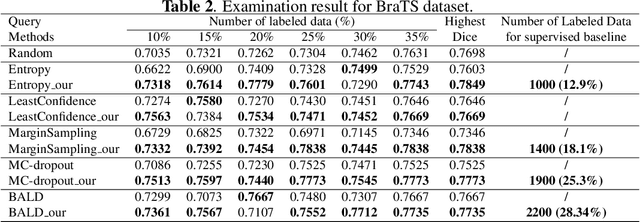

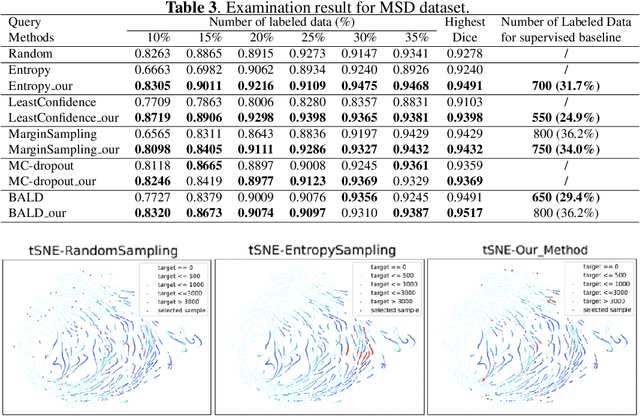

Breaking the Barrier: Selective Uncertainty-based Active Learning for Medical Image Segmentation

Jan 29, 2024

Abstract:Active learning (AL) has found wide applications in medical image segmentation, aiming to alleviate the annotation workload and enhance performance. Conventional uncertainty-based AL methods, such as entropy-based and Bayesian approaches, often rely on an aggregate of all pixel-level metrics. However, in imbalanced settings, these methods tend to neglect the significance of target regions, e.g., lesions and tumors. Moreover, uncertainty-based selection introduces redundancy. These factors lead to unsatisfactory performance, and in many cases these methods even underperform random sampling. To solve this problem, we introduce a novel approach called Selective Uncertainty-based AL, which avoids the conventional practice of summing up the metrics of all pixels. Through a filtering process, our strategy prioritizes pixels within target areas and those near decision boundaries. This resolves the aforementioned disregard for target areas and redundancy. Our method showed substantial improvements across five different uncertainty-based methods and two distinct datasets, utilizing fewer labeled data to reach the supervised baseline and consistently achieving the highest overall performance. Our code is available at https://github.com/HelenMa9998/Selective_Uncertainty_AL.
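The contrast between aggregate and selective uncertainty can be made concrete with a small sketch. This is an illustration under stated assumptions, not the authors' code: the filtering rule (keep pixels predicted as target, `p >= 0.5`, or lying within a hypothetical `margin` of the 0.5 decision boundary) and all function names are invented here, and entropy is used as the example uncertainty metric.

```python
import math


def binary_entropy(p):
    """Entropy of a single foreground probability, clamped to avoid log(0)."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def aggregate_uncertainty(probs):
    """Conventional score: sum the entropy of every pixel in the image."""
    return sum(binary_entropy(p) for p in probs)


def selective_uncertainty(probs, margin=0.2):
    """Filtered score: sum entropy only over pixels predicted as target
    (p >= 0.5) or within `margin` of the 0.5 decision boundary.
    Both the rule and the default margin are assumptions for illustration."""
    kept = [p for p in probs if p >= 0.5 or abs(p - 0.5) < margin]
    return sum(binary_entropy(p) for p in kept)


# Image A: 100 background pixels that are mildly uncertain, no target region.
image_a = [0.1] * 100
# Image B: 10 pixels on the decision boundary plus 90 confident background pixels.
image_b = [0.5] * 10 + [0.01] * 90

# The aggregate score prefers A because many background pixels add up,
# while the selective score prefers B, which has ambiguous boundary pixels.
prefers_a = aggregate_uncertainty(image_a) > aggregate_uncertainty(image_b)
prefers_b = selective_uncertainty(image_b) > selective_uncertainty(image_a)
```

Under these toy inputs, the background-dominated image wins the aggregate ranking even though it contains no pixels near the decision boundary, which is the imbalance problem the abstract describes.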

Adaptive Nonlinear Latent Transformation for Conditional Face Editing

Jul 15, 2023

Abstract:Recent works on face editing usually manipulate the latent space of StyleGAN via linear semantic directions. However, they usually suffer from the entanglement of facial attributes, need to tune the optimal editing strength, and are limited to binary attributes with strong supervision signals. This paper proposes a novel adaptive nonlinear latent transformation for disentangled and conditional face editing, termed AdaTrans. Specifically, AdaTrans divides the manipulation process into several finer steps; i.e., the direction and size at each step are conditioned on both the facial attributes and the latent codes. In this way, AdaTrans describes an adaptive nonlinear transformation trajectory to manipulate the faces into target attributes while keeping other attributes unchanged. Then, AdaTrans leverages a predefined density model to constrain the learned trajectory in the distribution of latent codes by maximizing the likelihood of the transformed latent code. Moreover, we also propose a disentangled learning strategy under a mutual information framework to eliminate the entanglement among attributes, which can further relax the need for labeled data. Consequently, AdaTrans enables controllable face editing with the advantages of disentanglement, flexibility with non-binary attributes, and high fidelity. Extensive experimental results on various facial attributes demonstrate the qualitative and quantitative effectiveness of the proposed AdaTrans over existing state-of-the-art methods, especially in the most challenging scenarios with a large age gap and few labeled examples. The source code is available at https://github.com/Hzzone/AdaTrans.
