Simon Guist

Identifying Policy Gradient Subspaces

Jan 15, 2024

Abstract:Policy gradient methods hold great potential for solving complex continuous control tasks. Still, their training efficiency can be improved by exploiting structure within the optimization problem. Recent work indicates that supervised learning can be accelerated by leveraging the fact that gradients lie in a low-dimensional and slowly-changing subspace. In this paper, we conduct a thorough evaluation of this phenomenon for two popular deep policy gradient methods on various simulated benchmark tasks. Our results demonstrate the existence of such gradient subspaces despite the continuously changing data distribution inherent to reinforcement learning. These findings reveal promising directions for future work on more efficient reinforcement learning, e.g., through improving parameter-space exploration or enabling second-order optimization.

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Oct 17, 2023

Abstract:Large, high-capacity models trained on diverse datasets have shown remarkable success in efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train a generalist X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms. More details can be found on the project website https://robotics-transformer-x.github.io.

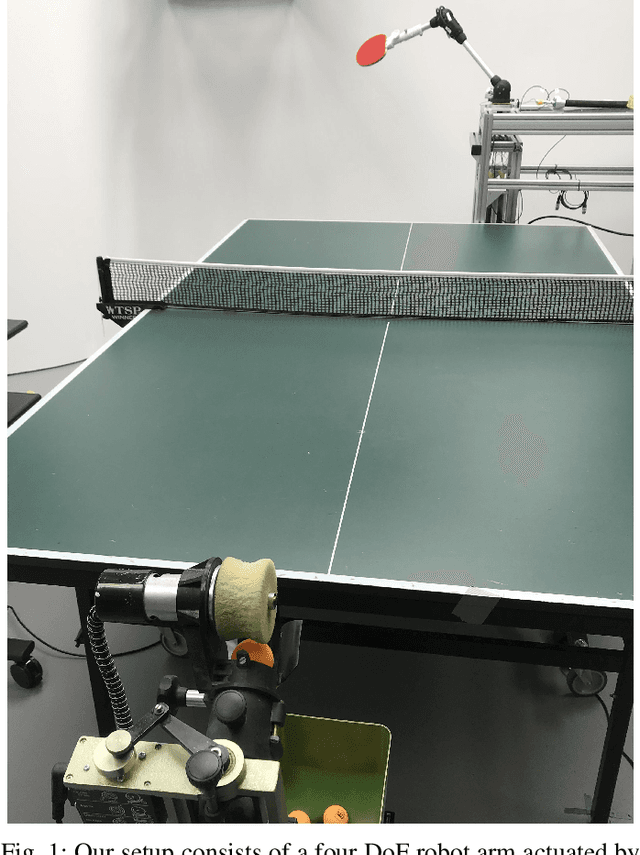

A Robust Open-source Tendon-driven Robot Arm for Learning Control of Dynamic Motions

Jul 10, 2023

Abstract:A long-standing goal of robotics research is to operate robots safely while achieving high performance, which often involves fast motions. Traditional motor-driven systems frequently struggle to balance these competing demands. Addressing this trade-off is crucial for advancing fields such as manufacturing and healthcare, where seamless collaboration between robots and humans is essential. We introduce a four degree-of-freedom (DoF) tendon-driven robot arm, powered by pneumatic artificial muscles (PAMs), to tackle this challenge. Our new design features low friction, passive compliance, and inherent impact resilience, enabling rapid, precise, high-force, and safe interactions during dynamic tasks. In addition to fostering safer human-robot collaboration, the inherent safety properties are particularly beneficial for reinforcement learning, where the robot's ability to explore dynamic motions without causing self-damage is crucial. We validate our robotic arm through various experiments, including long-term dynamic motions, impact resilience tests, and assessments of its ease of control. On a challenging dynamic table tennis task, we further demonstrate our robot's capabilities in rapid and precise movements. By showcasing our new design's potential, we aim to inspire further research on robotic systems that balance high performance and safety in diverse tasks. Our open-source hardware design, software, and a large dataset of diverse robot motions can be found at https://webdav.tuebingen.mpg.de/pamy2/.

Synchronizing Machine Learning Algorithms, Realtime Robotic Control and Simulated Environment with o80

Jun 16, 2023

Abstract:Robotic applications require the integration of various modalities, encompassing perception, control of real robots and possibly the control of simulated environments. While the state-of-the-art robotic software solutions such as ROS 2 provide most of the required features, flexible synchronization between algorithms, data streams and control loops can be tedious. o80 is a versatile C++ framework for robotics which provides a shared memory model and a command framework for real-time critical systems. It enables expert users to set up complex robotic systems and generate Python bindings for scientists. o80's unique feature is its flexible synchronization between processes, including the traditional blocking commands and the novel "bursting mode", which allows user code to control the execution of the lower process control loop. This makes it particularly useful for setups that mix real and simulated environments.

Hindsight States: Blending Sim and Real Task Elements for Efficient Reinforcement Learning

Mar 09, 2023

Abstract:Reinforcement learning has shown great potential in solving complex tasks when large amounts of data can be generated with little effort. In robotics, one approach to generate training data builds on simulations based on dynamics models derived from first principles. However, for tasks that, for instance, involve complex soft robots, devising such models is substantially more challenging. Being able to train effectively in increasingly complicated scenarios with reinforcement learning makes it possible to take advantage of complex systems such as soft robots. Here, we leverage the imbalance in complexity of the dynamics to learn more sample-efficiently. We (i) abstract the task into distinct components, (ii) off-load the simple dynamics parts into the simulation, and (iii) multiply these virtual parts to generate more data in hindsight. Our new method, Hindsight States (HiS), uses this data and selects the most useful transitions for training. It can be used with an arbitrary off-policy algorithm. We validate our method on several challenging simulated tasks and demonstrate that it improves learning both alone and when combined with an existing hindsight algorithm, Hindsight Experience Replay (HER). Finally, we evaluate HiS on a physical system and show that it boosts performance on a complex table tennis task with a muscular robot. Videos and code of the experiments can be found on webdav.tuebingen.mpg.de/his/.

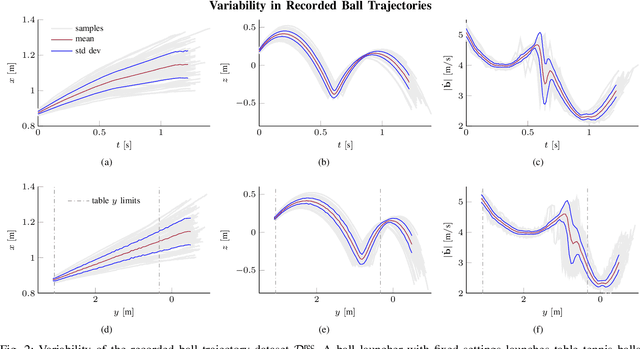

AIMY: An Open-source Table Tennis Ball Launcher for Versatile and High-fidelity Trajectory Generation

Oct 13, 2022

Abstract:To approach the level of advanced human players in table tennis with robots, generating varied ball trajectories in a reproducible and controlled manner is essential. Current ball launchers used in robot table tennis either do not provide an interface for automatic control or are limited in their capabilities to adapt speed, direction, and spin of the ball. For these reasons, we present AIMY, a three-wheeled open-hardware and open-source table tennis ball launcher, which can generate ball speeds and spins of up to 15.44 m/s and 192/s, respectively, which are comparable to advanced human players. The wheel speeds, launch orientation and time can be fully controlled via an open Ethernet or Wi-Fi interface. We provide a detailed overview of the core design features, as well as open source the software to encourage distribution and duplication within and beyond the robot table tennis research community. We also extensively evaluate the ball launcher's accuracy for different system settings and learn to launch a ball to desired locations. With this ball launcher, we enable long-duration training of robot table tennis approaches where the complexity of the ball trajectory can be automatically adjusted, enabling large-scale real-world online reinforcement learning for table tennis robots.

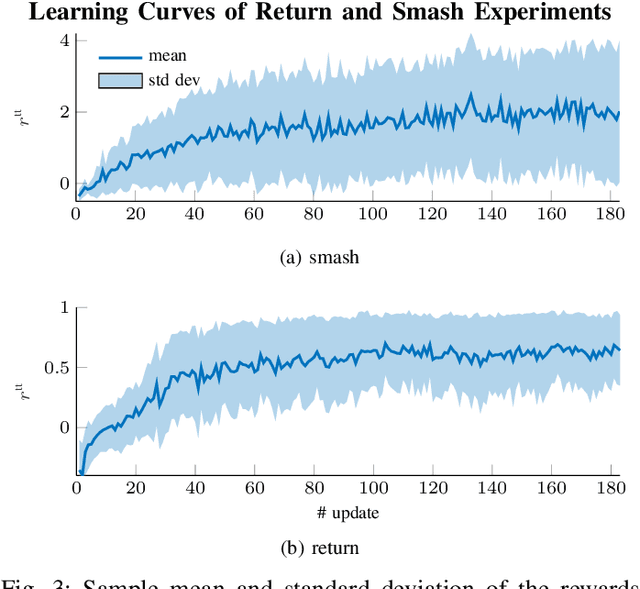

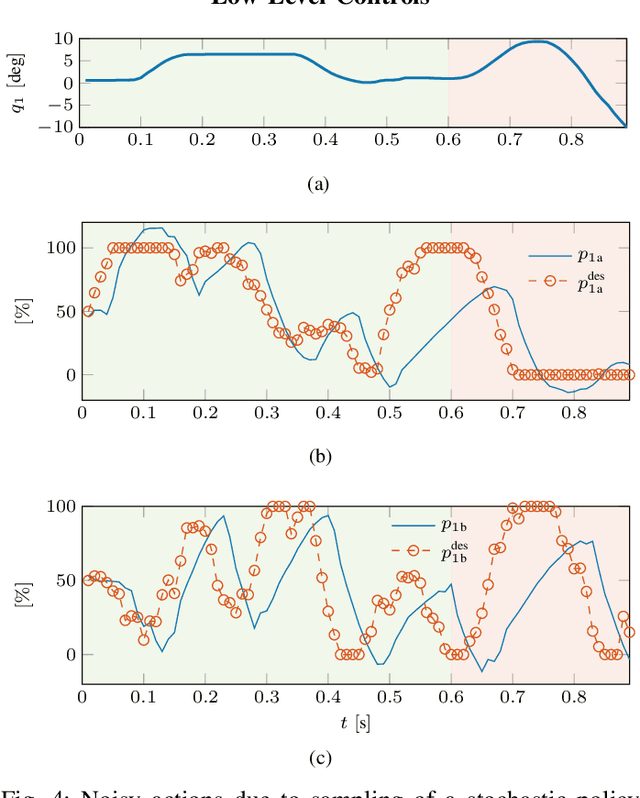

Learning to Play Table Tennis From Scratch using Muscular Robots

Jun 10, 2020

Abstract:Dynamic tasks like table tennis are relatively easy to learn for humans but pose significant challenges to robots. Such tasks require accurate control of fast movements and precise timing in the presence of imprecise state estimation of the flying ball and the robot. Reinforcement Learning (RL) has shown promise in learning complex control tasks from data. However, applying step-based RL to dynamic tasks on real systems is safety-critical, as RL requires exploring and failing safely for millions of time steps in high-speed regimes. In this paper, we demonstrate that safe learning of table tennis using model-free Reinforcement Learning can be achieved by using robot arms driven by pneumatic artificial muscles (PAMs). Softness and back-drivability properties of PAMs prevent the system from leaving the safe region of its state space. In this manner, RL empowers the robot to return and smash real balls with 5 m/s and 12 m/s on average to a desired landing point. Our setup allows the agent to learn this safety-critical task (i) without safety constraints in the algorithm, (ii) while maximizing the speed of returned balls directly in the reward function, (iii) using a stochastic policy that acts directly on the low-level controls of the real system, (iv) training for thousands of trials, and (v) from scratch without any prior knowledge. Additionally, we present HYSR, a practical hybrid sim and real training procedure that avoids playing real balls during training by randomly replaying recorded ball trajectories in simulation and applying actions to the real robot. This work is the first to (a) learn a safety-critical dynamic task in a fail-safe manner using anthropomorphic robot arms, (b) learn a precision-demanding problem with a PAM-driven system despite the control challenges, and (c) train robots to play table tennis without real balls. Videos and datasets are available at muscularTT.embodied.ml.
