Shuo He

Defending Multimodal Backdoored Models by Repulsive Visual Prompt Tuning

Dec 29, 2024

Abstract:Multimodal contrastive learning models (e.g., CLIP) can learn high-quality representations from large-scale image-text datasets, yet they exhibit significant vulnerabilities to backdoor attacks, raising serious safety concerns. In this paper, we disclose that CLIP's vulnerabilities primarily stem from its excessive encoding of class-irrelevant features, which can compromise the model's visual feature resistivity to input perturbations, making it more susceptible to capturing the trigger patterns inserted by backdoor attacks. Inspired by this finding, we propose Repulsive Visual Prompt Tuning (RVPT), a novel defense approach that employs specially designed deep visual prompt tuning and feature-repelling loss to eliminate excessive class-irrelevant features while simultaneously optimizing cross-entropy loss to maintain clean accuracy. Unlike existing multimodal backdoor defense methods that typically require the availability of poisoned data or involve fine-tuning the entire model, RVPT leverages few-shot downstream clean samples and only tunes a small number of parameters. Empirical results demonstrate that RVPT tunes only 0.27\% of the parameters relative to CLIP, yet it significantly outperforms state-of-the-art baselines, reducing the attack success rate from 67.53\% to 2.76\% against SoTA attacks and effectively generalizing its defensive capabilities across multiple datasets.

BDetCLIP: Multimodal Prompting Contrastive Test-Time Backdoor Detection

May 24, 2024Abstract:Multimodal contrastive learning methods (e.g., CLIP) have shown impressive zero-shot classification performance due to their strong ability to joint representation learning for visual and textual modalities. However, recent research revealed that multimodal contrastive learning on poisoned pre-training data with a small proportion of maliciously backdoored data can induce backdoored CLIP that could be attacked by inserted triggers in downstream tasks with a high success rate. To defend against backdoor attacks on CLIP, existing defense methods focus on either the pre-training stage or the fine-tuning stage, which would unfortunately cause high computational costs due to numerous parameter updates. In this paper, we provide the first attempt at a computationally efficient backdoor detection method to defend against backdoored CLIP in the inference stage. We empirically find that the visual representations of backdoored images are insensitive to both benign and malignant changes in class description texts. Motivated by this observation, we propose BDetCLIP, a novel test-time backdoor detection method based on contrastive prompting. Specifically, we first prompt the language model (e.g., GPT-4) to produce class-related description texts (benign) and class-perturbed random texts (malignant) by specially designed instructions. Then, the distribution difference in cosine similarity between images and the two types of class description texts can be used as the criterion to detect backdoor samples. Extensive experiments validate that our proposed BDetCLIP is superior to state-of-the-art backdoor detection methods, in terms of both effectiveness and efficiency.

Partial-label Learning with Mixed Closed-set and Open-set Out-of-candidate Examples

Jul 02, 2023

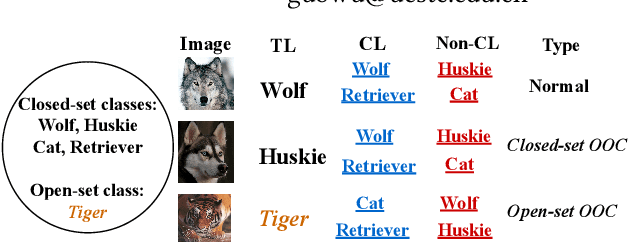

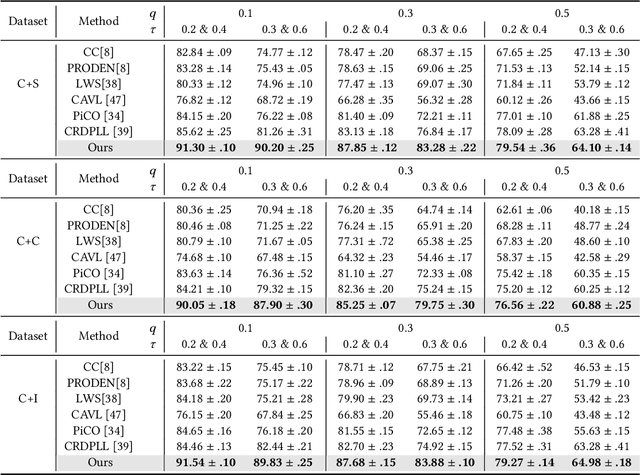

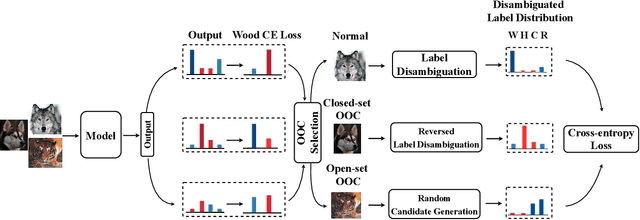

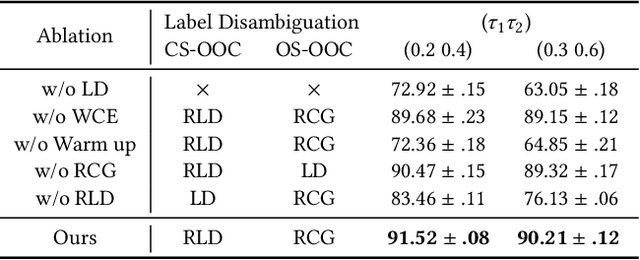

Abstract:Partial-label learning (PLL) relies on a key assumption that the true label of each training example must be in the candidate label set. This restrictive assumption may be violated in complex real-world scenarios, and thus the true label of some collected examples could be unexpectedly outside the assigned candidate label set. In this paper, we term the examples whose true label is outside the candidate label set OOC (out-of-candidate) examples, and pioneer a new PLL study to learn with OOC examples. We consider two types of OOC examples in reality, i.e., the closed-set/open-set OOC examples whose true label is inside/outside the known label space. To solve this new PLL problem, we first calculate the wooden cross-entropy loss from candidate and non-candidate labels respectively, and dynamically differentiate the two types of OOC examples based on specially designed criteria. Then, for closed-set OOC examples, we conduct reversed label disambiguation in the non-candidate label set; for open-set OOC examples, we leverage them for training by utilizing an effective regularization strategy that dynamically assigns random candidate labels from the candidate label set. In this way, the two types of OOC examples can be differentiated and further leveraged for model training. Extensive experiments demonstrate that our proposed method outperforms state-of-the-art PLL methods.

A Generalized Unbiased Risk Estimator for Learning with Augmented Classes

Jun 12, 2023

Abstract:In contrast to the standard learning paradigm where all classes can be observed in training data, learning with augmented classes (LAC) tackles the problem where augmented classes unobserved in the training data may emerge in the test phase. Previous research showed that given unlabeled data, an unbiased risk estimator (URE) can be derived, which can be minimized for LAC with theoretical guarantees. However, this URE is only restricted to the specific type of one-versus-rest loss functions for multi-class classification, making it not flexible enough when the loss needs to be changed with the dataset in practice. In this paper, we propose a generalized URE that can be equipped with arbitrary loss functions while maintaining the theoretical guarantees, given unlabeled data for LAC. To alleviate the issue of negative empirical risk commonly encountered by previous studies, we further propose a novel risk-penalty regularization term. Experiments demonstrate the effectiveness of our proposed method.

Incorporating Multiple Cluster Centers for Multi-Label Learning

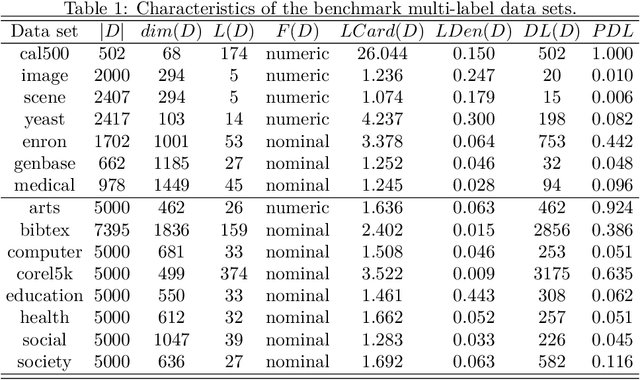

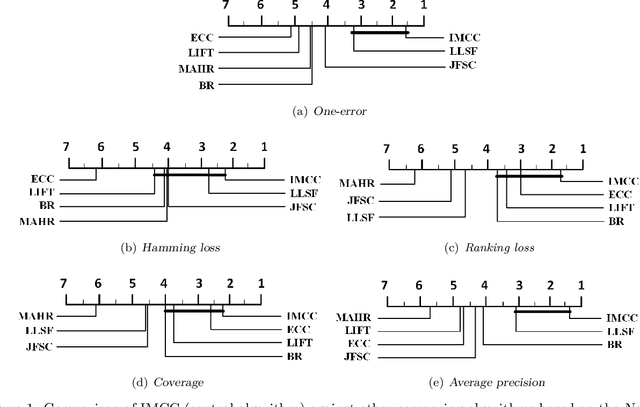

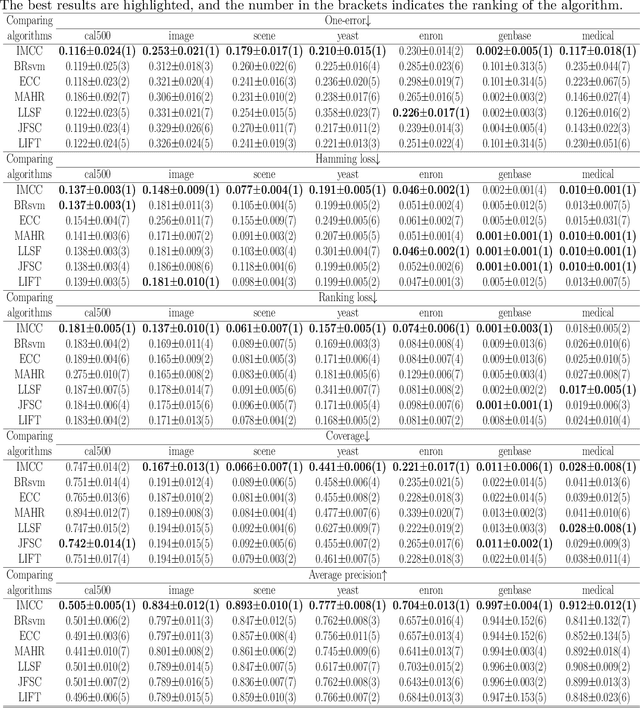

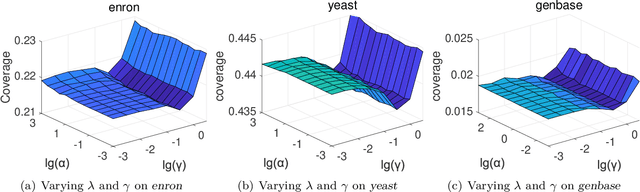

Apr 17, 2020

Abstract:Multi-label learning deals with the problem that each instance is associated with multiple labels simultaneously. Most of the existing approaches aim to improve the performance of multi-label learning by exploiting label correlations. Although the data augmentation technique is widely used in many machine learning tasks, it is still unclear whether data augmentation is helpful to multi-label learning. In this paper, (to the best of our knowledge) we provide the first attempt to leverage the data augmentation technique to improve the performance of multi-label learning. Specifically, we first propose a novel data augmentation approach that performs clustering on the real examples and treats the cluster centers as virtual examples, and these virtual examples naturally embody the local label correlations and label importances. Then, motivated by the cluster assumption that examples in the same cluster should have the same label, we propose a novel regularization term to bridge the gap between the real examples and virtual examples, which can promote the local smoothness of the learning function. Extensive experimental results on a number of real-world multi-label data sets clearly demonstrate that our proposed approach outperforms the state-of-the-art counterparts.

Collaboration based Multi-Label Learning

Feb 08, 2019

Abstract:It is well-known that exploiting label correlations is crucially important to multi-label learning. Most of the existing approaches take label correlations as prior knowledge, which may not correctly characterize the real relationships among labels. Besides, label correlations are normally used to regularize the hypothesis space, while the final predictions are not explicitly correlated. In this paper, we suggest that for each individual label, the final prediction involves the collaboration between its own prediction and the predictions of other labels. Based on this assumption, we first propose a novel method to learn the label correlations via sparse reconstruction in the label space. Then, by seamlessly integrating the learned label correlations into model training, we propose a novel multi-label learning approach that aims to explicitly account for the correlated predictions of labels while training the desired model simultaneously. Extensive experimental results show that our approach outperforms the state-of-the-art counterparts.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge