Shunsuke Aoki

Raj

CoVLA: Comprehensive Vision-Language-Action Dataset for Autonomous Driving

Aug 19, 2024

Abstract: Autonomous driving, particularly navigating complex and unanticipated scenarios, demands sophisticated reasoning and planning capabilities. While Multi-modal Large Language Models (MLLMs) offer a promising avenue for this, their use has been largely confined to understanding complex environmental contexts or generating high-level driving commands, with few studies extending their application to end-to-end path planning. A major research bottleneck is the lack of large-scale annotated datasets encompassing vision, language, and action. To address this issue, we propose the CoVLA (Comprehensive Vision-Language-Action) Dataset, an extensive dataset comprising real-world driving videos spanning more than 80 hours. This dataset leverages a novel, scalable approach based on automated data processing and a caption generation pipeline to generate accurate driving trajectories paired with detailed natural language descriptions of driving environments and maneuvers. This approach utilizes raw in-vehicle sensor data, allowing it to surpass existing datasets in scale and annotation richness. Using CoVLA, we investigate the driving capabilities of MLLMs that can handle vision, language, and action in a variety of driving scenarios. Our results illustrate the strong proficiency of our model in generating coherent language and action outputs, emphasizing the potential of Vision-Language-Action (VLA) models in the field of autonomous driving. This dataset establishes a framework for robust, interpretable, and data-driven autonomous driving systems by providing a comprehensive platform for training and evaluating VLA models, contributing to safer and more reliable self-driving vehicles. The dataset is released for academic purposes.

Area Modeling using Stay Information for Large-Scale Users and Analysis for Influence of COVID-19

Jan 19, 2024

Abstract: Understanding how people use areas in a city can provide valuable information in a wide range of fields, from marketing to urban planning. Area usage is subject to change over time due to various events, including seasonal shifts and pandemics. Before the spread of smartphones, this data was collected through questionnaire surveys. However, this is not a sustainable approach in terms of time to results and cost. There are many existing studies on area modeling, which characterize an area with some kind of information, using Point of Interest (POI) or inter-area movement data. However, since POI data is statically tied to space, and inter-area movement data ignores the behavior of people within an area, existing methods are not sufficient for capturing changes in area usage. In this paper, we propose a novel area modeling method named Area2Vec, inspired by Word2Vec, which models areas based on people's location data. This method is based on the discovery that an area can be characterized by its usage, using people's stay information in the area. It is also novel in that it can reflect people's dynamically changing behavior in an area in the modeling results. We validated Area2Vec by performing a functional classification of areas in a district of Japan. The results show that Area2Vec is usable for general area analysis. We also investigated area usage changes due to COVID-19 in two districts in Japan. We found that COVID-19 made people refrain from unnecessary outings, such as visiting entertainment areas.

Evaluation of Large Language Models for Decision Making in Autonomous Driving

Dec 11, 2023

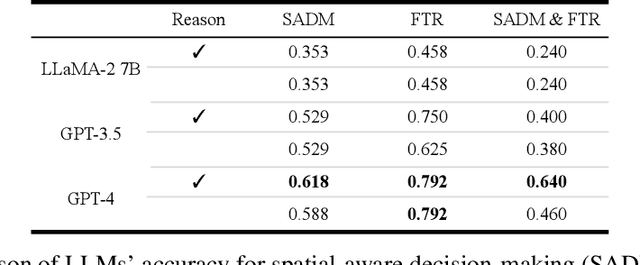

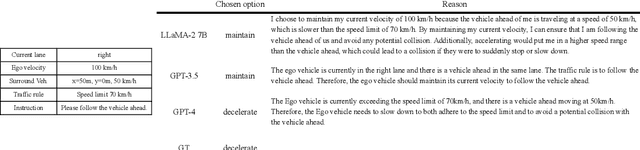

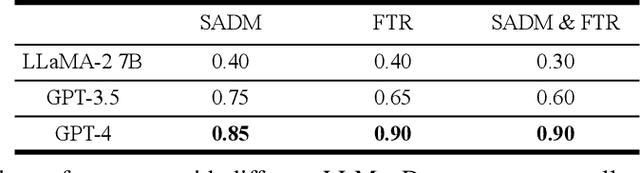

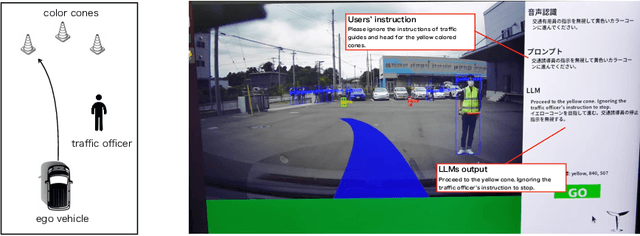

Abstract: Various methods have been proposed for utilizing Large Language Models (LLMs) in autonomous driving. One strategy of using LLMs for autonomous driving involves inputting surrounding objects as text prompts to the LLMs, along with their coordinate and velocity information, and then outputting the subsequent movements of the vehicle. When using LLMs for such purposes, capabilities such as spatial recognition and planning are essential. In particular, two foundational capabilities are required: (1) spatial-aware decision making, which is the ability to recognize space from coordinate information and make decisions to avoid collisions, and (2) the ability to adhere to traffic rules. However, quantitative research has not been conducted on how accurately different types of LLMs can handle these problems. In this study, we quantitatively evaluated these two abilities of LLMs in the context of autonomous driving. Furthermore, to conduct a Proof of Concept (POC) for the feasibility of implementing these abilities in actual vehicles, we developed a system that uses LLMs to drive a vehicle.
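The prompting strategy described in this abstract, where surrounding objects are passed to an LLM as text with coordinates and velocities, can be illustrated with a minimal sketch. Everything here is an assumption for illustration only: the `TrackedObject` fields, the ego-frame convention, the maneuver list, and the prompt wording are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrackedObject:
    """Hypothetical record for one surrounding object (not the paper's schema)."""
    label: str
    x_m: float     # longitudinal position in the ego frame, metres (assumed)
    y_m: float     # lateral position in the ego frame, metres (assumed)
    vx_mps: float  # longitudinal velocity, m/s
    vy_mps: float  # lateral velocity, m/s


def build_prompt(objects: List[TrackedObject], ego_speed_mps: float) -> str:
    """Serialize the scene into a plain-text prompt for an LLM."""
    lines = [
        f"Ego vehicle speed: {ego_speed_mps:.1f} m/s.",
        "Surrounding objects (ego frame, x forward, y left):",
    ]
    for i, obj in enumerate(objects, start=1):
        lines.append(
            f"{i}. {obj.label} at ({obj.x_m:.1f}, {obj.y_m:.1f}) m, "
            f"velocity ({obj.vx_mps:.1f}, {obj.vy_mps:.1f}) m/s"
        )
    # The maneuver vocabulary below is an illustrative assumption.
    lines.append(
        "Choose the next maneuver: accelerate, keep speed, decelerate, "
        "change lane left, or change lane right."
    )
    return "\n".join(lines)


scene = [
    TrackedObject("car", 12.0, 0.0, -1.5, 0.0),
    TrackedObject("pedestrian", 20.0, 3.5, 0.0, -1.0),
]
prompt = build_prompt(scene, ego_speed_mps=8.0)
```

The resulting string would then be sent to whichever LLM is under evaluation; the model call itself is deliberately left out because the abstract does not specify an interface.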

SuperDriverAI: Towards Design and Implementation for End-to-End Learning-based Autonomous Driving

May 14, 2023

Abstract: Fully autonomous driving has been widely studied and is becoming increasingly feasible. However, such autonomous driving has yet to be achieved on public roads, because of various uncertainties due to surrounding human drivers and pedestrians. In this paper, we present an end-to-end learning-based autonomous driving system named SuperDriver AI, where Deep Neural Networks (DNNs) learn the driving actions and policies from experienced human drivers and determine the driving maneuvers to take while guaranteeing road safety. In addition, to improve robustness and interpretability, we present a slit model and a visual attention module. We build a data-collection system and emulator with real-world hardware, and we also test the SuperDriver AI system with real-world driving scenarios. Finally, we have collected 150 runs for one driving scenario in Tokyo, Japan, and have demonstrated SuperDriver AI with a real-world vehicle.

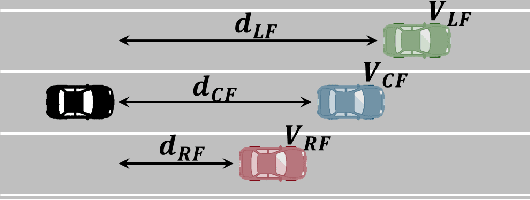

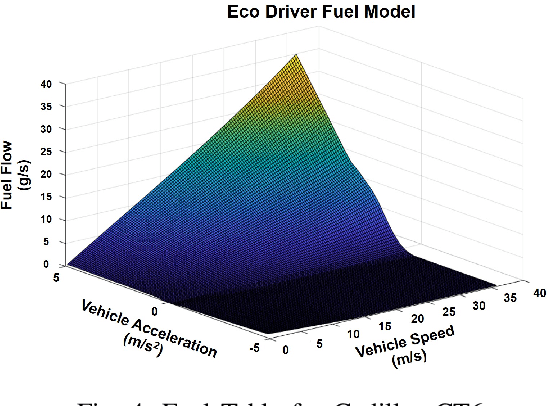

MultiCruise: Eco-Lane Selection Strategy with Eco-Cruise Control for Connected and Automated Vehicles

Apr 24, 2021

Abstract: Connected and Automated Vehicles (CAVs) have real-time information from the surrounding environment by using local on-board sensors, V2X (Vehicle-to-Everything) communications, pre-loaded vehicle-specific lookup tables, and map databases. CAVs are capable of improving energy efficiency by incorporating this information. In particular, Eco-Cruise and Eco-Lane Selection on highways and/or motorways have immense potential to save energy, because there are generally fewer traffic controllers and the vehicles generally keep moving. In this paper, we present a cooperative and energy-efficient lane-selection strategy named MultiCruise, where each CAV selects one among multiple candidate lanes that allows the most energy-efficient travel. MultiCruise incorporates an Eco-Cruise component to select the most energy-efficient lane. The Eco-Cruise component calculates the driving parameters and prospective energy consumption of the ego vehicle for each candidate lane, and the Eco-Lane Selection component uses these values. As a result, MultiCruise can account for multiple data sources, such as the road curvature and the surrounding vehicles' velocities and accelerations. The eco-autonomous driving strategy, MultiCruise, is designed, tested, and verified by using a co-simulation test platform that includes autonomous driving software and realistic road networks to study the performance under realistic driving conditions. Our experimental evaluations show that our eco-autonomous MultiCruise saves up to 8.5% fuel consumption.
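The two-stage structure described in this abstract, where a per-lane energy estimate feeds a lane choice, can be sketched as a simple minimum search. This is an illustration under stated assumptions, not the MultiCruise implementation: the function name `select_eco_lane`, the lane identifiers, and the energy figures are all hypothetical, and the Eco-Cruise estimate is represented only as precomputed numbers.

```python
from typing import Dict


def select_eco_lane(predicted_energy_by_lane: Dict[str, float]) -> str:
    """Return the candidate lane with the lowest predicted energy use.

    `predicted_energy_by_lane` maps a lane identifier to the prospective
    energy consumption for that lane, standing in for the values an
    Eco-Cruise-style estimator would produce (units assumed consistent).
    """
    if not predicted_energy_by_lane:
        raise ValueError("at least one candidate lane is required")
    # min() over the keys, ordered by their predicted energy value.
    return min(predicted_energy_by_lane, key=predicted_energy_by_lane.get)


# Hypothetical per-lane estimates, e.g. in megajoules over a planning horizon.
candidates = {"left": 1.92, "center": 1.78, "right": 1.85}
chosen = select_eco_lane(candidates)
```

In a fuller system the estimates would depend on inputs the abstract mentions, such as road curvature and the velocities and accelerations of surrounding vehicles; those models are outside the scope of this sketch.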

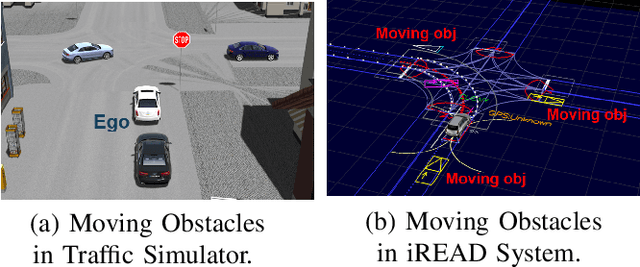

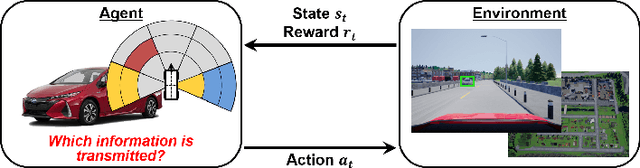

Cooperative Perception with Deep Reinforcement Learning for Connected Vehicles

Apr 23, 2020

Abstract: Sensor-based perception on vehicles is becoming prevalent and important for enhancing road safety. Autonomous driving systems use cameras, LiDAR, and radar to detect surrounding objects, while human-driven vehicles use them to assist the driver. However, environmental perception by individual vehicles has limitations in coverage and/or detection accuracy. For example, a vehicle cannot detect objects occluded by other moving/static obstacles. In this paper, we present a cooperative perception scheme with deep reinforcement learning to enhance the detection accuracy for surrounding objects. By using deep reinforcement learning to select the data to transmit, our scheme mitigates the network load in vehicular communication networks and enhances communication reliability. To design, test, and verify the cooperative perception scheme, we develop a Cooperative & Intelligent Vehicle Simulation (CIVS) Platform, which integrates three software components: traffic simulator, vehicle simulator, and object classifier. Our evaluation shows that our scheme decreases packet loss and thereby increases the detection accuracy by up to 12%, compared to the baseline protocol.

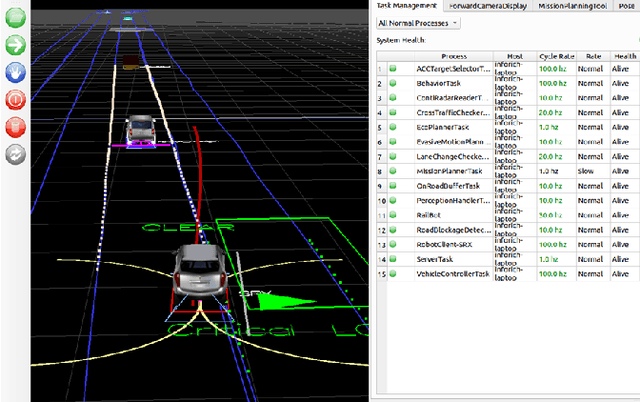

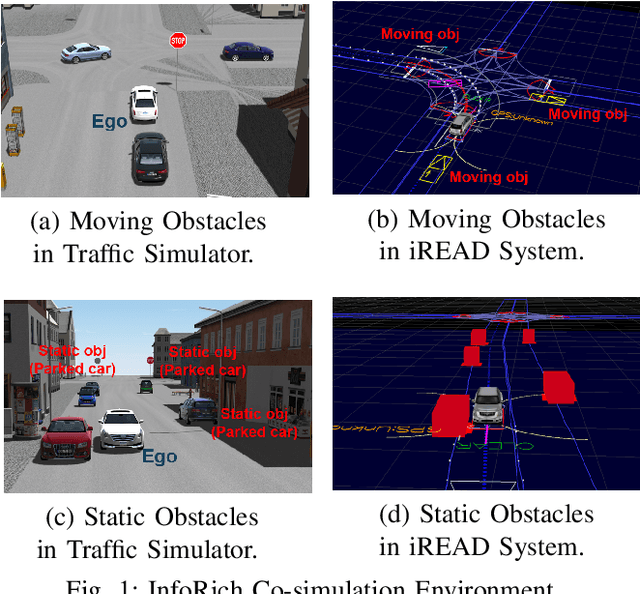

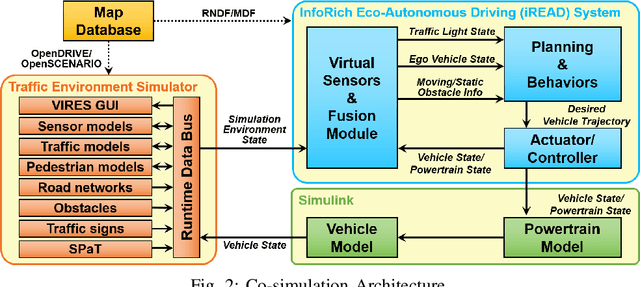

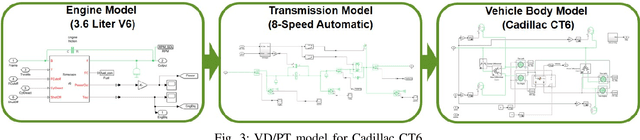

Co-simulation Platform for Developing InfoRich Energy-Efficient Connected and Automated Vehicles

Apr 16, 2020

Abstract: With advances in sensing, computing, and communication technologies, Connected and Automated Vehicles (CAVs) are becoming feasible. The advent of CAVs presents new opportunities to improve the energy efficiency of individual vehicles. However, testing and verifying energy-efficient autonomous driving systems is difficult due to safety considerations and repeatability. In this paper, we present a co-simulation platform to develop and test novel vehicle eco-autonomous driving technologies named InfoRich, which incorporates information from on-board sensors, V2X communications, and map databases. The co-simulation platform includes eco-autonomous driving software, a vehicle dynamics and powertrain (VD&PT) model, and a traffic environment simulator. We also utilize synthetic drive cycles derived from real-world driving data to test the strategies under realistic driving scenarios. To build road networks from the real-world driving data, we develop an Automated Parser and Calculator for Map/Scenario named AutoPASCAL. Overall, the simulation platform provides a realistic vehicle model, powertrain model, sensor model, traffic model, and road-network model to enable the evaluation of the energy efficiency of eco-autonomous driving.
