Rajkumar

ContextualFusion: Context-Based Multi-Sensor Fusion for 3D Object Detection in Adverse Operating Conditions

Apr 23, 2024

Abstract: The fusion of multimodal sensor data streams such as camera images and lidar point clouds plays an important role in the operation of autonomous vehicles (AVs). Robust perception across a range of adverse weather and lighting conditions is specifically required for AVs to be deployed widely. While multi-sensor fusion networks have been previously developed for perception in sunny and clear weather conditions, these methods show a significant degradation in performance under night-time and poor weather conditions. In this paper, we propose a simple yet effective technique called ContextualFusion to incorporate the domain knowledge about cameras and lidars behaving differently across lighting and weather variations into 3D object detection models. Specifically, we design a Gated Convolutional Fusion (GatedConv) approach for the fusion of sensor streams based on the operational context. To aid in our evaluation, we use the open-source simulator CARLA to create a multimodal adverse-condition dataset called AdverseOp3D, which addresses the bias of existing datasets towards daytime and good-weather conditions. Our ContextualFusion approach yields an mAP improvement of 6.2% over state-of-the-art methods on our context-balanced synthetic dataset. Finally, our method enhances state-of-the-art 3D object detection performance at night on the real-world NuScenes dataset with a significant mAP improvement of 11.7%.
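The idea of gating sensor streams by operational context can be illustrated with a minimal sketch. This is not the paper's GatedConv layer: the context encoding `[is_night, is_rainy]`, the per-channel sigmoid gates, the function name `gated_fusion`, and the hand-set weights are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_fusion(cam_feat, lidar_feat, context, w_cam, w_lidar):
    """Scale each sensor's feature map by a context-dependent gate, then sum.

    cam_feat, lidar_feat: arrays of shape (C, H, W)
    context:              array of shape (K,), hypothetical context flags
    w_cam, w_lidar:       arrays of shape (C, K), gate weights (assumed)
    """
    g_cam = sigmoid(w_cam @ context)      # per-channel gate, shape (C,)
    g_lidar = sigmoid(w_lidar @ context)  # per-channel gate, shape (C,)
    return g_cam[:, None, None] * cam_feat + g_lidar[:, None, None] * lidar_feat

rng = np.random.default_rng(0)
cam = rng.normal(size=(4, 8, 8))
lidar = rng.normal(size=(4, 8, 8))

# Hand-set weights (assumption): night strongly suppresses the camera gate.
w_cam = np.tile(np.array([-4.0, -2.0]), (4, 1))
w_lidar = np.tile(np.array([0.0, -1.0]), (4, 1))

fused_day = gated_fusion(cam, lidar, np.array([0.0, 0.0]), w_cam, w_lidar)
fused_night = gated_fusion(cam, lidar, np.array([1.0, 0.0]), w_cam, w_lidar)
```

In a trained network the gate weights would be learned rather than set by hand; the sketch only shows how a context vector can shift the balance between the two streams.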

MultiCruise: Eco-Lane Selection Strategy with Eco-Cruise Control for Connected and Automated Vehicles

Apr 24, 2021

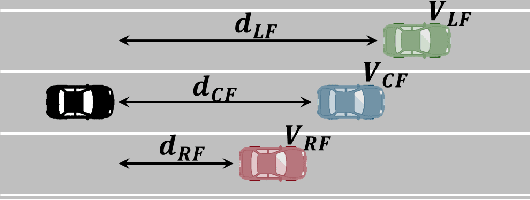

Abstract: Connected and Automated Vehicles (CAVs) obtain real-time information about the surrounding environment by using local on-board sensors, V2X (Vehicle-to-Everything) communications, pre-loaded vehicle-specific lookup tables, and a map database. CAVs can improve energy efficiency by incorporating this information. In particular, Eco-Cruise and Eco-Lane Selection on highways and/or motorways have immense potential to save energy, because there are generally fewer traffic controllers and the vehicles generally keep moving. In this paper, we present a cooperative and energy-efficient lane-selection strategy named MultiCruise, where each CAV selects, among multiple candidate lanes, the one that allows the most energy-efficient travel. MultiCruise incorporates an Eco-Cruise component to select the most energy-efficient lane. The Eco-Cruise component calculates the driving parameters and prospective energy consumption of the ego vehicle for each candidate lane, and the Eco-Lane Selection component uses these values. As a result, MultiCruise can account for multiple data sources, such as the road curvature and the surrounding vehicles' velocities and accelerations. The eco-autonomous driving strategy, MultiCruise, is designed, tested, and verified by using a co-simulation test platform that includes autonomous driving software and realistic road networks to study the performance under realistic driving conditions. Our experimental evaluations show that our eco-autonomous MultiCruise reduces fuel consumption by up to 8.5%.
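The two-stage structure described in the abstract (an Eco-Cruise step producing a prospective energy value per candidate lane, followed by a selection step) can be sketched as below. The `LaneEstimate` type, the `select_eco_lane` function, the optional lane-change penalty, and all numbers are hypothetical; the abstract does not specify the actual cost function.

```python
from dataclasses import dataclass

@dataclass
class LaneEstimate:
    lane_id: int
    target_speed_mps: float  # driving parameter from the Eco-Cruise step
    energy_kj: float         # prospective energy use in this lane

def select_eco_lane(estimates, current_lane, switch_penalty_kj=0.0):
    """Return the candidate lane with the lowest prospective energy.

    switch_penalty_kj is an assumed extra cost for changing lanes; it is
    not taken from the paper.
    """
    def cost(e):
        penalty = switch_penalty_kj if e.lane_id != current_lane else 0.0
        return e.energy_kj + penalty
    return min(estimates, key=cost)

# Made-up per-lane estimates for illustration only.
estimates = [
    LaneEstimate(0, 27.0, 410.0),
    LaneEstimate(1, 29.0, 395.0),
    LaneEstimate(2, 31.0, 402.0),
]
best = select_eco_lane(estimates, current_lane=0)
best_with_penalty = select_eco_lane(estimates, current_lane=0, switch_penalty_kj=20.0)
```

With no penalty the lowest-energy lane wins outright; with a 20 kJ penalty the vehicle stays in its current lane because the saving from switching is smaller than the assumed switching cost.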

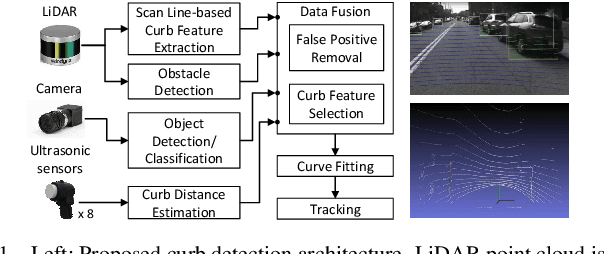

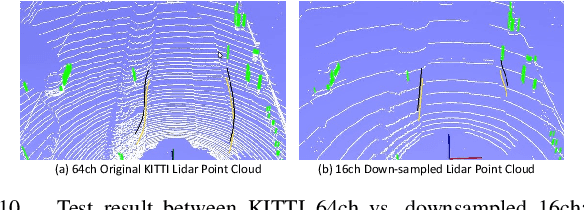

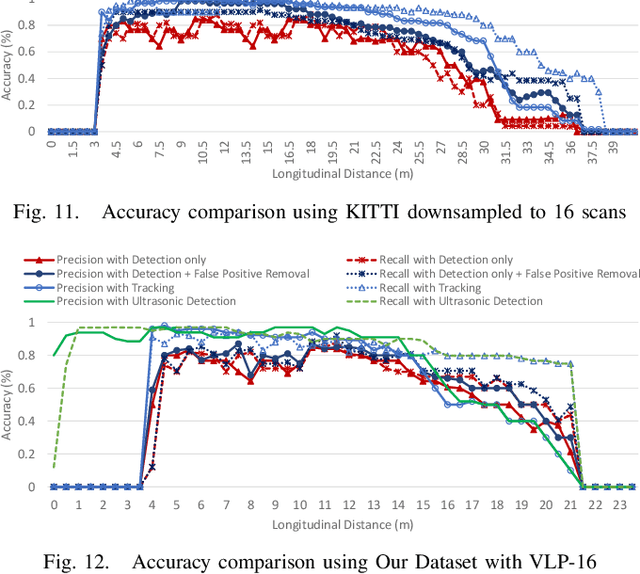

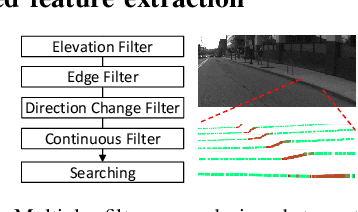

CurbScan: Curb Detection and Tracking Using Multi-Sensor Fusion

Oct 13, 2020

Abstract: Reliable curb detection is critical for safe autonomous driving in urban contexts. Curb detection and tracking are also useful in vehicle localization and path planning. Past work utilized a 3D LiDAR sensor to determine accurate distance information and the geometric attributes of curbs. However, such an approach requires dense point cloud data and is also vulnerable to false positives from obstacles present on both road and off-road areas. In this paper, we propose an approach to detect and track curbs by fusing together data from multiple sensors: sparse LiDAR data, a mono camera and low-cost ultrasonic sensors. The detection algorithm is based on a single 3D LiDAR and a mono camera sensor used to detect candidate curb features and it effectively removes false positives arising from surrounding static and moving obstacles. The detection accuracy of the tracking algorithm is boosted by using Kalman filter-based prediction and fusion with lateral distance information from low-cost ultrasonic sensors. We next propose a line-fitting algorithm that yields robust results for curb locations. Finally, we demonstrate the practical feasibility of our solution by testing in different road environments and evaluating our implementation in a real vehicle. Demo video clips demonstrating our algorithm have been uploaded to YouTube: https://www.youtube.com/watch?v=w5MwsdWhcy4, https://www.youtube.com/watch?v=Gd506RklfG8. Our algorithm maintains over 90% accuracy within 4.5-22 meters and 0-14 meters for the KITTI dataset and our dataset respectively, and its average processing time per frame is approximately 10 ms on an Intel i7 x86 and 100 ms on an NVIDIA Xavier board.
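As a rough illustration of Kalman filter-based prediction fused with a lateral distance measurement, the sketch below runs a scalar Kalman filter over a few ultrasonic-style readings. The constant-position motion model, the noise values `q` and `r`, and the readings are assumptions; the paper's actual state vector and tuning are not given in the abstract.

```python
def kalman_step(x, p, z, q=0.01, r=0.04):
    """One predict/update cycle of a scalar Kalman filter.

    x, p: prior estimate of lateral curb distance (m) and its variance
    z:    new lateral distance measurement (m), e.g. from an ultrasonic sensor
    q, r: process and measurement noise variances (assumed values)
    """
    # Predict: constant-position model, so only the uncertainty grows.
    x_pred = x
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new

# Made-up readings for illustration only.
x, p = 1.0, 1.0
for z in [1.2, 1.1, 1.15]:
    x, p = kalman_step(x, p, z)
```

The estimate moves toward the measurements while the variance shrinks, which is the behaviour that lets a tracker bridge frames in which the LiDAR and camera detection is weak.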

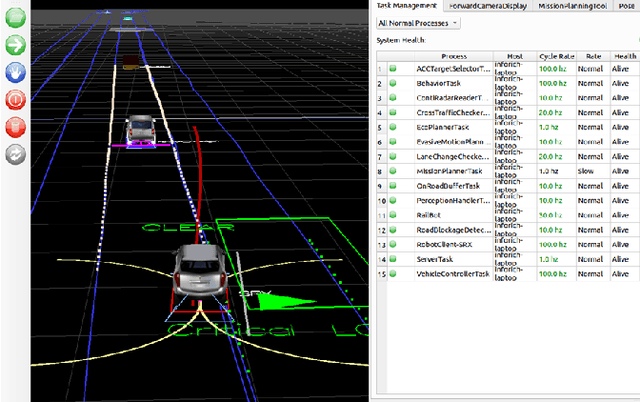

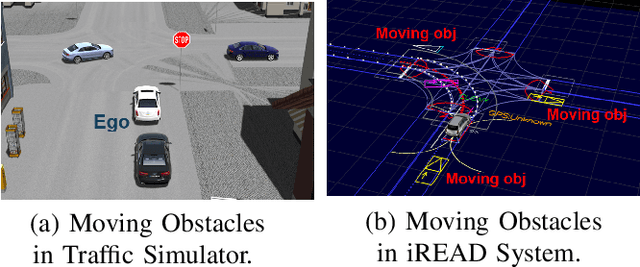

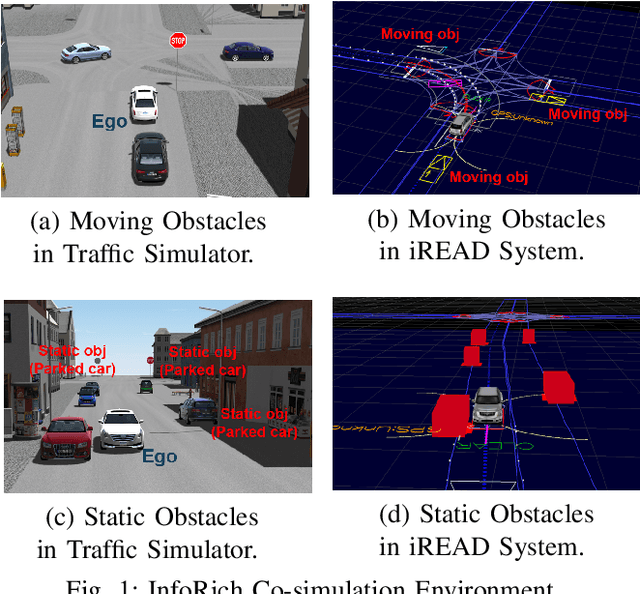

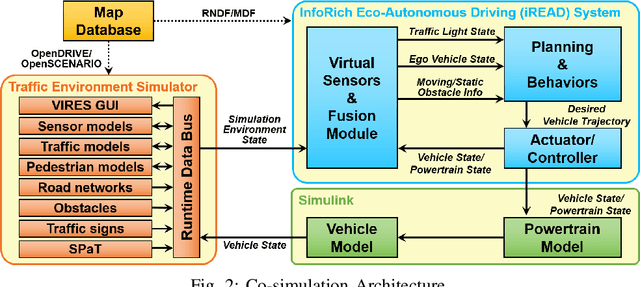

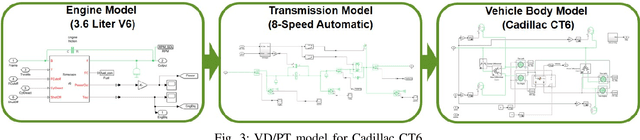

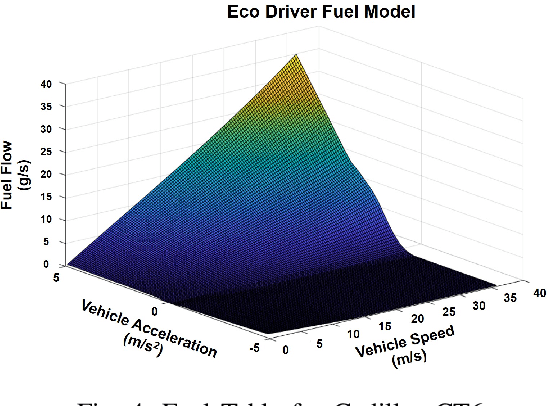

Co-simulation Platform for Developing InfoRich Energy-Efficient Connected and Automated Vehicles

Apr 16, 2020

Abstract: With advances in sensing, computing, and communication technologies, Connected and Automated Vehicles (CAVs) are becoming feasible. The advent of CAVs presents new opportunities to improve the energy efficiency of individual vehicles. However, testing and verifying energy-efficient autonomous driving systems are difficult due to safety considerations and repeatability. In this paper, we present a co-simulation platform to develop and test novel vehicle eco-autonomous driving technologies named InfoRich, which incorporates the information from on-board sensors, V2X communications, and a map database. The co-simulation platform includes eco-autonomous driving software, a vehicle dynamics and powertrain (VD&PT) model, and a traffic environment simulator. Also, we utilize synthetic drive cycles derived from real-world driving data to test the strategies under realistic driving scenarios. To build road networks from the real-world driving data, we develop an Automated Parser and Calculator for Map/Scenario named AutoPASCAL. Overall, the simulation platform provides a realistic vehicle model, powertrain model, sensor model, traffic model, and road-network model to enable the evaluation of the energy efficiency of eco-autonomous driving.

Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud

Mar 02, 2020

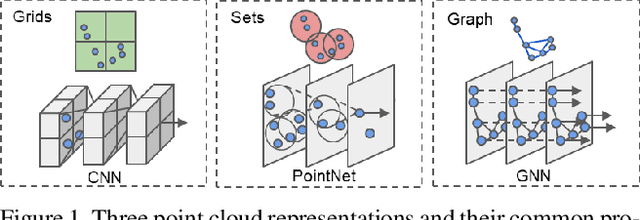

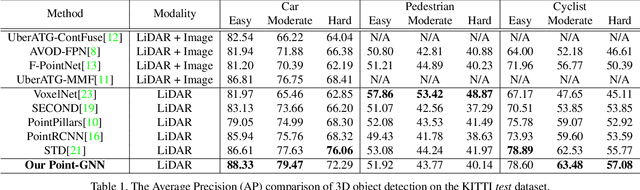

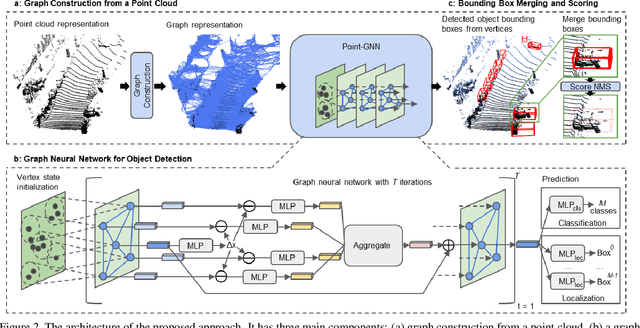

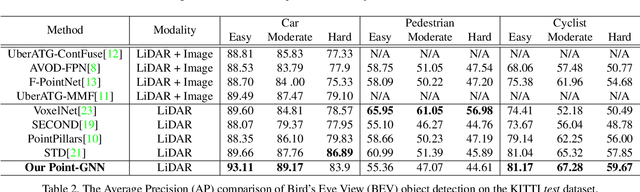

Abstract: In this paper, we propose a graph neural network to detect objects from a LiDAR point cloud. Towards this end, we encode the point cloud efficiently in a fixed radius near-neighbors graph. We design a graph neural network, named Point-GNN, to predict the category and shape of the object that each vertex in the graph belongs to. In Point-GNN, we propose an auto-registration mechanism to reduce translation variance, and also design a box merging and scoring operation to combine detections from multiple vertices accurately. Our experiments on the KITTI benchmark show the proposed approach achieves leading accuracy using the point cloud alone and can even surpass fusion-based algorithms. Our results demonstrate the potential of using the graph neural network as a new approach for 3D object detection. The code is available at https://github.com/WeijingShi/Point-GNN.
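A fixed radius near-neighbors graph connects every pair of points closer than a chosen radius. The brute-force sketch below shows the construction on three toy points; it is not the Point-GNN implementation (see the linked repository for that), and the function name `fixed_radius_graph` is hypothetical. A practical version would use a spatial index rather than the O(n²) loop used here.

```python
import math

def fixed_radius_graph(points, radius):
    """Return directed edges (i, j) for all point pairs closer than radius.

    points: list of (x, y, z) tuples
    radius: connection threshold, in the same units as the points
    """
    edges = []
    for i, p in enumerate(points):
        for j, q in enumerate(points):
            if i != j and math.dist(p, q) < radius:
                edges.append((i, j))
    return edges

# Toy point cloud: two nearby points and one distant point.
points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
edges = fixed_radius_graph(points, radius=1.0)
```

Here only the first two points are linked, in both directions; the third point lies outside the radius of both and stays isolated.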

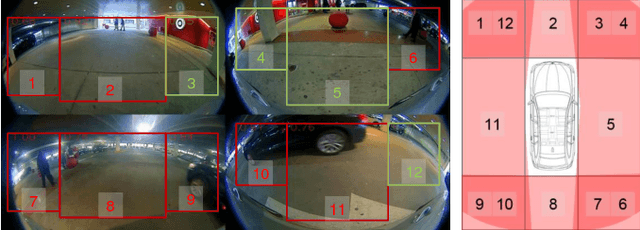

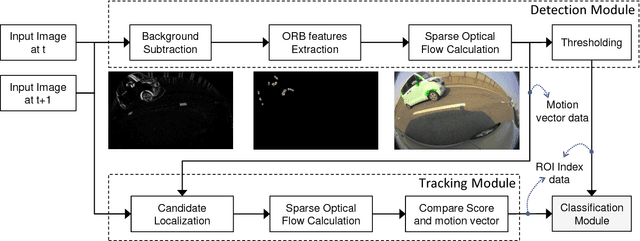

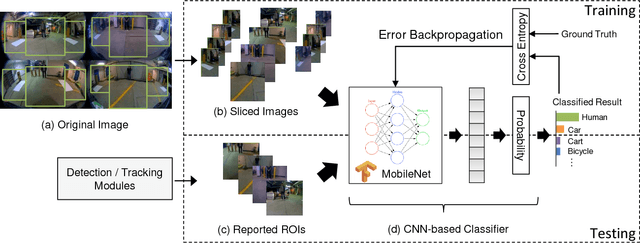

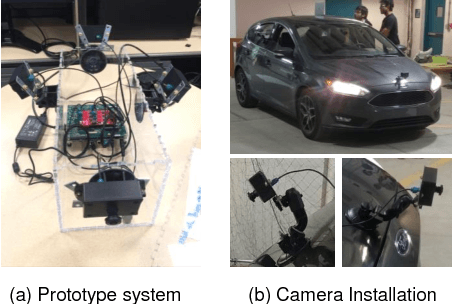

Real-time Detection, Tracking, and Classification of Moving and Stationary Objects using Multiple Fisheye Images

Aug 31, 2018

Abstract: The ability to detect pedestrians and other moving objects is crucial for an autonomous vehicle. This must be done in real-time with minimum system overhead. This paper discusses the implementation of a surround view system to identify moving as well as static objects that are close to the ego vehicle. The algorithm works on 4 views captured by fisheye cameras which are merged into a single frame. The moving object detection and tracking solution uses minimal system overhead to isolate regions of interest (ROIs) containing moving objects. These ROIs are then analyzed using a deep neural network (DNN) to categorize the moving object. With deployment and testing on a real car in urban environments, we have demonstrated the practical feasibility of the solution. The video demos of our algorithm have been uploaded to YouTube: https://youtu.be/vpoCfC724iA, https://youtu.be/2X4aqH2bMBs
