Iljoo Baek

Raj

CurbScan: Curb Detection and Tracking Using Multi-Sensor Fusion

Oct 13, 2020

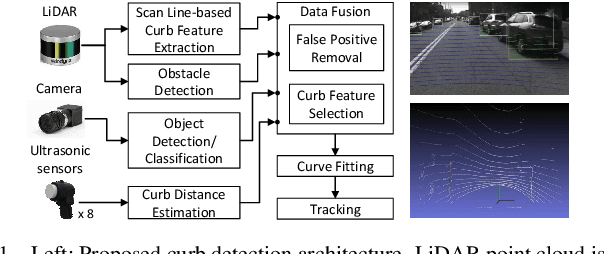

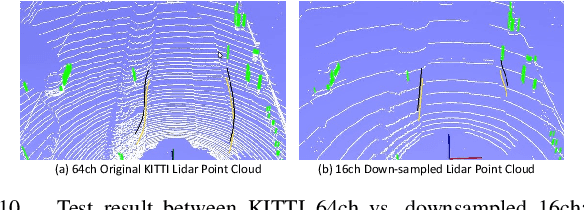

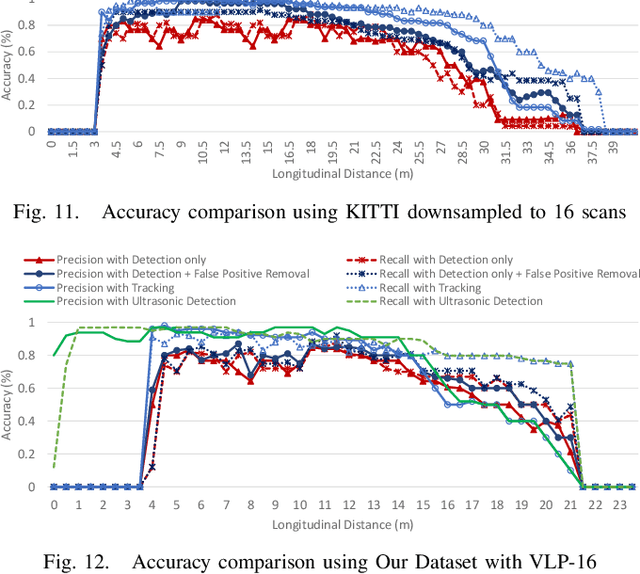

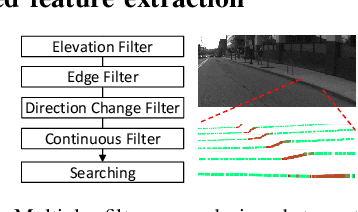

Abstract: Reliable curb detection is critical for safe autonomous driving in urban contexts. Curb detection and tracking are also useful in vehicle localization and path planning. Past work utilized a 3D LiDAR sensor to determine accurate distance information and the geometric attributes of curbs. However, such an approach requires dense point cloud data and is also vulnerable to false positives from obstacles present in both road and off-road areas. In this paper, we propose an approach to detect and track curbs by fusing data from multiple sensors: sparse LiDAR data, a mono camera, and low-cost ultrasonic sensors. The detection algorithm uses a single 3D LiDAR and a mono camera to detect candidate curb features, and it effectively removes false positives arising from surrounding static and moving obstacles. The detection accuracy of the tracking algorithm is boosted by using Kalman filter-based prediction and fusion with lateral distance information from low-cost ultrasonic sensors. We next propose a line-fitting algorithm that yields robust results for curb locations. Finally, we demonstrate the practical feasibility of our solution by testing in different road environments and evaluating our implementation in a real vehicle (demo video clips demonstrating our algorithm have been uploaded to YouTube: https://www.youtube.com/watch?v=w5MwsdWhcy4, https://www.youtube.com/watch?v=Gd506RklfG8). Our algorithm maintains over 90% accuracy within 4.5-22 meters and 0-14 meters for the KITTI dataset and our dataset, respectively, and its average processing time per frame is approximately 10 ms on an Intel i7 x86 and 100 ms on an NVIDIA Xavier board.
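The abstract mentions Kalman filter-based prediction fused with lateral distances from ultrasonic sensors but gives no equations. As an illustration only, the following is a minimal sketch of that idea under simplifying assumptions that are not from the paper: a scalar state (lateral distance to the curb), a random-walk motion model, and hypothetical noise variances `q`, `r_lidar`, and `r_ultra`. The names `ScalarKalman` and `fuse_frames` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-dimensional Kalman filter (illustrative, not the paper's model)."""
    x: float          # estimated lateral distance to the curb, in meters
    p: float          # variance of that estimate, in m^2
    q: float = 0.01   # assumed process noise added per frame

    def predict(self) -> None:
        # Random-walk model: the estimate is unchanged, uncertainty grows.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Standard Kalman update for a direct measurement z with variance r.
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse_frames(frames, x0, p0=1.0, r_lidar=0.04, r_ultra=0.01):
    """Fuse per-frame (lidar_camera_distance, ultrasonic_distance) pairs.

    Either entry of a pair may be None when that sensor has no reading.
    The noise variances are assumed values chosen for illustration.
    """
    kf = ScalarKalman(x=x0, p=p0)
    estimates = []
    for lidar_z, ultra_z in frames:
        kf.predict()
        if lidar_z is not None:
            kf.update(lidar_z, r_lidar)
        if ultra_z is not None:
            kf.update(ultra_z, r_ultra)
        estimates.append(kf.x)
    return estimates


if __name__ == "__main__":
    frames = [(2.0, None), (None, 2.0), (2.0, 2.0)]
    print(fuse_frames(frames, x0=1.0))
```

Applying the two updates sequentially within a frame is equivalent to a joint update when the sensor noises are independent, which is why a missing reading can simply be skipped.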

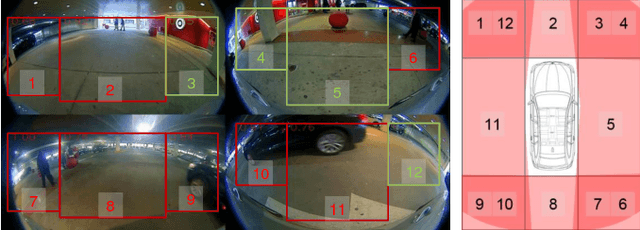

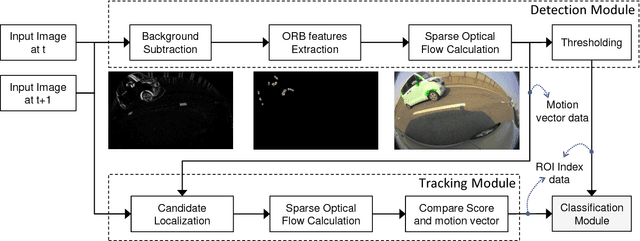

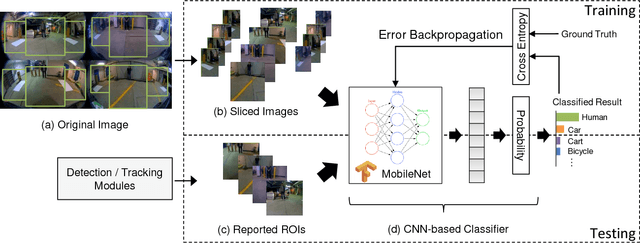

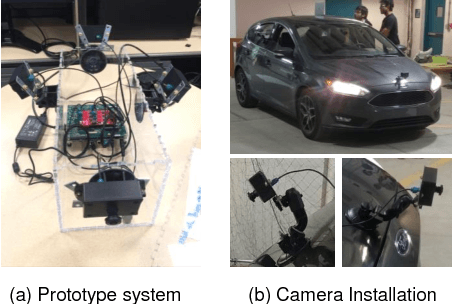

Real-time Detection, Tracking, and Classification of Moving and Stationary Objects using Multiple Fisheye Images

Aug 31, 2018

Abstract: The ability to detect pedestrians and other moving objects is crucial for an autonomous vehicle. This must be done in real time with minimum system overhead. This paper discusses the implementation of a surround view system to identify moving as well as static objects that are close to the ego vehicle. The algorithm works on four views captured by fisheye cameras, which are merged into a single frame. The moving object detection and tracking solution uses minimal system overhead to isolate regions of interest (ROIs) containing moving objects. These ROIs are then analyzed using a deep neural network (DNN) to categorize the moving object. With deployment and testing on a real car in urban environments, we have demonstrated the practical feasibility of the solution. Video demos of our algorithm have been uploaded to YouTube: https://youtu.be/vpoCfC724iA, https://youtu.be/2X4aqH2bMBs
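The abstract does not say how the moving-object ROIs are isolated. One common low-overhead approach is frame differencing, sketched below as an assumption rather than the paper's method. The function name `moving_roi`, the `threshold` value, and the use of plain nested lists for grayscale frames are all choices made for this example.

```python
def moving_roi(prev, curr, threshold=25):
    """Return the bounding box (x_min, y_min, x_max, y_max) of changed pixels.

    prev and curr are equally sized grayscale frames given as lists of rows.
    A pixel counts as "moving" when its absolute intensity change exceeds
    the (assumed) threshold. Returns None when nothing changed enough.
    """
    xs, ys = [], []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            if abs(a - b) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    prev = [[0] * 5 for _ in range(5)]
    curr = [row[:] for row in prev]
    curr[1][2] = 200  # changed pixel at x=2, y=1
    curr[2][3] = 200  # changed pixel at x=3, y=2
    print(moving_roi(prev, curr))
```

In a pipeline like the one described, only the cropped ROI would then be passed to the classifier, so the expensive DNN runs on a small part of the merged frame.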

Vehicles Lane-changing Behavior Detection

Aug 22, 2018

Abstract: Lane-level localization accuracy is very important for autonomous vehicles. The Global Navigation Satellite System (GNSS), e.g. GPS, is a generic localization method for vehicles, but it is vulnerable to multi-path interference in urban environments. Integrating a vision-based relative localization result and a digital map with the GNSS is a common and cheap way to increase global localization accuracy and thus to achieve lane-level localization. This project develops a mono-camera-based lane-changing behavior detection module for the correction of lateral GPS localization. We implemented a Support Vector Machine (SVM) based framework to directly classify the driving behavior (lane keeping, left lane changing, and right lane changing) from sampled data of the raw images captured by a mono camera installed behind the windshield. The training data was collected while driving around Carnegie Mellon University, and we compared the trained SVM models with and without the Principal Component Analysis (PCA) dimension reduction technique. The performance of the SVM-based classification method was compared with that of a CNN method.
