Shuhao Liao

HELM: Human-Preferred Exploration with Language Models

Mar 10, 2025

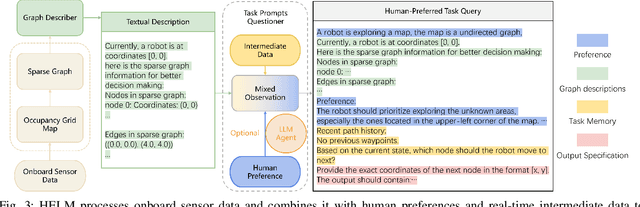

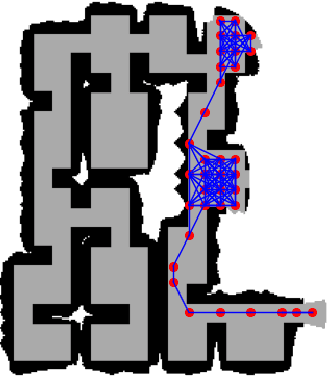

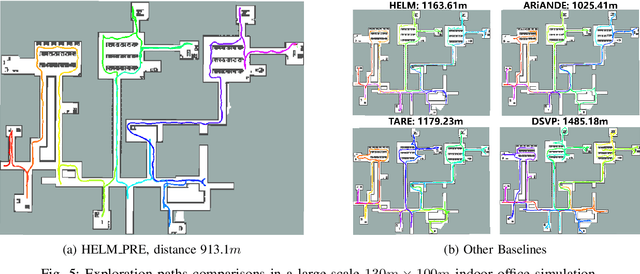

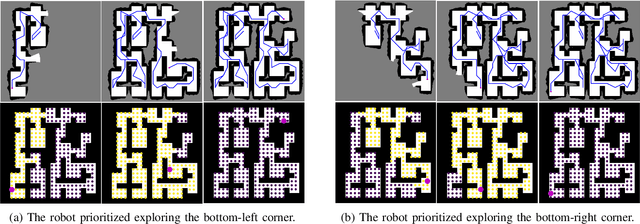

Abstract: In autonomous exploration tasks, robots are required to explore and map unknown environments while efficiently planning in dynamic and uncertain conditions. Given the significant variability of environments, human operators often have specific preference requirements for exploration, such as prioritizing certain areas or optimizing for different aspects of efficiency. However, existing methods struggle to accommodate these human preferences adaptively, often requiring extensive parameter tuning or network retraining. With the recent advancements in Large Language Models (LLMs), which have been widely applied to text-based planning and complex reasoning, their potential for enhancing autonomous exploration is becoming increasingly promising. Motivated by this, we propose an LLM-based human-preferred exploration framework that seamlessly integrates a mobile robot system with LLMs. By leveraging the reasoning and adaptability of LLMs, our approach enables intuitive and flexible preference control through natural language while maintaining a task success rate comparable to state-of-the-art traditional methods. Experimental results demonstrate that our framework effectively bridges the gap between human intent and policy preference in autonomous exploration, offering a more user-friendly and adaptable solution for real-world robotic applications.

SIGMA: Sheaf-Informed Geometric Multi-Agent Pathfinding

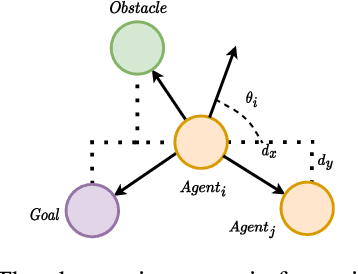

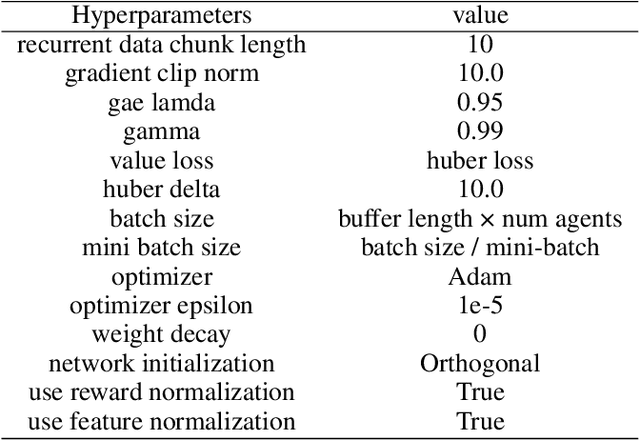

Feb 10, 2025

Abstract: The Multi-Agent Path Finding (MAPF) problem aims to determine the shortest collision-free paths for multiple agents in a known, potentially obstacle-ridden environment. It is the core challenge for robotic deployments in large-scale logistics and transportation. Decentralized learning-based approaches have shown great potential for addressing MAPF problems, offering more reactive and scalable solutions. However, existing learning-based MAPF methods usually rely on agents making decisions based on a limited field of view (FOV), resulting in short-sighted policies and inefficient cooperation in complex scenarios. In such settings, a critical challenge is to achieve consensus on potential movements between agents based on limited observations and communications. To tackle this challenge, we introduce a new framework that applies sheaf theory to decentralized deep reinforcement learning, enabling agents to learn geometric cross-dependencies between each other through local consensus and utilize them for tightly cooperative decision-making. In particular, sheaf theory provides a mathematical proof of conditions for achieving global consensus through local observation. Inspired by this, we incorporate a neural network to approximately model the consensus in latent space based on sheaf theory and train it through self-supervised learning. During the task, in addition to the normal MAPF features used in previous works, each agent reasons in a distributed manner about a learned consensus feature, leading to efficient cooperation on pathfinding and collision avoidance. As a result, our proposed method demonstrates significant improvements over state-of-the-art learning-based MAPF planners, especially in relatively large and complex scenarios, showing its superiority over baselines in various simulations and real-world robot experiments.
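To make the "collision-free" requirement in the MAPF definition concrete, the following is a minimal sketch of a conflict checker on a grid. It is not taken from the SIGMA paper: the function names `position_at` and `find_conflicts`, the grid-cell path representation, and the convention that an agent waits at its final cell after finishing are all assumptions made for illustration. It reports the two standard conflict types, vertex conflicts (two agents in the same cell at the same time step) and edge conflicts (two agents swapping cells between consecutive time steps).

```python
from itertools import combinations


def position_at(path, t):
    """Return the cell occupied at time t; the agent is assumed to wait at its last cell."""
    return path[min(t, len(path) - 1)]


def find_conflicts(paths):
    """List vertex and edge (swap) conflicts between every pair of agent paths.

    Each path is a list of (row, col) cells, one per time step (hypothetical format).
    """
    conflicts = []
    horizon = max(len(p) for p in paths)
    for (i, a), (j, b) in combinations(enumerate(paths), 2):
        for t in range(horizon):
            a_now, b_now = position_at(a, t), position_at(b, t)
            if a_now == b_now:
                conflicts.append(("vertex", i, j, t))
            if t + 1 < horizon:
                a_next, b_next = position_at(a, t + 1), position_at(b, t + 1)
                # A swap: the agents exchange cells; stationary agents are excluded.
                if a_now == b_next and a_next == b_now and a_now != a_next:
                    conflicts.append(("edge", i, j, t))
    return conflicts


# Two agents swapping adjacent cells produce one edge conflict at t = 0.
swap = find_conflicts([[(0, 0), (0, 1)], [(0, 1), (0, 0)]])
# Two agents entering the same cell produce one vertex conflict at t = 1.
meet = find_conflicts([[(0, 0), (1, 0)], [(2, 0), (1, 0)]])
```

A set of paths is a valid MAPF solution under these assumptions only when `find_conflicts` returns an empty list.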

Bench-CoE: a Framework for Collaboration of Experts from Benchmark

Dec 05, 2024

Abstract: Large Language Models (LLMs) are key technologies driving intelligent systems to handle multiple tasks. To meet the demands of various tasks, an increasing number of LLM-driven experts with diverse capabilities have been developed, accompanied by corresponding benchmarks to evaluate their performance. This paper proposes the Bench-CoE framework, which enables Collaboration of Experts (CoE) by effectively leveraging benchmark evaluations to achieve optimal performance across various tasks. Bench-CoE includes a set of expert models, a router for assigning tasks to corresponding experts, and a benchmark dataset for training the router. Moreover, we formulate Query-Level and Subject-Level approaches based on our framework, and analyze the merits and drawbacks of these two approaches. Finally, we conduct a series of experiments with varying data distributions on both language and multimodal tasks to validate that our proposed Bench-CoE outperforms any single model in terms of overall performance. We hope this method serves as a baseline for further research in this area. The code is available at https://github.com/ZhangXJ199/Bench-CoE.
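The subject-level idea can be illustrated with a small sketch: pick, for each subject, the expert with the best benchmark score, then send each query to the expert for its subject. This is only an illustration under stated assumptions and not the Bench-CoE implementation. The names `build_subject_table`, `classify_subject`, and `route` are hypothetical, the keyword lookup is a stand-in for the trained router the abstract describes, and the scores are made-up numbers, not reported results.

```python
def build_subject_table(scores):
    """Map each subject to the expert with the highest benchmark score on it."""
    subjects = {s for per_expert in scores.values() for s in per_expert}
    table = {}
    for subject in sorted(subjects):
        table[subject] = max(scores, key=lambda e: scores[e].get(subject, 0.0))
    return table


def classify_subject(query, keywords, default):
    """Stand-in for a trained router: guess the subject from keywords (assumption)."""
    text = query.lower()
    for subject, words in keywords.items():
        if any(w in text for w in words):
            return subject
    return default


def route(query, table, keywords, default_subject):
    """Return the name of the expert that should answer the query."""
    return table[classify_subject(query, keywords, default_subject)]


# Illustrative, made-up benchmark accuracies per expert and subject.
scores = {
    "expert_a": {"math": 0.62, "law": 0.41},
    "expert_b": {"math": 0.48, "law": 0.57},
}
keywords = {"math": ["integral", "equation"], "law": ["contract", "statute"]}

table = build_subject_table(scores)
math_expert = route("Solve the equation x + 2 = 5", table, keywords, "math")
law_expert = route("Is this contract enforceable?", table, keywords, "math")
```

A query-level variant would instead label each benchmark question with the expert that answered it best and train the router on those per-question labels; that variant is not sketched here.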

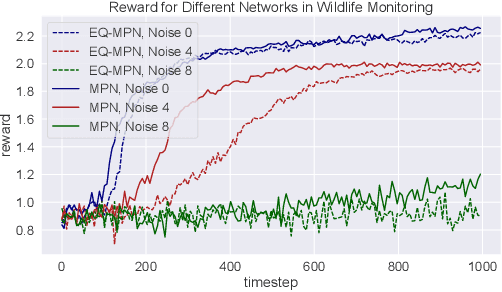

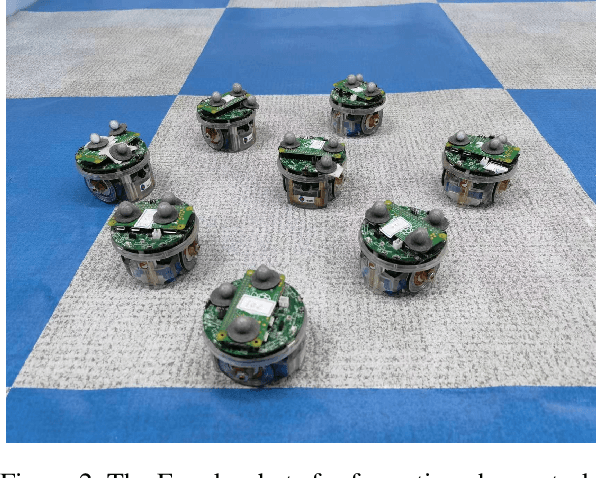

Leveraging Partial Symmetry for Multi-Agent Reinforcement Learning

Dec 30, 2023

Abstract: Incorporating symmetry as an inductive bias into multi-agent reinforcement learning (MARL) has led to improvements in generalization, data efficiency, and physical consistency. While prior research has succeeded in using a perfect symmetry prior, the realm of partial symmetry in the multi-agent domain remains unexplored. To fill this gap, we introduce the partially symmetric Markov game, a new subclass of the Markov game. We then theoretically show that the performance error introduced by utilizing symmetry in MARL is bounded, implying that the symmetry prior can still be useful in MARL even in partial symmetry situations. Motivated by this insight, we propose the Partial Symmetry Exploitation (PSE) framework, which adaptively incorporates the symmetry prior in MARL under different symmetry-breaking conditions. Specifically, by adaptively adjusting the exploitation of symmetry, our framework achieves superior sample efficiency and overall performance for MARL algorithms. Extensive experiments are conducted to demonstrate the superior performance of the proposed framework over baselines. Finally, we implement the proposed framework in a real-world multi-robot testbed to show its superiority.
