Shuangqi Luo

Endowing Robots with Longer-term Autonomy by Recovering from External Disturbances in Manipulation through Grounded Anomaly Classification and Recovery Policies

Sep 11, 2018

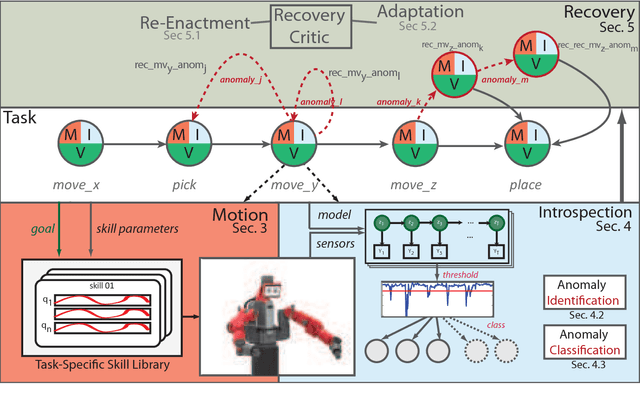

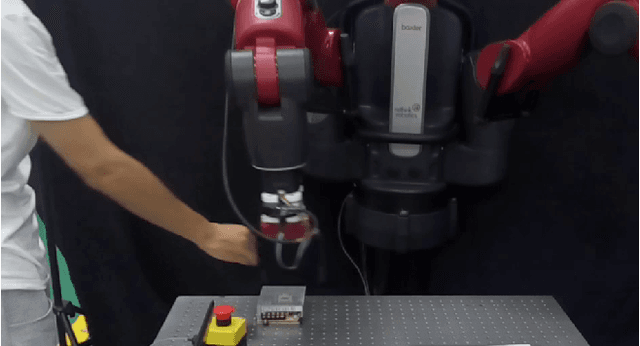

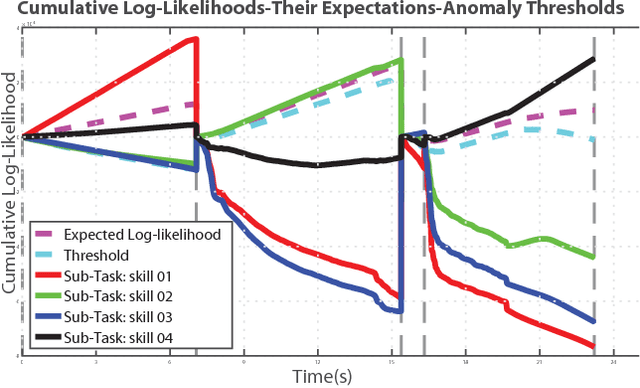

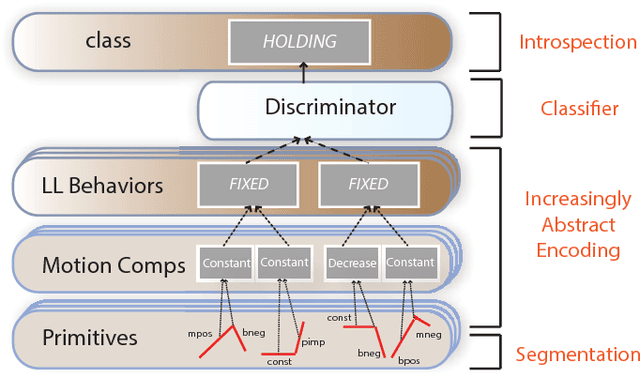

Abstract: Robot manipulation is increasingly poised to interact with humans in co-shared workspaces. Despite increasingly robust manipulation and control algorithms, failure modes persist whenever models do not capture the dynamics of the unstructured environment. To achieve longer-term horizons in robot automation, robots must develop introspection and recovery abilities. We contribute a set of recovery policies to deal with anomalies produced by external disturbances, as well as anomaly classification through non-parametric statistics with memoized variational inference with scalable adaptation. A recovery critic stands atop a tightly integrated, graph-based online motion-generation and introspection system that resolves a wide range of anomalous situations. Policies, skills, and introspection models are learned incrementally and contextually within a task. Two task-level recovery policies, re-enactment and adaptation, resolve accidental and persistent anomalies, respectively. The introspection system uses non-parametric priors together with Markov jump linear systems and memoized variational inference with scalable adaptation to learn a model from the data. In extensive real-robot experiments, a variety of strenuous anomalous conditions are induced and resolved at different phases of a task and in different combinations. The system executes around-the-clock introspection and recovery, and it even exhibited self-recovery when misclassifications occurred.
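The abstract states that re-enactment resolves accidental anomalies and adaptation resolves persistent ones, but it does not say how the recovery critic tells the two apart. The sketch below assumes a deliberately simple, hypothetical rule: an anomaly becomes "persistent" once it recurs a fixed number of times at the same task node. The class name, threshold, node names, and anomaly labels are illustrative only and are not the authors' critic, which builds on the learned introspection models.

```python
from collections import defaultdict


class RecoveryCritic:
    """Hypothetical critic that chooses between two task-level recovery policies.

    Assumption: an anomaly counts as "accidental" until it has been seen
    `threshold` times at the same task node; from then on it is "persistent".
    """

    def __init__(self, threshold=2):
        self.threshold = threshold
        # (node, anomaly_label) -> how many times this anomaly was observed there
        self.counts = defaultdict(int)

    def choose_policy(self, node, anomaly_label):
        self.counts[(node, anomaly_label)] += 1
        if self.counts[(node, anomaly_label)] >= self.threshold:
            return "adaptation"  # persistent anomaly: modify the skill
        return "re-enactment"  # accidental anomaly: repeat the interrupted skill


critic = RecoveryCritic()
print(critic.choose_policy("pick", "human_collision"))  # re-enactment
print(critic.choose_policy("pick", "human_collision"))  # adaptation
print(critic.choose_policy("place", "tool_collision"))  # re-enactment
```

A fixed repetition count is the crudest possible persistence test; it is used here only to show where such a decision would sit between anomaly classification and policy execution.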

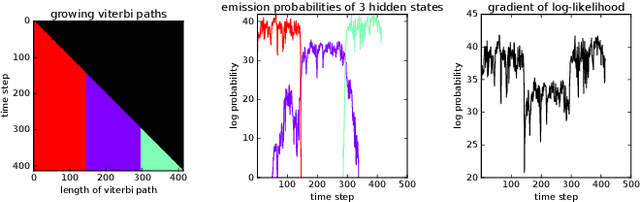

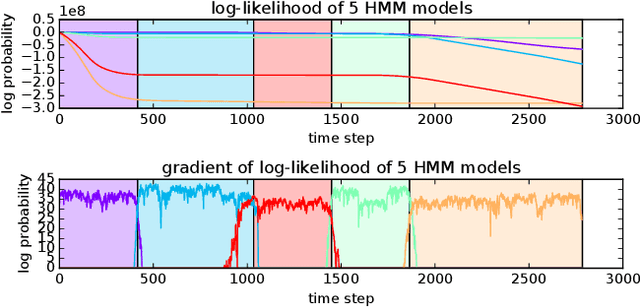

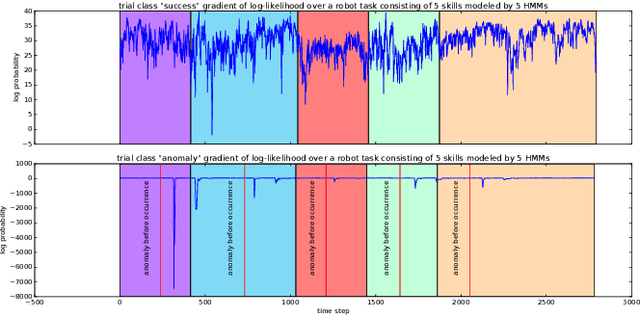

Fast, Robust, and Versatile Event Detection through HMM Belief State Gradient Measures

Jun 20, 2018

Abstract: Event detection is a critical feature in data-driven systems, as it assists with the identification of nominal and anomalous behavior. Event detection is increasingly relevant in robotics as robots operate with greater autonomy in increasingly unstructured environments. In this work, we present an accurate, robust, fast, and versatile measure for skill and anomaly identification. A theoretical proof establishes the link between the derivative of the log-likelihood of the HMM filtered belief state and the latest emission probabilities. The key insight is the inverse relationship, under which gradient analysis can be used for skill and anomaly identification. Our measure outperformed related state-of-the-art works across all metrics. The result is broadly applicable to domains that use HMMs for event detection.
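For a discrete-state HMM, the standard forward-algorithm identity log p(x_1..x_t) - log p(x_1..x_{t-1}) = log sum_j b_j(x_t) p(z_t = j | x_1..x_{t-1}) shows how the one-step change in log-likelihood depends on the latest emission probabilities b_j(x_t), weighted by the predicted belief. The sketch below computes that per-step change with a forward filter and flags steps whose value drops below a threshold. It illustrates the general idea only; the paper's exact measure and thresholding are not reproduced here, and the model parameters, threshold, and function names are hypothetical.

```python
import math


def loglik_increments(pi, A, emission_prob, observations):
    """Per-step change in log p(x_1..x_t) from a forward (filtering) pass.

    pi: initial state distribution; A[i][j]: transition probability i -> j;
    emission_prob(j, x): likelihood of observation x in state j (assumed > 0).
    """
    n = len(pi)
    belief = None  # filtered belief p(z_{t-1} | x_1..x_{t-1})
    increments = []
    for x in observations:
        if belief is None:
            predicted = list(pi)
        else:
            # Prediction step: p(z_t = j | x_1..x_{t-1})
            predicted = [sum(belief[i] * A[i][j] for i in range(n)) for j in range(n)]
        # Weight the prediction by the latest emission probabilities
        joint = [predicted[j] * emission_prob(j, x) for j in range(n)]
        evidence = sum(joint)  # p(x_t | x_1..x_{t-1})
        increments.append(math.log(evidence))
        belief = [v / evidence for v in joint]  # updated filtered belief
    return increments


# Hypothetical two-state model with three observation symbols
pi = [1.0, 0.0]
A = [[0.9, 0.1], [0.0, 1.0]]
B = [{"a": 0.9, "b": 0.09, "c": 0.01}, {"a": 0.09, "b": 0.9, "c": 0.01}]


def emit(state, symbol):
    return B[state][symbol]


observations = ["a", "a", "b", "b", "c"]
increments = loglik_increments(pi, A, emit, observations)
THRESHOLD = -3.0  # hypothetical anomaly threshold
flagged = [t for t, g in enumerate(increments) if g < THRESHOLD]
print(flagged)  # [4]
```

The symbol "c" is unlikely under both states, so the log-likelihood drops sharply at the last step regardless of the belief, which is what the threshold catches.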

Recovering from External Disturbances in Online Manipulation through State-Dependent Revertive Recovery Policies

Apr 02, 2018

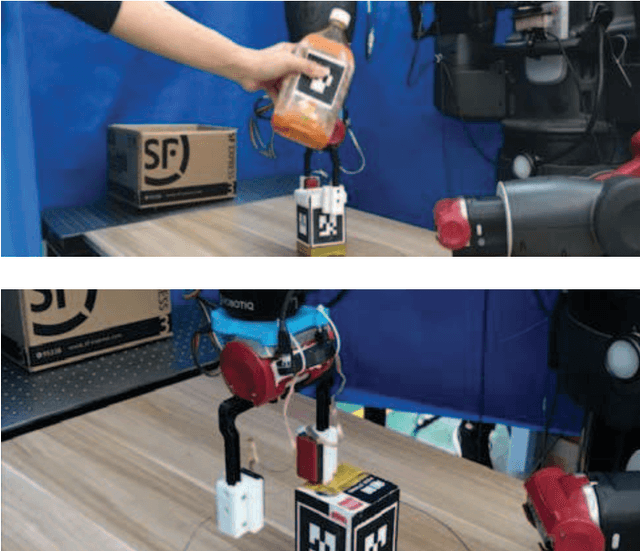

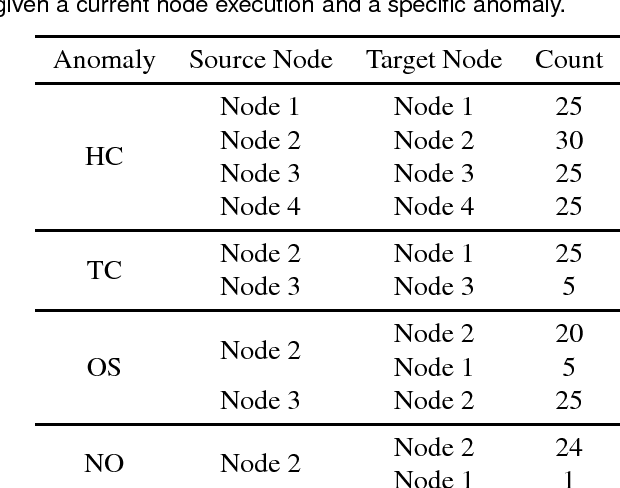

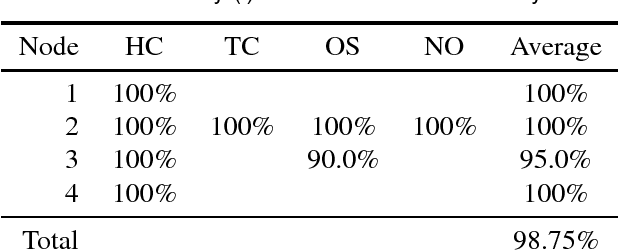

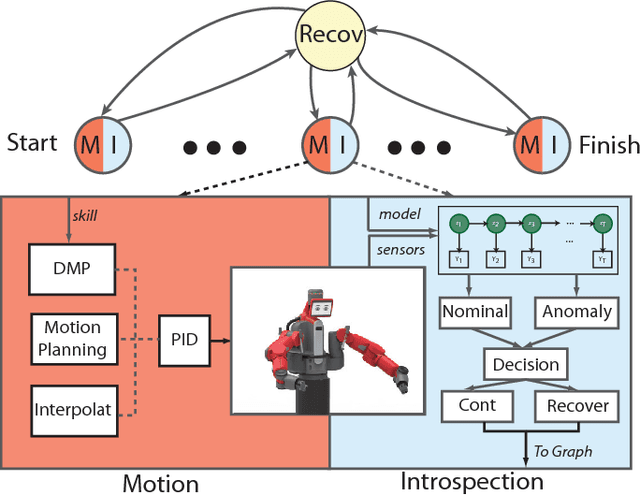

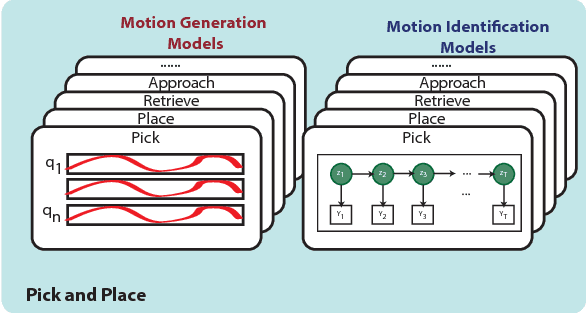

Abstract: Robots are increasingly entering uncertain and unstructured environments. Within these, robots are bound to face unexpected external disturbances such as accidental human or tool collisions. Robots must develop the capacity to respond to unexpected events. That means not only identifying the sudden anomaly but also deciding how to handle it. In this work, we contribute a recovery policy that allows a robot to recover from various anomalous scenarios across different tasks and conditions in a consistent and robust fashion. The system organizes tasks as a sequence of nodes composed of internal modules such as motion generation and introspection. When an introspection module flags an anomaly, the recovery strategy is triggered and reverts the task execution by selecting a target node as a function of a state dependency chart. The new skill allows the robot to overcome the effects of the external disturbance and conclude the task. Our system recovers from accidental human and tool collisions in a number of tasks. Importantly, we test the robustness of the recovery system by triggering anomalies at each node in the task graph, showing robust recovery everywhere in the task. We also trigger multiple and repeated anomalies at each node of the task, showing that the recovery system can consistently recover anywhere in the presence of strong and pervasive anomalous conditions. Robust recovery systems will be key enablers for long-term autonomy in robot systems. Supplemental information, including code, data, graphs, and result analysis, can be found at [1].
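The revertive strategy can be pictured as a loop over task nodes that, when introspection flags an anomaly, jumps back to a target node looked up in a state dependency chart. The node names, the revert map, and the anomaly-injection dictionary below are hypothetical stand-ins; in the real system each node also carries motion-generation and introspection modules, which are omitted here.

```python
# Hypothetical task graph: node names and revert targets are illustrative only.
TASK_NODES = ["approach", "grasp", "lift", "move", "place", "release"]

# Assumed form of a state dependency chart: for each node, the node from which
# execution should resume if an anomaly is flagged while that node runs.
REVERT_TARGET = {
    "approach": "approach",
    "grasp": "approach",
    "lift": "approach",
    "move": "lift",
    "place": "move",
    "release": "place",
}


def run_task(nodes, revert_target, anomaly_at):
    """Execute nodes in order, reverting on anomalies; return the execution trace.

    anomaly_at: node -> number of anomalies to inject at that node (for testing).
    """
    remaining = dict(anomaly_at)
    trace = []
    i = 0
    while i < len(nodes):
        node = nodes[i]
        trace.append(node)
        if remaining.get(node, 0) > 0:  # introspection flags an anomaly here
            remaining[node] -= 1
            i = nodes.index(revert_target[node])  # revert to the chart's target
        else:
            i += 1  # nominal: continue to the next node
    return trace


print(run_task(TASK_NODES, REVERT_TARGET, {"move": 1}))
# ['approach', 'grasp', 'lift', 'move', 'lift', 'move', 'place', 'release']
```

Injecting anomalies at every node, or several times at one node, mirrors the kind of stress test the abstract describes; because each injected anomaly is consumed once, the loop always terminates in this sketch.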

Online Robot Introspection via Wrench-based Action Grammars

Jul 19, 2017

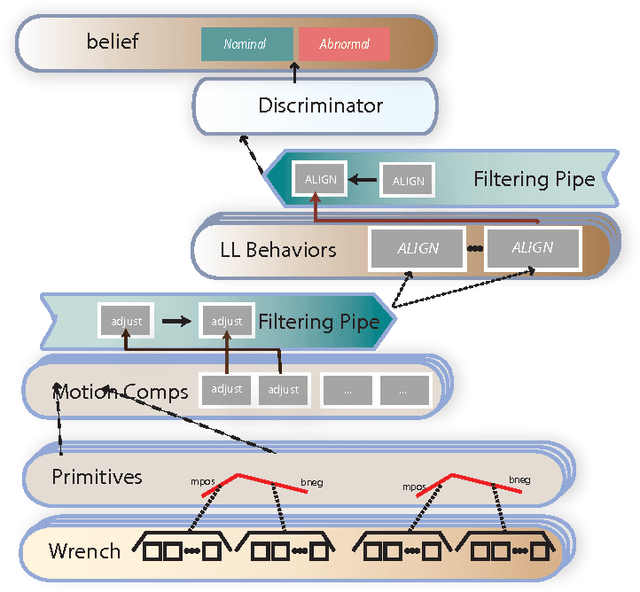

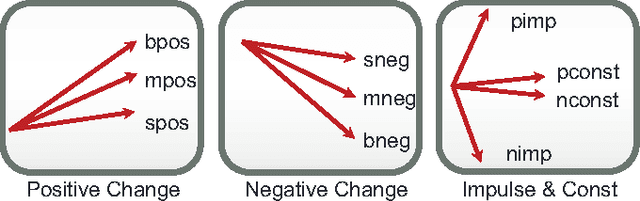

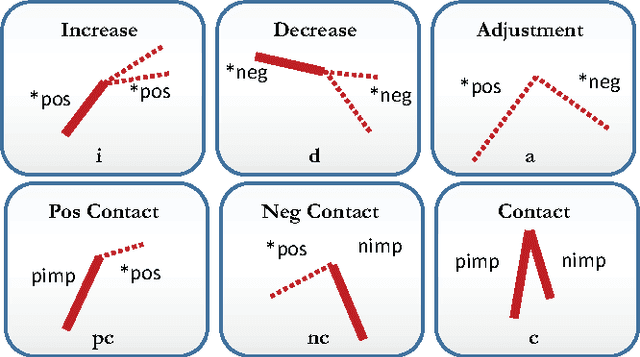

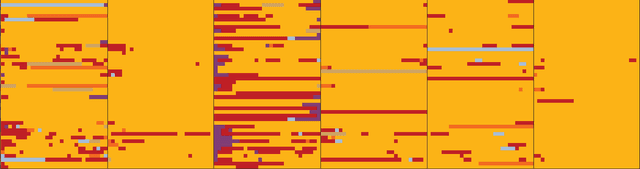

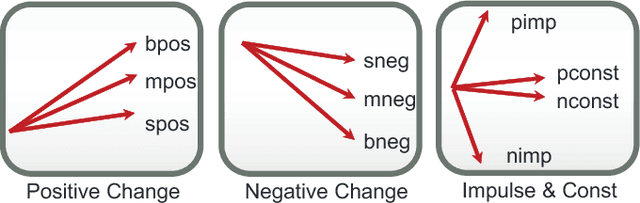

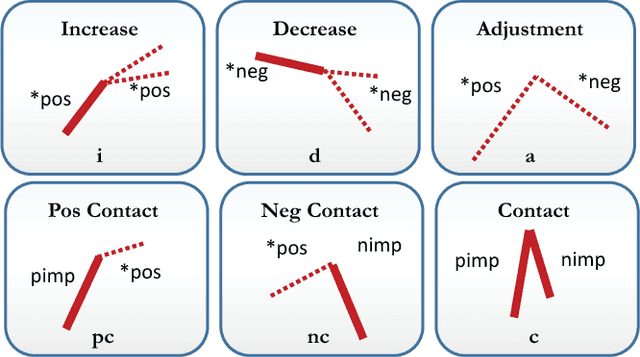

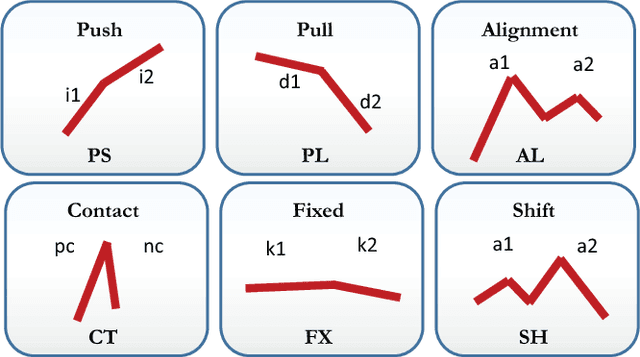

Abstract: Robotic failure is all too common in unstructured robot tasks. Despite well-designed controllers, robots often fail due to unexpected events. How do robots measure unexpected events? Many do not. Most robots are driven by the sense-plan-act paradigm; more recently, however, robots are moving toward a sense-plan-act-verify paradigm. In this work, we present a principled methodology to bootstrap online robot introspection for contact tasks. In effect, we are trying to enable the robot to answer the questions: what did I do? Is my behavior as expected or not? To this end, we analyze noisy wrench data and postulate that such data inherently contain patterns that can be effectively represented by a vocabulary. The vocabulary is generated by segmenting and encoding the data. When the wrench information represents a sequence of sub-tasks, we can think of the vocabulary as forming a sentence (a set of words with grammar rules) for a given sub-task, allowing each sub-task to be uniquely represented. The grammar, which can also include unexpected events, was classified in offline and online scenarios as well as in simulated and real robot experiments. Multiclass Support Vector Machines (SVMs) were used offline, while online probabilistic SVMs were used to give temporal confidence to the introspection result. The contribution of our work is a generalizable online semantic scheme that enables a robot to understand whether its high-level state is nominal or abnormal. It is shown to work in offline and online scenarios for a particularly challenging contact task: snap assemblies. We perform the snap assembly in simulated and real one-arm experiments and in a simulated two-arm experiment. This verification mechanism can be used by high-level planners or reasoning systems to enable intelligent failure recovery or to determine the next most optimal manipulation skill to use.
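To make the segment-then-encode idea concrete, the sketch below turns a single force channel into a short sentence: each sample-to-sample change is labelled as increasing, decreasing, or constant, runs of identical labels are merged into segments, and each segment contributes one word. The labels, the tolerance, and the force trace are assumptions chosen for illustration; the paper's actual vocabulary, which covers the full wrench, is not reproduced here.

```python
def encode_primitives(signal, tol=0.05):
    """Label each sample-to-sample change as 'inc', 'dec' or 'const' (hypothetical scheme)."""
    labels = []
    for prev, cur in zip(signal, signal[1:]):
        delta = cur - prev
        if delta > tol:
            labels.append("inc")
        elif delta < -tol:
            labels.append("dec")
        else:
            labels.append("const")
    return labels


def segment(labels):
    """Merge runs of identical labels into (label, run_length) segments."""
    segments = []
    for label in labels:
        if segments and segments[-1][0] == label:
            segments[-1] = (label, segments[-1][1] + 1)
        else:
            segments.append((label, 1))
    return segments


def to_sentence(segments):
    """Encode segments as a sentence: one word per segment."""
    return [label for label, _ in segments]


fz = [0.0, 0.0, 0.5, 1.2, 2.0, 2.0, 2.01, 0.4, 0.0]  # made-up force-z trace
print(to_sentence(segment(encode_primitives(fz))))
# ['const', 'inc', 'const', 'dec']
```

The tolerance decides how much sensor noise is absorbed into "const"; with real wrench data it would have to be tuned per axis.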

Robot Introspection via Wrench-based Action Grammars

Sep 16, 2016

Abstract: Robotic failure is all too common in unstructured robot tasks. Despite well-designed controllers, robots often fail due to unexpected events. How do robots measure unexpected events? Many do not. Most robots are driven by the sense-plan-act paradigm; more recently, however, robots are working with a sense-plan-act-verify paradigm. In this work, we present a principled methodology to bootstrap robot introspection for contact tasks. In effect, we are trying to answer the question: what did the robot do? To this end, we hypothesize that all noisy wrench data inherently contain patterns that can be effectively represented by a vocabulary. The vocabulary is generated by meaningfully segmenting the data and then encoding it. When the wrench information represents a sequence of sub-tasks, we can think of the vocabulary as forming sets of words, or sentences, such that each sub-task is uniquely represented by a word set. Such sets can be classified using statistical or machine learning techniques. We use SVMs and Mondrian Forests to classify contact tasks both in simulation and on real robots in one- and dual-arm scenarios, showing the general robustness of the approach. The contribution of our work is a simple but generalizable semantic scheme that enables a robot to understand its high-level state. This verification mechanism can also provide feedback to high-level planners or reasoning systems that use semantic descriptors. The code, data, and other supporting documentation can be found at: http://www.juanrojas.net/2017icra_wrench_introspection.
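Once sub-tasks are encoded as word sets, any classifier over those sets can be plugged in. The paper uses SVMs and Mondrian Forests; the sketch below swaps in a much simpler nearest-centroid classifier over bag-of-words counts, purely to show the data flow from word sets to labels. The vocabulary, the "nominal"/"anomalous" labels, and the training sentences are made up, and bag-of-words discards word order, so this stand-in ignores the grammar structure the abstracts describe.

```python
import math
from collections import Counter

VOCABULARY = ["const", "inc", "dec"]  # hypothetical word list


def bag_of_words(sentence, vocabulary):
    """Count how often each vocabulary word appears in a sentence."""
    counts = Counter(sentence)
    return [counts[word] for word in vocabulary]


def train_centroids(examples, vocabulary):
    """Average the bag-of-words vectors per label; examples are (sentence, label) pairs."""
    sums, totals = {}, Counter()
    for sentence, label in examples:
        vec = bag_of_words(sentence, vocabulary)
        prev = sums.get(label, [0.0] * len(vocabulary))
        sums[label] = [s + v for s, v in zip(prev, vec)]
        totals[label] += 1
    return {label: [s / totals[label] for s in vec] for label, vec in sums.items()}


def classify(sentence, centroids, vocabulary):
    """Return the label whose centroid is closest in Euclidean distance."""
    vec = bag_of_words(sentence, vocabulary)
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))


training = [
    (["const", "inc", "const", "dec"], "nominal"),
    (["inc", "const", "dec", "const"], "nominal"),
    (["const", "inc", "inc", "inc"], "anomalous"),
    (["inc", "inc", "const", "inc"], "anomalous"),
]
centroids = train_centroids(training, VOCABULARY)
print(classify(["const", "dec", "inc", "const"], centroids, VOCABULARY))  # nominal
print(classify(["inc", "inc", "inc"], centroids, VOCABULARY))  # anomalous
```

A margin-based classifier such as an SVM would replace `train_centroids` and `classify` while keeping the same feature vectors.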
