Yunlong Du

Online Robot Introspection via Wrench-based Action Grammars

Jul 19, 2017

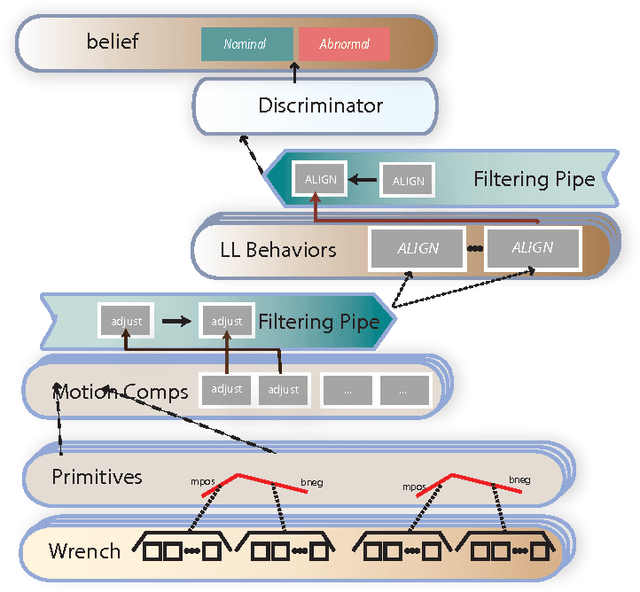

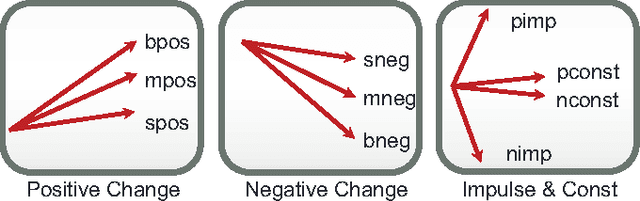

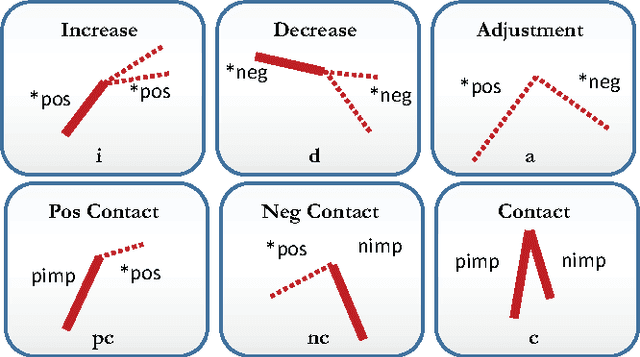

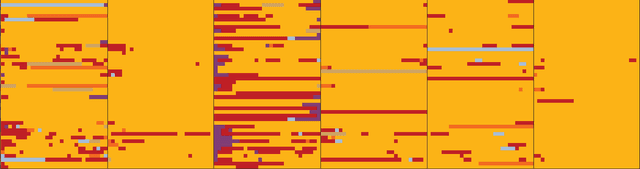

Abstract: Robotic failure is all too common in unstructured robot tasks. Despite well-designed controllers, robots often fail due to unexpected events. How do robots measure unexpected events? Many do not. Most robots are driven by the sense-plan-act paradigm; more recently, however, robots have been moving toward a sense-plan-act-verify paradigm. In this work, we present a principled methodology to bootstrap online robot introspection for contact tasks. In effect, we are trying to enable the robot to answer the questions: What did I do? Is my behavior as expected or not? To this end, we analyze noisy wrench data and postulate that it inherently contains patterns that can be effectively represented by a vocabulary. The vocabulary is generated by segmenting and encoding the data. When the wrench information represents a sequence of sub-tasks, we can think of the vocabulary as forming a sentence (a set of words with grammar rules) for a given sub-task, so that each sub-task can be uniquely represented. The grammar, which can also include unexpected events, was classified in offline and online scenarios, for both simulated and real robot experiments. Multiclass Support Vector Machines (SVMs) were used offline, while probabilistic SVMs were used online to give temporal confidence to the introspection result. The contribution of our work is a generalizable online semantic scheme that enables a robot to understand its high-level state, whether nominal or abnormal. It is shown to work in offline and online scenarios for a particularly challenging contact task: snap assemblies. We perform the snap assembly in simulated and real one-arm experiments and in a simulated two-arm experiment. This verification mechanism can be used by high-level planners or reasoning systems to enable intelligent failure recovery or to determine the next most optimal manipulation skill to use.
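The segment-and-encode idea in the abstract can be illustrated with a minimal sketch. It is not the authors' encoding: the fixed window length, the `flat_tol` threshold, the three labels (`up`, `down`, `flat`), and the sample force trace are all assumptions chosen for illustration. The sketch cuts one wrench axis into windows, labels each window by its fitted gradient, and merges repeated labels into a short "sentence". The SVM classification step mentioned in the abstract is not shown.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of values against their sample index."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((i - x_bar) * (v - y_bar) for i, v in enumerate(values))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den


def encode_axis(signal, window=4, flat_tol=0.1):
    """Segment one wrench axis into fixed windows and label each by gradient.

    window (>= 2) and flat_tol are hypothetical tuning values.
    """
    words = []
    for start in range(0, len(signal) - window + 1, window):
        g = slope(signal[start:start + window])
        if g > flat_tol:
            words.append("up")
        elif g < -flat_tol:
            words.append("down")
        else:
            words.append("flat")
    return words


def compress(words):
    """Merge consecutive repeats so the sentence lists each run once."""
    out = []
    for w in words:
        if not out or out[-1] != w:
            out.append(w)
    return out


# Made-up force trace along one axis: idle, rising contact force, release.
fz = [0.0, 0.02, -0.01, 0.01, 0.5, 1.0, 1.5, 2.0, 2.0, 1.4, 0.8, 0.2]
sentence = compress(encode_axis(fz))
print(sentence)
```

In a fuller pipeline, sentences like this one, computed per axis and per sub-task, would serve as the features passed to a classifier.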
