Yunlong Du, Wenwei Kuang

Robot Introspection via Wrench-based Action Grammars

Sep 16, 2016

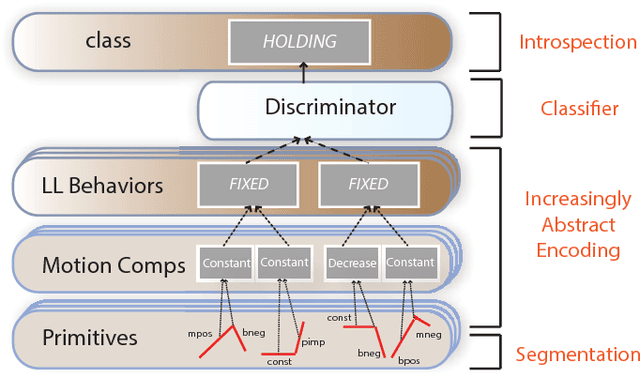

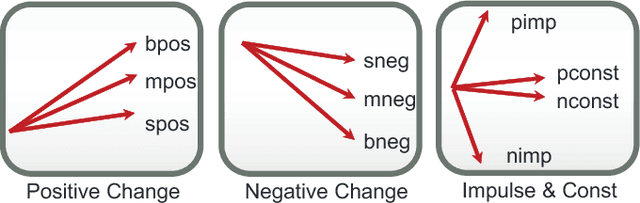

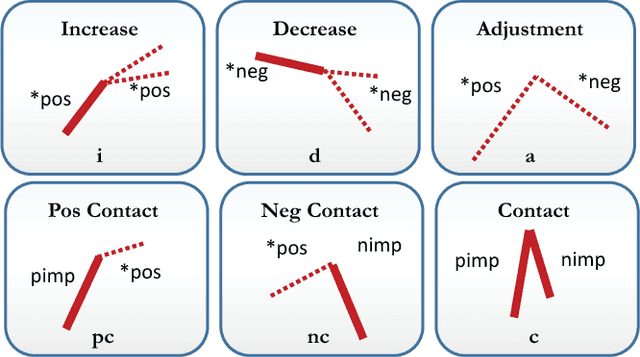

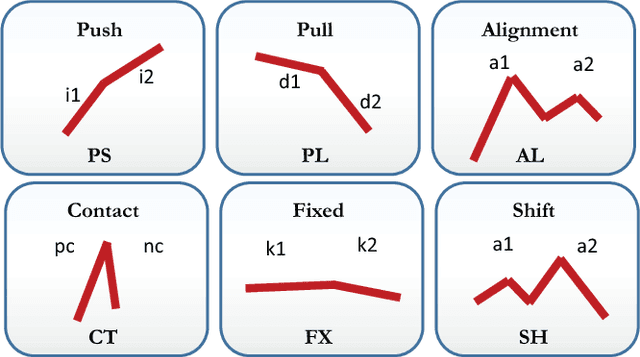

Abstract: Robotic failure is all too common in unstructured robot tasks. Despite well-designed controllers, robots often fail due to unexpected events. How do robots measure unexpected events? Many do not. Most robots are driven by the sense-plan-act paradigm; more recently, however, robots have begun working within a sense-plan-act-verify paradigm. In this work we present a principled methodology to bootstrap robot introspection for contact tasks. In effect, we are trying to answer the question: what did the robot do? To this end, we hypothesize that all noisy wrench data inherently contains patterns that can be effectively represented by a vocabulary. The vocabulary is generated by meaningfully segmenting the data and then encoding it. When the wrench information represents a sequence of sub-tasks, we can think of the vocabulary as forming sets of words or sentences, such that each sub-task is uniquely represented by a word set. Such sets can be classified using statistical or machine learning techniques. We use SVMs and Mondrian Forests to classify contact tasks, both in simulation and on real robots, in single- and dual-arm scenarios, showing the general robustness of the approach. The contribution of our work is a simple but generalizable semantic scheme that enables a robot to understand its high-level state. This verification mechanism can also provide feedback to high-level planners or reasoning systems that use semantic descriptors. The code, data, and other supporting documentation can be found at: http://www.juanrojas.net/2017icra_wrench_introspection.
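The segment, encode, and classify pipeline described in the abstract can be illustrated with a toy sketch. It rests on several assumptions that are not from the paper: a single scalar force channel instead of the full six-axis wrench, a three-word vocabulary (`inc`, `dec`, `const`) chosen purely for illustration, segmentation by slope sign with a hypothetical threshold `eps`, and a nearest-centroid classifier swapped in for the SVMs and Mondrian Forests the authors use. All function names and the force traces are hypothetical and made up; this is not the authors' implementation.

```python
from collections import Counter
from typing import Dict, List, Sequence

# Hypothetical vocabulary: one word per segment trend (illustrative only).
VOCAB = ["inc", "dec", "const"]


def encode(signal: Sequence[float], eps: float = 0.05) -> List[str]:
    """Segment a 1-D force trace and encode each segment as one word.

    Each step is labeled from its slope ('const' if |slope| <= eps,
    else 'inc' or 'dec'); consecutive steps with the same label are
    merged, so each run of equal labels becomes a single word.
    """
    words: List[str] = []
    for a, b in zip(signal, signal[1:]):
        slope = b - a
        label = "const" if abs(slope) <= eps else ("inc" if slope > 0 else "dec")
        if not words or words[-1] != label:
            words.append(label)
    return words


def bag_of_words(words: Sequence[str], vocab: Sequence[str]) -> List[float]:
    """Normalized word histogram (the sub-task's 'word set') over vocab."""
    counts = Counter(words)
    total = sum(counts[w] for w in vocab) or 1  # avoid division by zero
    return [counts[w] / total for w in vocab]


def train_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the histograms of each labeled sub-task."""
    return {
        label: [sum(col) / len(hists) for col in zip(*hists)]
        for label, hists in examples.items()
    }


def classify(hist: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Return the label whose centroid has the smallest squared distance."""
    return min(
        centroids,
        key=lambda lbl: sum((h - c) ** 2 for h, c in zip(hist, centroids[lbl])),
    )


# Toy training traces, one per hypothetical sub-task (made-up data).
training = {
    "approach": [bag_of_words(encode([0.0, 0.0, 0.0, 0.0]), VOCAB)],
    "insert": [bag_of_words(encode([0.0, 0.5, 1.0, 1.5]), VOCAB)],
    "retract": [bag_of_words(encode([1.5, 1.0, 0.5, 0.0]), VOCAB)],
}
centroids = train_centroids(training)

# A new trace with a brief plateau during a rising-force contact.
query = bag_of_words(encode([0.0, 0.5, 1.0, 1.0, 1.5, 2.0]), VOCAB)
label = classify(query, centroids)
print(label)  # insert
```

The query encodes to `["inc", "const", "inc"]`, whose histogram lies closest to the rising-force centroid. A bag-of-words histogram discards word order; keeping ordered n-grams of words would be one way to retain more of the "sentence" structure the abstract describes.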
