Longxin Chen

Dynamic Interaction Probabilistic Movement Primitives

Jan 30, 2019

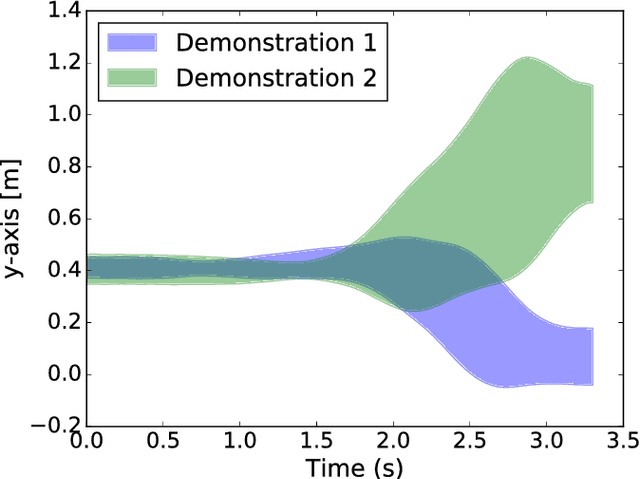

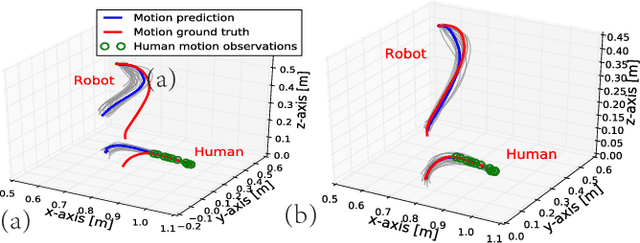

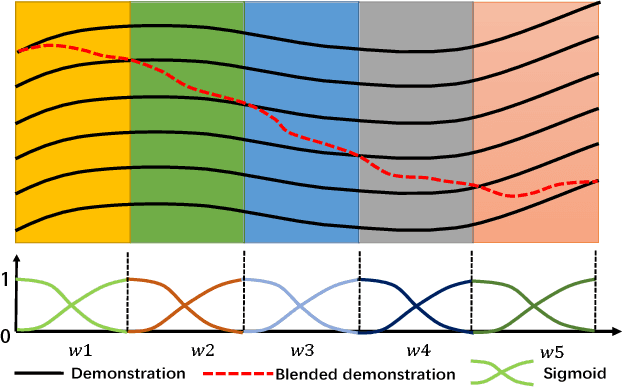

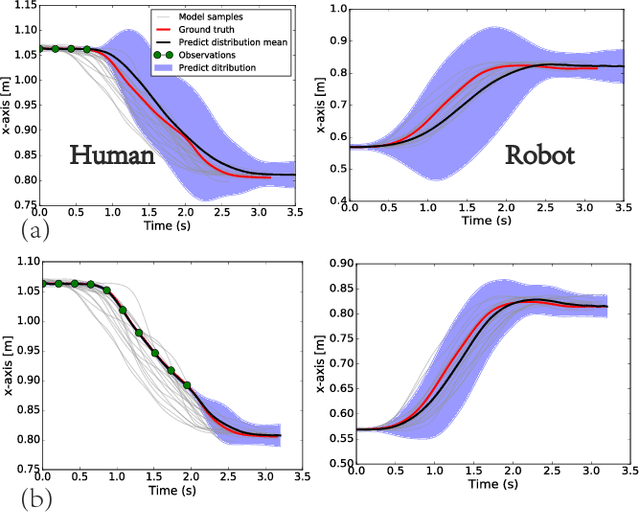

Abstract: Human-robot collaboration is on the rise. To assist humans efficiently and smoothly, robots must properly anticipate a human's intention, which requires prediction models with greater accuracy and responsiveness. This work builds on Interaction Movement Primitives with phase estimation and reformulates the framework to use dynamic human-motion observations that constantly update anticipatory motions. The original framework considers only a single, fixed-duration static human observation, which is used to perform only one anticipatory motion. Dynamic observations, with built-in phase estimation, yield a series of updated robot motion distributions. Co-activation is performed between the existing and the newest most probable robot motion distributions. This results in smooth anticipatory robot motions that are highly accurate and more responsive.
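As a rough illustration of the co-activation step, the sketch below blends an existing and a newly predicted robot motion distribution as a weighted product of Gaussians, which is a common way to co-activate movement primitives. It is a minimal one-dimensional sketch under stated assumptions, not the paper's implementation: the function name `coactivate`, the activation weights `alpha_old` and `alpha_new`, and the scalar per-time-step treatment are all hypothetical.

```python
def coactivate(mean_old, var_old, mean_new, var_new, alpha_old=1.0, alpha_new=1.0):
    """Blend two 1-D Gaussians as a weighted product of Gaussians.

    The alphas are hypothetical activation weights; setting one to 0
    switches that distribution off entirely.
    """
    # Each distribution contributes precision (inverse variance) scaled by its activation.
    prec_old = alpha_old / var_old
    prec_new = alpha_new / var_new
    var = 1.0 / (prec_old + prec_new)
    # The blended mean is the precision-weighted average of the two means.
    mean = var * (prec_old * mean_old + prec_new * mean_new)
    return mean, var


# Usage: shift activation gradually from the existing prediction to the newest one.
existing = (0.0, 1.0)   # (mean, variance) of the motion currently being executed
newest = (2.0, 1.0)     # (mean, variance) after the latest human observation
blend_path = []
for step in range(1, 5):
    a = step / 4.0      # activation of the newest distribution grows from 0.25 to 1.0
    mean, var = coactivate(*existing, *newest, alpha_old=1.0 - a, alpha_new=a)
    blend_path.append(mean)
```

Ramping the activations over a few control steps, rather than switching instantly, is what keeps the blended mean from jumping when a new prediction arrives.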

Endowing Robots with Longer-term Autonomy by Recovering from External Disturbances in Manipulation through Grounded Anomaly Classification and Recovery Policies

Sep 11, 2018

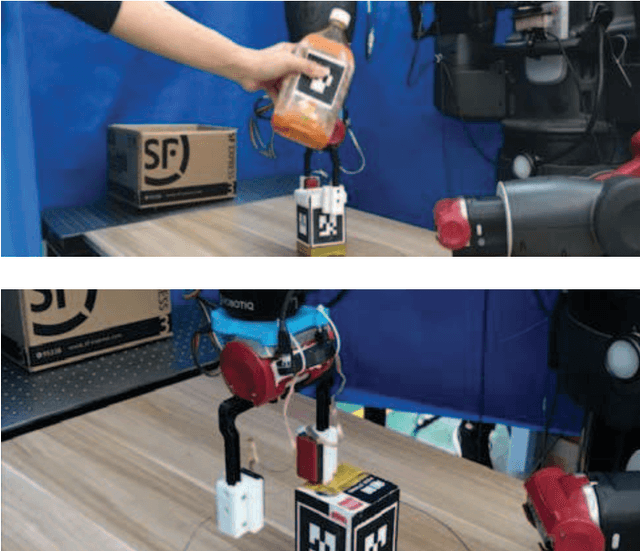

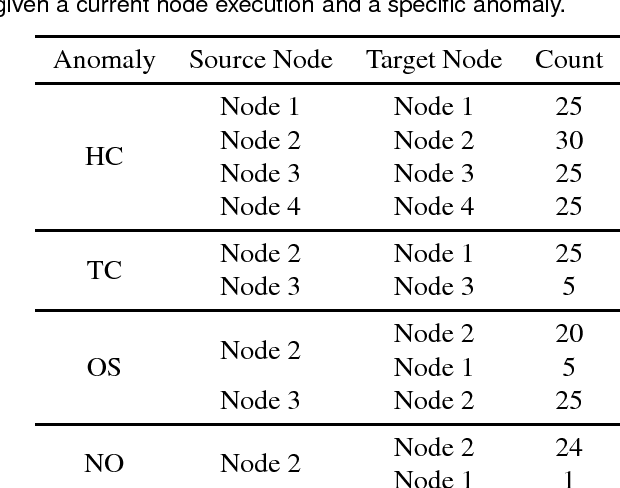

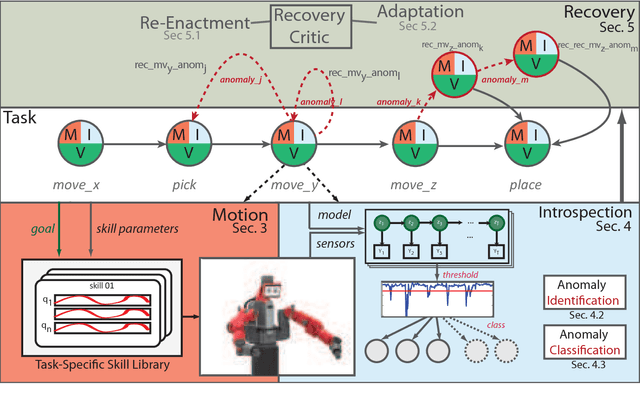

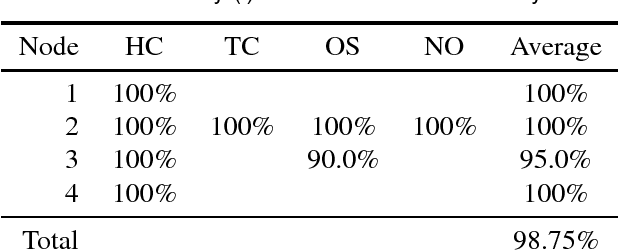

Abstract: Robot manipulation is increasingly poised to interact with humans in shared workspaces. Despite increasingly robust manipulation and control algorithms, failure modes continue to exist whenever models do not capture the dynamics of the unstructured environment. To achieve longer-term horizons in robot automation, robots must develop introspection and recovery abilities. We contribute a set of recovery policies to deal with anomalies produced by external disturbances, as well as anomaly classification through non-parametric statistics with memoized variational inference with scalable adaptation. A recovery critic sits atop a tightly integrated, graph-based online motion-generation and introspection system that resolves a wide range of anomalous situations. Policies, skills, and introspection models are learned incrementally and contextually in a task. Two task-level recovery policies, re-enactment and adaptation, resolve accidental and persistent anomalies, respectively. The introspection system uses non-parametric priors along with Markov jump linear systems and memoized variational inference with scalable adaptation to learn a model from the data. In extensive real-robot experiments, various strenuous anomalous conditions were induced and resolved at different phases of a task and in different combinations. The system executed around-the-clock introspection and recovery and even elicited self-recovery when misclassifications occurred.

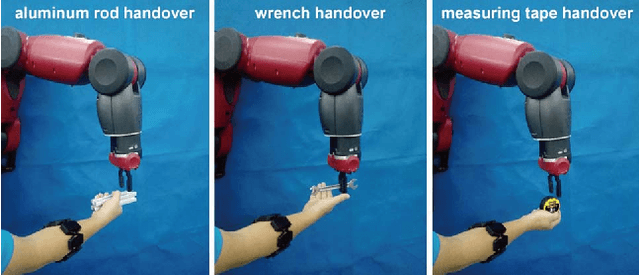

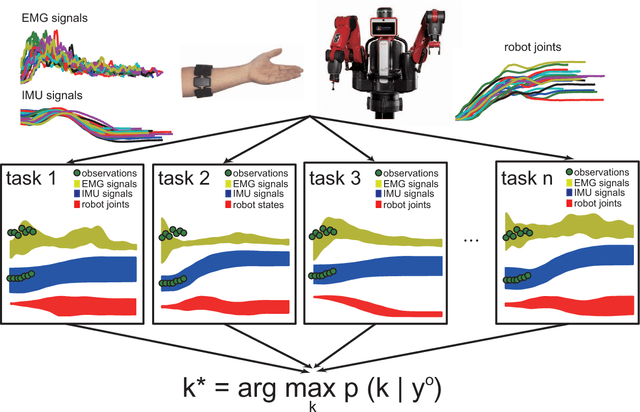

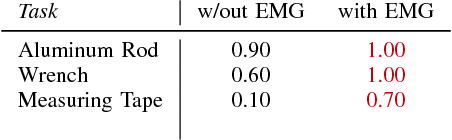

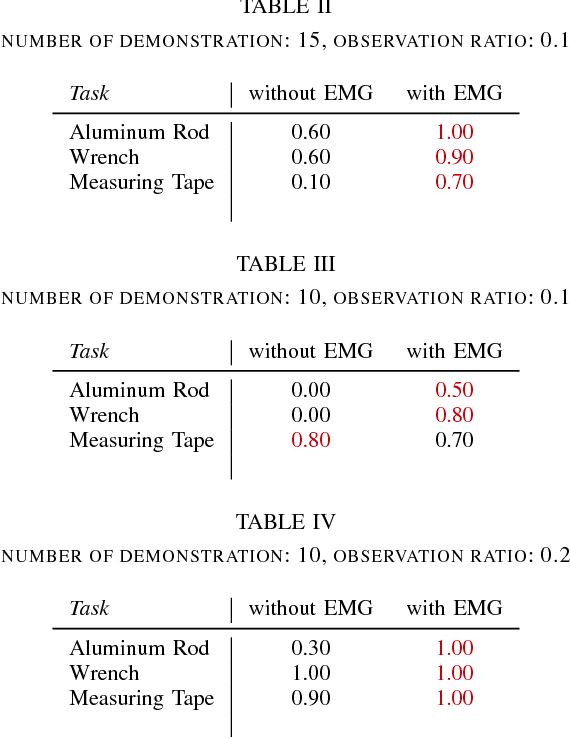

Learning Human-Robot Collaboration Insights through the Integration of Muscle Activity in Interaction Motion Models

Aug 08, 2017

Abstract: Recent progress in human-robot collaboration makes fast and fluid interactions possible, even when human observations are partial and occluded. Methods like Interaction Probabilistic Movement Primitives (ProMP) model human trajectories through motion capture systems. However, such a representation does not properly model tasks where similar motions handle different objects. Under current approaches, a robot would not adapt its pose and dynamics for proper handling. We integrate Electromyography (EMG) into the Interaction ProMP framework and use muscular signals to augment the human observation representation. The contribution of our paper is increased task discernment when trajectories are similar but tools are different and require the robot to adjust its pose for proper handling. Interaction ProMPs are used with an augmented vector that integrates muscle activity. Augmented time-normalized trajectories are used in training to learn correlation parameters, and robot motions are predicted by finding the best weight combination and temporal scaling for a task. Collaborative single-task scenarios with similar motions but different objects were used and compared. In one experiment only joint angles were recorded; in the other, EMG signals were additionally integrated. Task recognition was computed for both tasks. Observation state vectors augmented with EMG signals were able to completely identify differences across tasks, while the baseline method failed every time. Integrating EMG signals into collaborative tasks significantly increases the ability of the system to recognize nuances in the tasks that are otherwise imperceptible, up to 74.6% in our studies. Furthermore, the integration of EMG signals for collaboration also opens the door to a wide class of human-robot physical interactions based on haptic communication that has been largely unexploited in the field.
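To illustrate how an augmented observation vector can change the predicted robot motion, the sketch below conditions a joint Gaussian over human and robot weights on one scalar observation at a time, using the standard Gaussian conditioning update. It is a simplified sketch under stated assumptions rather than the paper's method: basis functions and temporal scaling are omitted, each signal is reduced to a single weight, and the function name `condition_on_observation` and all numeric values are hypothetical.

```python
def condition_on_observation(mean, cov, h, y, noise_var):
    """Condition w ~ N(mean, cov) on one scalar observation y = h . w + noise."""
    n = len(mean)
    # cov_h = Sigma h; since Sigma is symmetric this also equals (h^T Sigma)^T.
    cov_h = [sum(cov[i][j] * h[j] for j in range(n)) for i in range(n)]
    # Innovation variance: h^T Sigma h + observation noise.
    s = sum(h[i] * cov_h[i] for i in range(n)) + noise_var
    gain = [c / s for c in cov_h]
    residual = y - sum(h[i] * mean[i] for i in range(n))
    new_mean = [mean[i] + gain[i] * residual for i in range(n)]
    new_cov = [[cov[i][j] - gain[i] * cov_h[j] for j in range(n)] for i in range(n)]
    return new_mean, new_cov


# Hypothetical weight vector: [human joint angle, human EMG, robot joint angle].
prior_mean = [0.0, 0.0, 0.0]
prior_cov = [
    [1.0, 0.0, 0.8],
    [0.0, 1.0, 0.5],
    [0.8, 0.5, 1.0],
]

# Baseline: observe only the human joint angle.
joint_only_mean, joint_only_cov = condition_on_observation(
    prior_mean, prior_cov, h=[1.0, 0.0, 0.0], y=1.0, noise_var=0.0
)

# Augmented: additionally observe the EMG channel.
augmented_mean, augmented_cov = condition_on_observation(
    joint_only_mean, joint_only_cov, h=[0.0, 1.0, 0.0], y=1.0, noise_var=0.0
)
```

In this toy setup the robot weight (the last entry of the mean) moves further once the EMG observation is included, because the assumed prior covariance correlates muscle activity with the robot's motion. Two tasks with identical joint trajectories but different EMG readings would therefore yield different robot predictions.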
