Shoichi Masui

Unsupervised Discovery of Long-Term Spatiotemporal Periodic Workflows in Human Activities

Nov 18, 2025

Abstract: Periodic human activities with implicit workflows are common in manufacturing, sports, and daily life. While short-term periodic activities -- characterized by simple structures and high-contrast patterns -- have been widely studied, long-term periodic workflows with low-contrast patterns remain largely underexplored. To bridge this gap, we introduce the first benchmark comprising 580 multimodal human activity sequences featuring long-term periodic workflows. The benchmark supports three evaluation tasks aligned with real-world applications: unsupervised periodic workflow detection, task completion tracking, and procedural anomaly detection. We also propose a lightweight, training-free baseline for modeling diverse periodic workflow patterns. Experiments show that: (i) our benchmark presents significant challenges to both unsupervised periodic detection methods and zero-shot approaches based on powerful large language models (LLMs); (ii) our baseline outperforms competing methods by a substantial margin in all evaluation tasks; and (iii) in real-world applications, our baseline demonstrates deployment advantages on par with traditional supervised workflow detection approaches, eliminating the need for annotation and retraining. Our project page is https://sites.google.com/view/periodicworkflow.

FieldWorkArena: Agentic AI Benchmark for Real Field Work Tasks

May 26, 2025

Abstract: This paper proposes FieldWorkArena, a benchmark for agentic AI targeting real-world field work. With demand for agentic AI increasing, agents are expected to monitor and report safety and health incidents, as well as manufacturing-related incidents, that may occur in real-world work environments. Existing agentic AI benchmarks have been limited to evaluating web tasks and are insufficient for evaluating agents in real-world work environments, where complexity increases significantly. In this paper, we define a new action space that agentic AI should possess for real-world work environment benchmarks and improve the evaluation function from previous methods to assess the performance of agentic AI in diverse real-world tasks. The dataset consists of videos captured on-site and documents actually used in factories and warehouses, and tasks were created based on interviews with on-site workers and managers. Evaluation results confirmed that performance evaluation considering the characteristics of multimodal LLMs (MLLMs) such as GPT-4o is feasible. Additionally, the effectiveness and limitations of the proposed new evaluation method were identified. The complete dataset (HuggingFace) and evaluation program (GitHub) can be downloaded from the following website: https://en-documents.research.global.fujitsu.com/fieldworkarena/.

A Unified Multi-view Multi-person Tracking Framework

Feb 08, 2023

Abstract: Although there has been significant development in 3D Multi-view Multi-person Tracking (3D MM-Tracking), current 3D MM-Tracking frameworks are designed separately for footprint and pose tracking. Specifically, frameworks designed for footprint tracking cannot be utilized in 3D pose tracking, because they directly obtain 3D positions on the ground plane with a homography projection, which is inapplicable to 3D poses above the ground. In contrast, frameworks designed for pose tracking generally isolate multi-view and multi-frame associations and may not be robust to footprint tracking, since footprint tracking utilizes fewer key points than pose tracking, which weakens multi-view association cues in a single frame. This study presents a Unified Multi-view Multi-person Tracking framework to bridge the gap between footprint tracking and pose tracking. Without additional modifications, the framework can adopt monocular 2D bounding boxes and 2D poses as the input to produce robust 3D trajectories for multiple persons. Importantly, multi-frame and multi-view information are jointly employed to improve the performance of association and triangulation. The effectiveness of our framework is verified by achieving state-of-the-art performance on the Campus and Shelf datasets for 3D pose tracking, and comparable results on the WILDTRACK and MMPTRACK datasets for 3D footprint tracking.

* Accepted to Computational Visual Media
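The homography projection mentioned in the abstract above can be illustrated with a minimal sketch. It assumes a known 3x3 homography `H` from image pixels to ground-plane coordinates; the function name `project_to_ground` and the example matrix are hypothetical and not taken from the paper. The sketch also shows why the approach only applies to points on the ground: the mapping is valid only for pixels that image the plane itself.

```python
def project_to_ground(H, u, v):
    """Map an image pixel (u, v) to ground-plane coordinates using a 3x3 homography H.

    H is a row-major list of lists. The result is only meaningful for pixels
    that depict a point lying on the ground plane (e.g. a footprint).
    """
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("pixel maps to a point at infinity")
    # Divide by the homogeneous coordinate to get Euclidean ground coordinates.
    return (x / w, y / w)


# Illustrative homography (made-up values, not a calibrated camera).
H_example = [
    [2.0, 0.0, 1.0],
    [0.0, 2.0, 3.0],
    [0.0, 0.0, 2.0],
]
ground_xy = project_to_ground(H_example, 4.0, 6.0)
```

A head or hand keypoint passed through the same function would land at the wrong ground location, which is the limitation the abstract refers to for 3D poses above the ground.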

The Second-place Solution for CVPR 2022 SoccerNet Tracking Challenge

Dec 06, 2022

Abstract: This is our second-place solution for the CVPR 2022 SoccerNet Tracking Challenge. Our method mainly includes two steps: online short-term tracking using our Cascaded Buffer-IoU (C-BIoU) Tracker, and offline long-term tracking using appearance features and hierarchical clustering. Online tracking yielded HOTA scores near 90, and offline tracking further improved HOTA scores to around 93.2.

The Second-place Solution for ECCV 2022 Multiple People Tracking in Group Dance Challenge

Dec 06, 2022

Abstract: This is our second-place solution for the ECCV 2022 Multiple People Tracking in Group Dance Challenge. Our method mainly includes two steps: online short-term tracking using our Cascaded Buffer-IoU (C-BIoU) Tracker, and offline long-term tracking using appearance features and hierarchical clustering. Our C-BIoU tracker adds buffers to expand the matching space of detections and tracks, which mitigates the effect of irregular motions in two ways: it directly matches identical but non-overlapping detections and tracks in adjacent frames, and it compensates for the motion estimation bias in the matching space. In addition, to reduce the risk of overexpanding the matching space, cascaded matching is employed: first matching alive tracks and detections with a small buffer, and then matching the remaining unmatched tracks and detections with a large buffer. After using our C-BIoU tracker for online tracking, we applied the offline refinement introduced by ReMOTS.

Hard to Track Objects with Irregular Motions and Similar Appearances? Make It Easier by Buffering the Matching Space

Dec 06, 2022

Abstract: We propose a Cascaded Buffered IoU (C-BIoU) tracker to track multiple objects that have irregular motions and indistinguishable appearances. When appearance features are unreliable and geometric features are confused by irregular motions, applying conventional Multiple Object Tracking (MOT) methods may generate unsatisfactory results. To address this issue, our C-BIoU tracker adds buffers to expand the matching space of detections and tracks, which mitigates the effect of irregular motions in two ways: it directly matches identical but non-overlapping detections and tracks in adjacent frames, and it compensates for the motion estimation bias in the matching space. In addition, to reduce the risk of overexpanding the matching space, cascaded matching is employed: first matching alive tracks and detections with a small buffer, and then matching the remaining unmatched tracks and detections with a large buffer. Despite its simplicity, our C-BIoU tracker works surprisingly well and achieves state-of-the-art results on MOT datasets that focus on irregular motions and indistinguishable appearances. Moreover, the C-BIoU tracker is the dominant component of our second-place solutions in the CVPR'22 SoccerNet MOT and ECCV'22 MOTComplex DanceTrack challenges. Finally, we analyze the limitations of our C-BIoU tracker in ablation studies and discuss its application scope.

* Accepted to WACV 2023. arXiv admin note: text overlap with arXiv:2211.13509
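The buffering and cascaded matching described in the abstract above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the buffer grows each box by a fraction of its own width and height, it uses greedy matching where a real tracker would more likely use an optimal assignment solver, and the buffer sizes (`0.3`, `0.5`) and threshold (`0.1`) are illustrative values. All function names are hypothetical.

```python
def expand(box, b):
    """Grow an (x1, y1, x2, y2) box by a fraction b of its width/height on each side."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    return (x1 - b * w, y1 - b * h, x2 + b * w, y2 + b * h)


def iou(a, b):
    """Plain intersection-over-union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def buffered_iou(a, b, buf):
    """IoU computed after expanding both boxes by the same buffer ratio."""
    return iou(expand(a, buf), expand(b, buf))


def greedy_match(tracks, dets, track_ids, det_ids, buf, thresh):
    """Greedily pair tracks and detections by descending buffered IoU (a simplification)."""
    scored = sorted(
        ((buffered_iou(tracks[t], dets[d], buf), t, d) for t in track_ids for d in det_ids),
        reverse=True,
    )
    matches, used_t, used_d = [], set(), set()
    for score, t, d in scored:
        if score < thresh:
            break  # remaining pairs score even lower
        if t in used_t or d in used_d:
            continue
        matches.append((t, d))
        used_t.add(t)
        used_d.add(d)
    return matches


def cascaded_match(tracks, dets, small=0.3, large=0.5, thresh=0.1):
    """Stage 1: small buffer on everything. Stage 2: large buffer on what is left."""
    t_ids, d_ids = list(range(len(tracks))), list(range(len(dets)))
    first = greedy_match(tracks, dets, t_ids, d_ids, small, thresh)
    matched_t = {t for t, _ in first}
    matched_d = {d for _, d in first}
    rest_t = [t for t in t_ids if t not in matched_t]
    rest_d = [d for d in d_ids if d not in matched_d]
    second = greedy_match(tracks, dets, rest_t, rest_d, large, thresh)
    return first + second


# Track 1 and detection 1 do not overlap at all, so plain IoU cannot match them.
tracks = [(0, 0, 10, 10), (100, 100, 110, 110)]
dets = [(1, 0, 11, 10), (115, 100, 125, 110)]
matches = cascaded_match(tracks, dets)
```

In this toy example the first pair is matched with the small buffer, while the second pair, which has zero plain IoU, is only recovered in the large-buffer stage. Restricting the large buffer to leftover tracks and detections is what limits the risk of overexpanding the matching space.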

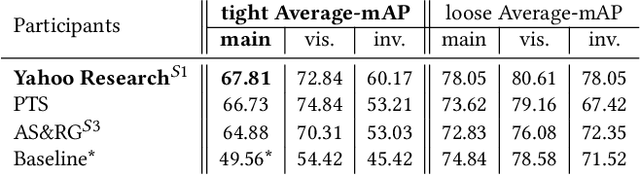

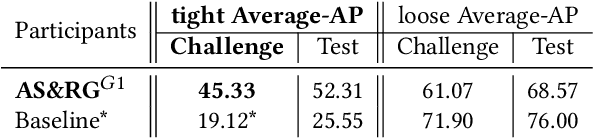

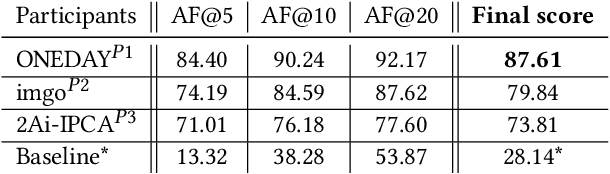

SoccerNet 2022 Challenges Results

Oct 05, 2022

Abstract: The SoccerNet 2022 challenges were the second annual video understanding challenges organized by the SoccerNet team. In 2022, the challenges were composed of 6 vision-based tasks: (1) action spotting, focusing on retrieving action timestamps in long untrimmed videos, (2) replay grounding, focusing on retrieving the live moment of an action shown in a replay, (3) pitch localization, focusing on detecting line and goal part elements, (4) camera calibration, dedicated to retrieving the intrinsic and extrinsic camera parameters, (5) player re-identification, focusing on retrieving the same players across multiple views, and (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams. Compared to last year's challenges, tasks (1-2) had their evaluation metrics redefined to consider tighter temporal accuracies, and tasks (3-6) were novel, including their underlying data and annotations. More information on the tasks, challenges, and leaderboards is available at https://www.soccer-net.org. Baselines and development kits are available at https://github.com/SoccerNet.