Shinnosuke Matsuo

Learning from Majority Label: A Novel Problem in Multi-class Multiple-Instance Learning

Sep 04, 2025

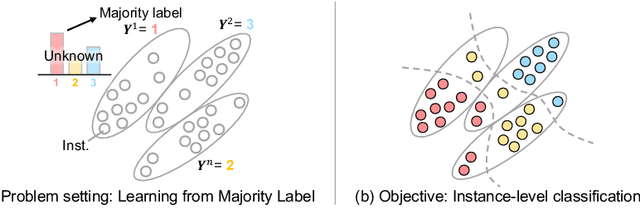

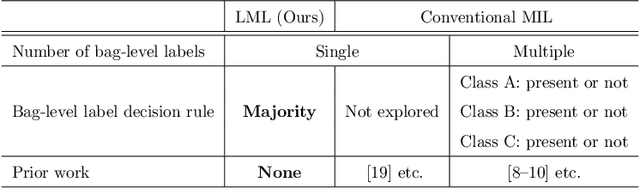

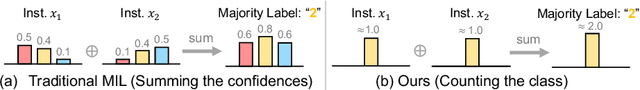

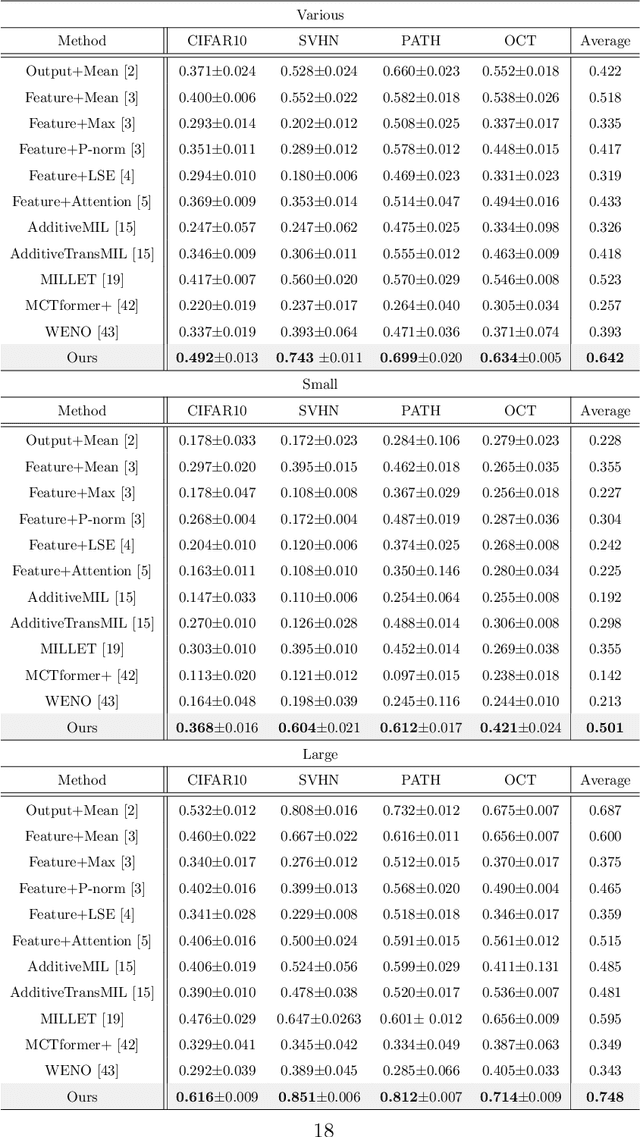

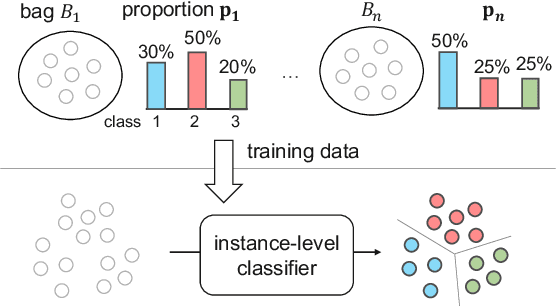

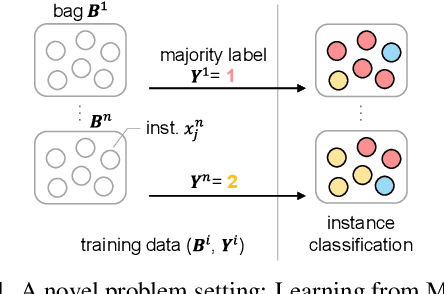

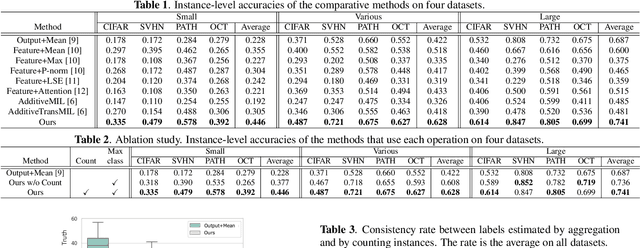

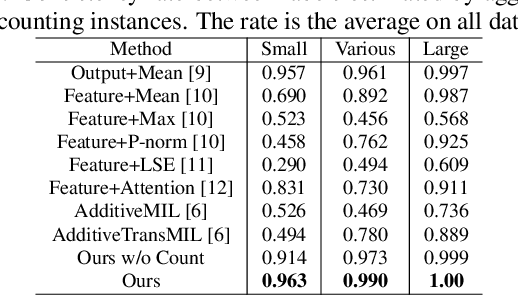

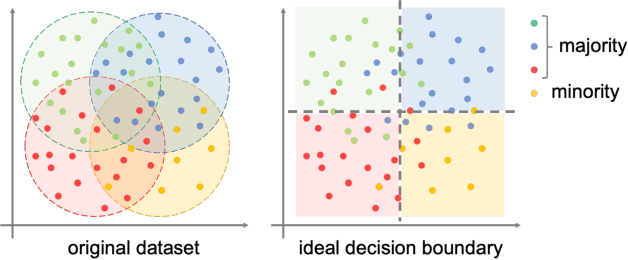

Abstract: The paper proposes a novel multi-class Multiple-Instance Learning (MIL) problem called Learning from Majority Label (LML). In LML, the majority class of instances in a bag is assigned as the bag-level label. The goal of LML is to train a classification model that estimates the class of each instance using the majority label. This problem is valuable in a variety of applications, including pathology image segmentation, political voting prediction, customer sentiment analysis, and environmental monitoring. To solve LML, we propose a Counting Network trained to produce bag-level majority labels, estimated by counting the number of instances in each class. Furthermore, analysis experiments on the characteristics of LML revealed that bags with a high proportion of the majority class facilitate learning. Based on this result, we developed a Majority Proportion Enhancement Module (MPEM) that increases the proportion of the majority class by removing minority class instances within the bags. Experiments demonstrate the superiority of the proposed method on four datasets compared to conventional MIL methods. Moreover, ablation studies confirmed the effectiveness of each module. The code is available at https://github.com/Shiku-Kaito/Learning-from-Majority-Label-A-Novel-Problem-in-Multi-class-Multiple-Instance-Learning.
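To make the bag-level label in LML concrete, the following minimal sketch computes the majority label and the majority proportion of a single bag. The function name, the example bag, and the tie-breaking rule are illustrative assumptions; the abstract does not say how ties are handled, and in the actual problem the instance labels are not observed during training.

```python
from collections import Counter

def majority_label(instance_labels):
    """Return (majority class, proportion of that class) for one bag."""
    counts = Counter(instance_labels)
    top = max(counts.values())
    # Assumption: ties are broken by the smallest class id.
    label = min(c for c, n in counts.items() if n == top)
    return label, top / len(instance_labels)

# Hypothetical bag of seven instances with classes 0, 1, and 2.
bag = [0, 2, 2, 1, 2, 0, 2]
label, proportion = majority_label(bag)

# Dropping one minority instance raises the majority proportion,
# which is the effect the abstract attributes to MPEM.
_, enhanced_proportion = majority_label([0, 2, 2, 2, 0, 2])
```

Here the bag label is class 2, and removing a single minority instance moves the majority proportion from 4/7 to 4/6.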

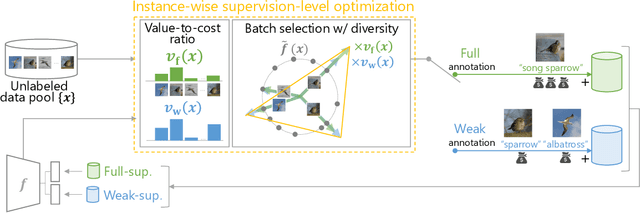

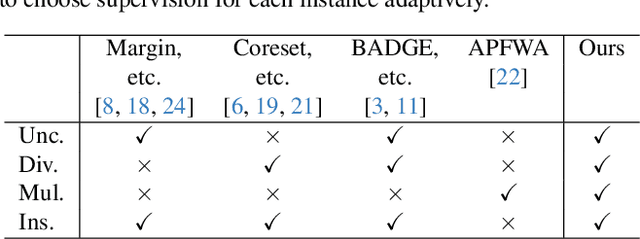

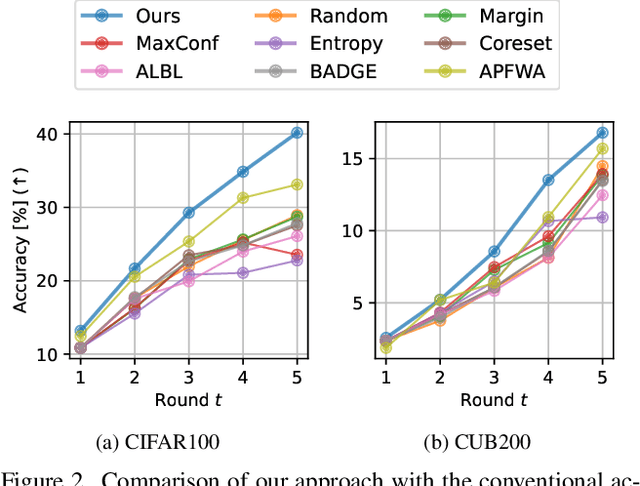

Instance-wise Supervision-level Optimization in Active Learning

Mar 09, 2025

Abstract: Active learning (AL) is a label-efficient machine learning paradigm that focuses on selectively annotating high-value instances to maximize learning efficiency. Its effectiveness can be further enhanced by incorporating weak supervision, which uses rough yet cost-effective annotations instead of exact (i.e., full) but expensive annotations. We introduce a novel AL framework, Instance-wise Supervision-Level Optimization (ISO), which not only selects the instances to annotate but also determines their optimal annotation level within a fixed annotation budget. Its optimization criterion leverages the value-to-cost ratio (VCR) of each instance while ensuring diversity among the selected instances. In classification experiments, ISO consistently outperforms traditional AL methods and surpasses a state-of-the-art AL approach that combines full and weak supervision, achieving higher accuracy at a lower overall cost. The code is available at https://github.com/matsuo-shinnosuke/ISOAL.
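The idea of choosing both the instance and its supervision level under a budget can be sketched with a simple greedy rule on the value-to-cost ratio. This is a simplified illustration and not the ISO criterion itself: it omits the diversity term, and the values, costs, level names, and the function `select_by_vcr` are all hypothetical.

```python
def select_by_vcr(candidates, budget):
    """Greedily pick (instance, level) pairs by value-to-cost ratio.

    Each instance receives at most one supervision level, and the
    total cost never exceeds the budget.
    """
    chosen = {}
    spent = 0.0
    ranked = sorted(candidates, key=lambda c: c["value"] / c["cost"], reverse=True)
    for c in ranked:
        if c["instance"] in chosen:
            continue  # this instance already has a supervision level
        if spent + c["cost"] > budget:
            continue  # annotating it would exceed the budget
        chosen[c["instance"]] = c["level"]
        spent += c["cost"]
    return chosen, spent

# Hypothetical estimated values and annotation costs.
candidates = [
    {"instance": "a", "level": "full", "value": 0.9, "cost": 3.0},
    {"instance": "a", "level": "weak", "value": 0.5, "cost": 1.0},
    {"instance": "b", "level": "full", "value": 0.8, "cost": 2.0},
    {"instance": "b", "level": "weak", "value": 0.2, "cost": 1.0},
    {"instance": "c", "level": "weak", "value": 0.3, "cost": 1.0},
]
chosen, spent = select_by_vcr(candidates, budget=4.0)
```

With these numbers the sketch assigns weak supervision to `a` and `c` and full supervision to `b`, using the whole budget.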

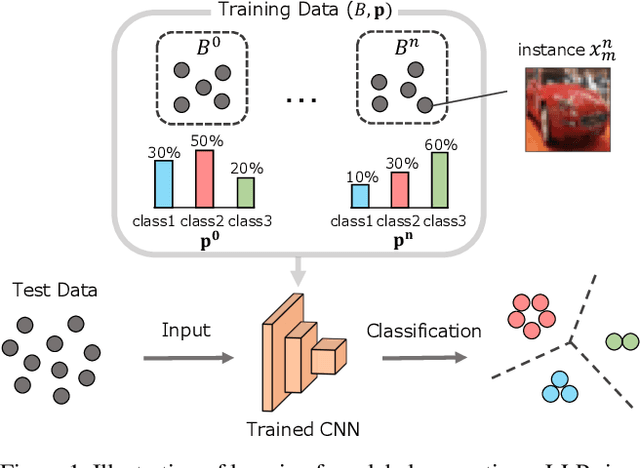

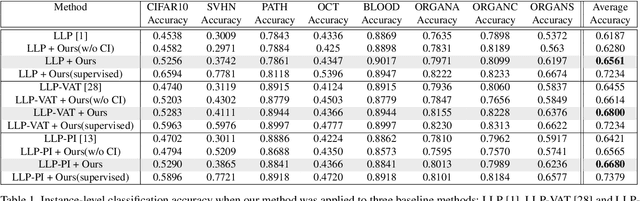

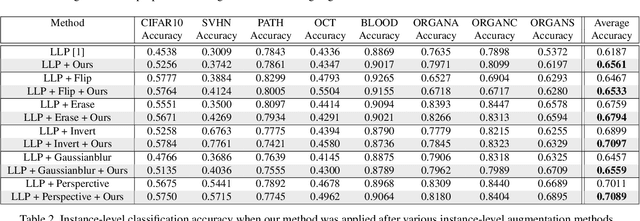

Theoretical Proportion Label Perturbation for Learning from Label Proportions in Large Bags

Aug 26, 2024

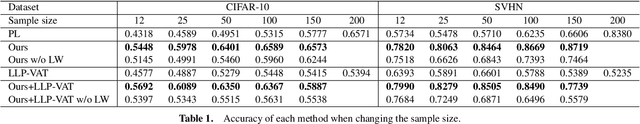

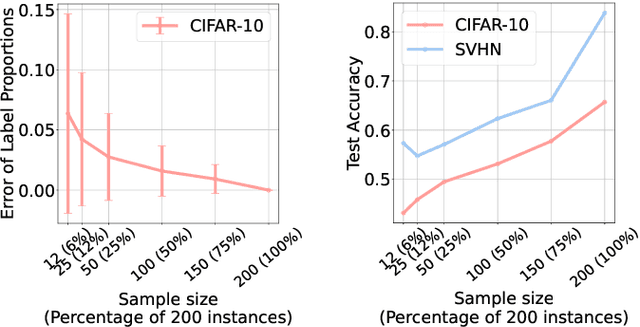

Abstract: Learning from label proportions (LLP) is a kind of weakly supervised learning that trains an instance-level classifier from the label proportions of bags, which are sets of instances, without using instance labels. A challenge in LLP arises when the number of instances in a bag (the bag size) is large, which makes traditional LLP methods difficult to apply due to GPU memory limitations. This study aims to develop an LLP method capable of learning from large bags. In our method, smaller bags (mini-bags) are generated by sampling instances from the large bags (original bags), and these mini-bags are used in place of the original bags. However, the proportion of a mini-bag is unknown and differs from that of the original bag, leading to overfitting. To address this issue, we propose a perturbation method for the proportion labels of sampled mini-bags to mitigate overfitting to noisy label proportions. The perturbation is statistically modeled on the multivariate hypergeometric distribution. Additionally, loss weighting is implemented to reduce the negative impact of proportions sampled from the tail of the distribution. Experimental results demonstrate that the proportion label perturbation and loss weighting achieve classification accuracy comparable to that obtained without sampling. Our code is available at https://github.com/stainlessnight/LLP-LargeBags.
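The mismatch between a mini-bag's proportion and the original bag's proportion can be simulated directly. The sketch below draws mini-bags without replacement, so the class counts follow a multivariate hypergeometric distribution. It uses ground-truth instance labels only to run the simulation (in LLP they are unknown), and the bag composition, sizes, and function name are assumptions made for illustration. It does not implement the proposed perturbation or loss weighting.

```python
import random
from collections import Counter

def sample_mini_bag_proportions(bag_labels, mini_bag_size, num_classes, rng):
    """Sample a mini-bag without replacement and return its class proportions."""
    mini_bag = rng.sample(bag_labels, mini_bag_size)
    counts = Counter(mini_bag)
    return [counts.get(c, 0) / mini_bag_size for c in range(num_classes)]

rng = random.Random(0)

# Hypothetical original bag of 1000 instances with proportions (0.6, 0.3, 0.1).
original_bag = [0] * 600 + [1] * 300 + [2] * 100
original_proportions = [0.6, 0.3, 0.1]

# Each mini-bag of 20 instances has its own, usually different, proportions.
samples = [sample_mini_bag_proportions(original_bag, 20, 3, rng) for _ in range(2000)]

# On average the mini-bag proportions match the original bag.
mean_proportions = [sum(s[c] for s in samples) / len(samples) for c in range(3)]
```

Individual mini-bags deviate from the original proportions, while their average stays close to them, which is why using the original proportion as a mini-bag label amounts to a noisy label.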

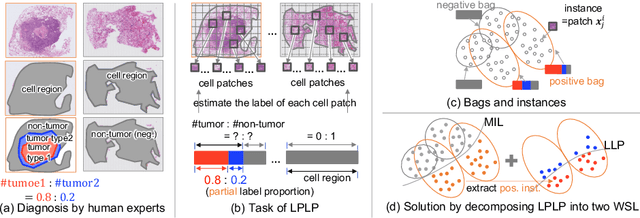

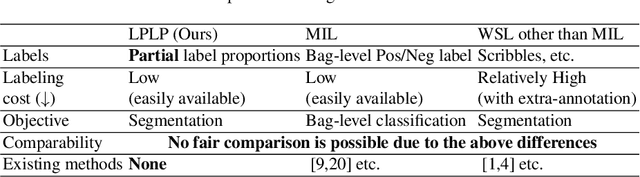

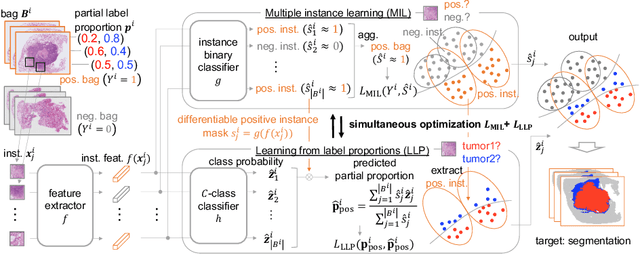

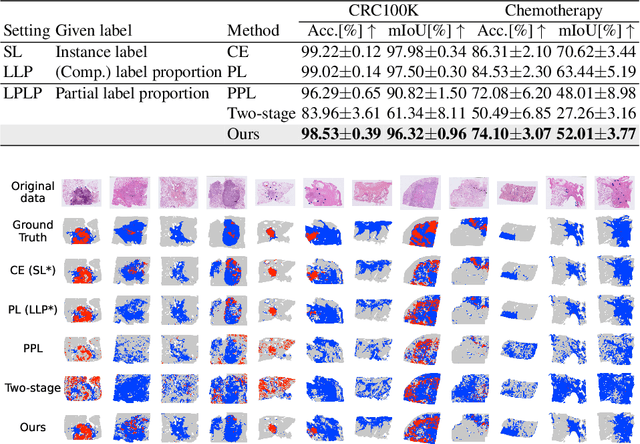

Learning from Partial Label Proportions for Whole Slide Image Segmentation

May 15, 2024

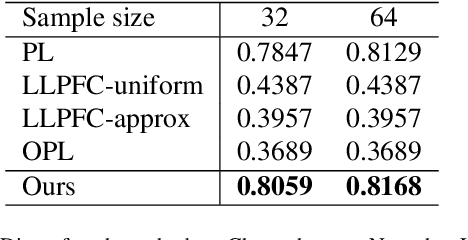

Abstract: In this paper, we address the segmentation of tumor subtypes in whole slide images (WSI) by utilizing incomplete label proportions. Specifically, we utilize "partial" label proportions, which give the proportions among tumor subtypes but do not give the proportion between tumor and non-tumor. Partial label proportions are recorded by pathologists as standard diagnostic information, and we therefore want to use them to build a segmentation model that can classify each WSI patch into one of the tumor subtypes or non-tumor. We call this problem "learning from partial label proportions (LPLP)" and formulate it as a weakly supervised learning problem. We then propose an efficient algorithm for this challenging problem by decomposing it into two weakly supervised learning subproblems: multiple instance learning (MIL) and learning from label proportions (LLP). These subproblems are optimized efficiently in an end-to-end manner. The effectiveness of our algorithm is demonstrated through experiments conducted on two WSI datasets.
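What a partial label proportion keeps and what it discards can be shown with a small example. In this sketch, class 0 is assumed to be non-tumor and classes 1 and 2 are two tumor subtypes; the class encoding, the patch counts, and the function name are hypothetical.

```python
def partial_label_proportions(patch_labels, non_tumor=0, num_classes=3):
    """Proportions among tumor subtypes only, ignoring non-tumor patches."""
    tumor = [y for y in patch_labels if y != non_tumor]
    if not tumor:
        return None  # no tumor patches, so no subtype proportion exists
    return [tumor.count(c) / len(tumor) for c in range(num_classes) if c != non_tumor]

# Two hypothetical slides with very different tumor burden.
slide_a = [0] * 50 + [1] * 30 + [2] * 20   # 50% tumor
slide_b = [0] * 90 + [1] * 6 + [2] * 4     # 10% tumor

partial_a = partial_label_proportions(slide_a)
partial_b = partial_label_proportions(slide_b)
```

Both slides yield the same partial proportions (0.6 and 0.4 for the two subtypes), even though the tumor area differs by a factor of five, so the tumor versus non-tumor split has to be learned from another signal.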

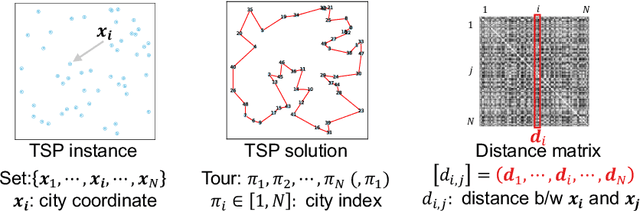

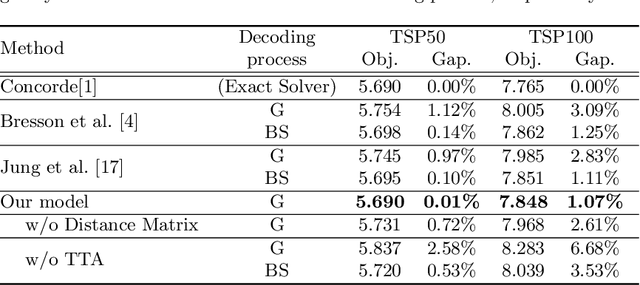

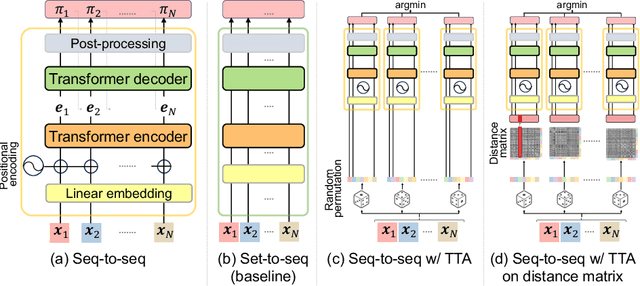

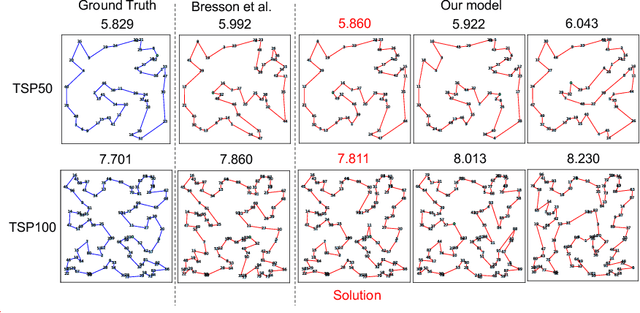

Test-Time Augmentation for Traveling Salesperson Problem

May 08, 2024

Abstract: We propose Test-Time Augmentation (TTA) as an effective technique for addressing combinatorial optimization problems, including the Traveling Salesperson Problem. In general, deep learning models possessing the property of invariance, where the output is uniquely determined regardless of the node indices, have been proposed to learn graph structures efficiently. In contrast, we interpret the permutation of node indices, which exchanges the elements of the distance matrix, as a TTA scheme. The results demonstrate that our method is capable of obtaining shorter solutions than the latest models. Furthermore, we show that the probability of finding a solution closer to an exact solution increases depending on the augmentation size.
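The permutation-based augmentation can be sketched as follows: relabel the nodes, solve the permuted instance, map the tour back to the original indices, and keep the shortest tour found. The learned model is replaced here by a hypothetical nearest-neighbor heuristic that always starts at node 0, chosen only because its output depends on the node indexing; the instance size and the number of augmentations are likewise assumptions.

```python
import math
import random

def tour_length(dist, tour):
    """Length of the closed tour that visits nodes in the given order."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))

def permute_instance(dist, perm):
    """Relabel nodes so that new node i is original node perm[i]."""
    n = len(dist)
    return [[dist[perm[i]][perm[j]] for j in range(n)] for i in range(n)]

def greedy_solver(dist):
    """Hypothetical stand-in for a learned model: nearest neighbor from node 0."""
    n = len(dist)
    tour, visited = [0], {0}
    while len(tour) < n:
        last = tour[-1]
        nxt = min((j for j in range(n) if j not in visited),
                  key=lambda j: dist[last][j])
        tour.append(nxt)
        visited.add(nxt)
    return tour

def solve_with_tta(dist, num_augmentations, rng):
    """Solve the original and several index-permuted copies; keep the best tour."""
    n = len(dist)
    best_tour = greedy_solver(dist)
    best_len = tour_length(dist, best_tour)
    for _ in range(num_augmentations):
        perm = list(range(n))
        rng.shuffle(perm)
        aug_tour = greedy_solver(permute_instance(dist, perm))
        tour = [perm[i] for i in aug_tour]  # map back to original node indices
        length = tour_length(dist, tour)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(12)]
dist = [[math.dist(p, q) for q in points] for p in points]
baseline_len = tour_length(dist, greedy_solver(dist))
tta_tour, tta_len = solve_with_tta(dist, num_augmentations=16, rng=rng)
```

Because the unaugmented solution is always among the candidates, the result can never be longer than the baseline, and more augmentations give more chances of finding a shorter tour.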

Counting Network for Learning from Majority Label

Mar 20, 2024

Abstract: The paper proposes a novel problem in multi-class Multiple-Instance Learning (MIL) called Learning from the Majority Label (LML). In LML, the majority class of instances in a bag is assigned as the bag's label. LML aims to classify instances using bag-level majority classes. This problem is valuable in various applications. Existing MIL methods are unsuitable for LML because they aggregate confidences, which may lead to inconsistency between the bag-level label and the label obtained by counting the number of instances in each class. This may in turn lead to incorrect instance-level classification. We propose a counting network trained to produce bag-level majority labels estimated by counting the number of instances in each class. This ensures that the majority class given by the network output is consistent with the one obtained by counting the instances. Experimental results show that our counting network outperforms conventional MIL methods on four datasets. The code is publicly available at https://github.com/Shiku-Kaito/Counting-Network-for-Learning-from-Majority-Label.
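The inconsistency between aggregating confidences and counting instances can be reproduced with three instances and two classes. The confidence values below are made up for illustration, and the hard count shown here is not differentiable; it only demonstrates the mismatch and is not the counting network itself.

```python
def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)

def label_by_mean_confidence(instance_probs):
    """Bag label from averaged class confidences, as in confidence aggregation."""
    means = [sum(col) / len(instance_probs) for col in zip(*instance_probs)]
    return argmax(means)

def label_by_counting(instance_probs):
    """Bag label from counting the predicted class of each instance."""
    counts = [0] * len(instance_probs[0])
    for probs in instance_probs:
        counts[argmax(probs)] += 1
    return argmax(counts)

# Hypothetical instance-level confidences for classes 0 and 1.
instance_probs = [
    [0.9, 0.1],  # confidently class 0
    [0.4, 0.6],  # weakly class 1
    [0.4, 0.6],  # weakly class 1
]
mean_label = label_by_mean_confidence(instance_probs)
count_label = label_by_counting(instance_probs)
```

Averaging gives class 0 because one confident instance dominates the mean, while counting gives class 1 because two of the three instances are predicted as class 1.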

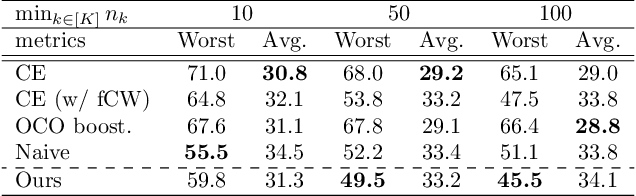

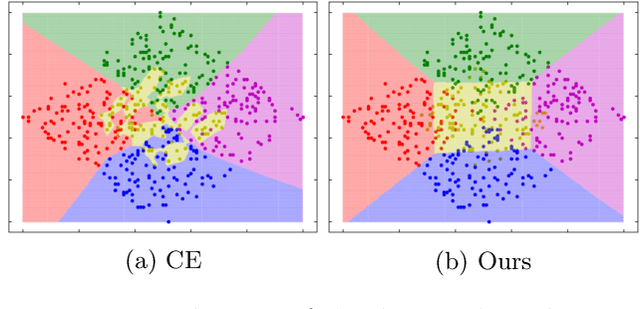

Boosting for Bounding the Worst-class Error

Oct 20, 2023

Abstract: This paper tackles the problem of the worst-class error rate, instead of the standard error rate averaged over all classes. For example, a three-class classification task with class-wise error rates of 10%, 10%, and 40% has a worst-class error rate of 40%, whereas the average is 20% under the class-balanced condition. The worst-class error is important in many applications. For example, in a medical image classification task, it would not be acceptable for the malignant tumor class to have a 40% error rate, while the benign and healthy classes have 10% error rates. We propose a boosting algorithm that guarantees an upper bound of the worst-class training error and derive its generalization bound. Experimental results show that the algorithm lowers worst-class test error rates while avoiding overfitting to the training set.
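The three-class example above can be checked numerically. The sketch below computes class-wise error rates from labels and predictions, then compares the worst-class error with the class-averaged error. The label arrays are constructed by hand to reproduce the 10%, 10%, and 40% rates; this only evaluates the metric and does not implement the boosting algorithm.

```python
def class_wise_error_rates(y_true, y_pred, num_classes):
    """Error rate of each class, measured over the samples of that class."""
    rates = []
    for c in range(num_classes):
        preds = [p for t, p in zip(y_true, y_pred) if t == c]
        errors = sum(1 for p in preds if p != c)
        rates.append(errors / len(preds))
    return rates

# Ten samples per class (class-balanced), with 1, 1, and 4 mistakes.
y_true = [0] * 10 + [1] * 10 + [2] * 10
y_pred = [0] * 9 + [1] + [1] * 9 + [2] + [2] * 6 + [0] * 4

rates = class_wise_error_rates(y_true, y_pred, num_classes=3)
worst_class_error = max(rates)
average_error = sum(rates) / len(rates)
```

The average hides the weak class: it stays near 0.2 while the worst-class error is 0.4.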

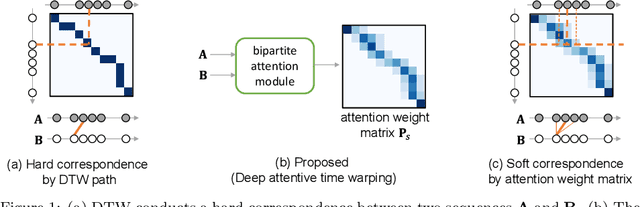

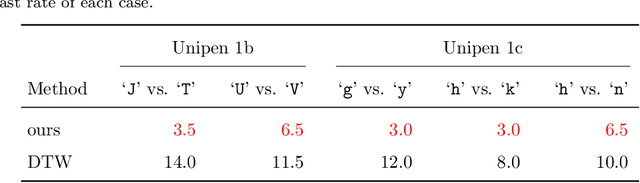

Deep Attentive Time Warping

Sep 13, 2023

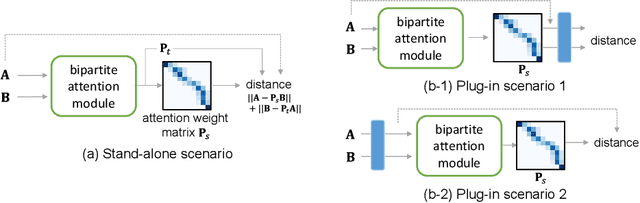

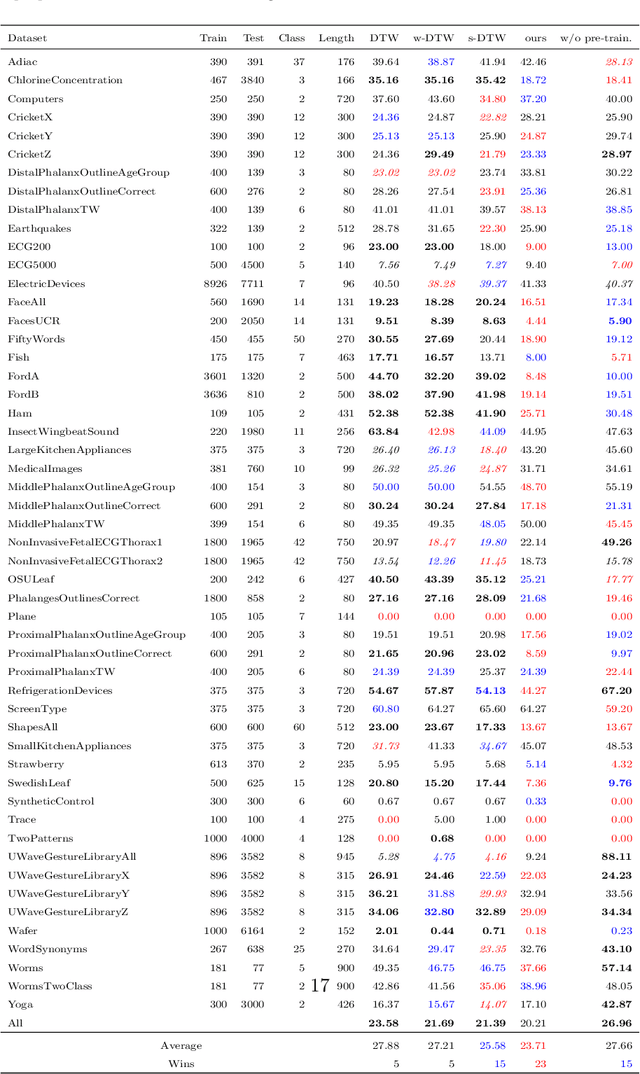

Abstract: Similarity measures for time series are important for time series classification. To handle nonlinear time distortions, Dynamic Time Warping (DTW) has been widely used. However, DTW is not learnable and suffers from a trade-off between robustness against time distortion and discriminative power. In this paper, we propose a neural network model for task-adaptive time warping. Specifically, we use an attention model, called the bipartite attention model, to develop an explicit time warping mechanism with greater distortion invariance. Unlike other learnable models that use DTW for warping, our model predicts all local correspondences between two time series and is trained based on metric learning, which enables it to learn the optimal data-dependent warping for the target task. We also propose pre-training our model using DTW to improve its discriminative power. Extensive experiments demonstrate the superior effectiveness of our model over DTW and its state-of-the-art performance in online signature verification.
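For reference, the classical DTW baseline mentioned above can be written as a short dynamic program. This is the standard textbook recurrence with an absolute-difference local cost, shown only to illustrate how DTW absorbs time distortion; it is not the proposed attention-based model, and the example series are made up.

```python
def dtw_distance(a, b):
    """Classical DTW distance between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] is the best alignment cost of a[:i] and b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a[i-1] is matched to b[j-1] again
                cost[i][j - 1],      # b[j-1] is matched to a[i-1] again
                cost[i - 1][j - 1],  # both sequences advance
            )
    return cost[n][m]

# The second series is the first one with its opening value held for one extra step.
series_a = [0, 1, 2, 3, 2, 1, 0]
series_b = [0, 0, 1, 2, 3, 2, 1, 0]
warped_distance = dtw_distance(series_a, series_b)
```

The two series align perfectly under warping, so the distance is zero even though they differ in length. The recurrence is fixed rather than learned, which is the limitation the abstract points to.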

MixBag: Bag-Level Data Augmentation for Learning from Label Proportions

Aug 17, 2023

Abstract: Learning from label proportions (LLP) is a promising weakly supervised learning problem. In LLP, a set of instances (bag) has label proportions, but no instance-level labels are given. LLP aims to train an instance-level classifier by using the label proportions of the bag. In this paper, we propose a bag-level data augmentation method for LLP called MixBag, based on a key observation from our preliminary experiments: the instance-level classification accuracy improves as the number of labeled bags increases, even though the total number of instances is fixed. We also propose a confidence interval loss, designed based on statistical theory, to use the augmented bags effectively. To the best of our knowledge, this is the first attempt to propose bag-level data augmentation for LLP. The advantage of MixBag is that it can be combined with instance-level data augmentation techniques and with any LLP method that uses the proportion loss. Experimental results demonstrate this advantage and the effectiveness of our method.
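One plausible reading of bag-level augmentation is sketched below: subsample instances from two labeled bags, merge them into a new bag, and assign it the expected label proportion given by the mixing weights. The abstract does not specify the mixing procedure, so the function `mix_bags`, the mixing ratio `gamma`, and the example bags are assumptions. The confidence interval loss is not shown.

```python
import random

def mix_bags(bag_a, prop_a, bag_b, prop_b, gamma, rng):
    """Build a new bag from two bags and return it with its expected proportions.

    Assumes both bags have the same size and that gamma lies in [0, 1].
    """
    size = len(bag_a)
    n_a = round(gamma * size)
    n_b = size - n_a
    mixed = rng.sample(bag_a, n_a) + rng.sample(bag_b, n_b)
    weight = n_a / size
    # The true proportion of the mixed bag is unknown; this is its expectation.
    expected = [weight * pa + (1 - weight) * pb for pa, pb in zip(prop_a, prop_b)]
    return mixed, expected

rng = random.Random(0)

# Hypothetical bags of instance ids with known two-class label proportions.
bag_a = [f"a{i}" for i in range(10)]
bag_b = [f"b{i}" for i in range(10)]
mixed_bag, expected_prop = mix_bags(bag_a, [0.8, 0.2], bag_b, [0.2, 0.8], 0.5, rng)
```

Because the subsampled instances need not match their source bag's proportion exactly, the assigned proportion is only an expectation, which is consistent with the abstract pairing the augmentation with a confidence interval loss.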

Learning from Label Proportion with Online Pseudo-Label Decision by Regret Minimization

Feb 17, 2023

Abstract: This paper proposes a novel and efficient method for Learning from Label Proportions (LLP), whose goal is to train a classifier using only the class label proportions of instance sets, called bags. We propose a novel LLP method based on online pseudo-labeling with regret minimization. In contrast to previous LLP methods, the proposed method works effectively even if the bag sizes are large. We demonstrate the effectiveness of the proposed method on several benchmark datasets.
