Shi Li

SUNY at Buffalo

Learning Longitudinal Health Representations from EHR and Wearable Data

Jan 18, 2026

Abstract:Foundation models trained on electronic health records show strong performance on many clinical prediction tasks but are limited by sparse and irregular documentation. Wearable devices provide dense, continuous physiological signals but lack semantic grounding. Existing methods usually model these data sources separately or combine them through late fusion. We propose a multimodal foundation model that jointly represents electronic health records and wearable data as a continuous-time latent process. The model uses modality-specific encoders and a shared temporal backbone pretrained with self-supervised and cross-modal objectives. This design produces representations that are temporally coherent and clinically grounded. Across forecasting, physiological, and risk modeling tasks, the model outperforms strong electronic health record-only and wearable-only baselines, especially at long horizons and under missing data. These results show that joint electronic health record and wearable pretraining yields more faithful representations of longitudinal health.

Where It Moves, It Matters: Referring Surgical Instrument Segmentation via Motion

Jan 18, 2026

Abstract:Enabling intuitive, language-driven interaction with surgical scenes is a critical step toward intelligent operating rooms and autonomous surgical robotic assistance. However, the task of referring segmentation, localizing surgical instruments based on natural language descriptions, remains underexplored in surgical videos, with existing approaches struggling to generalize due to reliance on static visual cues and predefined instrument names. In this work, we introduce SurgRef, a novel motion-guided framework that grounds free-form language expressions in instrument motion, capturing how tools move and interact across time, rather than what they look like. This allows models to understand and segment instruments even under occlusion, ambiguity, or unfamiliar terminology. To train and evaluate SurgRef, we present Ref-IMotion, a diverse, multi-institutional video dataset with dense spatiotemporal masks and rich motion-centric expressions. SurgRef achieves state-of-the-art accuracy and generalization across surgical procedures, setting a new benchmark for robust, language-driven surgical video segmentation.

A Unified Graph-based Framework for Scalable 3D Tree Reconstruction and Non-Destructive Biomass Estimation from Point Clouds

Jun 18, 2025

Abstract:Estimating forest above-ground biomass (AGB) is crucial for assessing carbon storage and supporting sustainable forest management. The Quantitative Structural Model (QSM) offers a non-destructive approach to AGB estimation through 3D tree structural reconstruction. However, current QSM methods face significant limitations, as they are primarily designed for individual trees, depend on high-quality point cloud data from terrestrial laser scanning (TLS), and require multiple pre-processing steps that hinder scalability and practical deployment. This study presents a novel unified framework that enables end-to-end processing of large-scale point clouds using an innovative graph-based pipeline. The proposed approach seamlessly integrates tree segmentation, leaf-wood separation, and 3D skeletal reconstruction through dedicated graph operations, including pathing and abstracting, for tree topology reasoning. Comprehensive validation was conducted on datasets with varying leaf conditions (leaf-on and leaf-off), spatial scales (tree- and plot-level), and data sources (TLS and UAV-based laser scanning, ULS). Experimental results demonstrate strong performance under challenging conditions, particularly in leaf-on scenarios (~20% relative error) and low-density ULS datasets with partial coverage (~30% relative error). These findings indicate that the proposed framework provides a robust and scalable solution for large-scale, non-destructive AGB estimation. It significantly reduces dependency on specialized pre-processing tools and establishes ULS as a viable alternative to TLS. To our knowledge, this is the first method capable of enabling seamless, end-to-end 3D tree reconstruction at operational scales. This advancement substantially improves the feasibility of QSM-based AGB estimation, paving the way for broader applications in forest inventory and climate change research.

Temporal Entailment Pretraining for Clinical Language Models over EHR Data

Apr 25, 2025

Abstract:Clinical language models have achieved strong performance on downstream tasks by pretraining on domain-specific corpora such as discharge summaries and medical notes. However, most approaches treat the electronic health record as a static document, neglecting the temporally evolving and causally entwined nature of patient trajectories. In this paper, we introduce a novel temporal entailment pretraining objective for language models in the clinical domain. Our method formulates EHR segments as temporally ordered sentence pairs and trains the model to determine whether a later state is entailed by, contradictory to, or neutral with respect to an earlier state. Through this temporally structured pretraining task, models learn to perform latent clinical reasoning over time, improving their ability to generalize across forecasting and diagnosis tasks. We pretrain on a large corpus derived from MIMIC-IV and demonstrate state-of-the-art results on temporal clinical QA, early warning prediction, and disease progression modeling.
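The pair construction described in this abstract can be pictured with a minimal sketch. The `Segment` container and the `ordered_pairs` helper are hypothetical names introduced here for illustration. The abstract does not say how entailment, contradiction, or neutral labels are assigned, so the sketch only builds the temporally ordered (earlier, later) pairs and leaves labeling out.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import combinations
from typing import List, Tuple

# The three classes named in the abstract. How a label is chosen for a
# given pair is NOT specified there, so no labeling logic is sketched.
LABELS = ("entailment", "contradiction", "neutral")


@dataclass(frozen=True)
class Segment:
    """Hypothetical container for one timestamped EHR text segment."""
    time: datetime
    text: str


def ordered_pairs(segments: List[Segment]) -> List[Tuple[str, str]]:
    """Return (earlier_text, later_text) for every strictly ordered pair."""
    ordered = sorted(segments, key=lambda s: s.time)
    # combinations() preserves the sorted order, so `a` never follows `b`;
    # the strict comparison drops pairs that share a timestamp.
    return [(a.text, b.text) for a, b in combinations(ordered, 2) if a.time < b.time]
```

Each returned pair would then be fed to the model as (premise, hypothesis) together with one of the three labels, assuming a standard sentence-pair classification setup.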

ChronoFormer: Time-Aware Transformer Architectures for Structured Clinical Event Modeling

Apr 10, 2025

Abstract:The temporal complexity of electronic health record (EHR) data presents significant challenges for predicting clinical outcomes using machine learning. This paper proposes ChronoFormer, an innovative transformer-based architecture specifically designed to encode and leverage temporal dependencies in longitudinal patient data. ChronoFormer integrates temporal embeddings, hierarchical attention mechanisms, and domain-specific masking techniques. Extensive experiments conducted on three benchmark tasks (mortality prediction, readmission prediction, and long-term comorbidity onset) demonstrate substantial improvements over current state-of-the-art methods. Furthermore, detailed analyses of attention patterns underscore ChronoFormer's capability to capture clinically meaningful long-range temporal relationships.
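As an illustration of what a temporal embedding can look like, the sketch below maps a continuous timestamp to a fixed-length vector using the sinusoidal scheme familiar from transformer positional encodings. This is an assumed, generic scheme, not ChronoFormer's actual embedding, which the abstract does not specify. The function name and the `dim` and `max_period` parameters are hypothetical.

```python
import math
from typing import List


def time_embedding(t_days: float, dim: int = 8, max_period: float = 10000.0) -> List[float]:
    """Sinusoidal embedding of a continuous timestamp.

    Assumed scheme for illustration only; not taken from the paper.
    """
    if dim <= 0 or dim % 2 != 0:
        raise ValueError("dim must be a positive even number")
    half = dim // 2
    out: List[float] = []
    for k in range(half):
        # Frequencies decay geometrically from 1 down toward 1 / max_period.
        freq = max_period ** (-k / half)
        out.append(math.sin(t_days * freq))
        out.append(math.cos(t_days * freq))
    return out
```

Because the input is a real-valued time rather than an integer position, events separated by irregular gaps receive embeddings that reflect the actual elapsed time.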

Exploring Neural Ordinary Differential Equations as Interpretable Healthcare classifiers

Mar 05, 2025

Abstract:Deep Learning has emerged as one of the most significant innovations in machine learning. However, a notable limitation of this field lies in the "black box" decision-making processes, which have led to skepticism within groups like healthcare and scientific communities regarding its applicability. In response, this study introduces an interpretable approach using Neural Ordinary Differential Equations (NODEs), a category of neural network models that exploit the dynamics of differential equations for representation learning. Leveraging their foundation in differential equations, we illustrate the capability of these models to continuously process textual data, marking the first such model of its kind, and thereby proposing a promising direction for future research in this domain. The primary objective of this research is to propose a novel architecture for groups like healthcare that require the predictive capabilities of deep learning while emphasizing the importance of model transparency demonstrated in NODEs.
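For readers unfamiliar with NODEs, the core idea is that a hidden state h evolves according to dh/dt = f(h, t), where f is a learned network, and the output is obtained by integrating from a start time to an end time. The sketch below shows that forward pass with a fixed-step Euler solver. It is a generic illustration, not the architecture from this paper: `euler_odeint` and `decay` are hypothetical names, and `decay` is a hand-written stand-in for the learned dynamics. Practical implementations typically use adaptive solvers.

```python
from typing import Callable, List

Vector = List[float]


def euler_odeint(
    f: Callable[[Vector, float], Vector],
    h0: Vector,
    t0: float,
    t1: float,
    steps: int = 100,
) -> Vector:
    """Integrate dh/dt = f(h, t) from t0 to t1 with fixed-step Euler."""
    h = list(h0)
    dt = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        dh = f(h, t)
        # One Euler update: h <- h + dt * f(h, t)
        h = [hi + dt * di for hi, di in zip(h, dh)]
        t += dt
    return h


def decay(h: Vector, t: float) -> Vector:
    """Toy dynamics dh/dt = -h, standing in for a learned network."""
    return [-hi for hi in h]
```

With the toy dynamics, `euler_odeint(decay, [1.0], 0.0, 1.0, steps=1000)` approximates exp(-1), which gives a quick sanity check on the solver.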

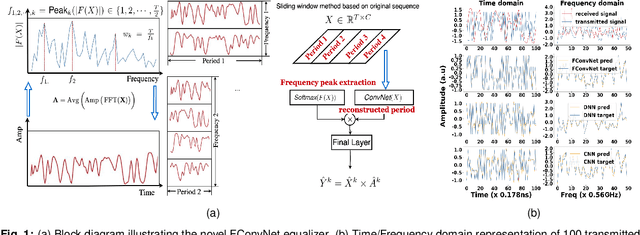

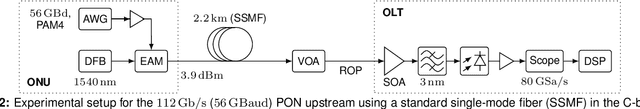

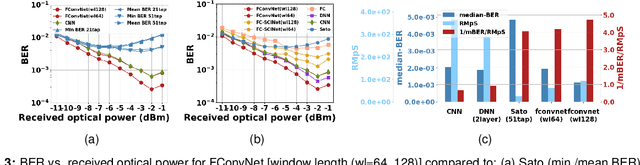

Advanced Equalization in 112 Gb/s Upstream PON Using a Novel Fourier Convolution-based Network

May 04, 2024

Abstract:We experimentally demonstrate a novel, low-complexity Fourier Convolution-based Network (FConvNet) equalizer for 112 Gb/s upstream PAM4-PON. At a BER of 0.005, FConvNet enhances the receiver sensitivity by 2 dB and 1 dB compared to a 51-tap Sato equalizer and benchmark machine learning algorithms, respectively.

Machine Learning in Short-Reach Optical Systems: A Comprehensive Survey

May 02, 2024

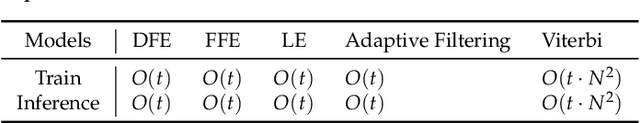

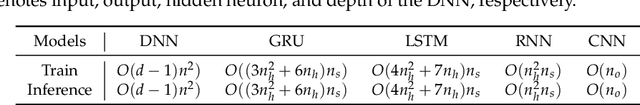

Abstract:In recent years, extensive research has been conducted to explore the utilization of machine learning algorithms in various direct-detected and self-coherent short-reach communication applications. These applications encompass a wide range of tasks, including bandwidth request prediction, signal quality monitoring, fault detection, traffic prediction, and digital signal processing (DSP)-based equalization. As a versatile approach, machine learning demonstrates the ability to address stochastic phenomena in optical systems networks where deterministic methods may fall short. However, when it comes to DSP equalization algorithms, their performance improvements are often marginal, and their complexity is prohibitively high, especially in cost-sensitive short-reach communications scenarios such as passive optical networks (PONs). They excel in capturing temporal dependencies, handling irregular or nonlinear patterns effectively, and accommodating variable time intervals. Within this extensive survey, we outline the application of machine learning techniques in short-reach communications, specifically emphasizing their utilization in high-bandwidth demanding PONs. Notably, we introduce a novel taxonomy for time-series methods employed in machine learning signal processing, providing a structured classification framework. Our taxonomy categorizes current time series methods into four distinct groups: traditional methods, Fourier convolution-based methods, transformer-based models, and time-series convolutional networks. Finally, we highlight prospective research directions within this rapidly evolving field and outline specific solutions to mitigate the complexity associated with hardware implementations. We aim to pave the way for more practical and efficient deployment of machine learning approaches in short-reach optical communication systems by addressing complexity concerns.

A Novel Machine Learning-based Equalizer for a Downstream 100G PAM-4 PON

Apr 25, 2024

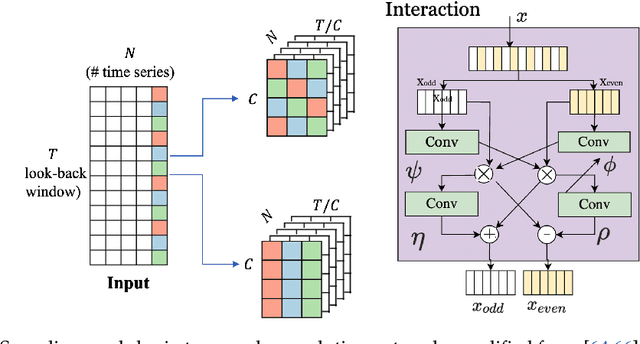

Abstract:A frequency-calibrated SCINet (FC-SCINet) equalizer is proposed for downstream 100G PON with 28.7 dB path loss. At 5 km, FC-SCINet improves the BER by 88.87% compared to FFE and a 3-layer DNN with 10.57% lower complexity.

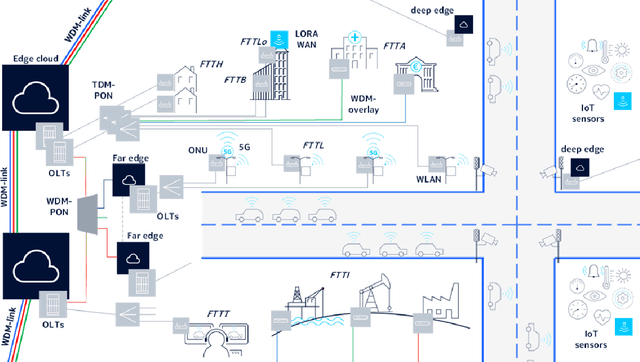

KIGLIS: Smart Networks for Smart Cities

Jun 09, 2021

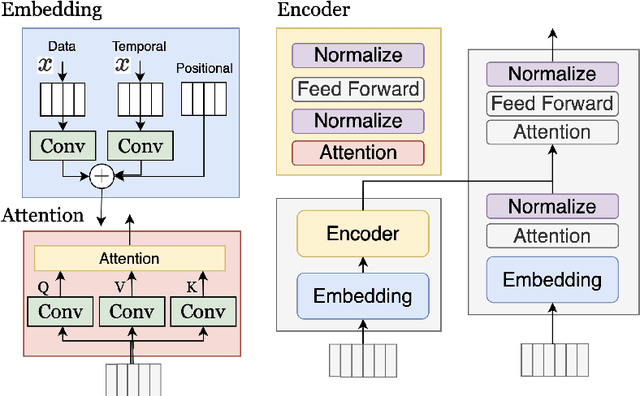

Abstract:Smart cities will be characterized by a variety of intelligent and networked services, each with specific requirements for the underlying network infrastructure. While smart city architectures and services have been studied extensively, little attention has been paid to the network technology. The KIGLIS research project, consisting of a consortium of companies, universities and research institutions, focuses on artificial intelligence for optimizing fiber-optic networks of a smart city, with a special focus on future mobility applications, such as automated driving. In this paper, we present early results on our process of collecting smart city requirements for communication networks, which will lead towards reference infrastructure and architecture solutions. Finally, we suggest directions in which artificial intelligence will improve smart city networks.
