Stephan Pachnicke

Soft-Demapping for Short Reach Optical Communication: A Comparison of Deep Neural Networks and Volterra Series

Jan 10, 2025

Abstract: In optical fiber communication, optical and electrical components introduce nonlinearities, which require effective compensation to attain the highest data rates. In short reach communication in particular, components are the dominant source of nonlinearities. Volterra series are a popular countermeasure for receiver-side equalization of nonlinear component impairments and their memory effects. However, Volterra equalizer architectures are generally very complex. This article investigates soft deep neural network (DNN) architectures as an alternative for nonlinear equalization and soft-decision demapping. Performance and complexity are evaluated experimentally on coherent 92 GBd dual-polarization 64QAM back-to-back measurements. The proposed bit-wise soft DNN equalizer (SDNNE) is compared to a 5th order Volterra equalizer at a 15 % overhead forward error correction (FEC) limit. At equal performance, the computational complexity is reduced by 65 %. At equal complexity, the performance is improved by a 0.35 dB gain in optical signal-to-noise ratio (OSNR).

* 11 pages, 14 figures, journal
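The complexity remark in the abstract above can be made concrete with a rough count. The sketch below is not taken from the paper: it assumes a real-valued Volterra series with symmetric kernels and the same memory length for every order, in which case the number of unique coefficients of the order-p kernel is the number of multisets of size p drawn from the memory taps. The function names and the memory length of 3 are hypothetical and chosen only for illustration; practical equalizers typically use a different memory length per order.

```python
from math import comb


def volterra_kernel_size(memory: int, order: int) -> int:
    """Unique coefficients of one symmetric, real-valued kernel.

    Assumption: products of `order` input samples are taken from
    `memory` taps, and permutations of the same taps share one
    coefficient (combinations with repetition).
    """
    return comb(memory + order - 1, order)


def volterra_total_size(memory: int, max_order: int) -> int:
    """Total unique coefficients for kernels of order 1..max_order."""
    return sum(volterra_kernel_size(memory, p) for p in range(1, max_order + 1))


if __name__ == "__main__":
    memory = 3  # hypothetical memory length, for illustration only
    for p in range(1, 6):
        print(f"order {p}: {volterra_kernel_size(memory, p)} coefficients")
    print("total up to order 5:", volterra_total_size(memory, 5))
```

Even with this short memory the count grows quickly with the order, which is the kind of growth that motivates looking at lower-complexity alternatives.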

Experimental Investigation of Machine Learning based Soft-Failure Management using the Optical Spectrum

Dec 12, 2023

Abstract: The demand for high-speed data is growing exponentially. To cope with this, optical networks have undergone significant changes, becoming more complex and versatile. The increasing complexity requires fault management to be more adaptive in order to enhance network assurance. In this paper, we experimentally compare the soft-failure management performance of different machine learning algorithms. We further introduce a machine learning based soft-failure management framework. It utilizes a variational autoencoder based generative adversarial network (VAE-GAN) running on optical spectral data obtained by optical spectrum analyzers. The framework is able to run reliably on a fraction of the available training data and to identify unknown failure types. The investigations show that the VAE-GAN outperforms the other machine learning algorithms in identification tasks when up to 10% of the total training data is available. Furthermore, the advanced training mechanism for the GAN shows a high F1-score for unknown spectrum identification. The failure localization comparison shows the advantage of a low-complexity neural network in combination with a VAE over established machine learning algorithms.

Fault Monitoring in Passive Optical Networks using Machine Learning Techniques

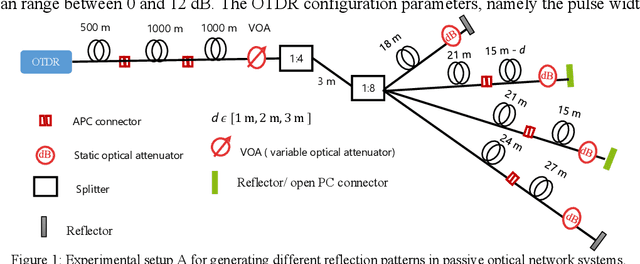

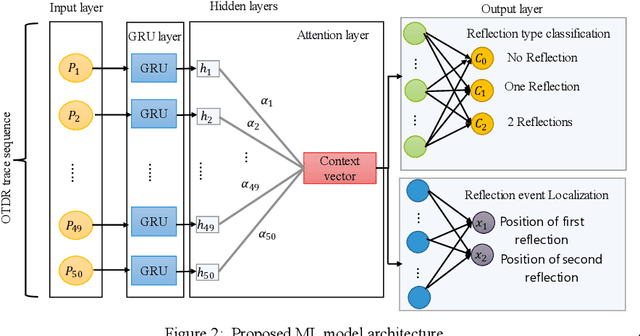

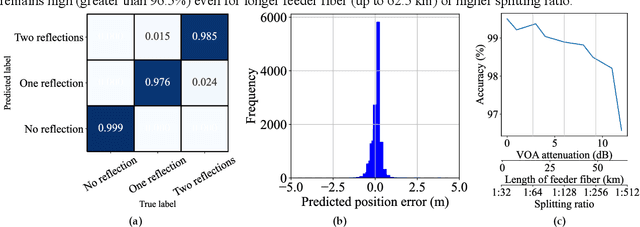

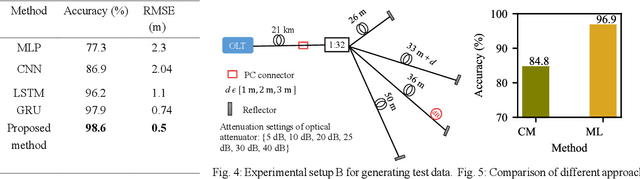

Jul 08, 2023

Abstract: Passive optical network (PON) systems are vulnerable to a variety of failures, including fiber cuts and optical network unit (ONU) transmitter/receiver failures. Any service interruption caused by a fiber cut can result in huge financial losses for service providers or operators. Identifying the faulty ONU becomes difficult in the case of nearly equidistant branch terminations because the reflections from the branches overlap, making it difficult to distinguish the faulty branch given the global backscattering signal. With increasing network size, the complexity of fault monitoring in PON systems increases, resulting in less reliable monitoring. To address these challenges, we propose in this paper various machine learning (ML) approaches for fault monitoring in PON systems, and we validate them using experimental optical time domain reflectometry (OTDR) data.

Faulty Branch Identification in Passive Optical Networks using Machine Learning

Apr 03, 2023

Abstract: Passive optical networks (PONs) have become a promising broadband access network solution. To ensure reliable transmission and to meet service level agreements, PON systems have to be monitored constantly in order to quickly identify and localize network faults. Typically, a service disruption in a PON system is mainly due to fiber cuts and optical network unit (ONU) transmitter/receiver failures. When the ONUs are located at different distances from the optical line terminal (OLT), the faulty ONU or branch can be identified by analyzing the recorded optical time domain reflectometry (OTDR) traces. However, faulty branch isolation becomes very challenging when the reflections originating from two or more branches of similar length overlap, which makes it very hard to discriminate the faulty branches given the global backscattered signal. Recently, machine learning (ML) based approaches have shown great potential for managing optical faults in PON systems. Such techniques perform well when trained and tested with data derived from the same PON system. But their performance may severely degrade if the PON system (adopted for the generation of the training data) has changed, e.g. by adding more branches or varying the length difference between two neighboring branches. The ML models then have to be re-trained for each network change, which can be time consuming. In this paper, to overcome the aforementioned issues, we propose a generic ML approach, trained independently of the network architecture, for identifying the faulty branch in PON systems given OTDR signals for the case of branches with close lengths. Such an approach can be applied to an arbitrary PON system without requiring re-training for each change of the network. The proposed approach is validated using experimental data derived from a PON system.

Branch Identification in Passive Optical Networks using Machine Learning

Apr 01, 2023

Abstract: A machine learning approach for improving monitoring in passive optical networks with almost equidistant branches is proposed and experimentally validated. It achieves a high diagnostic accuracy of 98.7% and an event localization error of 0.5 m.

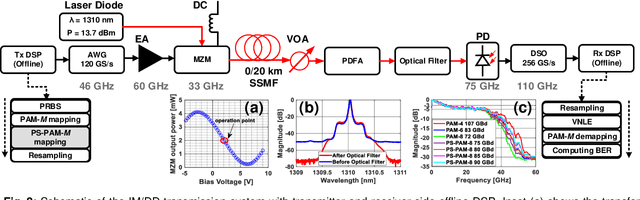

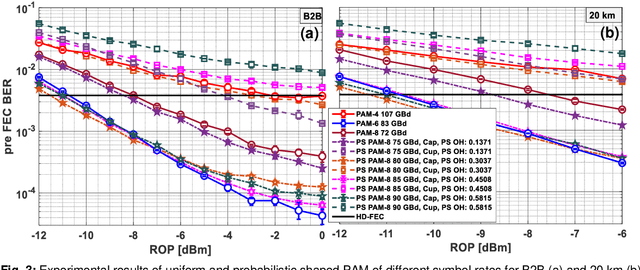

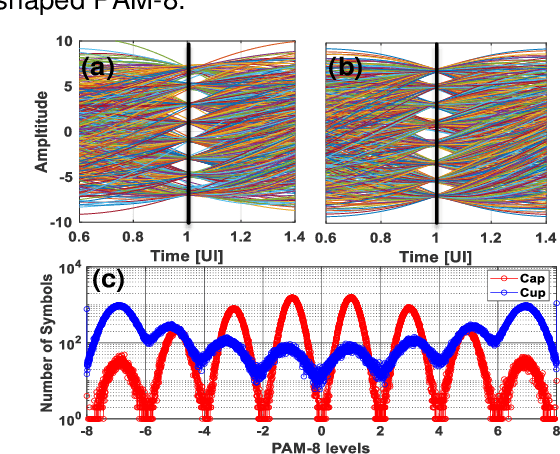

Probabilistic Shaping for High-Speed Unamplified IM/DD Systems with an O-Band EML

Mar 30, 2023

Abstract: Probabilistic constellation shaping has been used in long-haul optically amplified coherent systems for its capability to approach the Shannon limit and realize fine rate granularity. The availability of high-bandwidth optical-electronic components and the previously mentioned advantages have encouraged researchers to explore probabilistic shaping (PS) in intensity-modulation and direct-detection (IM/DD) systems. This article presents an extensive comparison of uniform 8-ary pulse amplitude modulation (PAM) with PS PAM-8 using cap and cup Maxwell-Boltzmann (MB) distributions as well as MB distributions of different Gaussian orders. We report that in the presence of linear equalization, PS-PAM-8 outperforms uniform PAM-8 in terms of bit error ratio, achievable information rate and operational net bit rate, indicating that cap-shaped PS-PAM-8 shows high tolerance against nonlinearities. In this paper, we have focused our investigations on unamplified IM/DD systems with an O-band electro-absorption modulated laser, which are operated close to the zero dispersion wavelength.

* 9 pages, 12 figures
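As a rough illustration of the cap and cup shapes and the Gaussian order mentioned in the abstract above, the sketch below builds a Maxwell-Boltzmann-style probability mass function over eight PAM levels. It is not the authors' implementation. It assumes the common form p(x) ∝ exp(-λ·|x - mean|^g), where a positive λ gives a cap shape (inner levels more likely), a negative λ gives a cup shape (outer levels more likely), and g plays the role of the Gaussian order. The function names, the level spacing and the λ values are hypothetical.

```python
import numpy as np


def shaped_pam_pmf(levels, lam, order=2.0):
    """MB-style PMF over PAM levels (assumed form, see lead-in).

    lam > 0 -> cap shape, lam < 0 -> cup shape, lam == 0 -> uniform.
    `order` is the exponent applied to the centered level magnitude.
    """
    levels = np.asarray(levels, dtype=float)
    centered = levels - levels.mean()
    weights = np.exp(-lam * np.abs(centered) ** order)
    return weights / weights.sum()


def entropy_bits(pmf):
    """Shannon entropy of a PMF in bits per symbol."""
    pmf = np.asarray(pmf, dtype=float)
    pmf = pmf[pmf > 0]
    return float(-(pmf * np.log2(pmf)).sum())


if __name__ == "__main__":
    levels = np.arange(8)  # hypothetical equally spaced PAM-8 levels
    for name, lam in [("uniform", 0.0), ("cap", 0.1), ("cup", -0.1)]:
        pmf = shaped_pam_pmf(levels, lam)
        print(name, np.round(pmf, 3), f"H = {entropy_bits(pmf):.3f} bit")
```

Any non-uniform shape has an entropy below 3 bits per symbol, which is why shaped PAM-8 needs a higher symbol rate than uniform PAM-8 to reach the same net bit rate.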

Degradation Prediction of Semiconductor Lasers using Conditional Variational Autoencoder

Nov 05, 2022

Abstract: Semiconductor lasers have been rapidly evolving to meet the demands of next-generation optical networks. This imposes much more stringent requirements on laser reliability, which is dominated by degradation mechanisms (e.g., sudden degradation) that limit the semiconductor laser lifetime. Physics-based approaches are often used to characterize the degradation behavior analytically, yet explicit domain knowledge and accurate mathematical models are required. Building such models can be very challenging due to the lack of a full understanding of the complex physical processes inducing the degradation under various operating conditions. To overcome the aforementioned limitations, we propose a new data-driven approach that extracts useful insights from the operational monitored data to predict the degradation trend without requiring any specific knowledge or using any physical model. The proposed approach is based on an unsupervised technique, a conditional variational autoencoder, and is validated using vertical-cavity surface-emitting laser (VCSEL) and tunable edge emitting laser reliability data. The experimental results confirm that our model (i) achieves good degradation prediction and generalization performance, yielding an F1 score of 95.3%, (ii) outperforms several baseline ML based anomaly detection techniques, and (iii) helps to shorten the aging tests by predicting the failed devices early, before the end of the test, thereby saving costs.

* Published in: Journal of Lightwave Technology (Volume: 40, Issue: 18, 15 September 2022)

A Machine Learning-based Framework for Predictive Maintenance of Semiconductor Laser for Optical Communication

Nov 05, 2022

Abstract: Semiconductor lasers, one of the key components for optical communication systems, have been rapidly evolving to meet the requirements of next generation optical networks with respect to high speed, low power consumption, small form factor etc. However, these demands have brought severe challenges to semiconductor laser reliability. Therefore, a great deal of attention has been devoted to improving it and thereby ensuring reliable transmission. In this paper, a predictive maintenance framework using machine learning techniques is proposed for real-time health monitoring and prognosis of semiconductor lasers, thus enhancing their reliability. The proposed approach is composed of three stages: i) real-time performance degradation prediction, ii) degradation detection, and iii) remaining useful life (RUL) prediction. First, an attention-based gated recurrent unit (GRU) model is adopted for real-time prediction of performance degradation. Then, a convolutional autoencoder is used to detect the degradation or abnormal behavior of a laser, given the predicted degradation performance values. Once an abnormal state is detected, a RUL prediction model based on attention-based deep learning is utilized. Afterwards, the estimated RUL is used as input for decision making and maintenance planning. The proposed framework is validated using experimental data derived from accelerated aging tests conducted for semiconductor tunable lasers. The proposed approach achieves a very good degradation performance prediction capability with a small root mean square error (RMSE) of 0.01, a good anomaly detection accuracy of 94.24% and a better RUL estimation capability compared to the existing ML-based laser RUL prediction models.

Experimental Comparison of PAM-8 Probabilistic Shaping with Different Gaussian Orders at 200 Gb/s Net Rate in IM/DD System with O-Band TOSA

Jun 14, 2022

Abstract: For 200 Gb/s net rates, cap probabilistically shaped PAM-8 variants with different Gaussian orders are experimentally compared against uniform PAM-8. In back-to-back and 5 km measurements, cap-shaped 85-GBd PAM-8 with a Gaussian order of 5 outperforms 71-GBd uniform PAM-8 by up to 2.90 dB and 3.80 dB in receiver sensitivity, respectively.

Experimental Comparison of Cap and Cup Probabilistically Shaped PAM for O-Band IM/DD Transmission System

May 18, 2022

Abstract: For 200 Gbit/s net rates, uniform PAM-4, 6 and 8 are experimentally compared against probabilistically shaped PAM-8 cap and cup variants. In back-to-back and 20 km measurements, cap-shaped 80 GBd PAM-8 outperforms 72 GBd PAM-8 and 83 GBd PAM-6 by up to 3.50 dB and 0.8 dB in receiver sensitivity, respectively.

* Originally published in ECOC-2021. We have updated Figure 3. The change also affects the overall outcome. In contrast to the published version, compared to uniform PAM-8 72 GBd, PS-PAM-8 80 GBd performance is updated to 3.50 dB instead of 5.17 dB, while for PAM-6 83 GBd the gain becomes 0.8 dB instead of 2.17 dB. The changes are adapted in all sections except the experimental setup and DSP section
