Khouloud Abdelli

Weather-Adaptive Multi-Step Forecasting of State of Polarization Changes in Aerial Fibers Using Wavelet Neural Networks

Sep 05, 2024

Abstract:We introduce a novel weather-adaptive approach for multi-step forecasting of multi-scale state of polarization (SOP) changes in aerial fiber links. By harnessing the discrete wavelet transform and incorporating weather data, our approach improves forecasting accuracy by over 65% in RMSE and 63% in MAPE compared to baselines.
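As a rough illustration of the two ingredients this abstract names, the sketch below shows a multi-level discrete wavelet decomposition and the RMSE/MAPE error metrics. It assumes a Haar wavelet and NumPy; the abstract does not state which wavelet family, decomposition depth, or tooling the authors used, and the function names (`haar_dwt`, `haar_idwt`, `rmse`, `mape`, `relative_improvement`) are hypothetical.

```python
import numpy as np

def haar_dwt(signal, levels):
    """Multi-level Haar DWT (assumed wavelet, not necessarily the paper's).

    Returns [approx_L, detail_L, ..., detail_1], coarsest scale first.
    """
    approx = np.asarray(signal, dtype=float)
    if approx.size % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2.0))  # fine-scale fluctuations
        approx = (even + odd) / np.sqrt(2.0)         # smoothed trend
    return [approx] + details[::-1]

def haar_idwt(coeffs):
    """Invert haar_dwt: rebuild the signal from coarsest to finest scale."""
    approx = coeffs[0]
    for detail in coeffs[1:]:
        out = np.empty(2 * approx.size)
        out[0::2] = (approx + detail) / np.sqrt(2.0)
        out[1::2] = (approx - detail) / np.sqrt(2.0)
        approx = out
    return approx

def rmse(y_true, y_pred):
    """Root mean square error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; assumes y_true has no zeros."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))

def relative_improvement(baseline_error, model_error):
    """Percentage reduction of an error metric relative to a baseline."""
    return 100.0 * (baseline_error - model_error) / baseline_error
```

In a setup like this, each sub-band returned by `haar_dwt` could be forecast separately and the forecasts recombined with `haar_idwt`; whether the paper proceeds this way is not stated in the abstract.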

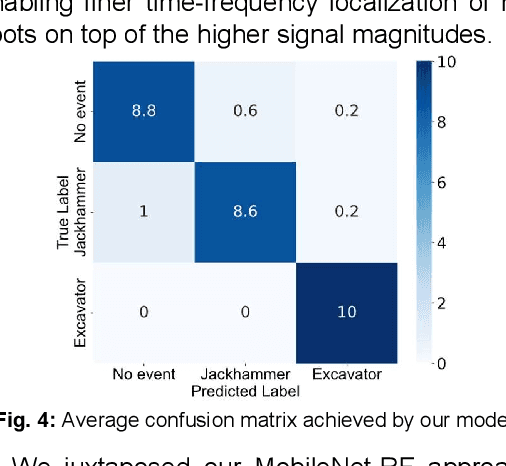

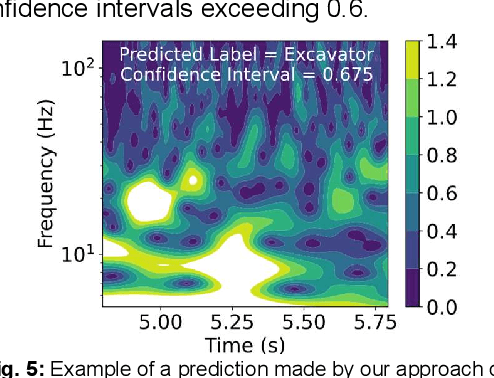

Threat Classification on Deployed Optical Networks Using MIMO Digital Fiber Sensing, Wavelets, and Machine Learning

Sep 05, 2024

Abstract:We demonstrate the classification of mechanical threats, including jackhammers and excavators, by leveraging the wavelet transform of MIMO-DFS output data across a 57-km operational network link. Our machine learning framework incorporates transfer learning and shows 93% classification accuracy from field data, with benefits for optical network supervision.

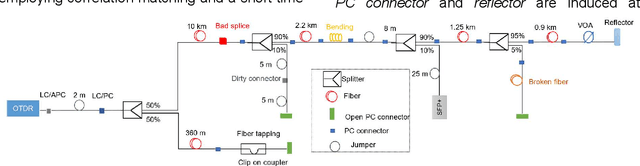

Fault Monitoring in Passive Optical Networks using Machine Learning Techniques

Jul 08, 2023

Abstract:Passive optical network (PON) systems are vulnerable to a variety of failures, including fiber cuts and optical network unit (ONU) transmitter/receiver failures. Any service interruption caused by a fiber cut can result in huge financial losses for service providers or operators. Identifying the faulty ONU becomes difficult in the case of nearly equidistant branch terminations because the reflections from the branches overlap, making it difficult to distinguish the faulty branch given the global backscattering signal. With increasing network size, the complexity of fault monitoring in PON systems increases, resulting in less reliable monitoring. To address these challenges, we propose in this paper various machine learning (ML) approaches for fault monitoring in PON systems, and we validate them using experimental optical time domain reflectometry (OTDR) data.

Faulty Branch Identification in Passive Optical Networks using Machine Learning

Apr 03, 2023

Abstract:Passive optical networks (PONs) have become a promising broadband access network solution. To ensure reliable transmission and to meet service level agreements, PON systems have to be monitored constantly in order to quickly identify and localize network faults. Typically, a service disruption in a PON system is mainly due to fiber cuts and optical network unit (ONU) transmitter/receiver failures. When the ONUs are located at different distances from the optical line terminal (OLT), the faulty ONU or branch can be identified by analyzing the recorded optical time domain reflectometry (OTDR) traces. However, faulty branch isolation becomes very challenging when the reflections originating from two or more branches of similar length overlap, which makes it very hard to discriminate the faulty branches given the global backscattered signal. Recently, machine learning (ML) based approaches have shown great potential for managing optical faults in PON systems. Such techniques perform well when trained and tested with data derived from the same PON system. But their performance may severely degrade if the PON system (adopted for the generation of the training data) has changed, e.g. by adding more branches or varying the length difference between two neighboring branches. The ML models then have to be re-trained for each network change, which can be time consuming. In this paper, to overcome the aforementioned issues, we propose a generic ML approach trained independently of the network architecture for identifying the faulty branch in PON systems given OTDR signals for the cases of branches with close lengths. Such an approach can be applied to an arbitrary PON system without being re-trained for each change of the network. The proposed approach is validated using experimental data derived from a PON system.

A Machine Learning-based Framework for Predictive Maintenance of Semiconductor Laser for Optical Communication

Nov 05, 2022

Abstract:Semiconductor lasers, one of the key components for optical communication systems, have been rapidly evolving to meet the requirements of next generation optical networks with respect to high speed, low power consumption, small form factor etc. However, these demands have brought severe challenges to semiconductor laser reliability. Therefore, a great deal of attention has been devoted to improving it and thereby ensuring reliable transmission. In this paper, a predictive maintenance framework using machine learning techniques is proposed for real-time health monitoring and prognosis of semiconductor lasers, thereby enhancing their reliability. The proposed approach is composed of three stages: i) real-time performance degradation prediction, ii) degradation detection, and iii) remaining useful life (RUL) prediction. First, an attention-based gated recurrent unit (GRU) model is adopted for real-time prediction of performance degradation. Then, a convolutional autoencoder is used to detect the degradation or abnormal behavior of a laser, given the predicted degradation performance values. Once an abnormal state is detected, a RUL prediction model based on attention-based deep learning is utilized. Afterwards, the estimated RUL is input for decision making and maintenance planning. The proposed framework is validated using experimental data derived from accelerated aging tests conducted for semiconductor tunable lasers. The proposed approach achieves a very good degradation performance prediction capability with a small root mean square error (RMSE) of 0.01, a good anomaly detection accuracy of 94.24% and a better RUL estimation capability compared to the existing ML-based laser RUL prediction models.

Degradation Prediction of Semiconductor Lasers using Conditional Variational Autoencoder

Nov 05, 2022

Abstract:Semiconductor lasers have been rapidly evolving to meet the demands of next-generation optical networks. This imposes much more stringent requirements on laser reliability, which is dominated by degradation mechanisms (e.g., sudden degradation) limiting the semiconductor laser lifetime. Physics-based approaches are often used to characterize the degradation behavior analytically, yet explicit domain knowledge and accurate mathematical models are required. Building such models can be very challenging due to a lack of a full understanding of the complex physical processes inducing the degradation under various operating conditions. To overcome the aforementioned limitations, we propose a new data-driven approach, extracting useful insights from the operational monitored data to predict the degradation trend without requiring any specific knowledge or using any physical model. The proposed approach is based on an unsupervised technique, a conditional variational autoencoder, and validated using vertical-cavity surface-emitting laser (VCSEL) and tunable edge emitting laser reliability data. The experimental results confirm that our model (i) achieves a good degradation prediction and generalization performance by yielding an F1 score of 95.3%, (ii) outperforms several baseline ML-based anomaly detection techniques, and (iii) helps to shorten the aging tests by predicting the failed devices early, before the end of the test, thereby saving costs.

* Published in: Journal of Lightwave Technology (Volume: 40, Issue: 18, 15 September 2022)

Machine Learning based Laser Failure Mode Detection

Mar 19, 2022

Abstract:Laser degradation analysis is a crucial process for the enhancement of laser reliability. Here, we propose a data-driven fault detection approach based on Long Short-Term Memory (LSTM) recurrent neural networks to detect the different laser degradation modes based on synthetic historical failure data. Whereas typical threshold-based systems attain 24.41% classification accuracy, the LSTM-based model achieves 95.52% accuracy and also outperforms classical machine learning (ML) models, namely Random Forest (RF), K-Nearest Neighbours (KNN) and Logistic Regression (LR).

Machine Learning based Data Driven Diagnostic and Prognostic Approach for Laser Reliability Enhancement

Mar 19, 2022

Abstract:In this paper, a data-driven diagnostic and prognostic approach based on machine learning is proposed to detect laser failure modes and to predict the remaining useful life (RUL) of a laser during its operation. We present an architecture of the proposed cognitive predictive maintenance framework and demonstrate its effectiveness using synthetic data.

Lifetime Prediction of 1550 nm DFB Laser using Machine learning Techniques

Mar 19, 2022

Abstract:A novel approach based on an artificial neural network (ANN) for lifetime prediction of 1.55 μm InGaAsP MQW-DFB laser diodes is presented. It outperforms the conventional lifetime projection using accelerated aging tests.

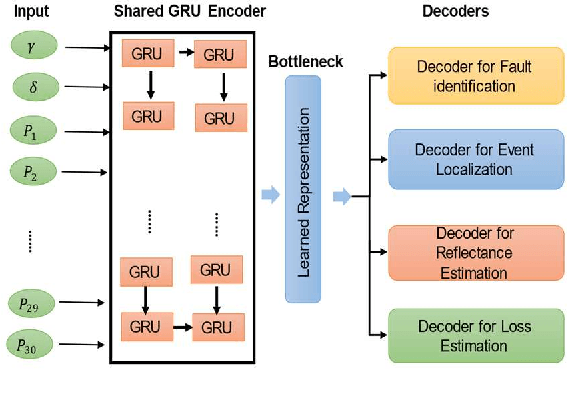

Gated Recurrent Unit based Autoencoder for Optical Link Fault Diagnosis in Passive Optical Networks

Mar 19, 2022

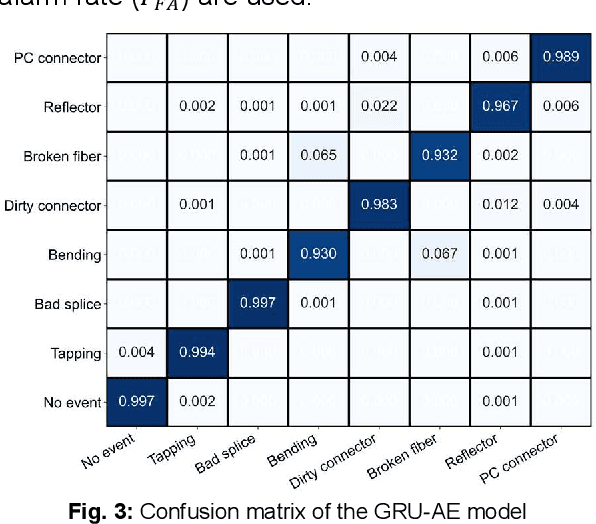

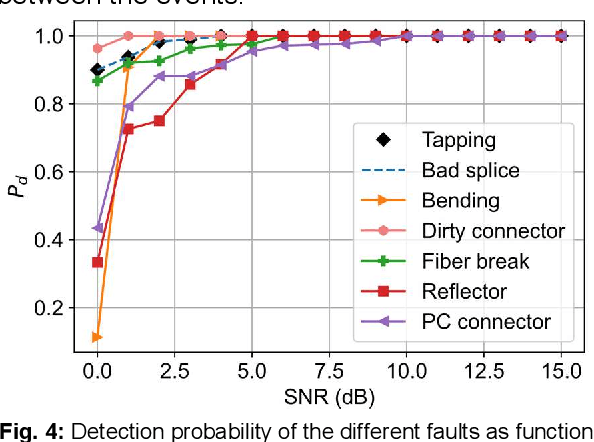

Abstract:We propose a deep learning approach based on an autoencoder for identifying and localizing fiber faults in passive optical networks. The experimental results show that the proposed method detects faults with 97% accuracy, pinpoints them with an RMSE of 0.18 m and outperforms conventional techniques.
