Salvatore D'Oro

Should I Have Expressed a Different Intent? Counterfactual Generation for LLM-Based Autonomous Control

Jan 29, 2026

Abstract:Large language model (LLM)-powered agents can translate high-level user intents into plans and actions in an environment. Yet after observing an outcome, users may wonder: What if I had phrased my intent differently? We introduce a framework that enables such counterfactual reasoning in agentic LLM-driven control scenarios, while providing formal reliability guarantees. Our approach models the closed-loop interaction between a user, an LLM-based agent, and an environment as a structural causal model (SCM), and leverages test-time scaling to generate multiple candidate counterfactual outcomes via probabilistic abduction. Through an offline calibration phase, the proposed conformal counterfactual generation (CCG) yields sets of counterfactual outcomes that are guaranteed to contain the true counterfactual outcome with high probability. We showcase the performance of CCG on a wireless network control use case, demonstrating significant advantages compared to naive re-execution baselines.

SIA: Symbolic Interpretability for Anticipatory Deep Reinforcement Learning in Network Control

Jan 29, 2026

Abstract:Deep reinforcement learning (DRL) promises adaptive control for future mobile networks, but conventional agents remain reactive: they act on past and current measurements and cannot leverage short-term forecasts of exogenous KPIs such as bandwidth. Augmenting agents with predictions can overcome this temporal myopia, yet uptake in networking is scarce because forecast-aware agents act as closed boxes; operators cannot tell whether predictions guide decisions or justify the added complexity. We propose SIA, the first interpreter that exposes in real time how forecast-augmented DRL agents operate. SIA fuses Symbolic AI abstractions with per-KPI Knowledge Graphs to produce explanations, and includes a new Influence Score metric. SIA achieves sub-millisecond speed, over 200x faster than existing XAI methods. We evaluate SIA on three diverse networking use cases, uncovering hidden issues, including temporal misalignment in forecast integration and reward-design biases that trigger counter-productive policies. These insights enable targeted fixes: a redesigned agent achieves a 9% higher average bitrate in video streaming, and SIA's online Action-Refinement module improves RAN-slicing reward by 25% without retraining. By making anticipatory DRL transparent and tunable, SIA lowers the barrier to proactive control in next-generation mobile networks.

AgentRAN: An Agentic AI Architecture for Autonomous Control of Open 6G Networks

Aug 25, 2025

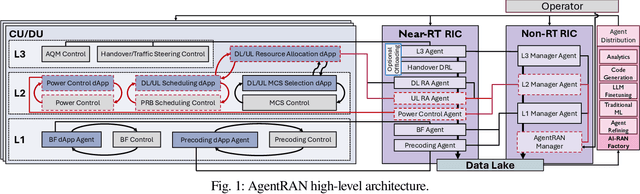

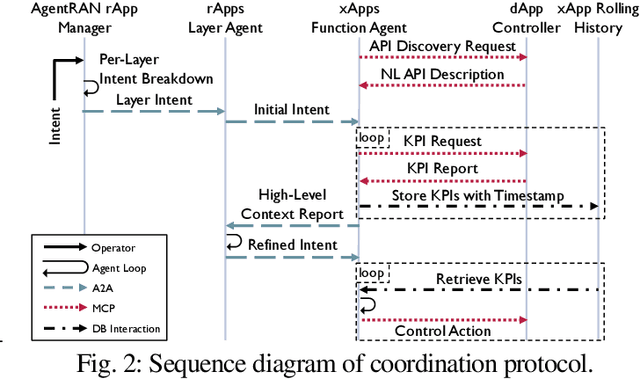

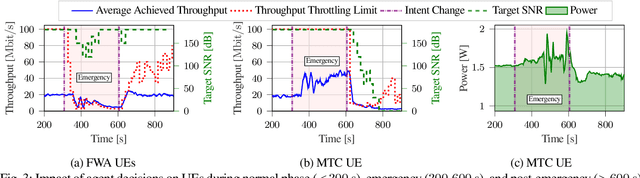

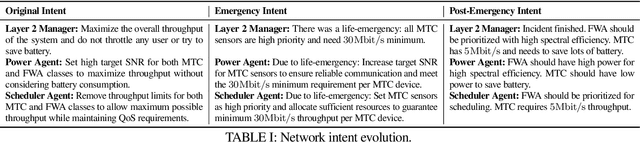

Abstract:The Open RAN movement has catalyzed a transformation toward programmable, interoperable cellular infrastructures. Yet, today's deployments still rely heavily on static control and manual operations. To move beyond this limitation, we introduce AgentRAN, an AI-native, Open RAN-aligned agentic framework that generates and orchestrates a fabric of distributed AI agents based on Natural Language (NL) intents. Unlike traditional approaches that require explicit programming, AgentRAN's LLM-powered agents interpret natural language intents, negotiate strategies through structured conversations, and orchestrate control loops across the network. AgentRAN instantiates a self-organizing hierarchy of agents that decompose complex intents across time scales (from sub-millisecond to minutes), spatial domains (cell to network-wide), and protocol layers (PHY/MAC to RRC). A central innovation is the AI-RAN Factory, an automated synthesis pipeline that observes agent interactions and continuously generates new agents embedding improved control algorithms, effectively transforming the network from a static collection of functions into an adaptive system capable of evolving its own intelligence. We demonstrate AgentRAN through live experiments on 5G testbeds where competing user demands are dynamically balanced through cascading intents. By replacing rigid APIs with NL coordination, AgentRAN fundamentally redefines how future 6G networks autonomously interpret, adapt, and optimize their behavior to meet operator goals.

Beyond Connectivity: An Open Architecture for AI-RAN Convergence in 6G

Jul 09, 2025

Abstract:The proliferation of data-intensive Artificial Intelligence (AI) applications at the network edge demands a fundamental shift in RAN design, from merely consuming AI for network optimization to actively enabling distributed AI workloads. This paradigm shift presents a significant opportunity for network operators to monetize AI at the edge while leveraging existing infrastructure investments. To realize this vision, this article presents a novel converged O-RAN and AI-RAN architecture that unifies orchestration and management of both telecommunications and AI workloads on shared infrastructure. The proposed architecture extends the Open RAN principles of modularity, disaggregation, and cloud-nativeness to support heterogeneous AI deployments. We introduce two key architectural innovations: (i) the AI-RAN Orchestrator, which extends the O-RAN Service Management and Orchestration (SMO) to enable integrated resource allocation across RAN and AI workloads; and (ii) AI-RAN sites that provide distributed edge AI platforms with real-time processing capabilities. The proposed system supports flexible deployment options, allowing AI workloads to be orchestrated with specific timing requirements (real-time or batch processing) and geographic targeting. The proposed architecture addresses the orchestration requirements for managing heterogeneous workloads at different time scales while maintaining open, standardized interfaces and multi-vendor interoperability.

5G Aero: A Prototyping Platform for Evaluating Aerial 5G Communications

Jun 10, 2025

Abstract:The application of small-form-factor, 5G-enabled Unmanned Aerial Vehicles (UAVs) has recently gained significant interest in various aerial and Industry 4.0 applications. However, ensuring reliable, high-throughput, and low-latency 5G communication in aerial applications remains a critical and underexplored problem. This paper presents the 5th generation (5G) Aero, a compact UAV optimized for 5G connectivity, aimed at fulfilling stringent 3rd Generation Partnership Project (3GPP) requirements. We conduct a set of experiments in an indoor environment, evaluating the UAV's ability to establish high-throughput, low-latency communications in both Line-of-Sight (LoS) and Non-Line-of-Sight (NLoS) conditions. Our findings demonstrate that the 5G Aero meets the required 3GPP standards for Command and Control (C2) packet latency in both LoS and NLoS conditions and for video latency in LoS communications, and that it maintains acceptable latency levels for video transmission in NLoS conditions. Additionally, we show that the 5G module installed on the UAV introduces a negligible 1% decrease in flight time, showing that 5G technologies can be integrated into commercial off-the-shelf UAVs with minimal impact on battery lifetime. This paper contributes to the literature by demonstrating the practical capabilities of current 5G networks to support advanced UAV operations in telecommunications, offering insights into potential enhancements and optimizations for UAV performance in 5G networks.

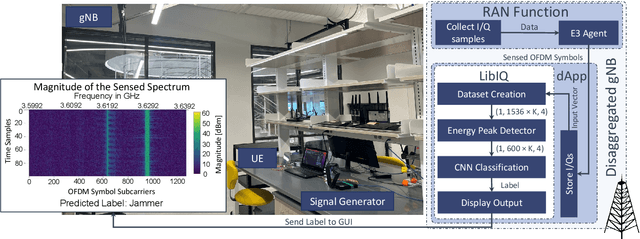

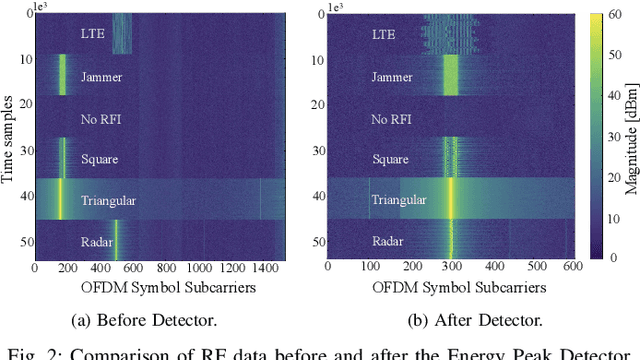

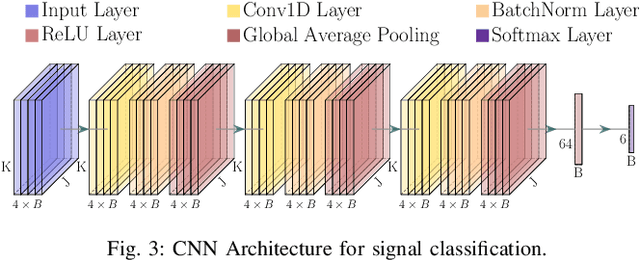

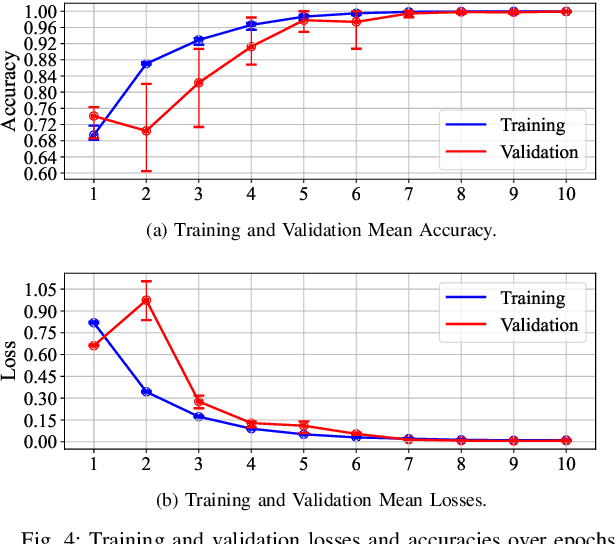

LibIQ: Toward Real-Time Spectrum Classification in O-RAN dApps

May 15, 2025

Abstract:The O-RAN architecture is transforming cellular networks by adopting RAN softwarization and disaggregation concepts to enable data-driven monitoring and control of the network. Such management is enabled by RICs, which facilitate near-real-time and non-real-time network control through xApps and rApps. However, they face limitations, including latency overhead in data exchange between the RAN and RIC, restricting real-time monitoring, and the inability to access user plane data due to privacy and security constraints, hindering use cases like beamforming and spectrum classification. In this paper, we leverage the dApps concept to enable real-time RF spectrum classification with LibIQ, a novel library for RF signals that facilitates efficient spectrum monitoring and signal classification by providing functionalities to read I/Q samples as time-series, create datasets, and visualize time-series data through plots and spectrograms. Thanks to LibIQ, I/Q samples can be efficiently processed to detect external RF signals, which are subsequently classified using a CNN inside the library. To achieve accurate spectrum analysis, we created an extensive dataset of time-series-based I/Q samples, representing distinct signal types captured using a custom dApp running on a 5G deployment over the Colosseum network emulator and an OTA testbed. We evaluate our model by deploying LibIQ in heterogeneous scenarios with varying center frequencies, time windows, and external RF signals. In real-time analysis, the model classifies the processed I/Q samples, achieving an average accuracy of approximately 97.8% in identifying signal types across all scenarios. We pledge to release both LibIQ and the dataset created as a publicly available framework upon acceptance.

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

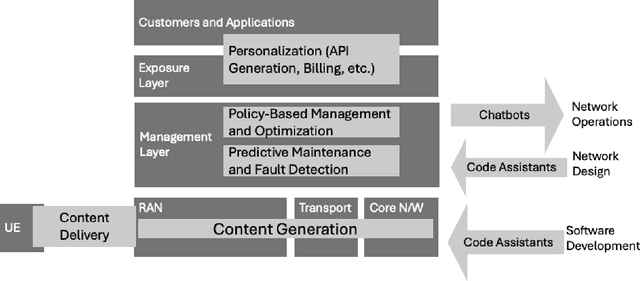

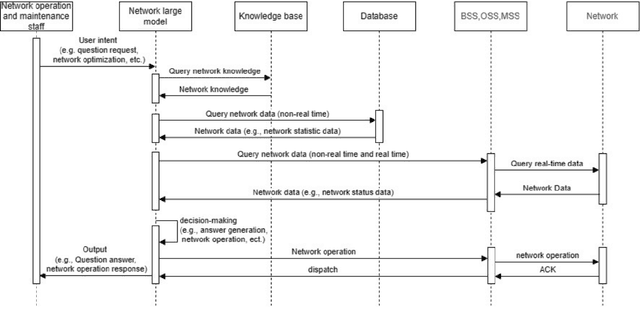

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

TIMESAFE: Timing Interruption Monitoring and Security Assessment for Fronthaul Environments

Dec 17, 2024

Abstract:5G and beyond cellular systems embrace the disaggregation of Radio Access Network (RAN) components, exemplified by the evolution of the fronthaul (FH) connection between cellular baseband and radio unit equipment. Crucially, synchronization over the FH is pivotal for reliable 5G services. In recent years, there has been a push to move these links to an Ethernet-based packet network topology, leveraging existing standards and ongoing research for Time-Sensitive Networking (TSN). However, TSN standards, such as Precision Time Protocol (PTP), focus on performance with little to no concern for security. This increases the exposure of the open FH to security risks. Attacks targeting synchronization mechanisms pose significant threats, potentially disrupting 5G networks and impairing connectivity. In this paper, we demonstrate the impact of successful spoofing and replay attacks against PTP synchronization. We show how a spoofing attack is able to cause a production-ready O-RAN and 5G-compliant private cellular base station to catastrophically fail within 2 seconds of the attack, necessitating manual intervention to restore full network operations. To counter this, we design a Machine Learning (ML)-based monitoring solution capable of detecting various malicious attacks with over 97.5% accuracy.

Listen-While-Talking: Toward dApp-based Real-Time Spectrum Sharing in O-RAN

Jul 06, 2024

Abstract:This demo paper presents a dApp-based real-time spectrum sharing scenario where a 5th generation (5G) base station implementing the NR stack adapts its transmission and reception strategies based on the incumbent priority users in the Citizens Broadband Radio Service (CBRS) band. The dApp is responsible for obtaining relevant measurements from the Next Generation Node B (gNB), running the spectrum sensing inference, and configuring the gNB with a control action upon detecting the primary incumbent user transmissions. This approach is built on dApps, which extend the O-RAN framework to the real-time and user plane domains. Thus, it avoids the need for dedicated Spectrum Access Systems (SASs) in the CBRS band. The demonstration setup is based on the open-source 5G OpenAirInterface (OAI) framework, where we have implemented a dApp interfaced with a gNB and communicating with a Commercial Off-the-Shelf (COTS) User Equipment (UE) in an over-the-air wireless environment. When an incumbent user has an active transmission, the dApp will detect it and inform the gNB of the primary user's presence. The dApp will also enforce a control policy that adapts the scheduling and transmission policy of the Radio Access Network (RAN). This demo provides valuable insights into the potential of using dApp-based spectrum sensing with the O-RAN architecture in next-generation cellular networks.

Stitching the Spectrum: Semantic Spectrum Segmentation with Wideband Signal Stitching

Feb 07, 2024

Abstract:Spectrum has become an extremely scarce and congested resource. As a consequence, spectrum sensing enables the coexistence of different wireless technologies in shared spectrum bands. Most existing work requires spectrograms to classify signals. Ultimately, this implies that images need to be continuously created from I/Q samples, thus creating unacceptable latency for real-time operations. In addition, spectrogram-based approaches do not achieve a sufficient level of granularity, as they rely on object detection performed on pixels with rectangular bounding boxes. For this reason, we propose a completely novel approach based on semantic spectrum segmentation, where multiple signals are simultaneously classified and localized in both time and frequency at the I/Q level. Unlike the state-of-the-art computer vision algorithm, we add non-local blocks to combine the spatial features of signals, and thus achieve better performance. In addition, we propose a novel data generation approach where a limited set of easy-to-collect real-world wireless signals are "stitched together" to generate large-scale, wideband, and diverse datasets. Experimental results obtained on multiple testbeds (including the Arena testbed) using multiple antennas, multiple sampling frequencies, and multiple radios over the course of 3 days show that our approach classifies and localizes signals with a mean intersection over union (IOU) of 96.70% across 5 wireless protocols while performing in real-time with a latency of 2.6 ms. Moreover, we demonstrate that our approach based on non-local blocks achieves 7% more accuracy when segmenting the most challenging signals with respect to the state-of-the-art U-Net algorithm. We will release our 17 GB dataset and code.
