Salman Durrani

Impact of Pointing Error on Coverage Performance of 3D Indoor Terahertz Communication Systems

Jan 29, 2026

Abstract:In this paper, we develop a tractable analytical framework for a three-dimensional (3D) indoor terahertz (THz) communication system to theoretically assess the impact of the pointing error on its coverage performance. Specifically, we model the locations of access points (APs) using a Poisson point process, human blockages as random cylinder processes, and wall blockages through a Boolean straight line process. A pointing error refers to beamforming gain and direction mismatch between the transmitter and receiver. We characterize it based on the inaccuracy of location estimate. We then analyze the impact of this pointing error on the received signal power and derive a tractable expression for the coverage probability, incorporating the multi-cluster fluctuating two-ray distribution to accurately model small-scale fading in THz communications. Aided by simulation results, we corroborate our analysis and demonstrate that the pointing error has a pronounced impact on the coverage probability. Specifically, we find that merely increasing the antenna array size is insufficient to improve the coverage probability and mitigate the detrimental impact of the pointing error, highlighting the necessity of advanced estimation techniques in THz communication systems.
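
The observation that a larger antenna array alone does not offset a pointing error can be illustrated with a textbook model. The sketch below is not the paper's 3D model: it assumes a uniform linear array with half-wavelength spacing steered to broadside, and the function name `ula_gain` and the 5-degree error are illustrative choices. It only shows that a narrower beam loses more gain for the same angular error.

```python
import math

def ula_gain(num_elements, error_deg):
    """Power gain of a half-wavelength-spaced uniform linear array,
    steered to broadside, evaluated error_deg away from the beam peak.
    The peak gain is normalized to num_elements (assumed textbook model)."""
    psi = math.pi * math.sin(math.radians(error_deg))
    denom = math.sin(psi / 2)
    if abs(denom) < 1e-12:
        # Perfect alignment: the array factor equals 1, so the gain is N.
        return float(num_elements)
    array_factor = math.sin(num_elements * psi / 2) / (num_elements * denom)
    return num_elements * array_factor ** 2

# Compare the aligned gain with the gain under a 5-degree pointing error.
for n in (4, 16, 64):
    print(n, round(ula_gain(n, 0.0), 2), round(ula_gain(n, 5.0), 2))
```

Under these assumptions the aligned gain grows with the array size, while the gain at a fixed 5-degree error shrinks as the array grows, because the main lobe becomes narrower than the error.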

Near-Field Secure Beamfocusing With Receiver-Centered Protected Zone

May 26, 2025

Abstract:This work studies near-field secure communications through transmit beamfocusing. We examine the benefit of having a protected eavesdropper-free zone around the legitimate receiver, and we determine the worst-case secrecy performance against a potential eavesdropper located anywhere outside the protected zone. A max-min optimization problem is formulated for the beamfocusing design with and without artificial noise transmission. Despite the NP-hardness of the problem, we develop a synchronous gradient descent-ascent framework that approximates the global maximin solution. A low-complexity solution is also derived that delivers excellent performance over a wide range of operating conditions. We further extend this study to a scenario where it is not possible to physically enforce a protected zone. To this end, we consider secure communications through the creation of a virtual protected zone using a full-duplex legitimate receiver. Numerical results demonstrate that exploiting either the physical or virtual receiver-centered protected zone with appropriately designed beamfocusing is an effective strategy for achieving secure near-field communications.

Coverage Analysis for 3D Indoor Terahertz Communication System Over Fluctuating Two-Ray Fading Channels

Oct 07, 2024

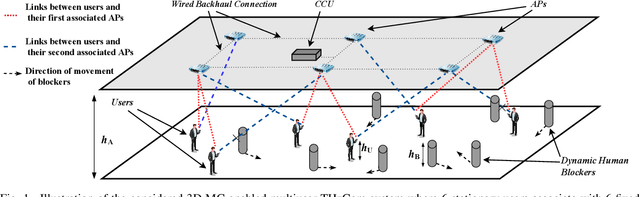

Abstract:In this paper, we develop a novel analytical framework for a three-dimensional (3D) indoor terahertz (THz) communication system. Our proposed model incorporates more accurate modeling of wall blockages via Manhattan line processes and precise modeling of THz fading channels via a fluctuating two-ray (FTR) channel model. We also account for traditional unique features of THz, such as molecular absorption loss, user blockages, and 3D directional antenna beams. Moreover, we model locations of access points (APs) using a Poisson point process and adopt the nearest line-of-sight AP association strategy. Due to the high penetration loss caused by wall blockages, we consider that a user equipment (UE) and its associated AP and interfering APs are all in the same rectangular area, i.e., a room. Based on the proposed rectangular area model, we evaluate the impact of the UE's location on the distance to its associated AP. We then develop a tractable method to derive a new expression for the coverage probability by examining the interference from interfering APs and considering the FTR fading experienced by THz communications. Aided by simulation results, we validate our analysis and demonstrate that the UE's location has a pronounced impact on its coverage probability. Additionally, we find that the optimal AP density is determined by both the UE's location and the room size, which provides valuable insights for meeting the coverage requirements of future THz communication system deployment.
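
The dependence of the serving distance on the UE's position inside a room can be sketched with a small Monte Carlo experiment. This is a simplified illustration, not the paper's framework: it ignores wall and human blockages (every AP is treated as line-of-sight), and the room size, AP density, height gap, and function names are all assumed values chosen for the example.

```python
import math
import random

def sample_poisson(rng, mean):
    """Draw a Poisson-distributed count using Knuth's multiplication method."""
    limit = math.exp(-mean)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count

def mean_nearest_ap_distance(ue_xy, room=(10.0, 8.0), density=0.1,
                             height_gap=1.5, trials=2000, seed=0):
    """Average 3D distance from a UE to its nearest AP when APs form a
    Poisson point process on the ceiling of a rectangular room.
    Assumption: no blockages, so the nearest AP is always line-of-sight."""
    rng = random.Random(seed)
    width, length = room
    total, used = 0.0, 0
    for _ in range(trials):
        num_aps = sample_poisson(rng, density * width * length)
        if num_aps == 0:
            continue  # no AP in the room in this realization
        nearest = min(
            math.hypot(rng.uniform(0, width) - ue_xy[0],
                       rng.uniform(0, length) - ue_xy[1])
            for _ in range(num_aps)
        )
        total += math.hypot(nearest, height_gap)
        used += 1
    return total / used

print("centre:", round(mean_nearest_ap_distance((5.0, 4.0)), 2))
print("corner:", round(mean_nearest_ap_distance((0.0, 0.0)), 2))
```

With these assumed parameters, a UE in the corner sees a larger average distance to its nearest AP than a UE at the centre, since APs can only lie on one side of it.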

Outage Performance of Multi-tier UAV Communication with Random Beam Misalignment

Jul 24, 2023

Abstract:By exploiting the degree of freedom on the altitude, unmanned aerial vehicle (UAV) communication can provide ubiquitous communication for future wireless networks. In the case of concurrent transmission of multiple UAVs, the directional beamforming formed by multiple antennas is an effective way to reduce co-channel interference. However, factors such as airflow disturbance or estimation error for UAV communications can cause the occurrence of beam misalignment. In this paper, we investigate the system performance of a multi-tier UAV communication network with the consideration of unstable beam alignment. In particular, we propose a tractable random model to capture the impacts of beam misalignment in the 3D space. Based on this, by utilizing stochastic geometry, an analytical framework for obtaining the outage probability in the downlink of a multi-tier UAV communication network for the closest distance association scheme and the maximum average power association scheme is established. The accuracy of the analysis is verified by Monte-Carlo simulations. The results indicate that in the presence of random beam misalignment, the optimal number of UAV antennas needs to be adjusted to be relatively larger when the density of UAVs increases or the altitude of UAVs becomes higher.

UAV-assisted IoT Monitoring Network: Adaptive Multiuser Access for Low-Latency and High-Reliability Under Bursty Traffic

Apr 25, 2023

Abstract:In this work, we propose an adaptive system design for an Internet of Things (IoT) monitoring network with latency and reliability requirements, where IoT devices generate time-critical and event-triggered bursty traffic, and an unmanned aerial vehicle (UAV) aggregates and relays sensed data to the base station. Existing transmission schemes based on the overall average traffic rates over-utilize network resources when traffic is smooth, and suffer from packet collisions when traffic is bursty which occurs in an event of interest. We address such problems by designing an adaptive transmission scheme employing multiuser shared access (MUSA) based grant-free non-orthogonal multiple access and use short packet communication for low latency of the IoT-to-UAV communication. Specifically, to accommodate bursty traffic, we design an analytical framework and formulate an optimization problem to maximize the performance by determining the optimal number of transmission time slots, subject to the stringent reliability and latency constraints. We compare the performance of the proposed scheme with a non-adaptive power-diversity based scheme with a fixed number of time slots. Our results show that the proposed scheme has superior reliability and stability in comparison to the state-of-the-art scheme at moderate to high average traffic rates, while satisfying the stringent latency requirements.

Access-based Lightweight Physical Layer Authentication for the Internet of Things Devices

Mar 01, 2023

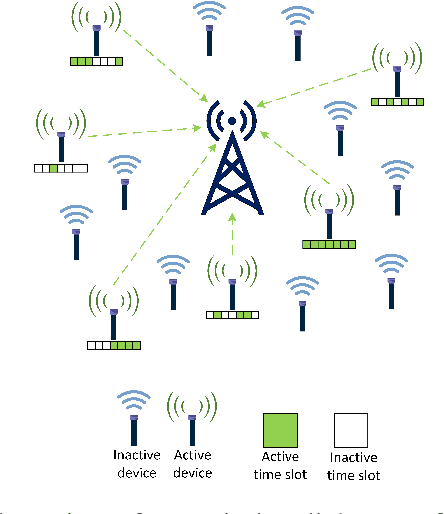

Abstract:Physical-layer authentication is a popular alternative to the conventional key-based authentication for internet of things (IoT) devices due to their limited computational capacity and battery power. However, this approach has limitations due to poor robustness under channel fluctuations, reconciliation overhead, and no clear safeguard distance to ensure the secrecy of the generated authentication keys. In this regard, we propose a novel, secure, and lightweight continuous authentication scheme for IoT device authentication. Our scheme utilizes the inherent properties of the IoT devices' transmission model as its source for seed generation and device authentication. Specifically, our proposed scheme provides continuous authentication by checking the access time slots and spreading sequences of the IoT devices instead of repeatedly generating and verifying shared keys. Due to this, access to a coherent key is not required in our proposed scheme, resulting in the concealment of the seed information from attackers. Our proposed authentication scheme for IoT devices demonstrates improved performance compared to the benchmark schemes relying on the physical channel. Our empirical results find a near threefold decrease in misdetection rate of illegitimate devices and close to zero false alarm rate in various system settings with varied numbers of active devices up to 200 and signal-to-noise ratio from 0 dB to 30 dB. Our proposed authentication scheme also has a lower computational complexity of at least half the computational cost of the benchmark schemes based on support vector machine and binary hypothesis testing in our studies. This further corroborates the practicality of our scheme for IoT deployments.

Joint User and Data Detection in Grant-Free NOMA with Attention-based BiLSTM Network

Sep 14, 2022

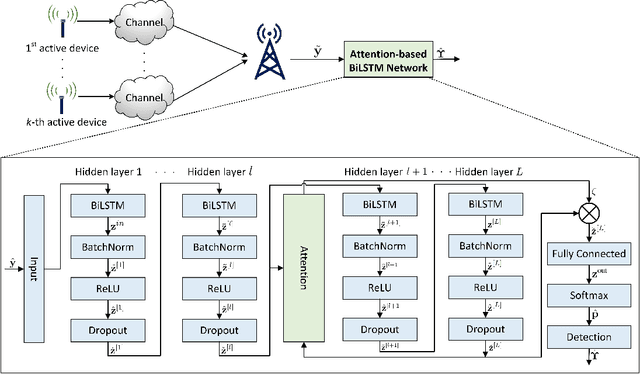

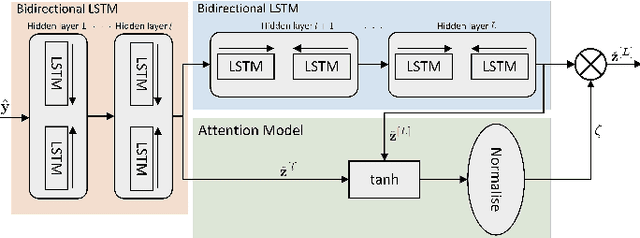

Abstract:We consider the multi-user detection (MUD) problem in uplink grant-free non-orthogonal multiple access (NOMA), where the access point has to identify the total number and correct identity of the active Internet of Things (IoT) devices and decode their transmitted data. We assume that IoT devices use complex spreading sequences and transmit information in a random-access manner following the burst-sparsity model, where some IoT devices transmit their data in multiple adjacent time slots with a high probability, while others transmit only once during a frame. Exploiting the temporal correlation, we propose an attention-based bidirectional long short-term memory (BiLSTM) network to solve the MUD problem. The BiLSTM network creates a pattern of the device activation history using forward and reverse pass LSTMs, whereas the attention mechanism provides essential context to the device activation points. By doing so, a hierarchical pathway is followed for detecting active devices in a grant-free scenario. Then, by utilising the complex spreading sequences, blind data detection for the estimated active devices is performed. The proposed framework does not require prior knowledge of device sparsity levels and channels for performing MUD. The results show that the proposed network achieves better performance compared to existing benchmark schemes.

Federated Learning Cost Disparity for IoT Devices

Apr 17, 2022

Abstract:Federated learning (FL) promotes predictive model training at the Internet of things (IoT) devices by evading data collection cost in terms of energy, time, and privacy. We model the learning gain achieved by an IoT device against its participation cost as its utility. Due to the device-heterogeneity, the local model learning cost and its quality, which can be time-varying, differ from device to device. We show that this variation results in utility unfairness because the same global model is shared among the devices. By default, the master is unaware of the local model computation and transmission costs of the devices, thus it is unable to address the utility unfairness problem. Also, a device may exploit this lack of knowledge at the master to intentionally reduce its expenditure and thereby enhance its utility. We propose to control the quality of the global model shared with the devices, in each round, based on their contribution and expenditure. This is achieved by employing differential privacy to curtail global model divulgence based on the learning contribution. In addition, we devise adaptive computation and transmission policies for each device to control its expenditure in order to mitigate utility unfairness. Our results show that the proposed scheme reduces the standard deviation of the energy cost of devices by 99% in comparison to the benchmark scheme, while the standard deviation of the training loss of devices varies around 0.103.

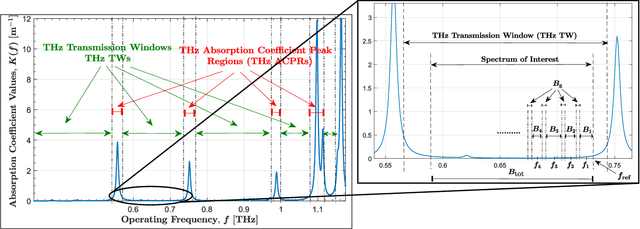

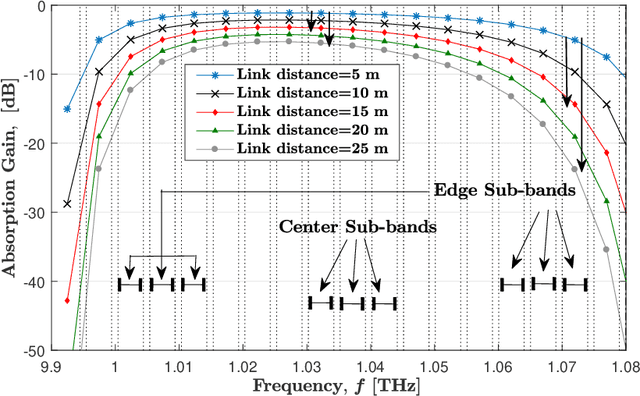

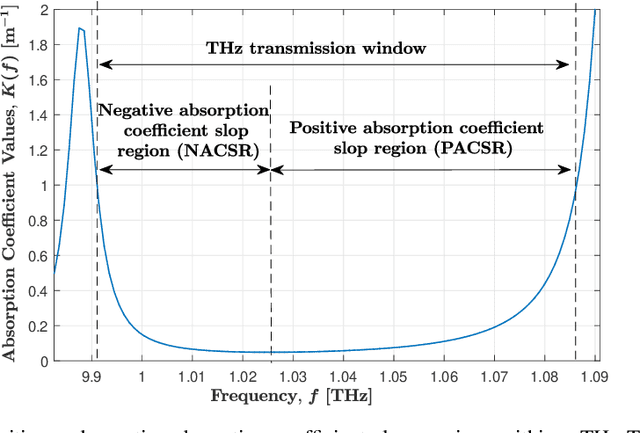

Spectrum Allocation with Adaptive Sub-band Bandwidth for Terahertz Communication Systems

Nov 10, 2021

Abstract:We study spectrum allocation for terahertz (THz) band communication (THzCom) systems, while considering the frequency and distance-dependent nature of THz channels. Different from existing studies, we explore multi-band-based spectrum allocation with adaptive sub-band bandwidth (ASB) by allowing the spectrum of interest to be divided into sub-bands with unequal bandwidths. Also, we investigate the impact of sub-band assignment on multi-connectivity (MC) enabled THzCom systems, where users associate and communicate with multiple access points simultaneously. We formulate resource allocation problems, with the primary focus on spectrum allocation, to determine sub-band assignment, sub-band bandwidth, and optimal transmit power. Thereafter, we propose reasonable approximations and transformations, and develop iterative algorithms based on the successive convex approximation technique to analytically solve the formulated problems. Aided by numerical results, we show that by enabling and optimizing ASB, significantly higher throughput can be achieved as compared to adopting equal sub-band bandwidth, and this throughput gain is most profound when the power budget constraint is more stringent. We also show that our sub-band assignment strategy in MC-enabled THzCom systems outperforms the state-of-the-art sub-band assignment strategies and the performance gain is most profound when the spectrum with the lowest average molecular absorption coefficient is selected during spectrum allocation.
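
The frequency- and distance-dependent nature of THz channels mentioned above is commonly captured by combining free-space spreading loss with an exponential molecular absorption term. The sketch below uses that common line-of-sight model as an assumption; the absorption coefficient values are placeholders for illustration, not data from the paper, and `thz_path_gain` is a hypothetical helper name.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def thz_path_gain(freq_hz, distance_m, absorption_per_m):
    """Line-of-sight path gain: free-space spreading loss multiplied by
    the molecular absorption loss exp(-k * d).
    absorption_per_m is the absorption coefficient k(f) at freq_hz;
    the caller supplies it (placeholder values are used below)."""
    spreading = (SPEED_OF_LIGHT / (4 * math.pi * freq_hz * distance_m)) ** 2
    absorption = math.exp(-absorption_per_m * distance_m)
    return spreading * absorption

# Same frequency and distance, two assumed absorption coefficients.
for k in (0.01, 0.5):
    gain_db = 10 * math.log10(thz_path_gain(300e9, 10.0, k))
    print(f"k = {k} 1/m -> path gain {gain_db:.1f} dB")
```

Because the absorption term grows exponentially with distance, sub-bands with a higher absorption coefficient lose much more gain at longer links, which is one reason unequal sub-band bandwidths can be worth considering.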

Utility Fairness for the Differentially Private Federated Learning

Sep 11, 2021

Abstract:Federated learning (FL) allows predictive model training on the sensed data in a wireless Internet of things (IoT) network evading data collection cost in terms of energy, time, and privacy. In this paper, for an FL setting, we model the learning gain achieved by an IoT device against its participation cost as its utility. The local model quality and the associated cost differ from device to device due to the device-heterogeneity which could be time-varying. We identify that this results in utility unfairness because the same global model is shared among the devices. In the vanilla FL setting, the master is unaware of devices' local model computation and transmission costs, thus it is unable to address the utility unfairness problem. In addition, a device may exploit this lack of knowledge at the master to intentionally reduce its expenditure and thereby boost its utility. We propose to control the quality of the global model shared with the devices, in each round, based on their contribution and expenditure. This is achieved by employing differential privacy to curtail global model divulgence based on the learning contribution. Furthermore, we devise adaptive computation and transmission policies for each device to control its expenditure in order to mitigate utility unfairness. Our results show that the proposed scheme reduces the standard deviation of the energy cost of devices by 99% in comparison to the benchmark scheme, while the standard deviation of the training loss of devices varies around 0.103.
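
One way to picture "curtailing global model divulgence" is to add more differential-privacy noise to the copy of the global model sent to a low-contribution device. The sketch below is a hypothetical illustration, not the authors' scheme: it uses the classical Gaussian mechanism noise scale (valid for epsilon below 1), assumes a sensitivity of 1, and invents a linear mapping from a contribution score in [0, 1] to epsilon. All function and parameter names are assumptions.

```python
import math
import random

def gaussian_sigma(epsilon, delta, sensitivity=1.0):
    """Noise standard deviation of the classical Gaussian mechanism,
    sigma = sqrt(2 ln(1.25 / delta)) * sensitivity / epsilon (epsilon < 1)."""
    return math.sqrt(2.0 * math.log(1.25 / delta)) * sensitivity / epsilon

def noisy_global_model(weights, contribution, delta=1e-5,
                       eps_min=0.1, eps_max=0.9, seed=None):
    """Return a noised copy of the global model for one device.
    Hypothetical rule: a higher contribution score earns a larger epsilon,
    hence less noise and a higher-quality model."""
    rng = random.Random(seed)
    score = min(max(contribution, 0.0), 1.0)
    epsilon = eps_min + (eps_max - eps_min) * score
    sigma = gaussian_sigma(epsilon, delta)
    return [w + rng.gauss(0.0, sigma) for w in weights], sigma

model = [0.2, -0.5, 1.0]
for score in (0.1, 0.9):
    _, sigma = noisy_global_model(model, score, seed=1)
    print(f"contribution {score}: noise std {sigma:.2f}")
```

In this toy rule the device with the lower contribution score receives a noisier model, which mirrors the idea of tying shared model quality to contribution.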
