Ryota Yoshihashi

VASCAR: Content-Aware Layout Generation via Visual-Aware Self-Correction

Dec 05, 2024

Abstract:Large language models (LLMs) have proven effective for layout generation due to their ability to produce structure-description languages, such as HTML or JSON, even without access to visual information. Recently, LLM providers have evolved these models into large vision-language models (LVLMs), which show prominent multi-modal understanding capabilities. How, then, can we leverage this multi-modal power for layout generation? To answer this, we propose Visual-Aware Self-Correction LAyout GeneRation (VASCAR) for LVLM-based content-aware layout generation. In our method, LVLMs iteratively refine their outputs with reference to rendered layout images, which are visualized as colored bounding boxes on poster backgrounds. In experiments, we demonstrate that our method, combined with Gemini and without any additional training, achieves state-of-the-art (SOTA) layout generation quality, outperforming both existing layout-specific generative models and other LLM-based methods.
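The iterative refinement described in this abstract can be pictured as a simple loop. The following is only a minimal sketch under stated assumptions: `self_correct_layout`, `render`, `refine`, and the `Box` tuple format are hypothetical names introduced for illustration, and the abstract does not specify the prompt, the number of iterations, or how candidates are selected.

```python
from typing import Callable, List, Tuple

# Hypothetical layout format: (label, left, top, width, height).
Box = Tuple[str, float, float, float, float]
Layout = List[Box]


def self_correct_layout(
    initial: Layout,
    render: Callable[[Layout], object],
    refine: Callable[[Layout, object], Layout],
    num_iters: int = 3,
) -> Layout:
    """Repeatedly render the current layout and ask a model to revise it.

    `render` stands in for drawing colored bounding boxes on the poster
    background; `refine` stands in for an LVLM call that sees the current
    layout together with its rendering. Both are assumptions.
    """
    layout = initial
    for _ in range(num_iters):
        image = render(layout)          # visual feedback for the model
        layout = refine(layout, image)  # model proposes a corrected layout
    return layout
```

Passing the renderer and the model call as plain callables keeps the sketch independent of any particular LVLM API or drawing library.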

Constant Rate Schedule: Constant-Rate Distributional Change for Efficient Training and Sampling in Diffusion Models

Nov 19, 2024

Abstract:We propose a noise schedule that ensures a constant rate of change in the probability distribution of diffused data throughout the diffusion process. To obtain this noise schedule, we measure the rate of change in the probability distribution of the forward process and use it to determine the noise schedule before training diffusion models. The functional form of the noise schedule is automatically determined and tailored to each dataset and type of diffusion model. We evaluate the effectiveness of our noise schedule on unconditional and class-conditional image generation tasks using the LSUN (bedroom/church/cat/horse), ImageNet, and FFHQ datasets. Through extensive experiments, we confirmed that our noise schedule broadly improves the performance of the diffusion models regardless of the dataset, sampler, number of function evaluations, or type of diffusion model.
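One way to picture a constant-rate schedule is as equal-increment spacing along the cumulative change. The sketch below is an illustration under assumptions, not the paper's procedure: `rate` is a hypothetical, non-negative function standing in for the measured rate of distributional change, and `constant_rate_timesteps` is a name introduced here. It integrates `rate` over [0, 1] and places timesteps so that each interval carries the same share of the total change.

```python
from bisect import bisect_left
from typing import Callable, List


def constant_rate_timesteps(
    rate: Callable[[float], float],
    num_steps: int,
    grid_size: int = 1000,
) -> List[float]:
    """Return num_steps + 1 timesteps in [0, 1] with equal cumulative change."""
    ts = [i / grid_size for i in range(grid_size + 1)]

    # Cumulative integral of the rate (trapezoidal rule on a uniform grid).
    cum = [0.0]
    for a, b in zip(ts[:-1], ts[1:]):
        cum.append(cum[-1] + 0.5 * (rate(a) + rate(b)) * (b - a))
    total = cum[-1]

    steps = []
    for k in range(num_steps + 1):
        target = total * k / num_steps
        # First grid index whose cumulative change reaches the target,
        # clamped so rounding error cannot run past the end of the grid.
        j = min(bisect_left(cum, target), grid_size)
        if j == 0:
            steps.append(ts[0])
            continue
        lo, hi = cum[j - 1], cum[j]
        frac = 0.0 if hi == lo else min(1.0, (target - lo) / (hi - lo))
        # Linear interpolation between neighboring grid points.
        steps.append(ts[j - 1] + frac * (ts[j] - ts[j - 1]))
    return steps
```

With a constant `rate` the result is a uniform grid; where `rate` is large, timesteps are packed more densely, so the change per step stays the same.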

Attention as Annotation: Generating Images and Pseudo-masks for Weakly Supervised Semantic Segmentation with Diffusion

Sep 04, 2023

Abstract:Although recent advancements in diffusion models have enabled high-fidelity and diverse image generation, training of discriminative models still largely depends on massive collections of real images and their manual annotation. Here, we present a training method for semantic segmentation that relies on neither real images nor manual annotation. The proposed method, attn2mask, utilizes images generated by a text-to-image diffusion model in combination with its internal text-to-image cross-attention as supervisory pseudo-masks. Since the text-to-image generator is trained with image-caption pairs but without pixel-wise labels, attn2mask can be regarded as a weakly supervised segmentation method overall. Experiments show that attn2mask achieves promising results on PASCAL VOC despite using no real training data for segmentation at all, and it is also useful for scaling up segmentation to a scenario with more classes, i.e., ImageNet segmentation. It also shows adaptation ability with LoRA-based fine-tuning, which enables transfer to a distant domain, i.e., Cityscapes.
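As a rough illustration of turning an attention map into a pseudo-mask, the sketch below min-max normalizes a 2D map and thresholds it. This is an assumption made for illustration: the abstract does not say how attention is converted into masks, and `attention_to_mask` and its `threshold` parameter are hypothetical.

```python
from typing import List


def attention_to_mask(
    attn: List[List[float]], threshold: float = 0.5
) -> List[List[int]]:
    """Binarize a 2D attention map after min-max normalization to [0, 1]."""
    flat = [v for row in attn for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A flat map carries no localization signal; return an empty mask.
        return [[0 for _ in row] for row in attn]
    return [
        [1 if (v - lo) / span >= threshold else 0 for v in row]
        for row in attn
    ]
```

For example, `attention_to_mask([[0.0, 0.2], [0.8, 1.0]])` marks only the bottom row as foreground.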

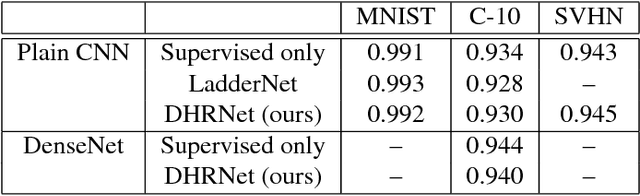

Ladder Siamese Network: a Method and Insights for Multi-level Self-Supervised Learning

Nov 25, 2022

Abstract:Siamese-network-based self-supervised learning (SSL) suffers from slow convergence and instability in training. To alleviate this, we propose a framework that exploits intermediate self-supervision at each stage of deep nets, called the Ladder Siamese Network. Our self-supervised losses encourage the intermediate layers to be consistent across different data augmentations of a single sample, which facilitates training progress and enhances the discriminative ability of the intermediate layers themselves. While some existing work has already utilized multi-level self-supervision in SSL, ours is different in that 1) we reveal its usefulness with non-contrastive Siamese frameworks from both theoretical and empirical viewpoints, and 2) ours improves image-level classification, instance-level detection, and pixel-level segmentation simultaneously. Experiments show that the proposed framework can improve BYOL baselines by 1.0 percentage points in ImageNet linear classification, 1.2 percentage points in COCO detection, and 3.1 percentage points in PASCAL VOC segmentation. In comparison with state-of-the-art methods, our Ladder-based model achieves competitive and balanced performance on all tested benchmarks without causing large degradation on any one of them.

Context-Free TextSpotter for Real-Time and Mobile End-to-End Text Detection and Recognition

Jun 10, 2021

Abstract:In the deployment of scene-text spotting systems on mobile platforms, lightweight models with low computation are preferable. In concept, end-to-end (E2E) text spotting is suitable for such purposes because it performs text detection and recognition in a single model. However, current state-of-the-art E2E methods rely on heavy feature extractors, recurrent sequence modeling, and complex shape aligners to pursue accuracy, which means their computations are still heavy. We explore the opposite direction: How far can we go without bells and whistles in E2E text spotting? To this end, we propose a text-spotting method that consists of simple convolutions and a few post-processing steps, named Context-Free TextSpotter. Experiments using standard benchmarks show that Context-Free TextSpotter achieves real-time text spotting on a GPU with only three million parameters, which makes it the smallest and fastest among existing deep text spotters, with an acceptable degradation in transcription quality compared to heavier ones. Further, we demonstrate that our text spotter can run on a smartphone with affordable latency, which is valuable for building stand-alone OCR applications.

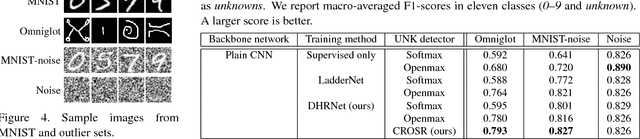

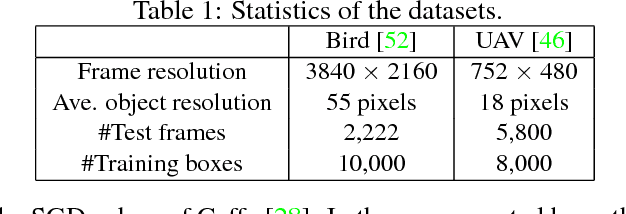

Finding a Needle in a Haystack: Tiny Flying Object Detection in 4K Videos using a Joint Detection-and-Tracking Approach

May 18, 2021

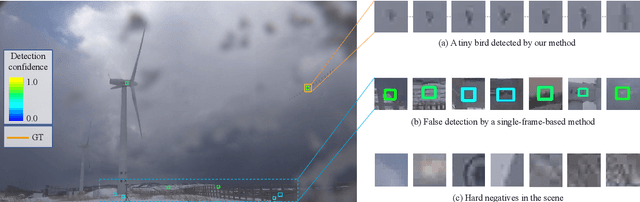

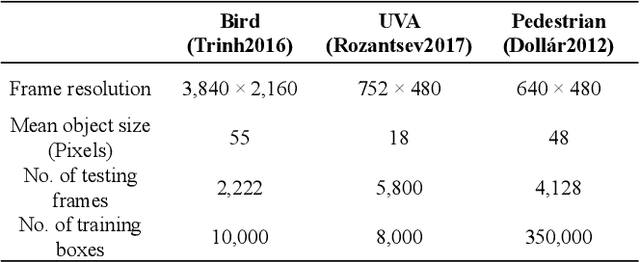

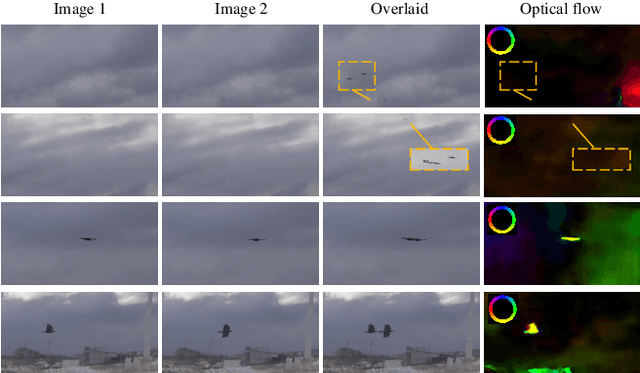

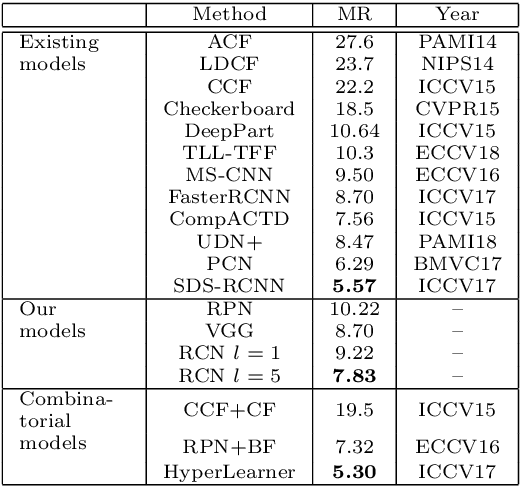

Abstract:Detecting tiny objects in a high-resolution video is challenging because the visual information is limited and unreliable. Specifically, the challenges include the very low resolution of the objects, MPEG artifacts due to compression, and a large search area with many hard negatives. Tracking is equally difficult because of the unreliable appearance and the unreliable motion estimation. Fortunately, we found that combining these two challenging tasks yields mutual benefits. Following this idea, in this paper, we present a neural network model called the Recurrent Correlational Network, where detection and tracking are jointly performed over a multi-frame representation learned through a single, trainable, and end-to-end network. The framework exploits a convolutional long short-term memory network for learning informative appearance changes for detection, while the learned representation is shared in tracking for enhancing its performance. In experiments with datasets containing images of scenes with small flying objects, such as birds and unmanned aerial vehicles, the proposed method yielded consistent improvements in detection performance over deep single-frame detectors and existing motion-based detectors. Furthermore, our network performs as well as state-of-the-art generic object trackers when evaluated as a tracker on a bird image dataset.

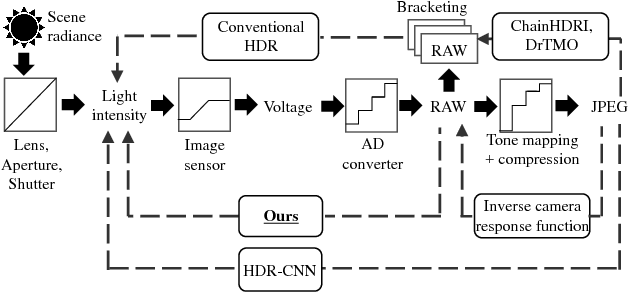

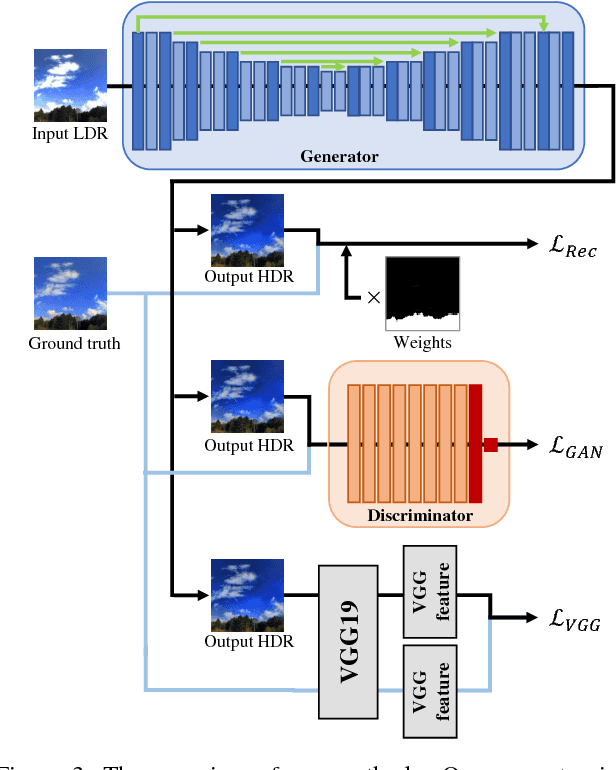

Hybrid Loss for Learning Single-Image-based HDR Reconstruction

Dec 18, 2018

Abstract:This paper tackles high-dynamic-range (HDR) image reconstruction given only a single low-dynamic-range (LDR) image as input. While the existing methods focus on minimizing the mean-squared-error (MSE) between the target and reconstructed images, we minimize a hybrid loss that consists of perceptual and adversarial losses in addition to an HDR-reconstruction loss. The reconstruction loss is better suited to HDR than MSE because it puts more weight on both over- and under-exposed areas, which keeps the reconstruction faithful to the input. Perceptual loss enables the networks to utilize knowledge about objects and image structure for recovering the intensity gradients of saturated and grossly quantized areas. Adversarial loss helps to select the most plausible appearance from multiple solutions. The hybrid loss that combines all three losses is calculated in the logarithmic space of image intensity so that the outputs retain a large dynamic range while the learning becomes tractable. Comparative experiments conducted with other state-of-the-art methods demonstrated that our method produces a leap in image quality.

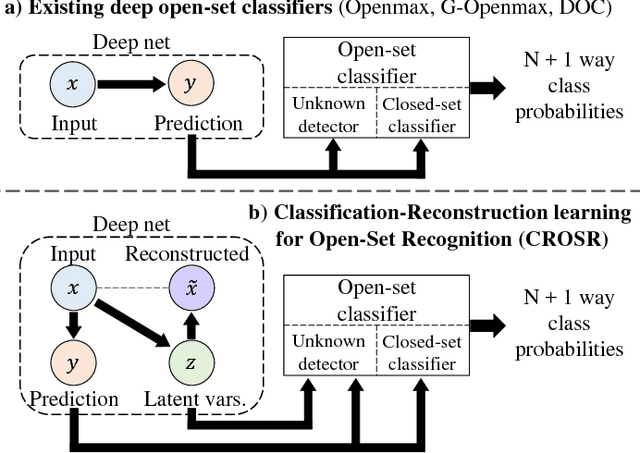

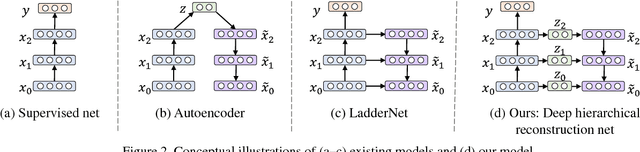

Classification-Reconstruction Learning for Open-Set Recognition

Dec 17, 2018

Abstract:Open-set classification is a problem of handling "unknown" classes that are not contained in the training dataset, whereas traditional classifiers assume that only known classes appear in the test environment. Existing open-set classifiers rely on deep networks trained in a supervised manner on known classes in the training set; this causes specialization of learned representations to known classes and makes it hard to distinguish unknowns from knowns. In contrast, we train networks for joint classification and reconstruction of input data. This enhances the learned representation so as to preserve information useful for separating unknowns from knowns, as well as to discriminate classes of knowns. Our novel Classification-Reconstruction learning for Open-Set Recognition (CROSR) utilizes latent representations for reconstruction and enables robust unknown detection without harming the known-class classification accuracy. Extensive experiments reveal that the proposed method outperforms existing deep open-set classifiers in multiple standard datasets and is robust to diverse outliers.

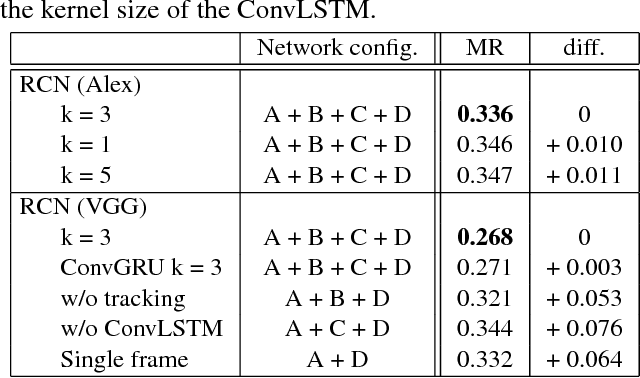

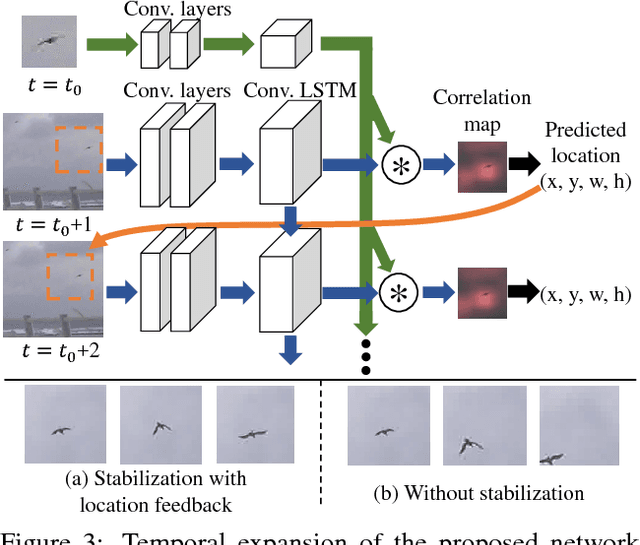

Differentiating Objects by Motion: Joint Detection and Tracking of Small Flying Objects

May 15, 2018

Abstract:While generic object detection has achieved large improvements with rich feature hierarchies from deep nets, detecting small objects with poor visual cues remains challenging. Motion cues from multiple frames may be more informative for detecting such hard-to-distinguish objects in each frame. However, how to encode discriminative motion patterns, such as deformations and pose changes that characterize objects, has remained an open question. To learn them and thereby realize small object detection, we present a neural model called the Recurrent Correlational Network, where detection and tracking are jointly performed over a multi-frame representation learned through a single, trainable, and end-to-end network. A convolutional long short-term memory network is utilized for learning informative appearance change for detection, while learned representation is shared in tracking for enhancing its performance. In experiments with datasets containing images of scenes with small flying objects, such as birds and unmanned aerial vehicles, the proposed method yielded consistent improvements in detection performance over deep single-frame detectors and existing motion-based detectors. Furthermore, our network performs as well as state-of-the-art generic object trackers when it was evaluated as a tracker on the bird dataset.

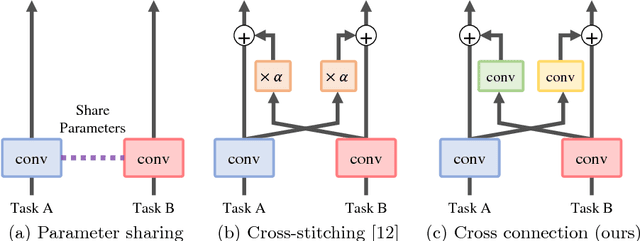

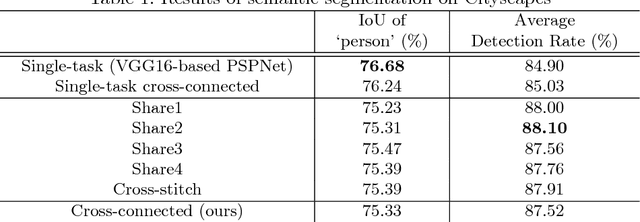

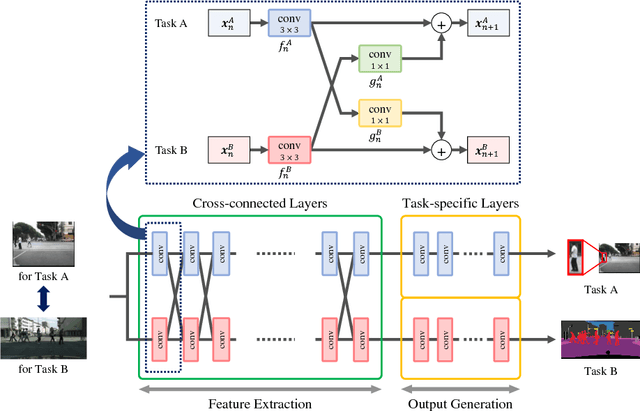

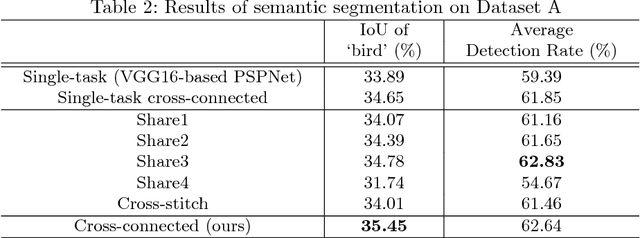

Cross-connected Networks for Multi-task Learning of Detection and Segmentation

May 15, 2018

Abstract:Multi-task learning improves generalization performance by sharing knowledge among related tasks. Existing models are for task combinations annotated on the same dataset, while there are cases where multiple datasets are available for each task. How to utilize knowledge of successful single-task CNNs that are trained on each dataset has been explored less than multi-task learning with a single dataset. We propose a cross-connected CNN, a new architecture that connects single-task CNNs through convolutional layers, which transfer useful information for the counterpart. We evaluated our proposed architecture on a combination of detection and segmentation using two datasets. Experiments on pedestrians show our CNN achieved a higher detection performance compared to baseline CNNs, while maintaining high quality for segmentation. It is the first known attempt to tackle multi-task learning with different training datasets between detection and segmentation. Experiments with wild birds demonstrate how our CNN learns general representations from limited datasets.
