Atsuki Osanai

SCAdapter: Content-Style Disentanglement for Diffusion Style Transfer

Dec 15, 2025

Abstract:Diffusion models have emerged as the leading approach for style transfer, yet they struggle with photo-realistic transfers, often producing painting-like results or missing detailed stylistic elements. Current methods inadequately address the unwanted influence of the content image's original style and of the style reference's content features. We introduce SCAdapter, a novel technique leveraging CLIP image space to effectively separate and integrate content and style features. Our key innovation systematically extracts pure content from content images and style elements from style references, ensuring authentic transfers. This approach is enhanced through three components: Controllable Style Adaptive Instance Normalization (CSAdaIN) for precise multi-style blending, KVS Injection for targeted style integration, and a style transfer consistency objective maintaining process coherence. Comprehensive experiments demonstrate SCAdapter significantly outperforms state-of-the-art methods, including both conventional and diffusion-based baselines. By eliminating DDIM inversion and inference-stage optimization, our method achieves at least $2\times$ faster inference than other diffusion-based approaches, making it both more effective and efficient for practical applications.

VASCAR: Content-Aware Layout Generation via Visual-Aware Self-Correction

Dec 05, 2024

Abstract:Large language models (LLMs) have proven effective for layout generation due to their ability to produce structure-description languages, such as HTML or JSON, even without access to visual information. Recently, LLM providers have evolved these models into large vision-language models (LVLMs), which show prominent multi-modal understanding capabilities. How, then, can we leverage this multi-modal power for layout generation? To answer this, we propose Visual-Aware Self-Correction LAyout GeneRation (VASCAR) for LVLM-based content-aware layout generation. In our method, LVLMs iteratively refine their outputs with reference to rendered layout images, which are visualized as colored bounding boxes on poster backgrounds. In experiments, we demonstrate that our method, combined with Gemini and without any additional training, achieves state-of-the-art (SOTA) layout generation quality, outperforming both existing layout-specific generative models and other LLM-based methods.

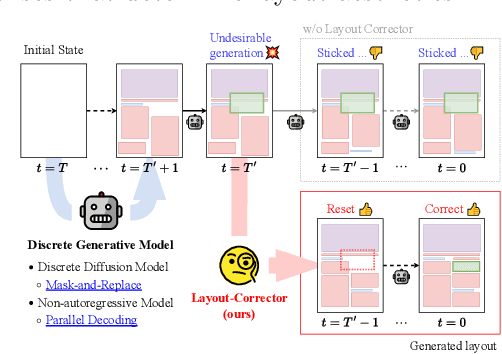

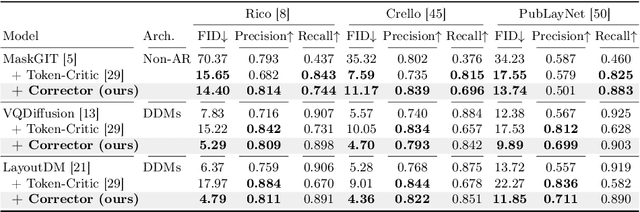

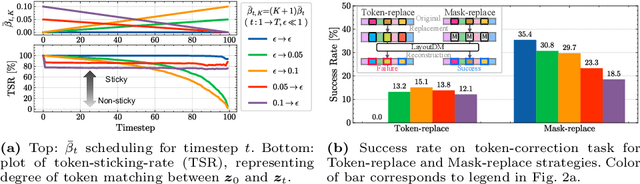

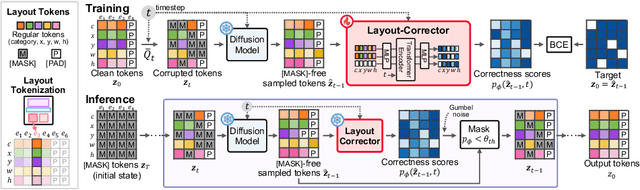

Layout-Corrector: Alleviating Layout Sticking Phenomenon in Discrete Diffusion Model

Sep 25, 2024

Abstract:Layout generation is the task of synthesizing a harmonious layout with elements characterized by attributes such as category, position, and size. Human designers experiment with the placement and modification of elements to create aesthetic layouts; however, we observed that current discrete diffusion models (DDMs) struggle to correct inharmonious layouts after they have been generated. In this paper, we first provide novel insights into the layout sticking phenomenon in DDMs and then propose a simple yet effective layout-assessment module, Layout-Corrector, which works in conjunction with existing DDMs to address the layout sticking problem. We present a learning-based module capable of identifying inharmonious elements within layouts, considering overall layout harmony characterized by complex composition. During the generation process, Layout-Corrector evaluates the correctness of each token in the generated layout, reinitializing those with low scores to the ungenerated state. The DDM then uses the high-scored tokens as clues to regenerate the harmonized tokens. Layout-Corrector, tested on common benchmarks, consistently boosts layout-generation performance when used in conjunction with various state-of-the-art DDMs. Furthermore, our extensive analysis demonstrates that Layout-Corrector (1) successfully identifies erroneous tokens, (2) facilitates control over the fidelity-diversity trade-off, and (3) significantly mitigates the performance drop associated with fast sampling.
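
The reinitialization step described in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `remask_low_scores`, the `MASK_ID` constant, and the fixed score threshold are all assumptions made for illustration, and the per-token scores are taken as given rather than produced by a learned corrector.

```python
from typing import List, Sequence

# Hypothetical id standing in for the "ungenerated" (masked) token state.
MASK_ID = -1


def remask_low_scores(
    tokens: Sequence[int],
    scores: Sequence[float],
    threshold: float,
    mask_id: int = MASK_ID,
) -> List[int]:
    """Reset tokens whose correctness score falls below `threshold`.

    `scores` is assumed to hold one correctness score per token, as a
    corrector module might output. Tokens at or above the threshold are
    kept so a generator could condition on them when refilling the rest.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    return [t if s >= threshold else mask_id for t, s in zip(tokens, scores)]


if __name__ == "__main__":
    layout_tokens = [5, 7, 9]
    token_scores = [0.9, 0.2, 0.6]
    print(remask_low_scores(layout_tokens, token_scores, threshold=0.5))
```

In a full pipeline, the masked positions would be handed back to the DDM for regeneration; that step is omitted here because the abstract does not specify it.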
