R. Kenny Jones

ShapeLib: designing a library of procedural 3D shape abstractions with Large Language Models

Feb 13, 2025

Abstract:Procedural representations are desirable, versatile, and popular shape encodings. Authoring them, either manually or using data-driven procedures, remains challenging, as a well-designed procedural representation should be compact, intuitive, and easy to manipulate. A long-standing problem in shape analysis studies how to discover a reusable library of procedural functions, with semantically aligned exposed parameters, that can explain an entire shape family. We present ShapeLib as the first method that leverages the priors of frontier LLMs to design a library of 3D shape abstraction functions. Our system accepts two forms of design intent: text descriptions of functions to include in the library and a seed set of exemplar shapes. We discover procedural abstractions that match this design intent by proposing, and then validating, function applications and implementations. The discovered shape functions in the library are not only expressive but also generalize beyond the seed set to a full family of shapes. We train a recognition network that learns to infer shape programs based on our library from different visual modalities (primitives, voxels, point clouds). Our shape functions have parameters that are semantically interpretable and can be modified to produce plausible shape variations. We show that this allows inferred programs to be successfully manipulated by an LLM given a text prompt. We evaluate ShapeLib on different datasets and show clear advantages over existing methods and alternative formulations.

Learning to Edit Visual Programs with Self-Supervision

Jun 04, 2024

Abstract:We design a system that learns how to edit visual programs. Our edit network consumes a complete input program and a visual target. From this input, we task our network with predicting a local edit operation that could be applied to the input program to improve its similarity to the target. In order to apply this scheme for domains that lack program annotations, we develop a self-supervised learning approach that integrates this edit network into a bootstrapped finetuning loop along with a network that predicts entire programs in one-shot. Our joint finetuning scheme, when coupled with an inference procedure that initializes a population from the one-shot model and evolves members of this population with the edit network, helps to infer more accurate visual programs. Over multiple domains, we experimentally compare our method against the alternative of using only the one-shot model, and find that even under equal search-time budgets, our editing-based paradigm provides significant advantages.
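The inference procedure described in the abstract above (seed a population from the one-shot model, then evolve its members with the edit network) can be illustrated with a toy sketch. Everything here is a hypothetical stand-in rather than the paper's implementation: programs are short integer lists, `one_shot` returns random guesses, `propose_edit` is an oracle-like function that moves one mismatched entry a step toward the target, and `score` is negative L1 distance.

```python
import random

def score(program, target):
    # Higher is better: negative L1 distance to the target.
    return -sum(abs(p - t) for p, t in zip(program, target))

def one_shot(target, rng):
    # Stand-in for a one-shot model: a random guess of the right length.
    return [rng.randint(0, 5) for _ in target]

def propose_edit(program, target, rng):
    # Stand-in for an edit network: one local edit to a mismatched entry.
    wrong = [i for i, (p, t) in enumerate(zip(program, target)) if p != t]
    if not wrong:
        return list(program)
    i = rng.choice(wrong)
    edited = list(program)
    edited[i] += 1 if target[i] > edited[i] else -1
    return edited

def evolve(target, pop_size=8, rounds=20, seed=0):
    rng = random.Random(seed)
    # Initialize the population from the one-shot stand-in.
    population = [one_shot(target, rng) for _ in range(pop_size)]
    for _ in range(rounds):
        # Propose one local edit per member, then keep the best pop_size programs.
        children = [propose_edit(p, target, rng) for p in population]
        population = sorted(population + children,
                            key=lambda p: score(p, target), reverse=True)[:pop_size]
    return population[0]

target = [3, 0, 5, 2]
best = evolve(target)
```

Because selection keeps parents alongside children, the best score in the population never decreases from one round to the next; in this toy setting the edit stand-in always makes progress, so the search reaches the target within the round budget.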

ParSEL: Parameterized Shape Editing with Language

May 31, 2024

Abstract:The ability to edit 3D assets from natural language presents a compelling paradigm to aid in the democratization of 3D content creation. However, while natural language is often effective at communicating general intent, it is poorly suited for specifying precise manipulation. To address this gap, we introduce ParSEL, a system that enables controllable editing of high-quality 3D assets from natural language. Given a segmented 3D mesh and an editing request, ParSEL produces a parameterized editing program. Adjusting the program parameters allows users to explore shape variations with precise control over the magnitudes of edits. To infer editing programs that align with an input edit request, we leverage the abilities of large language models (LLMs). However, while we find that LLMs excel at identifying initial edit operations, they often fail to infer complete editing programs, and produce outputs that violate shape semantics. To overcome this issue, we introduce Analytical Edit Propagation (AEP), an algorithm which extends a seed edit with additional operations until a complete editing program has been formed. Unlike prior methods, AEP searches for analytical editing operations compatible with a range of possible user edits through the integration of computer algebra systems for geometric analysis. Experimentally, we demonstrate ParSEL's effectiveness in enabling controllable editing of 3D objects through natural language requests over alternative system designs.

Learning to Infer Generative Template Programs for Visual Concepts

Mar 20, 2024

Abstract:People grasp flexible visual concepts from a few examples. We explore a neurosymbolic system that learns how to infer programs that capture visual concepts in a domain-general fashion. We introduce Template Programs: programmatic expressions from a domain-specific language that specify structural and parametric patterns common to an input concept. Our framework supports multiple concept-related tasks, including few-shot generation and co-segmentation through parsing. We develop a learning paradigm that allows us to train networks that infer Template Programs directly from visual datasets that contain concept groupings. We run experiments across multiple visual domains: 2D layouts, Omniglot characters, and 3D shapes. We find that our method outperforms task-specific alternatives, and performs competitively against domain-specific approaches for the limited domains where they exist.

Improving Unsupervised Visual Program Inference with Code Rewriting Families

Sep 26, 2023

Abstract:Programs offer compactness and structure that makes them an attractive representation for visual data. We explore how code rewriting can be used to improve systems for inferring programs from visual data. We first propose Sparse Intermittent Rewrite Injection (SIRI), a framework for unsupervised bootstrapped learning. SIRI sparsely applies code rewrite operations over a dataset of training programs, injecting the improved programs back into the training set. We design a family of rewriters for visual programming domains: parameter optimization, code pruning, and code grafting. For three shape programming languages in 2D and 3D, we show that using SIRI with our family of rewriters improves performance: better reconstructions and faster convergence rates, compared with bootstrapped learning methods that do not use rewriters or use them naively. Finally, we demonstrate that our family of rewriters can be effectively used at test time to improve the output of SIRI predictions. For 2D and 3D CSG, we outperform or match the reconstruction performance of recent domain-specific neural architectures, while producing more parsimonious programs that use significantly fewer primitives.
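As a rough illustration of the sparse injection step described in the abstract above, the sketch below rewrites only a random subset of training programs and keeps a rewrite only when it improves the score. This is a toy under stated assumptions, not the SIRI implementation: programs are integer lists, `optimize_params` is a hypothetical stand-in for a parameter-optimization rewriter, and `rewrite_prob` is an assumed knob for sparsity.

```python
import random

def score(program, target):
    # Higher is better: negative L1 reconstruction error.
    return -sum(abs(p - t) for p, t in zip(program, target))

def optimize_params(program, target):
    # Hypothetical rewriter: nudge each parameter one step toward the target.
    return [p + (t > p) - (t < p) for p, t in zip(program, target)]

def inject_rewrites(programs, targets, rewriters, rewrite_prob, rng):
    updated = []
    for prog, tgt in zip(programs, targets):
        if rng.random() < rewrite_prob:  # sparse: only some entries are rewritten
            for rewrite in rewriters:
                cand = rewrite(prog, tgt)
                if score(cand, tgt) > score(prog, tgt):
                    prog = cand  # keep a rewrite only if it improves the score
        updated.append(prog)
    return updated

rng = random.Random(0)
targets = [[3, 1], [0, 4], [2, 2]]
programs = [[0, 0], [0, 0], [0, 0]]
before = [score(p, t) for p, t in zip(programs, targets)]
programs = inject_rewrites(programs, targets, [optimize_params], rewrite_prob=0.5, rng=rng)
after = [score(p, t) for p, t in zip(programs, targets)]
```

The accept-only-if-better check means the training set can only stay the same or improve under the chosen score, regardless of which entries are selected for rewriting.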

ShapeCoder: Discovering Abstractions for Visual Programs from Unstructured Primitives

May 09, 2023

Abstract:Programs are an increasingly popular representation for visual data, exposing compact, interpretable structure that supports manipulation. Visual programs are usually written in domain-specific languages (DSLs). Finding "good" programs, that only expose meaningful degrees of freedom, requires access to a DSL with a "good" library of functions, both of which are typically authored by domain experts. We present ShapeCoder, the first system capable of taking a dataset of shapes, represented with unstructured primitives, and jointly discovering (i) useful abstraction functions and (ii) programs that use these abstractions to explain the input shapes. The discovered abstractions capture common patterns (both structural and parametric) across the dataset, so that programs rewritten with these abstractions are more compact, and expose fewer degrees of freedom. ShapeCoder improves upon previous abstraction discovery methods, finding better abstractions, for more complex inputs, under less stringent input assumptions. This is principally made possible by two methodological advancements: (a) a shape to program recognition network that learns to solve sub-problems and (b) the use of e-graphs, augmented with a conditional rewrite scheme, to determine when abstractions with complex parametric expressions can be applied, in a tractable manner. We evaluate ShapeCoder on multiple datasets of 3D shapes, where primitive decompositions are either parsed from manual annotations or produced by an unsupervised cuboid abstraction method. In all domains, ShapeCoder discovers a library of abstractions that capture high-level relationships, remove extraneous degrees of freedom, and achieve better dataset compression compared with alternative approaches. Finally, we investigate how programs rewritten to use discovered abstractions prove useful for downstream tasks.

SHRED: 3D Shape Region Decomposition with Learned Local Operations

Jun 07, 2022

Abstract:We present SHRED, a method for 3D SHape REgion Decomposition. SHRED takes a 3D point cloud as input and uses learned local operations to produce a segmentation that approximates fine-grained part instances. We endow SHRED with three decomposition operations: splitting regions, fixing the boundaries between regions, and merging regions together. Modules are trained independently and locally, allowing SHRED to generate high-quality segmentations for categories not seen during training. We train and evaluate SHRED with fine-grained segmentations from PartNet; using its merge-threshold hyperparameter, we show that SHRED produces segmentations that better respect ground-truth annotations compared with baseline methods, at any desired decomposition granularity. Finally, we demonstrate that SHRED is useful for downstream applications, out-performing all baselines on zero-shot fine-grained part instance segmentation and few-shot fine-grained semantic segmentation when combined with methods that learn to label shape regions.

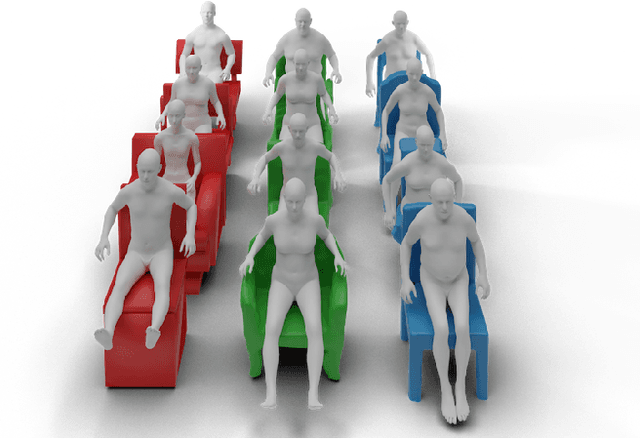

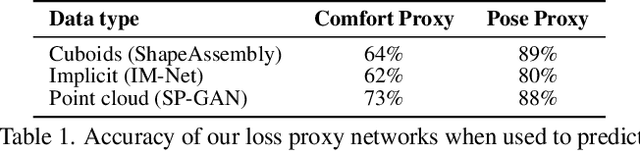

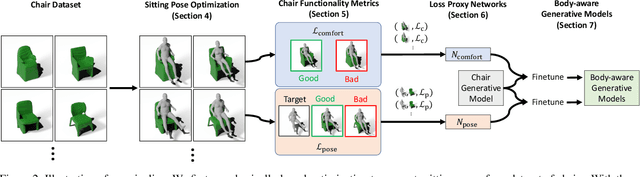

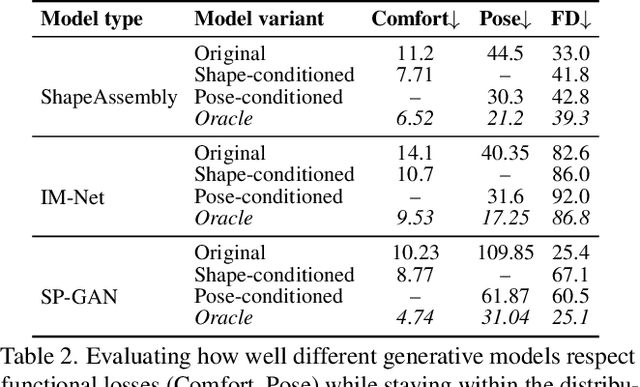

Learning Body-Aware 3D Shape Generative Models

Dec 16, 2021

Abstract:The shape of many objects in the built environment is dictated by their relationships to the human body: how will a person interact with this object? Existing data-driven generative models of 3D shapes produce plausible objects but do not reason about the relationship of those objects to the human body. In this paper, we learn body-aware generative models of 3D shapes. Specifically, we train generative models of chairs, a ubiquitous shape category, which can be conditioned on a given body shape or sitting pose. The body-shape-conditioned models produce chairs which will be comfortable for a person with the given body shape; the pose-conditioned models produce chairs which accommodate the given sitting pose. To train these models, we define a "sitting pose matching" metric and a novel "sitting comfort" metric. Calculating these metrics requires an expensive optimization to sit the body into the chair, which is too slow to be used as a loss function for training a generative model. Thus, we train neural networks to efficiently approximate these metrics. We use our approach to train three body-aware generative shape models: a structured part-based generator, a point cloud generator, and an implicit surface generator. In all cases, our approach produces models which adapt their output chair shapes to input human body specifications.

The Neurally-Guided Shape Parser: A Monte Carlo Method for Hierarchical Labeling of Over-segmented 3D Shapes

Jun 22, 2021

Abstract:Many learning-based 3D shape semantic segmentation methods assign labels to shape atoms (e.g. points in a point cloud or faces in a mesh) with a single-pass approach trained in an end-to-end fashion. Such methods achieve impressive performance but require large amounts of labeled training data. This paradigm entangles two separable subproblems: (1) decomposing a shape into regions and (2) assigning semantic labels to these regions. We claim that disentangling these subproblems reduces the labeled data burden: (1) region decomposition requires no semantic labels and could be performed in an unsupervised fashion, and (2) labeling shape regions instead of atoms results in a smaller search space and should be learnable with less labeled training data. In this paper, we investigate this second claim by presenting the Neurally-Guided Shape Parser (NGSP), a method that learns how to assign semantic labels to regions of an over-segmented 3D shape. We solve this problem via MAP inference, modeling the posterior probability of a labeling assignment conditioned on an input shape. We employ a Monte Carlo importance sampling approach guided by a neural proposal network, a search-based approach made feasible by assuming the input shape is decomposed into discrete regions. We evaluate NGSP on the task of hierarchical semantic segmentation on manufactured 3D shapes from PartNet. We find that NGSP delivers significant performance improvements over baselines that learn to label shape atoms and then aggregate predictions for each shape region, especially in low-data regimes. Finally, we demonstrate that NGSP is robust to region granularity, as it maintains strong segmentation performance even as the regions undergo significant corruption.
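The search over region labelings described in the abstract above can be sketched in a much simplified form: draw candidate labelings from a proposal distribution, score each one, and keep the best. This is a plain sample-and-rescore loop, not the paper's importance sampling procedure, and all names and numbers (`proposal`, `log_score`, the agreement bonus) are hypothetical.

```python
import math
import random

def sample_best_labeling(proposal, log_score, num_samples=200, seed=0):
    rng = random.Random(seed)
    num_labels = len(proposal[0])
    best, best_score = None, -math.inf
    for _ in range(num_samples):
        # Draw one label per region from the proposal distribution.
        labeling = tuple(rng.choices(range(num_labels), weights=w)[0] for w in proposal)
        s = log_score(labeling)
        if s > best_score:
            best, best_score = labeling, s
    return best, best_score

# Hypothetical per-region label probabilities for 3 regions and 2 labels.
proposal = [[0.7, 0.3], [0.4, 0.6], [0.5, 0.5]]

def log_score(labeling):
    # Toy unnormalized log posterior: proposal term plus a bonus when neighbours agree.
    unary = sum(math.log(proposal[i][l]) for i, l in enumerate(labeling))
    agree = sum(a == b for a, b in zip(labeling, labeling[1:]))
    return unary + 0.5 * agree

best, best_score = sample_best_labeling(proposal, log_score)
```

Working over a handful of discrete regions rather than thousands of points is what keeps this kind of search small: here there are only 2^3 candidate labelings in total.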

ShapeMOD: Macro Operation Discovery for 3D Shape Programs

Apr 13, 2021

Abstract:A popular way to create detailed yet easily controllable 3D shapes is via procedural modeling, i.e. generating geometry using programs. Such programs consist of a series of instructions along with their associated parameter values. To fully realize the benefits of this representation, a shape program should be compact and only expose degrees of freedom that allow for meaningful manipulation of output geometry. One way to achieve this goal is to design higher-level macro operators that, when executed, expand into a series of commands from the base shape modeling language. However, manually authoring such macros, much like shape programs themselves, is difficult and largely restricted to domain experts. In this paper, we present ShapeMOD, an algorithm for automatically discovering macros that are useful across large datasets of 3D shape programs. ShapeMOD operates on shape programs expressed in an imperative, statement-based language. It is designed to discover macros that make programs more compact by minimizing the number of function calls and free parameters required to represent an input shape collection. We run ShapeMOD on multiple collections of programs expressed in a domain-specific language for 3D shape structures. We show that it automatically discovers a concise set of macros that abstract out common structural and parametric patterns that generalize over large shape collections. We also demonstrate that the macros found by ShapeMOD improve performance on downstream tasks including shape generative modeling and inferring programs from point clouds. Finally, we conduct a user study that indicates that ShapeMOD's discovered macros make interactive shape editing more efficient.
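To make the compactness objective in the abstract above concrete, the sketch below counts function calls and free parameters for a tiny program written with and without a macro. The weighted sum, the `cuboid` command, and the `mirrored_legs` macro are illustrative assumptions; the abstract only states that both quantities are minimized.

```python
def program_cost(program, call_weight=1.0, param_weight=1.0):
    # Cost = weighted number of function calls plus weighted number of free parameters.
    calls = len(program)
    params = sum(len(args) for _, args in program)
    return call_weight * calls + param_weight * params

def expand(program, macros):
    # Expand macro calls into commands of the base language.
    out = []
    for name, args in program:
        if name in macros:
            out.extend(macros[name](*args))
        else:
            out.append((name, args))
    return out

# Hypothetical macro: two legs of equal height mirrored across x = 0.
macros = {
    "mirrored_legs": lambda x, h: [("cuboid", (x, 0.0, h)), ("cuboid", (-x, 0.0, h))],
}

base = [("cuboid", (0.4, 0.0, 0.5)), ("cuboid", (-0.4, 0.0, 0.5))]
with_macro = [("mirrored_legs", (0.4, 0.5))]

base_cost = program_cost(base)         # 2 calls + 6 parameters
macro_cost = program_cost(with_macro)  # 1 call + 2 parameters
```

Both programs expand to the same geometry, but the macro version exposes only the two parameters that vary meaningfully (leg offset and height), which is the kind of saving the objective rewards.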
