Quan-Dung Pham

VinBrain JSC., Vietnam

The 2nd Workshop on Maritime Computer Vision 2024

Nov 23, 2023

Abstract: The 2nd Workshop on Maritime Computer Vision (MaCVi) 2024 addresses maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV). Three challenge categories are considered: (i) UAV-based Maritime Object Tracking with Re-identification, (ii) USV-based Maritime Obstacle Segmentation and Detection, and (iii) USV-based Maritime Boat Tracking. The USV-based Maritime Obstacle Segmentation and Detection category features three sub-challenges, including a new embedded challenge addressing efficient inference on real-world embedded devices. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 195 submissions. All datasets, evaluation code, and the leaderboard are available to the public at https://macvi.org/workshop/macvi24.

UWA360CAM: A 360$^{\circ}$ 24/7 Real-Time Streaming Camera System for Underwater Applications

Sep 30, 2023

Abstract: An omnidirectional camera is a cost-effective and information-rich sensor highly suitable for many marine applications and the ocean scientific community, encompassing several domains such as augmented reality, mapping, motion estimation, visual surveillance, and simultaneous localization and mapping. However, designing and constructing a high-quality 360$^{\circ}$ real-time streaming camera system for underwater applications is a challenging problem due to technical complexity in several aspects, including sensor resolution, wide field of view, power supply, optical design, system calibration, and overheating management. This paper presents a novel and comprehensive system that addresses the complexities associated with the design, construction, and implementation of a fully functional 360$^{\circ}$ real-time streaming camera system specifically tailored for underwater environments. Our proposed system, UWA360CAM, can stream video in real time, operate 24/7, and capture 360$^{\circ}$ underwater panorama images. Notably, our work is the first to provide a detailed and replicable account of this system. The experiments provide a comprehensive analysis of our proposed system.

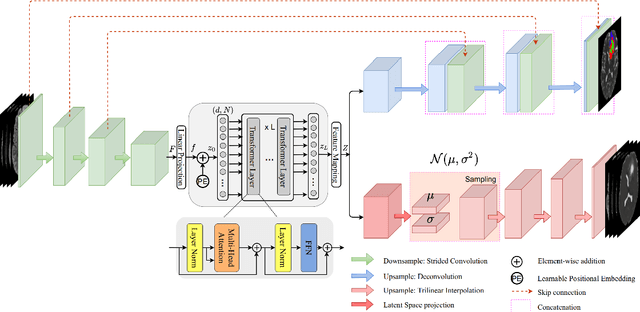

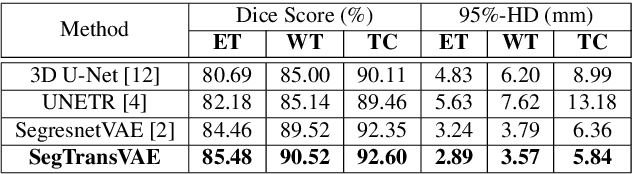

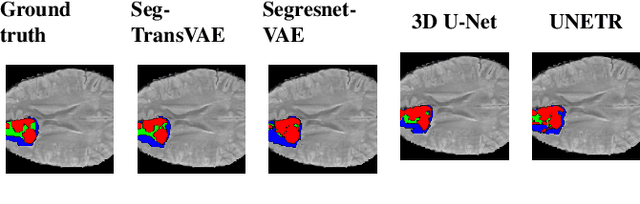

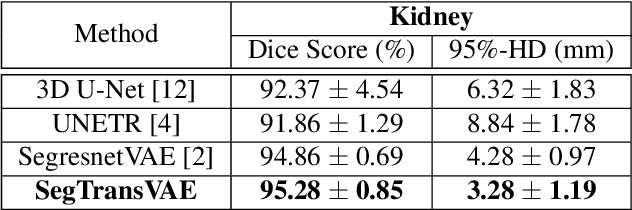

SegTransVAE: Hybrid CNN -- Transformer with Regularization for medical image segmentation

Jan 26, 2022

Abstract: Current deep learning methods for medical image segmentation are limited in learning either global semantic information or local contextual information. To tackle these issues, a novel network named SegTransVAE is proposed in this paper. SegTransVAE is built upon an encoder-decoder architecture, combining a transformer with a variational autoencoder (VAE) branch that reconstructs the input images jointly with segmentation. To the best of our knowledge, this is the first method combining the success of CNN, transformer, and VAE. Evaluation on various recently introduced datasets shows that SegTransVAE outperforms previous methods in Dice Score and $95\%$-Hausdorff Distance while having comparable inference time to a simple CNN-based architecture network. The source code is available at: https://github.com/itruonghai/SegTransVAE.
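For readers unfamiliar with the Dice Score named in the abstract above, the following is a minimal sketch of how the metric is commonly computed for binary masks. It is not taken from the SegTransVAE code base: the function name `dice_score`, the flat 0/1 mask representation, and the convention that two empty masks score 1.0 are all assumptions made for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient between two binary masks given as flat 0/1 sequences.

    Hypothetical helper for illustration; not part of any published code.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of elements")
    # Count positions where both masks are foreground.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Sum of foreground counts in each mask.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Assumed convention: two empty masks count as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    pred = [0, 1, 1, 0, 1]
    target = [0, 1, 0, 0, 1]
    # Overlap is 2, foreground counts are 3 and 2, so Dice = 2*2 / (3+2).
    print(dice_score(pred, target))
```

A score of 1.0 means the predicted and reference masks coincide, and 0.0 means they share no foreground element. The Hausdorff-based metric in the abstract measures boundary distance instead and is not covered by this sketch.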
