Qiongxiu Li

APEX: Probing Neural Networks via Activation Perturbation

Feb 03, 2026

Abstract:Prior work on probing neural networks primarily relies on input-space analysis or parameter perturbation, both of which face fundamental limitations in accessing structural information encoded in intermediate representations. We introduce Activation Perturbation for EXploration (APEX), an inference-time probing paradigm that perturbs hidden activations while keeping both inputs and model parameters fixed. We theoretically show that activation perturbation induces a principled transition from sample-dependent to model-dependent behavior by suppressing input-specific signals and amplifying representation-level structure, and further establish that input perturbation corresponds to a constrained special case of this framework. Through representative case studies, we demonstrate the practical advantages of APEX. In the small-noise regime, APEX provides a lightweight and efficient measure of sample regularity that aligns with established metrics, while also distinguishing structured from randomly labeled models and revealing semantically coherent prediction transitions. In the large-noise regime, APEX exposes training-induced model-level biases, including a pronounced concentration of predictions on the target class in backdoored models. Overall, our results show that APEX offers an effective perspective for exploring and understanding neural networks beyond what is accessible from input space alone.
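The idea of perturbing hidden activations while holding inputs and parameters fixed can be sketched on a toy network. This is only an illustration under stated assumptions, not the APEX implementation: the two-layer model, the Gaussian noise, and the `flip_rate` statistic are all hypothetical choices made for the example.

```python
import random

def make_mlp(n_in, n_hidden, n_out, rng):
    """Random, fixed weights for a toy two-layer network (hypothetical stand-in model)."""
    w1 = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    w2 = [[rng.gauss(0, 1) for _ in range(n_hidden)] for _ in range(n_out)]
    return w1, w2

def predict(model, x, sigma=0.0, rng=None):
    """Forward pass; noise with std `sigma` is added to the hidden activations only."""
    w1, w2 = model
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
    if sigma > 0.0:
        # Assumption: Gaussian noise; the input x and the weights stay untouched.
        hidden = [h + rng.gauss(0, sigma) for h in hidden]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    return max(range(len(logits)), key=logits.__getitem__)

def flip_rate(model, x, sigma, trials, rng):
    """Fraction of noisy forward passes whose predicted class differs from the clean one."""
    clean = predict(model, x)
    flips = sum(predict(model, x, sigma, rng) != clean for _ in range(trials))
    return flips / trials

rng = random.Random(0)
model = make_mlp(n_in=4, n_hidden=16, n_out=3, rng=rng)
x = [0.5, -1.0, 0.3, 2.0]
for sigma in (0.01, 1.0, 100.0):
    print(sigma, flip_rate(model, x, sigma, trials=200, rng=rng))
```

Sweeping `sigma` from small to large values is one way to look at the two noise regimes the abstract distinguishes; which statistic the paper actually reports is not stated here.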

Semantic Leakage from Image Embeddings

Jan 30, 2026

Abstract:Image embeddings are generally assumed to pose limited privacy risk. We challenge this assumption by formalizing semantic leakage as the ability to recover semantic structures from compressed image embeddings. Surprisingly, we show that semantic leakage does not require exact reconstruction of the original image. Preserving local semantic neighborhoods under embedding alignment is sufficient to expose the intrinsic vulnerability of image embeddings. Crucially, this preserved neighborhood structure allows semantic information to propagate through a sequence of lossy mappings. Based on this conjecture, we propose Semantic Leakage from Image Embeddings (SLImE), a lightweight inference framework that reveals semantic information from standalone compressed image embeddings, incorporating a locally trained semantic retriever with off-the-shelf models, without training task-specific decoders. We thoroughly validate each step of the framework empirically, from aligned embeddings to retrieved tags, symbolic representations, and grammatical and coherent descriptions. We evaluate SLImE across a range of open and closed embedding models, including GEMINI, COHERE, NOMIC, and CLIP, and demonstrate consistent recovery of semantic information across diverse inference tasks. Our results reveal a fundamental vulnerability in image embeddings, whereby the preservation of semantic neighborhoods under alignment enables semantic leakage, highlighting challenges for privacy preservation.

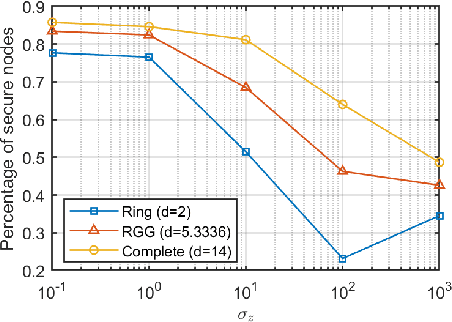

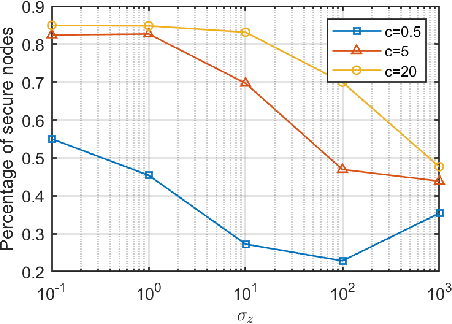

When Is Distributed Nonlinear Aggregation Private? Optimality and Information-Theoretical Bounds

Jan 19, 2026

Abstract:Nonlinear aggregation is central to modern distributed systems, yet its privacy behavior is far less understood than that of linear aggregation. Unlike linear aggregation, where mature mechanisms can often suppress information leakage, nonlinear operators impose inherent structural limits on what privacy guarantees are theoretically achievable when the aggregate must be computed exactly. This paper develops a unified information-theoretic framework to characterize privacy leakage in distributed nonlinear aggregation under a joint adversary that combines passive (honest-but-curious) corruption and eavesdropping over communication channels. We cover two broad classes of nonlinear aggregates: order-based operators (maximum/minimum and top-$K$) and robust aggregation (median/quantiles and trimmed mean). We first derive fundamental lower bounds on leakage that hold without sacrificing accuracy, thereby identifying the minimum unavoidable information revealed by the computation and the transcript. We then propose simple yet effective privacy-preserving distributed algorithms, and show that with appropriate randomized initialization and parameter choices, our proposed approaches can attain the derived optimal bounds for the considered operators. Extensive experiments validate the tightness of the bounds and demonstrate that network topology and key algorithmic parameters (including the stepsize) govern the observed leakage in line with the theoretical analysis, yielding actionable guidelines for privacy-preserving nonlinear aggregation.
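For readers unfamiliar with the two operator classes named above, the following centralized reference computes each aggregate on one list of values. It is a plain, non-private, non-distributed sketch for orientation only; the helper names `top_k` and `trimmed_mean` and the sample readings are assumptions made for the example, not part of the paper's algorithms.

```python
import statistics

def top_k(values, k):
    """Order-based operator: the k largest values, in descending order."""
    return sorted(values, reverse=True)[:k]

def trimmed_mean(values, trim):
    """Robust operator: mean after dropping the `trim` smallest and `trim` largest values."""
    if 2 * trim >= len(values):
        raise ValueError("trim removes every value")
    kept = sorted(values)[trim:len(values) - trim]
    return sum(kept) / len(kept)

# Hypothetical node readings; 100.0 plays the role of an outlier.
readings = [3.0, 1.0, 4.0, 1.5, 100.0]

print("max:", max(readings))
print("top-2:", top_k(readings, 2))
print("median:", statistics.median(readings))
print("trimmed mean:", trimmed_mean(readings, 1))
```

None of these outputs is a linear function of the inputs, which is the property that separates this setting from linear aggregates such as the sum or the mean.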

Do LLMs Really Memorize Personally Identifiable Information? Revisiting PII Leakage with a Cue-Controlled Memorization Framework

Jan 07, 2026

Abstract:Large Language Models (LLMs) have been reported to "leak" Personally Identifiable Information (PII), with successful PII reconstruction often interpreted as evidence of memorization. We propose a principled revision of memorization evaluation for LLMs, arguing that PII leakage should be evaluated under low lexical cue conditions, where target PII cannot be reconstructed through prompt-induced generalization or pattern completion. We formalize Cue-Resistant Memorization (CRM) as a cue-controlled evaluation framework and a necessary condition for valid memorization evaluation, explicitly conditioning on prompt-target overlap cues. Using CRM, we conduct a large-scale multilingual re-evaluation of PII leakage across 32 languages and multiple memorization paradigms. Revisiting reconstruction-based settings, including verbatim prefix-suffix completion and associative reconstruction, we find that their apparent effectiveness is driven primarily by direct surface-form cues rather than by true memorization. When such cues are controlled for, reconstruction success diminishes substantially. We further examine cue-free generation and membership inference, both of which exhibit extremely low true positive rates. Overall, our results suggest that previously reported PII leakage is better explained by cue-driven behavior than by genuine memorization, highlighting the importance of cue-controlled evaluation for reliably quantifying privacy-relevant memorization in LLMs.

Breaking Privacy in Federated Clustering: Perfect Input Reconstruction via Temporal Correlations

Nov 10, 2025

Abstract:Federated clustering allows multiple parties to discover patterns in distributed data without sharing raw samples. To reduce overhead, many protocols disclose intermediate centroids during training. While often treated as harmless for efficiency, whether such disclosure compromises privacy remains an open question. Prior analyses modeled the problem as a so-called Hidden Subset Sum Problem (HSSP) and argued that centroid release may be safe, since classical HSSP attacks fail to recover inputs. We revisit this question and uncover a new leakage mechanism: temporal regularities in $k$-means iterations create exploitable structure that enables perfect input reconstruction. Building on this insight, we propose Trajectory-Aware Reconstruction (TAR), an attack that combines temporal assignment information with algebraic analysis to recover exact original inputs. Our findings provide the first rigorous evidence, supported by a practical attack, that centroid disclosure in federated clustering significantly compromises privacy, exposing a fundamental tension between privacy and efficiency.
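To see why a sequence of disclosed centroids can carry more information than any single centroid, consider a deliberately simplified case. This is a toy illustration and not the TAR attack: it assumes the observer knows the cluster size and that exactly one point joined the cluster between two consecutive iterations, and the function names and data are hypothetical.

```python
def centroid(points):
    """Coordinate-wise mean of a list of equal-length points."""
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]

def recover_joined_point(c_before, n_before, c_after):
    """Solve (n + 1) * c_after = n * c_before + x for the single point x that joined."""
    n_after = n_before + 1
    return [n_after * a - n_before * b for a, b in zip(c_after, c_before)]

# Hypothetical cluster at iteration t, and one private point that joins at t + 1.
cluster = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0]]
private_point = [7.0, 10.0]

c_t = centroid(cluster)
c_next = centroid(cluster + [private_point])

print(recover_joined_point(c_t, len(cluster), c_next))
```

The general setting in the abstract is harder, since assignments are not given to the attacker in this form; the sketch only shows that differences between consecutive centroids are linear in the points that moved.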

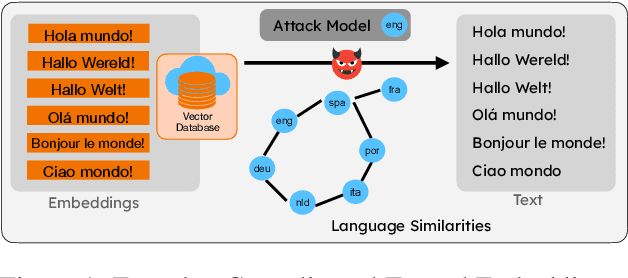

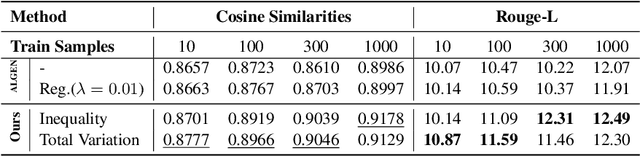

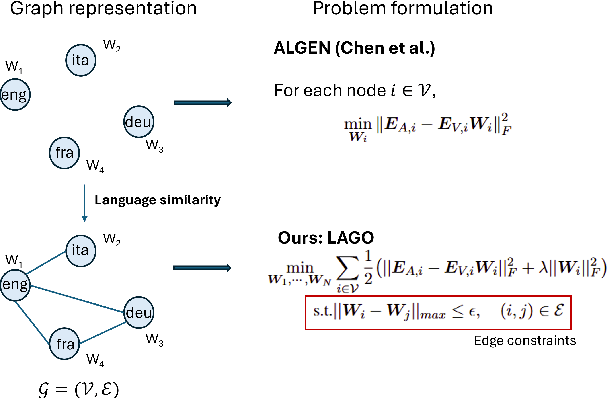

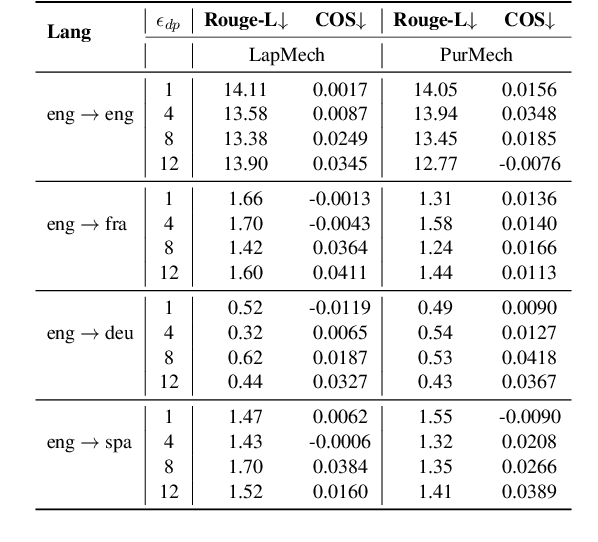

LAGO: Few-shot Crosslingual Embedding Inversion Attacks via Language Similarity-Aware Graph Optimization

May 21, 2025

Abstract:We propose LAGO (Language Similarity-Aware Graph Optimization), a novel approach for few-shot cross-lingual embedding inversion attacks, addressing critical privacy vulnerabilities in multilingual NLP systems. Unlike prior work on embedding inversion attacks that treats languages independently, LAGO explicitly models linguistic relationships through a graph-based constrained distributed optimization framework. By integrating syntactic and lexical similarity as edge constraints, our method enables collaborative parameter learning across related languages. Theoretically, we show this formulation generalizes prior approaches, such as ALGEN, which emerges as a special case when similarity constraints are relaxed. Our framework uniquely combines Frobenius-norm regularization with linear inequality or total variation constraints, ensuring robust alignment of cross-lingual embedding spaces even with extremely limited data (as few as 10 samples per language). Extensive experiments across multiple languages and embedding models demonstrate that LAGO substantially improves the transferability of attacks, with a 10-20% increase in ROUGE-L score over baselines. This work establishes language similarity as a critical factor in inversion attack transferability, urging renewed focus on language-aware privacy-preserving multilingual embeddings.

Shared Path: Unraveling Memorization in Multilingual LLMs through Language Similarities

May 21, 2025

Abstract:We present the first comprehensive study of Memorization in Multilingual Large Language Models (MLLMs), analyzing 95 languages using models across diverse model scales, architectures, and memorization definitions. As MLLMs are increasingly deployed, understanding their memorization behavior has become critical. Yet prior work has focused primarily on monolingual models, leaving multilingual memorization underexplored, despite the inherently long-tailed nature of training corpora. We find that the prevailing assumption, that memorization is highly correlated with training data availability, fails to fully explain memorization patterns in MLLMs. We hypothesize that treating languages in isolation - ignoring their similarities - obscures the true patterns of memorization. To address this, we propose a novel graph-based correlation metric that incorporates language similarity to analyze cross-lingual memorization. Our analysis reveals that among similar languages, those with fewer training tokens tend to exhibit higher memorization, a trend that only emerges when cross-lingual relationships are explicitly modeled. These findings underscore the importance of a language-aware perspective in evaluating and mitigating memorization vulnerabilities in MLLMs. This also constitutes empirical evidence that language similarity both explains Memorization in MLLMs and underpins Cross-lingual Transferability, with broad implications for multilingual NLP.

Optimal Privacy-Preserving Distributed Median Consensus

Mar 13, 2025

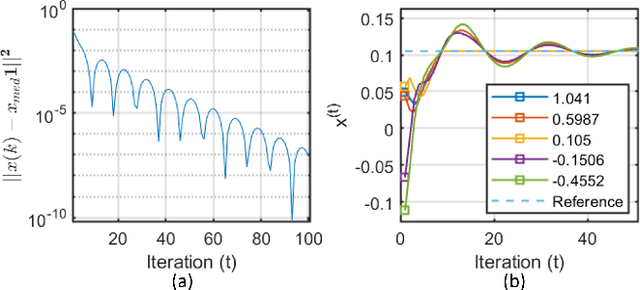

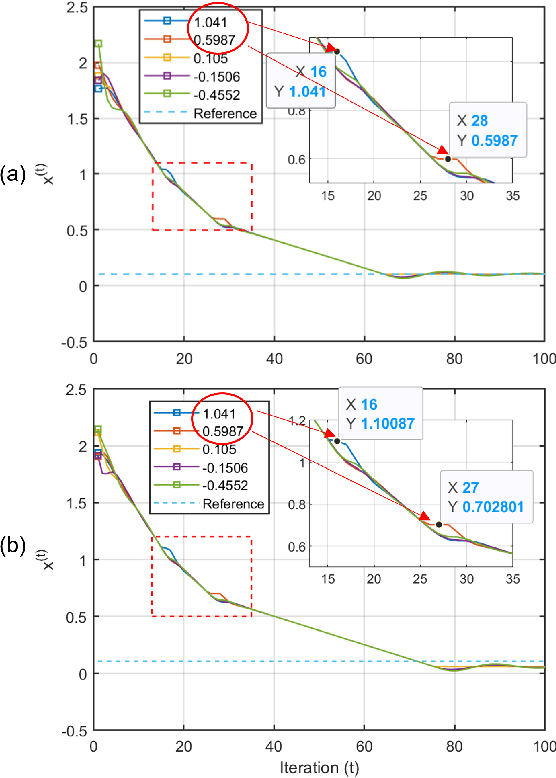

Abstract:Distributed median consensus has emerged as a critical paradigm in multi-agent systems due to the inherent robustness of the median against outliers and anomalies in measurement. Despite the sensitivity of the data involved, the development of privacy-preserving mechanisms for median consensus remains underexplored. In this work, we present the first rigorous analysis of privacy in distributed median consensus, focusing on an $L_1$-norm minimization framework. We establish necessary and sufficient conditions under which exact consensus and perfect privacy, defined as zero information leakage, can be achieved simultaneously. Our information-theoretic analysis provides provable guarantees against passive and eavesdropping adversaries, ensuring that private data remain concealed. Extensive numerical experiments validate our theoretical results, demonstrating the practical feasibility of achieving both accuracy and privacy in distributed median consensus.
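The link between the median and $L_1$-norm minimization mentioned above rests on a standard fact: the median of a set of scalars minimizes the sum of absolute deviations from them. The check below is a small centralized sketch of that fact only; it does not implement the distributed or privacy-preserving algorithm, and the data and the name `l1_cost` are made up for the example.

```python
import statistics

def l1_cost(x, data):
    """Sum of absolute deviations of `data` from the candidate value x."""
    return sum(abs(x - a) for a in data)

# Hypothetical measurements; 100.0 is an outlier.
data = [2.0, 9.0, 4.0, 100.0, 5.0]

med = statistics.median(data)
mean = sum(data) / len(data)

# Compare the L1 cost at the median, at the mean, and on a coarse grid of candidates.
grid = [i * 0.5 for i in range(0, 250)]
best_on_grid = min(grid, key=lambda x: l1_cost(x, data))

print("median:", med, "cost:", l1_cost(med, data))
print("mean:", mean, "cost:", l1_cost(mean, data))
print("grid minimizer:", best_on_grid)
```

The outlier pulls the mean far from the bulk of the data while leaving the median in place, which is the robustness property the abstract refers to.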

Byzantine-Resilient Federated Learning via Distributed Optimization

Mar 13, 2025

Abstract:Byzantine attacks present a critical challenge to Federated Learning (FL), where malicious participants can disrupt the training process, degrade model accuracy, and compromise system reliability. Traditional FL frameworks typically rely on aggregation-based protocols for model updates, leaving them vulnerable to sophisticated adversarial strategies. In this paper, we demonstrate that distributed optimization offers a principled and robust alternative to aggregation-centric methods. Specifically, we show that the Primal-Dual Method of Multipliers (PDMM) inherently mitigates Byzantine impacts by leveraging its fault-tolerant consensus mechanism. Through extensive experiments on three datasets (MNIST, FashionMNIST, and Olivetti), under various attack scenarios including bit-flipping and Gaussian noise injection, we validate the superior resilience of distributed optimization protocols. Compared to traditional aggregation-centric approaches, PDMM achieves higher model utility, faster convergence, and improved stability. Our results highlight the effectiveness of distributed optimization in defending against Byzantine threats, paving the way for more secure and resilient federated learning systems.

Trustworthy Machine Learning via Memorization and the Granular Long-Tail: A Survey on Interactions, Tradeoffs, and Beyond

Mar 10, 2025

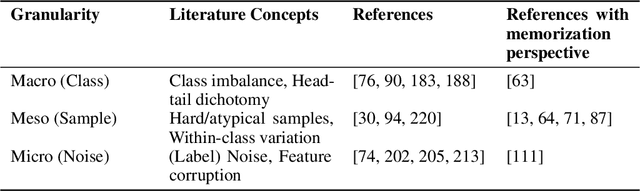

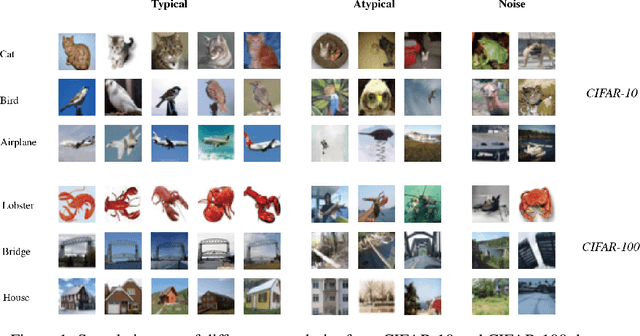

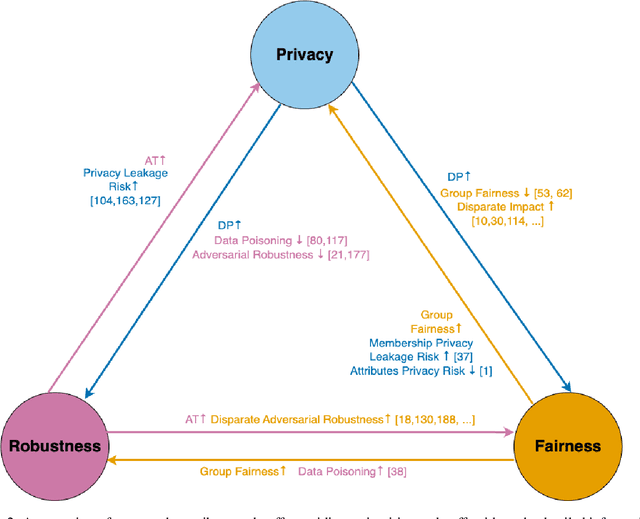

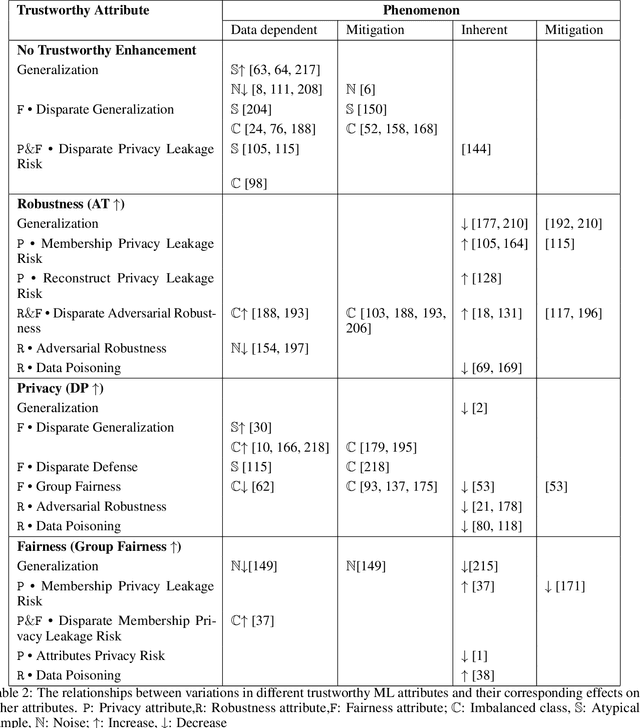

Abstract:The role of memorization in machine learning (ML) has garnered significant attention, particularly as modern models are empirically observed to memorize fragments of training data. Previous theoretical analyses, such as Feldman's seminal work, attribute memorization to the prevalence of long-tail distributions in training data, proving it unavoidable for samples that lie in the tail of the distribution. However, the intersection of memorization and trustworthy ML research reveals critical gaps. While prior research on memorization in trustworthy ML has focused solely on class imbalance, recent work has started to differentiate class-level rarity from atypical samples, which are valid and rare intra-class instances. However, a critical research gap remains: current frameworks conflate atypical samples with noisy and erroneous data, neglecting their divergent impacts on fairness, robustness, and privacy. In this work, we conduct a thorough survey of existing research and its findings on trustworthy ML and the role of memorization. Beyond this, we identify and highlight uncharted gaps and propose new avenues in this research direction. Since existing theoretical and empirical analyses lack the nuances to disentangle memorization's duality as both a necessity and a liability, we formalize a three-level long-tail granularity - class imbalance, atypicality, and noise - to reveal how current frameworks misapply these levels, perpetuating flawed solutions. By systematizing this granularity, we draw a roadmap for future research. Trustworthy ML must reconcile the nuanced trade-offs between memorizing atypicality for fairness assurance and suppressing noise for robustness and privacy guarantees. Redefining memorization via this granularity reshapes the theoretical foundation for trustworthy ML, and further affords an empirical prerequisite for models that align performance with societal trust.
