Qiang Zhai

MExD: An Expert-Infused Diffusion Model for Whole-Slide Image Classification

Mar 16, 2025

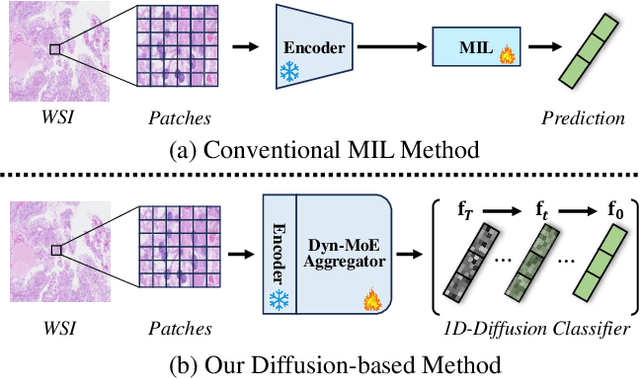

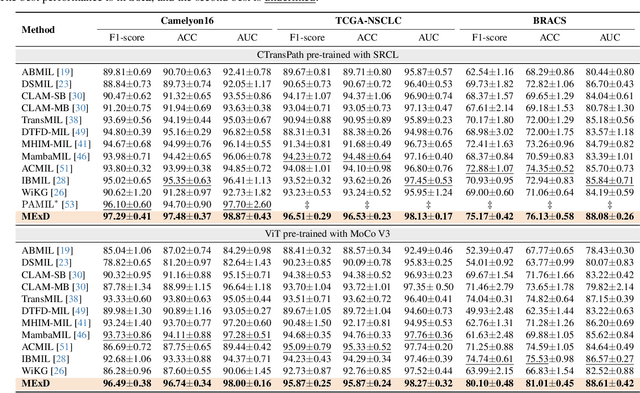

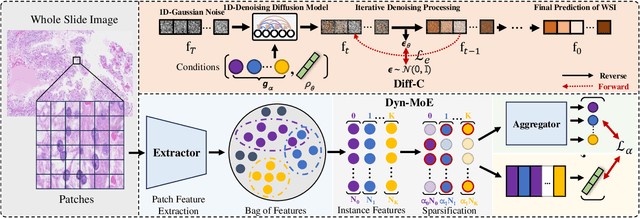

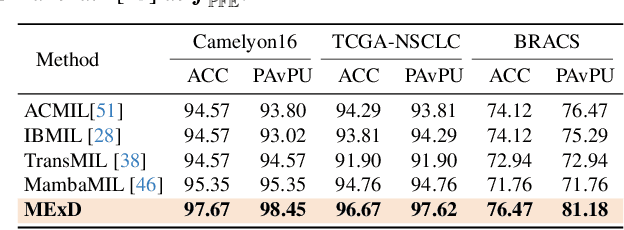

Abstract: Whole Slide Image (WSI) classification poses unique challenges due to the vast image size and numerous non-informative regions, which introduce noise and cause data imbalance during feature aggregation. To address these issues, we propose MExD, an Expert-Infused Diffusion Model that combines the strengths of a Mixture-of-Experts (MoE) mechanism with a diffusion model for enhanced classification. MExD balances the patch feature distribution through a novel MoE-based aggregator that selectively emphasizes relevant information, effectively filtering noise, addressing data imbalance, and extracting essential features. These features are then integrated via a diffusion-based generative process to directly yield the class distribution for the WSI. Moving beyond conventional discriminative approaches, MExD represents the first generative strategy in WSI classification, capturing fine-grained details for robust and precise results. MExD is validated on three widely used benchmarks (Camelyon16, TCGA-NSCLC, and BRACS), consistently achieving state-of-the-art performance in both binary and multi-class tasks.
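The idea of selectively emphasizing relevant patches can be illustrated with sparse top-k gating, the routing step commonly used in MoE layers. This is only a minimal sketch and not the MExD aggregator: the function name `topk_gated_aggregate`, the gate scores, and the choice of a softmax-weighted mean are all assumptions made for illustration.

```python
import math

def topk_gated_aggregate(features, gate_scores, k):
    """Aggregate patch features using only the k highest-scoring patches.

    features: list of equal-length feature vectors, one per patch (assumed given).
    gate_scores: one relevance score per patch (hypothetical gate output).
    """
    # Keep the indices of the k patches with the highest gate score.
    top = sorted(range(len(features)), key=lambda i: gate_scores[i], reverse=True)[:k]
    # Softmax over the selected scores only; low-scoring patches get zero weight.
    m = max(gate_scores[i] for i in top)
    weights = [math.exp(gate_scores[i] - m) for i in top]
    total = sum(weights)
    dim = len(features[0])
    # Weighted mean of the selected patch features, one dimension at a time.
    return [
        sum(w / total * features[i][d] for w, i in zip(weights, top))
        for d in range(dim)
    ]
```

With `k` much smaller than the number of patches, the many non-informative regions contribute nothing to the slide-level feature, which is one simple way to reduce their influence.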

Teach CLIP to Develop a Number Sense for Ordinal Regression

Aug 07, 2024

Abstract: Ordinal regression is a fundamental problem in computer vision, typically addressed with customised models trained for specific tasks. While pre-trained vision-language models (VLMs) have exhibited impressive performance on various vision tasks, their potential for ordinal regression has received less exploration. In this study, we first investigate CLIP's potential for ordinal regression, expecting that the model could generalise to different ordinal regression tasks and scenarios. Unfortunately, vanilla CLIP fails on this task, since current VLMs have a well-documented limitation in encapsulating compositional concepts such as number sense. We propose a simple yet effective method called NumCLIP to improve the quantitative understanding of VLMs. We decompose the problem of matching an image to exact number-specific text into coarse classification and fine prediction stages. We discretize each numerical bin and phrase it with a common language concept to better leverage the available pre-trained alignment in CLIP. To account for the inherently continuous nature of ordinal regression, we propose a novel fine-grained cross-modal ranking-based regularisation loss specifically designed to keep both semantic and ordinal alignment in CLIP's feature space. Experimental results on three general ordinal regression tasks demonstrate the effectiveness of NumCLIP, with 10% and 3.83% accuracy improvements on the historical image dating and image aesthetics assessment tasks, respectively. Code is publicly available at https://github.com/xmed-lab/NumCLIP.
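The coarse-to-fine split described above can be sketched roughly as follows. This is an illustrative assumption, not the NumCLIP implementation (see the linked repository for that): the bin phrases and boundaries in `BINS`, the helper `coarse_to_fine`, and the use of a probability-weighted bin centre as the fine prediction are all hypothetical, and the image-text similarity scores are taken as given rather than computed by CLIP.

```python
import math

# Hypothetical bins: (language phrase, lower bound, upper bound).
BINS = [
    ("a child", 0, 12),
    ("a teenager", 13, 19),
    ("a young adult", 20, 39),
    ("a middle-aged adult", 40, 64),
    ("an elderly person", 65, 100),
]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def coarse_to_fine(similarities):
    """similarities: one image-text similarity score per bin (assumed given)."""
    probs = softmax(similarities)
    # Coarse stage: pick the bin whose phrase matches the image best.
    coarse_idx = max(range(len(probs)), key=probs.__getitem__)
    # Fine stage (assumption): probability-weighted average of bin centres.
    centers = [(lo + hi) / 2 for _, lo, hi in BINS]
    fine_value = sum(p * c for p, c in zip(probs, centers))
    return BINS[coarse_idx][0], fine_value
```

Calling `coarse_to_fine([0.0, 0.0, 10.0, 0.0, 0.0])` selects the phrase "a young adult" and returns a fine value close to that bin's centre of 29.5.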

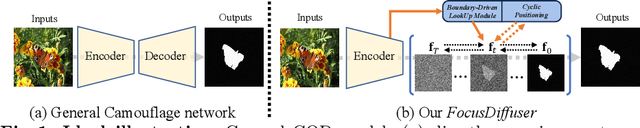

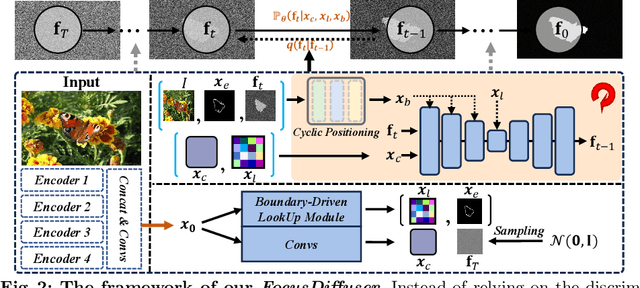

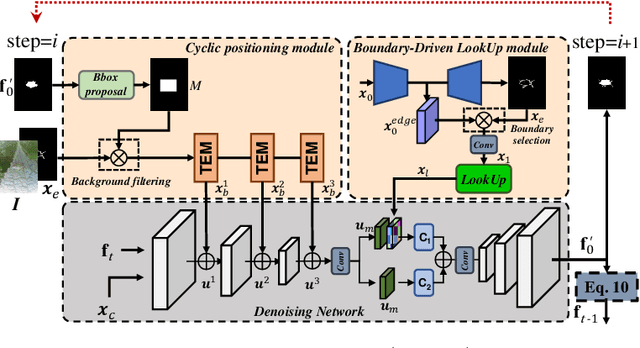

FocusDiffuser: Perceiving Local Disparities for Camouflaged Object Detection

Jul 18, 2024

Abstract: Detecting objects seamlessly blended into their surroundings is a complex task for both human cognition and advanced artificial intelligence algorithms. Currently, most methods for detecting camouflaged objects rely on discriminative models with various unique designs. However, it has been observed that generative models, such as Stable Diffusion, possess stronger capabilities for understanding various objects in complex environments, yet their potential for the cognition and detection of camouflaged objects has not been extensively explored. In this study, we present a novel denoising diffusion model, namely FocusDiffuser, to investigate how generative models can enhance the detection and interpretation of camouflaged objects. We believe that the secret to spotting camouflaged objects lies in catching subtle differences in detail. Consequently, our FocusDiffuser integrates specialized enhancements, notably the Boundary-Driven LookUp (BDLU) module and Cyclic Positioning (CP) module, to elevate standard diffusion models, significantly boosting their detail-oriented analytical capabilities. Our experiments demonstrate that FocusDiffuser, from a generative perspective, effectively addresses the challenge of camouflaged object detection, surpassing leading models on benchmarks like CAMO, COD10K and NC4K.

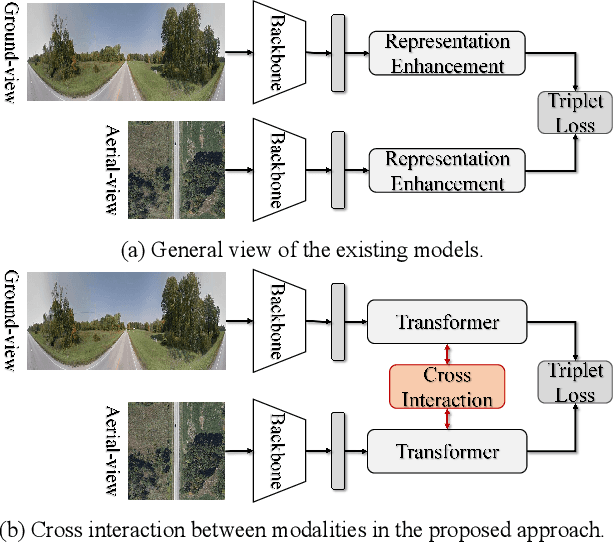

Mutual Generative Transformer Learning for Cross-view Geo-localization

Mar 17, 2022

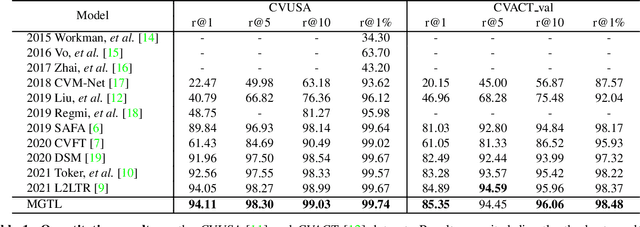

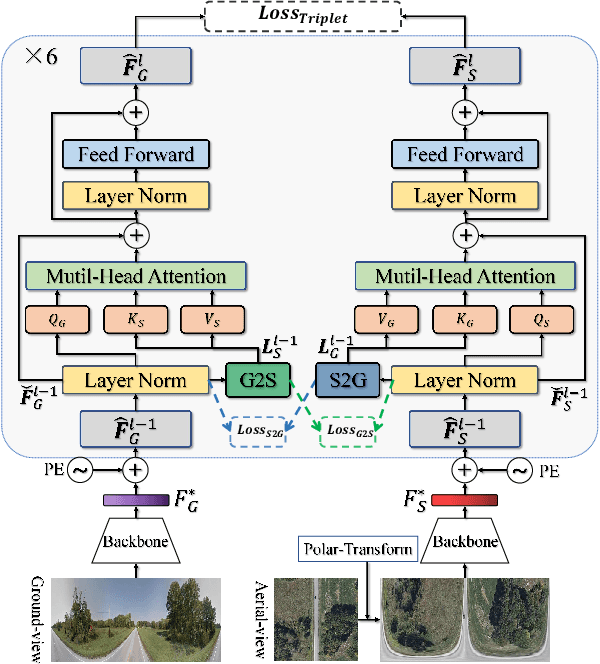

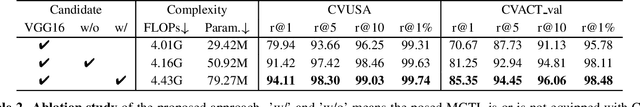

Abstract: Cross-view geo-localization (CVGL), which aims to estimate the geographical location of a ground-level camera by matching against a large collection of geo-tagged aerial (e.g., satellite) images, remains extremely challenging due to the drastic appearance differences across views. Existing methods mainly employ Siamese-like CNNs to extract global descriptors without examining the mutual benefits between the two modes. In this paper, we present a novel approach that combines cross-modal knowledge generation with a transformer, namely mutual generative transformer learning (MGTL), for CVGL. Specifically, MGTL develops two separate generative modules--one for aerial-like knowledge generation from ground-level semantic information and vice versa--and fully exploits their mutual benefits through the attention mechanism. Experiments on challenging public benchmarks, CVACT and CVUSA, demonstrate the effectiveness of the proposed method compared to the existing state-of-the-art models.

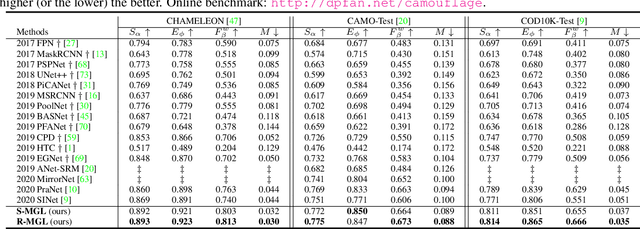

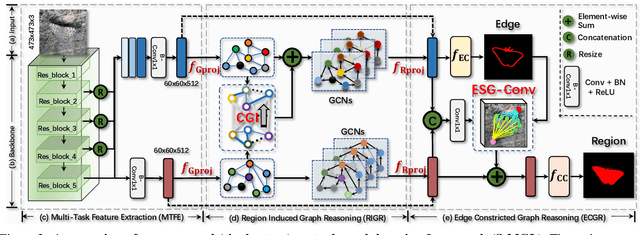

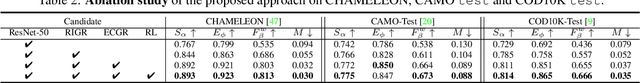

Mutual Graph Learning for Camouflaged Object Detection

Apr 03, 2021

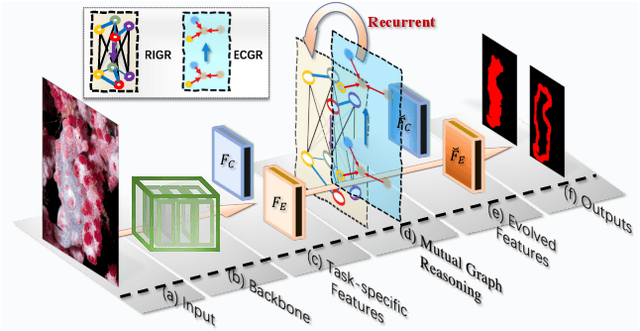

Abstract: Automatically detecting/segmenting object(s) that blend in with their surroundings is difficult for current models. A major challenge is that the intrinsic similarities between such foreground objects and background surroundings make the features extracted by deep models indistinguishable. To overcome this challenge, an ideal model should be able to seek valuable, extra clues from the given scene and incorporate them into a joint learning framework for representation co-enhancement. With this inspiration, we design a novel Mutual Graph Learning (MGL) model, which generalizes the idea of conventional mutual learning from regular grids to the graph domain. Specifically, MGL decouples an image into two task-specific feature maps -- one for roughly locating the target and the other for accurately capturing its boundary details -- and fully exploits the mutual benefits by recurrently reasoning about their high-order relations through graphs. Importantly, in contrast to most mutual learning approaches that use a shared function to model all between-task interactions, MGL is equipped with typed functions for handling different complementary relations to maximize information interactions. Experiments on challenging datasets, including CHAMELEON, CAMO and COD10K, demonstrate the effectiveness of our MGL with superior performance to existing state-of-the-art methods.

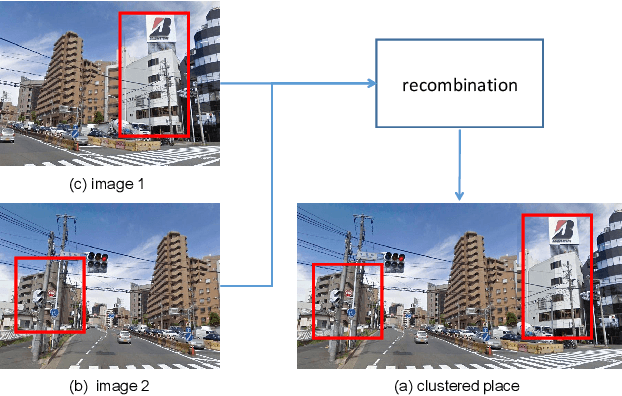

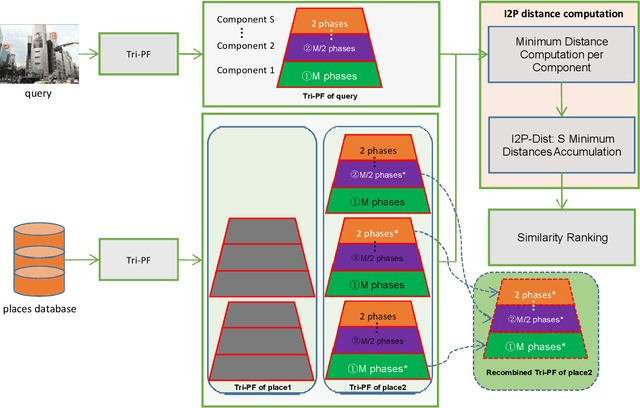

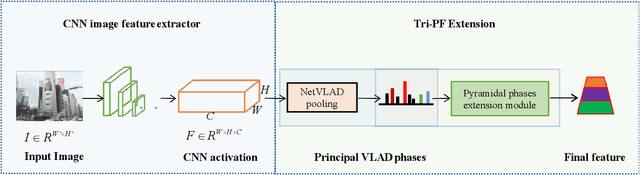

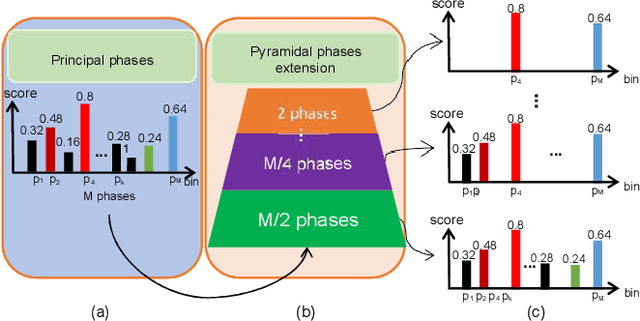

Place Clustering-based Feature Recombination for Visual Place Recognition

Jul 26, 2019

Abstract: Visual place recognition is an important problem in both computer vision and robotics, and image content changes caused by occlusion and viewpoint changes in natural scenes still pose challenges to place recognition. This paper addresses the problem by proposing a novel feature recombination method based on place clustering. Firstly, a general pyramid extension scheme, called Pyramid Principal Phases Feature (Tri-PF), is extracted based on the histogram feature. Further, to maximize the role of the new feature, we evaluate the similarity by clustering images with a certain threshold as a 'place'. Extensive experiments have been conducted to verify the effectiveness of the proposed approach, and the results demonstrate that our method achieves consistently better performance than the state of the art on two standard place recognition benchmarks.
