Pragna Mannam

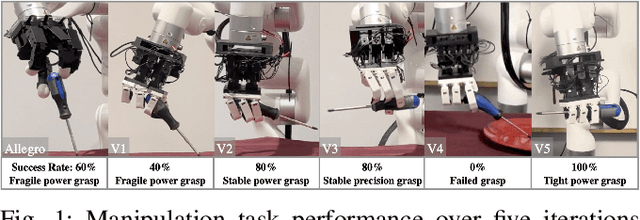

Design and Control Co-Optimization for Automated Design Iteration of Dexterous Anthropomorphic Soft Robotic Hands

Mar 15, 2024

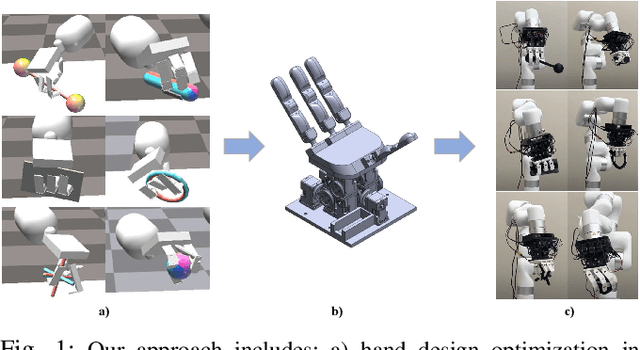

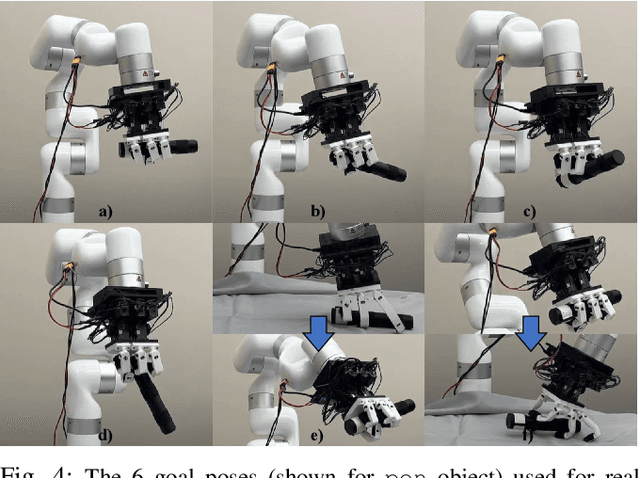

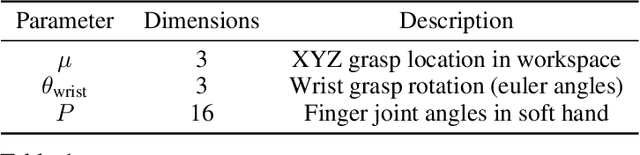

Abstract: We automate soft robotic hand design iteration by co-optimizing design and control policy for dexterous manipulation skills in simulation. Our design iteration pipeline combines genetic algorithms and policy transfer to learn control policies for nearly 400 hand designs, testing grasp quality under external force disturbances. We validate the optimized designs in the real world through teleoperation of pickup and reorient manipulation tasks. Our real-world evaluation, spanning over 900 teleoperated tasks, shows that the trend in design performance in simulation resembles that in the real world. Furthermore, we show that hand designs optimized with our approach outperform existing soft robot hands from prior work in the real world. The results highlight the usefulness of simulation in guiding parameter choices for anthropomorphic soft robotic hand systems, and the effectiveness of our automated design iteration approach despite the sim-to-real gap.
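
The abstract does not detail the genetic algorithm, so the following is only a minimal sketch of the general technique, not the authors' pipeline. It evolves a population of hypothetical design-parameter vectors (normalized to [0, 1]) using tournament selection, uniform crossover, Gaussian mutation, and elitism. The `grasp_score` function is a made-up placeholder standing in for simulated grasp quality under force disturbances, and the policy-transfer step is omitted entirely.

```python
import random

NUM_PARAMS = 3  # hypothetical design parameters, each normalized to [0, 1]


def grasp_score(design):
    """Placeholder fitness (assumption): a smooth function peaking at 0.6.
    It stands in for simulated grasp quality under force disturbances."""
    return -sum((x - 0.6) ** 2 for x in design)


def mutate(design, rng, sigma=0.05):
    # Gaussian perturbation, clipped back into the [0, 1] design range.
    return [min(1.0, max(0.0, x + rng.gauss(0.0, sigma))) for x in design]


def crossover(a, b, rng):
    # Uniform crossover: each parameter is copied from a randomly chosen parent.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def tournament(pop, scores, rng, k=3):
    # Pick k random designs and return the best-scoring one.
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: scores[i])]


def evolve(pop_size=20, generations=20, seed=0):
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(NUM_PARAMS)] for _ in range(pop_size)]
    best_history = []  # best score seen in each generation
    for _ in range(generations):
        scores = [grasp_score(d) for d in pop]
        best_i = max(range(pop_size), key=lambda i: scores[i])
        best_history.append(scores[best_i])
        children = [pop[best_i]]  # elitism: carry the best design forward unchanged
        while len(children) < pop_size:
            parent_a = tournament(pop, scores, rng)
            parent_b = tournament(pop, scores, rng)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng))
        pop = children
    scores = [grasp_score(d) for d in pop]
    best_i = max(range(pop_size), key=lambda i: scores[i])
    return pop[best_i], scores[best_i], best_history


if __name__ == "__main__":
    design, score, history = evolve()
    print("best design:", [round(x, 3) for x in design], "score:", round(score, 4))
```

Elitism guarantees the best score never decreases from one generation to the next. In the setting the abstract describes, each fitness evaluation involves learning a control policy for the candidate hand, which is far more expensive than this placeholder.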

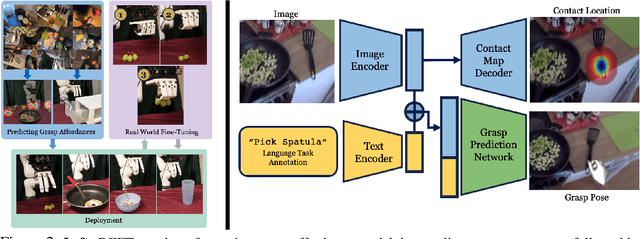

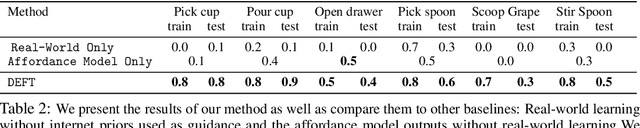

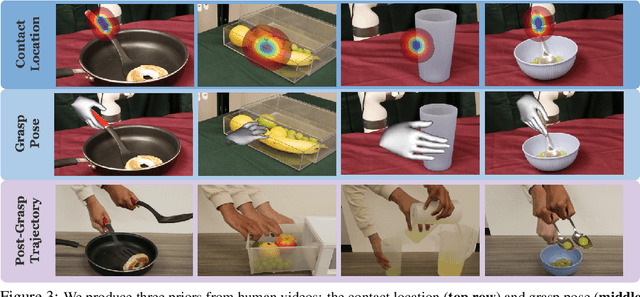

DEFT: Dexterous Fine-Tuning for Real-World Hand Policies

Oct 30, 2023

Abstract: Dexterity is often seen as a cornerstone of complex manipulation. Humans are able to perform a host of skills with their hands, from making food to operating tools. In this paper, we investigate the challenges of acquiring such skills, especially for soft, deformable objects and for complex, relatively long-horizon tasks. Learning such behaviors from scratch, however, can be data-inefficient. To circumvent this, we propose a novel approach, DEFT (DExterous Fine-Tuning for Hand Policies), that leverages human-driven priors, which are executed directly in the real world. To improve upon these priors, DEFT uses an efficient online optimization procedure. By integrating human-based learning and online fine-tuning with a soft robotic hand, DEFT demonstrates success across various tasks, establishing a robust, data-efficient pathway toward general dexterous manipulation. Please see our website at https://dexterous-finetuning.github.io for video results.

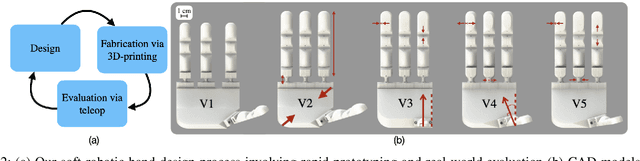

A Framework for Designing Anthropomorphic Soft Hands through Interaction

Jun 07, 2023

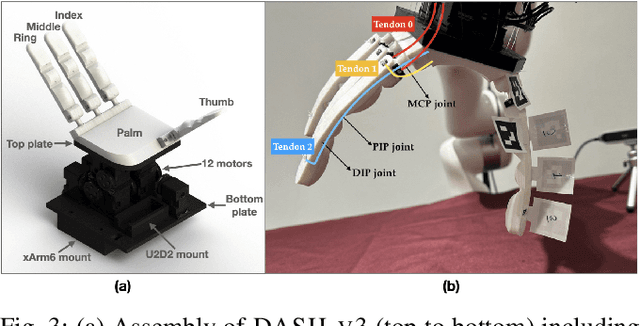

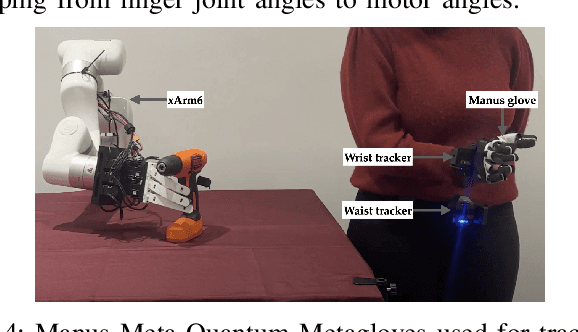

Abstract: Modeling and simulating soft robot hands can aid in design iteration for complex and high degree-of-freedom (DoF) morphologies. This can be further supplemented by iterating on the design based on its performance in real-world manipulation tasks. However, this requires a framework that allows us to iterate quickly at low cost. In this paper, we present a framework that leverages rapid prototyping of the hand using 3D-printing and uses teleoperation to evaluate the hand in real-world manipulation tasks. Using this framework, we design a 3D-printed 16-DoF dexterous anthropomorphic soft hand (DASH) and iteratively improve its design over three iterations. Rapid prototyping techniques such as 3D-printing allow us to directly evaluate the fabricated hand without modeling it in simulation. We show that the design improves with each iteration, as measured by the hand's performance in 30 real-world teleoperated manipulation tasks. Across more than 600 demonstrations, the final version of DASH solves 16 of the 30 tasks, compared with only 7 for Allegro, a popular commercially available rigid hand. We open-source our CAD models and the teleoperated dataset for further study; both are available on our website (https://dash-through-interaction.github.io).

DeltaZ: An Accessible Compliant Delta Robot Manipulator for Research and Education

Jul 02, 2022

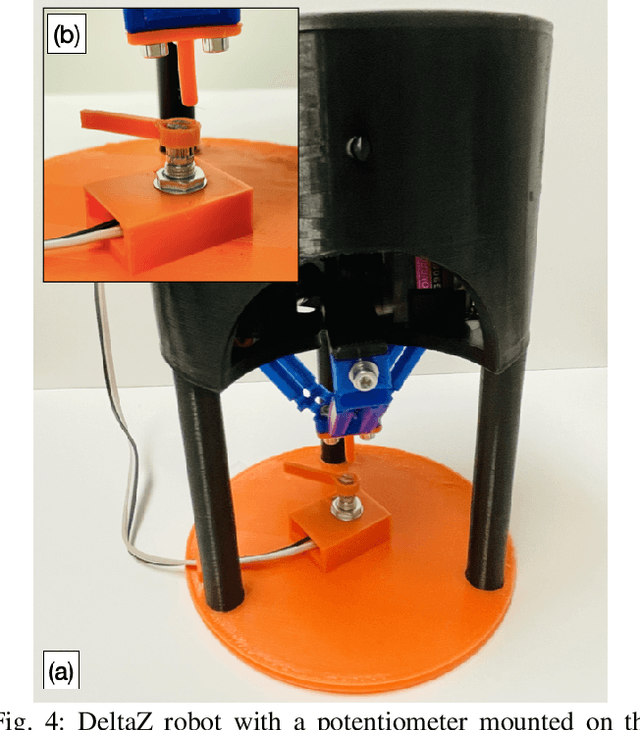

Abstract: This paper presents the DeltaZ robot, a centimeter-scale, low-cost, delta-style robot that allows for a broad range of capabilities and robust functionalities. DeltaZ can be 3D-printed from soft and rigid materials, which makes it easy to assemble and maintain and lowers the barrier to use. The robot's functionality stems from its three translational degrees of freedom and a closed-form kinematic solution, which make manipulation problems more intuitive than with other manipulators. Moreover, the low cost of the robot presents an opportunity to democratize manipulators in research settings. We also describe how the robot can be used as a reinforcement learning benchmark. Open-source 3D-printable designs and code are available to the public.
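
The abstract does not give DeltaZ's kinematic equations or dimensions. To illustrate what a closed-form solution for a delta-style robot can look like, here is a minimal sketch of inverse kinematics for a generic linear delta, assuming three vertical actuators spaced 120 degrees apart, each driving a carriage connected to the effector by a rigid link of length `ARM_LENGTH`. This geometry and all dimensions are hypothetical and may not match DeltaZ.

```python
import math

# Hypothetical geometry, not DeltaZ's published dimensions.
ARM_LENGTH = 0.10    # link length between carriage and effector (m)
TOWER_RADIUS = 0.05  # horizontal distance from the center axis to each actuator axis (m),
                     # with the effector's own joint offset already subtracted
TOWER_ANGLES_DEG = (90.0, 210.0, 330.0)  # actuators spaced 120 degrees apart
TOWERS = [
    (TOWER_RADIUS * math.cos(math.radians(a)), TOWER_RADIUS * math.sin(math.radians(a)))
    for a in TOWER_ANGLES_DEG
]


def inverse_kinematics(x, y, z):
    """Closed-form carriage heights that place the effector at (x, y, z)."""
    heights = []
    for tx, ty in TOWERS:
        dx, dy = x - tx, y - ty
        vertical_sq = ARM_LENGTH ** 2 - dx * dx - dy * dy
        if vertical_sq < 0:
            raise ValueError("target is out of reach for this link length")
        # The link is the hypotenuse of a right triangle, so the carriage sits
        # above the target by the triangle's remaining vertical leg.
        heights.append(z + math.sqrt(vertical_sq))
    return heights


if __name__ == "__main__":
    print(inverse_kinematics(0.0, 0.0, 0.0))
    print(inverse_kinematics(0.01, -0.02, 0.03))
```

Each actuator's command depends only on the target position through one square root, with no iterative solver. The forward direction for this layout also has a closed form (intersecting three spheres of radius `ARM_LENGTH`).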

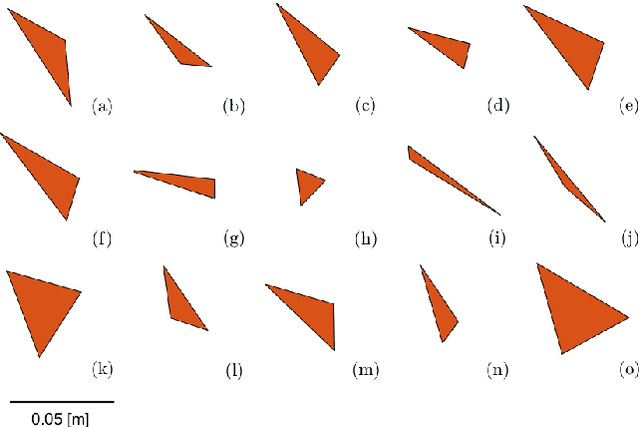

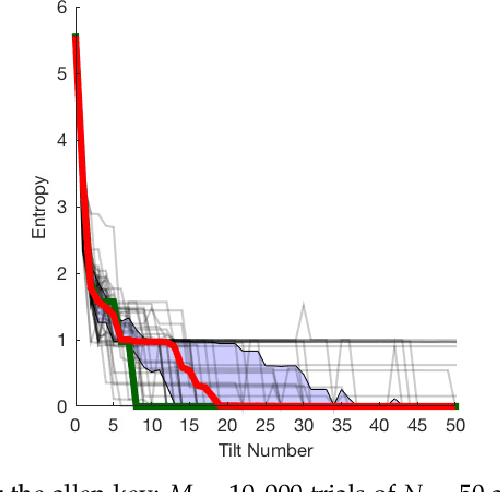

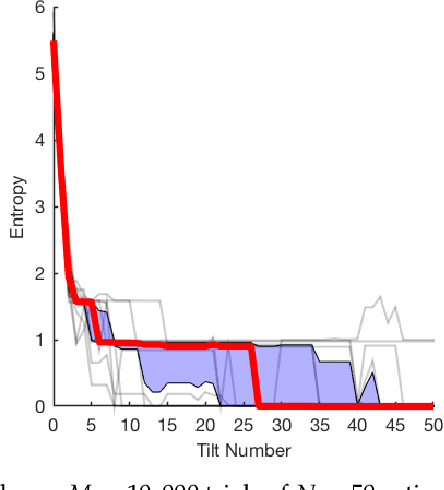

Sensorless Pose Determination using Randomized Action Sequences

Dec 04, 2018

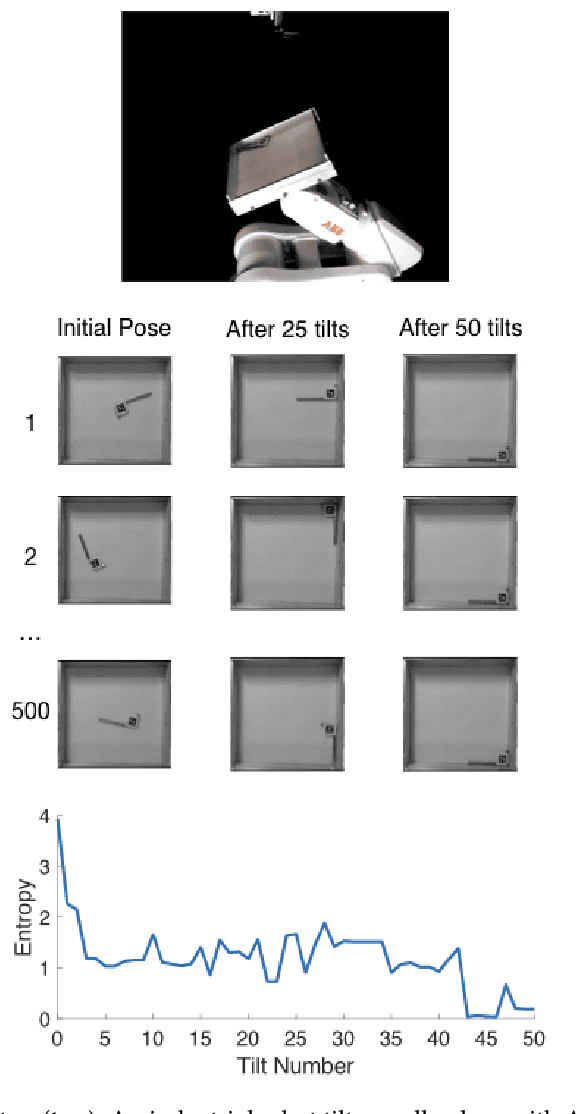

Abstract: This paper is a study of 2D manipulation without sensing and planning, exploring the effects of unplanned randomized action sequences on 2D object pose uncertainty. Our approach follows Erdmann and Mason's work on sensorless reorienting of an object into a completely determined pose, regardless of its initial pose. While Erdmann and Mason proposed a method using Newtonian mechanics, this paper shows that under some circumstances, a long enough sequence of random actions will also converge toward a determined final pose of the object. We verify this through several simulated and real-robot experiments in which randomized action sequences reduce the entropy of the object pose distribution. The effects of varying object shapes, action sequences, and surface friction are also explored.
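
As an illustration of how entropy of the pose distribution can fall under random, unplanned actions, here is a minimal sketch built on a toy model that is an assumption, not the paper's setup: the object's orientation is discretized into 8 bins, and each action shifts it one bin left or right, clamping at the ends (loosely like pushing against a wall). The same randomly chosen action is applied regardless of the unknown initial pose, and the Shannon entropy of the resulting distribution is tracked.

```python
import math
import random
from collections import Counter

NUM_POSES = 8  # hypothetical: object orientation discretized into 8 bins


def entropy_bits(dist):
    """Shannon entropy (bits) of a distribution given as {pose: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def apply_action(pose, action):
    # Toy action model (assumption): shift one bin and clamp at the boundary.
    if action == "left":
        return max(pose - 1, 0)
    return min(pose + 1, NUM_POSES - 1)


def run(sequence_length, seed=0):
    rng = random.Random(seed)
    # Uniform belief: the initial pose is completely unknown.
    dist = {p: 1.0 / NUM_POSES for p in range(NUM_POSES)}
    history = [entropy_bits(dist)]
    for _ in range(sequence_length):
        action = rng.choice(["left", "right"])  # unplanned, no sensing
        new_dist = Counter()
        for pose, prob in dist.items():
            new_dist[apply_action(pose, action)] += prob
        dist = dict(new_dist)
        history.append(entropy_bits(dist))
    return dist, history


if __name__ == "__main__":
    final_dist, history = run(200)
    print("initial entropy:", history[0], "final entropy:", history[-1])
```

Because every action is a deterministic function of the pose, the entropy can never increase; randomness only chooses which clamping map is applied next. Each clamp at a boundary merges poses, so a long enough sequence collapses the distribution to a single pose in this toy model.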