Pingping Liu

UrbanMoE: A Sparse Multi-Modal Mixture-of-Experts Framework for Multi-Task Urban Region Profiling

Jan 30, 2026

Abstract:Urban region profiling, the task of characterizing geographical areas, is crucial for urban planning and resource allocation. However, existing research in this domain faces two significant limitations. First, most methods are confined to single-task prediction, failing to capture the interconnected, multi-faceted nature of urban environments where numerous indicators are deeply correlated. Second, the field lacks a standardized experimental benchmark, which severely impedes fair comparison and reproducible progress. To address these challenges, we first establish a comprehensive benchmark for multi-task urban region profiling, featuring multi-modal features and a diverse set of strong baselines to ensure a fair and rigorous evaluation environment. Concurrently, we propose UrbanMoE, the first sparse multi-modal, multi-expert framework specifically architected to solve the multi-task challenge. Leveraging a sparse Mixture-of-Experts architecture, it dynamically routes multi-modal features to specialized sub-networks, enabling the simultaneous prediction of diverse urban indicators. We conduct extensive experiments on three real-world datasets within our benchmark, where UrbanMoE consistently demonstrates superior performance over all baselines. Further in-depth analysis validates the efficacy and efficiency of our approach, setting a new state-of-the-art and providing the community with a valuable tool for future research in urban analytics.
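The routing idea mentioned in the abstract above can be illustrated with a minimal, generic top-k Mixture-of-Experts sketch. This is not UrbanMoE's actual architecture, which the abstract does not specify; the function names, the toy experts, and the gate weights below are all hypothetical and chosen only to show how a sparse gate selects a few experts and mixes their outputs.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized over the selected experts so they sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def sparse_moe(features, gate_weights, experts, k=2):
    """Evaluate only the k selected experts and mix their outputs."""
    # Gate logits come from a linear projection of the input features.
    gate_logits = [sum(w * x for w, x in zip(row, features)) for row in gate_weights]
    routes = top_k_route(gate_logits, k)
    output = sum(weight * experts[idx](features) for idx, weight in routes)
    return output, routes

# Hypothetical toy experts and gate; real experts would be learned sub-networks.
experts = [
    lambda x: sum(x),
    lambda x: max(x),
    lambda x: min(x),
    lambda x: x[0] - x[1],
]
gate_weights = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [-1.0, -1.0, -1.0],
]
features = [2.0, 1.0, -1.0]
prediction, routes = sparse_moe(features, gate_weights, experts, k=2)
```

Only two of the four experts run for this input, which is the source of the efficiency that sparse routing is usually credited with. In a multi-task setting, one common design (an assumption here, not a claim about the paper) is to give each task its own gate over a shared pool of experts.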

Holistic Capability Preservation: Towards Compact Yet Comprehensive Reasoning Models

Apr 09, 2025

Abstract:This technical report presents Ring-Lite-Distill, a lightweight reasoning model derived from our open-source Mixture-of-Experts (MoE) Large Language Model (LLM), Ling-Lite. This study demonstrates that, through meticulous high-quality data curation and ingenious training paradigms, the compact MoE model Ling-Lite can be further trained to achieve exceptional reasoning capabilities while maintaining its parameter-efficient architecture with only 2.75 billion activated parameters, establishing an efficient lightweight reasoning architecture. In constructing this model, we have not merely focused on enhancing advanced reasoning capabilities, exemplified by high-difficulty mathematical problem solving, but rather aimed to develop a reasoning model with more comprehensive competency coverage. Our approach ensures coverage across reasoning tasks of varying difficulty levels while preserving generic capabilities, such as instruction following, tool use, and knowledge retention. We show that Ring-Lite-Distill's reasoning ability reaches a level comparable to DeepSeek-R1-Distill-Qwen-7B, while its general capabilities significantly surpass those of DeepSeek-R1-Distill-Qwen-7B. The models are accessible at https://huggingface.co/inclusionAI.

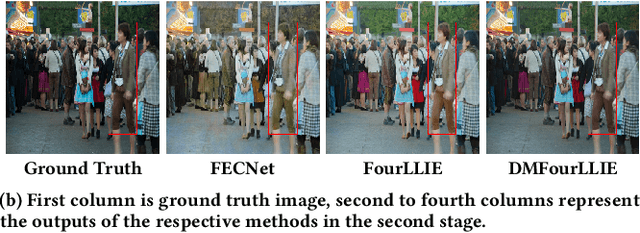

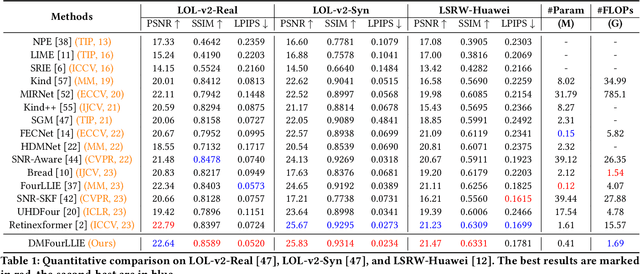

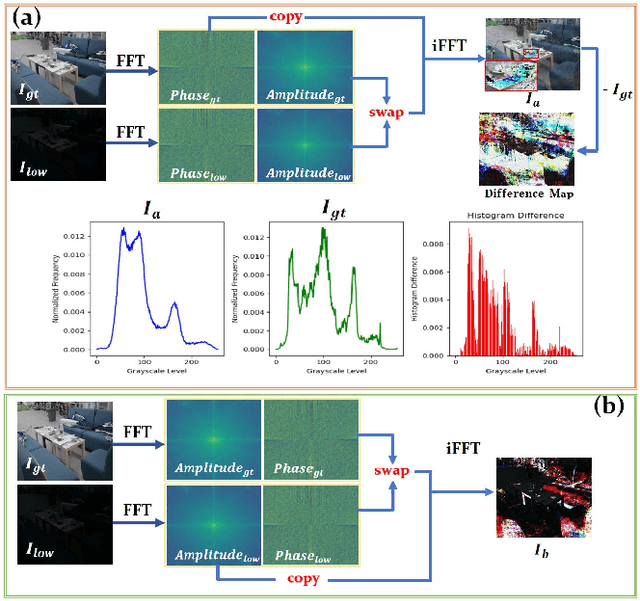

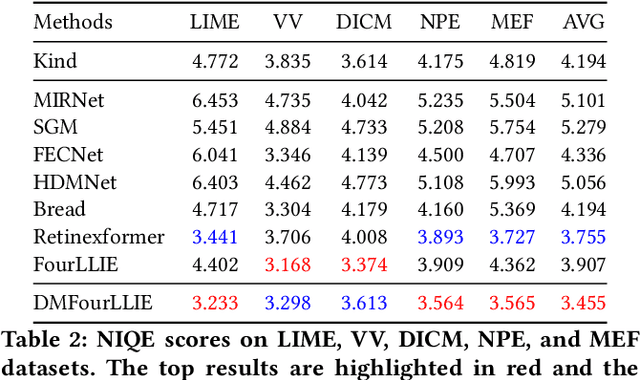

DMFourLLIE: Dual-Stage and Multi-Branch Fourier Network for Low-Light Image Enhancement

Dec 01, 2024

Abstract:In the Fourier frequency domain, luminance information is primarily encoded in the amplitude component, while spatial structure information is largely contained in the phase component. Existing low-light image enhancement techniques based on the Fourier transform have mainly focused on amplifying the amplitude component and simply replicating the phase component, an approach that often leads to color distortions and noise issues. In this paper, we propose a Dual-Stage Multi-Branch Fourier Low-Light Image Enhancement (DMFourLLIE) framework to address these limitations by emphasizing the phase component's role in preserving image structure and detail. The first stage integrates structural information from infrared images to enhance the phase component and employs a luminance-attention mechanism in the luminance-chrominance color space to precisely control amplitude enhancement. The second stage combines multi-scale and Fourier convolutional branches for robust image reconstruction, effectively recovering spatial structures and textures. This dual-branch joint optimization process ensures that complex image information is retained, overcoming the limitations of previous methods that neglected the interplay between amplitude and phase. Extensive experiments across multiple datasets demonstrate that DMFourLLIE outperforms current state-of-the-art methods in low-light image enhancement. Our code is available at https://github.com/bywlzts/DMFourLLIE.
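The amplitude/phase split described in this abstract can be made concrete with a small sketch. It shows only the simple baseline the abstract criticizes (scale the amplitude, copy the phase), applied to a one-dimensional row of pixel intensities with a hand-written DFT; it is not the DMFourLLIE method, and the function names and sample values are hypothetical.

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
        for f in range(n)
    ]

def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[f] * cmath.exp(2j * cmath.pi * f * t / n) for f in range(n)) / n
        for t in range(n)
    ]

def scale_amplitude(signal, gain):
    """Multiply every amplitude by `gain` while leaving every phase unchanged."""
    enhanced = []
    for coeff in dft(signal):
        amplitude, phase = abs(coeff), cmath.phase(coeff)
        enhanced.append(cmath.rect(gain * amplitude, phase))
    # The input is real, so the imaginary parts are only rounding error.
    return [value.real for value in idft(enhanced)]

# Hypothetical row of dark pixel intensities in [0, 1].
dark_row = [0.05, 0.10, 0.20, 0.10, 0.05, 0.02, 0.02, 0.05]
brightened = scale_amplitude(dark_row, gain=2.0)
```

Because the transform is linear, a uniform amplitude gain with untouched phase simply multiplies every pixel by the gain: the row gets brighter while its shape, carried by the phase, is preserved. Any noise in the dark input is amplified by the same factor, which is consistent with the noise issues the abstract attributes to amplitude-only approaches.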
