Peter L. Choyke

Location-based Radiology Report-Guided Semi-supervised Learning for Prostate Cancer Detection

Jun 18, 2024

Abstract: Prostate cancer is one of the most prevalent malignancies in the world. While deep learning has the potential to further improve computer-aided prostate cancer detection on MRI, its efficacy hinges on the exhaustive curation of manually annotated images. We propose a novel methodology of semi-supervised learning (SSL) guided by automatically extracted clinical information, specifically the lesion locations in radiology reports, allowing for use of unannotated images to reduce the annotation burden. By leveraging lesion locations, we refined pseudo labels, which were then used to train our location-based SSL model. We show that our SSL method can improve prostate lesion detection by utilizing unannotated images, with more substantial impacts being observed when larger proportions of unannotated images are used.
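The abstract does not say how the pseudo labels were refined, so the following is only a hedged illustration of the general idea: discard pseudo-label candidates whose anatomical zone is not mentioned in the radiology report. The candidate dictionaries, the zone names, the `min_score` threshold, and the function `refine_pseudo_labels` are all hypothetical and are not taken from the paper.

```python
def refine_pseudo_labels(candidates, report_locations, min_score=0.5):
    """Keep candidates whose zone is named in the report and whose score passes.

    candidates: list of dicts with hypothetical keys "zone" and "score".
    report_locations: zone names extracted from the radiology report.
    min_score: assumed confidence threshold, not a value from the paper.
    """
    allowed = {loc.lower() for loc in report_locations}
    return [
        c for c in candidates
        if c["zone"].lower() in allowed and c["score"] >= min_score
    ]


# Toy model predictions on an unannotated scan (made-up values).
candidates = [
    {"zone": "peripheral zone", "score": 0.82},
    {"zone": "transition zone", "score": 0.91},
    {"zone": "Peripheral Zone", "score": 0.30},
]
# Zones that the (hypothetical) report mentions.
report_locations = ["Peripheral zone"]

refined = refine_pseudo_labels(candidates, report_locations)
print(refined)
```

In this toy case only the first candidate survives: the second lies in a zone the report does not mention, and the third falls below the assumed score threshold.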

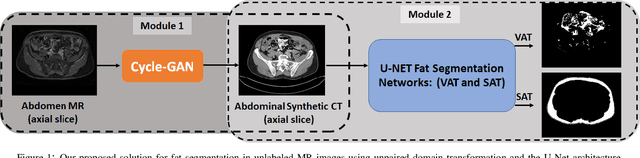

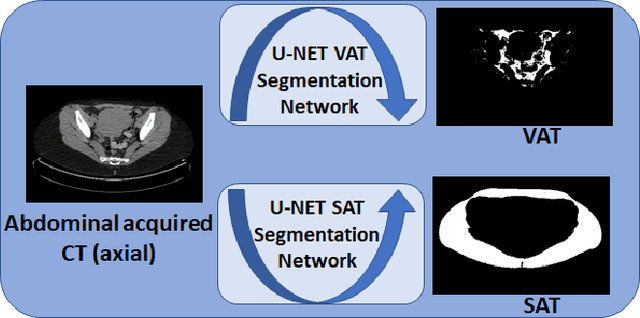

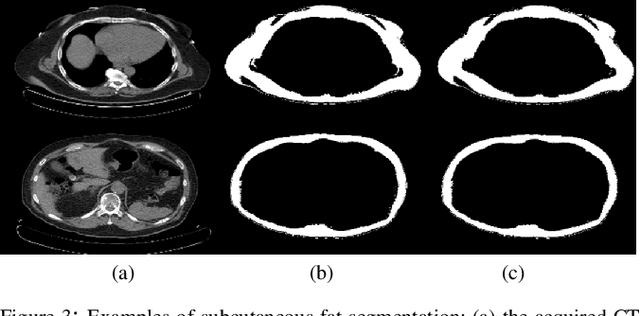

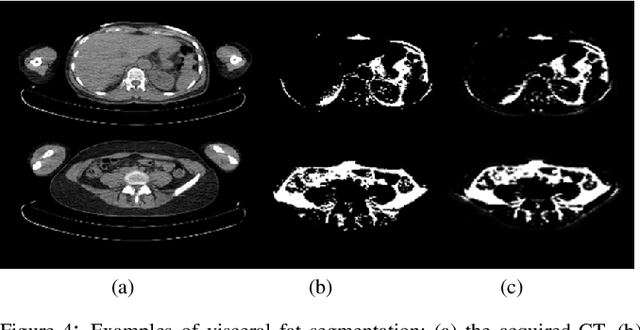

Adipose Tissue Segmentation in Unlabeled Abdomen MRI using Cross Modality Domain Adaptation

May 11, 2020

Abstract: Abdominal fat quantification is critical since multiple vital organs are located within this region. Although computed tomography (CT) is a highly sensitive modality for segmenting body fat, it involves ionizing radiation, which makes magnetic resonance imaging (MRI) a preferable alternative for this purpose. Additionally, the superior soft tissue contrast in MRI could lead to more accurate results. Yet, it is highly labor intensive to segment fat in MRI scans. In this study, we propose a deep learning based algorithm to automatically quantify fat tissue from MR images through cross-modality adaptation. Our method does not require supervised labeling of MR scans; instead, we utilize a cycle generative adversarial network (C-GAN) to construct a pipeline that transforms the existing MR scans into their equivalent synthetic CT (s-CT) images, where fat segmentation is relatively easier due to the descriptive nature of Hounsfield units (HU) in CT images. The fat segmentation results for MRI scans were evaluated by an expert radiologist. Qualitative evaluation of our segmentation results shows average success scores of 3.80/5 and 4.54/5 for visceral and subcutaneous fat segmentation in MR images, respectively.
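To illustrate why fat segmentation is easier once an image is expressed in Hounsfield units, here is a minimal sketch of HU windowing on a toy slice. The window of -190 to -30 HU is a commonly cited range for adipose tissue and is an assumption here; the abstract does not state which thresholds or segmentation method the authors applied to the s-CT images. The names `fat_mask` and `fat_area_mm2` are hypothetical.

```python
# Commonly cited HU window for adipose tissue (an assumption, not from the paper).
FAT_HU_MIN = -190
FAT_HU_MAX = -30


def fat_mask(slice_hu, lo=FAT_HU_MIN, hi=FAT_HU_MAX):
    """Return a binary mask (1 = fat) for a 2D slice given as rows of HU values."""
    return [[1 if lo <= v <= hi else 0 for v in row] for row in slice_hu]


def fat_area_mm2(mask, pixel_spacing_mm=(1.0, 1.0)):
    """Fat area of one slice: number of fat pixels times the area of one pixel."""
    pixel_area = pixel_spacing_mm[0] * pixel_spacing_mm[1]
    return sum(sum(row) for row in mask) * pixel_area


# Toy 3x3 "synthetic CT" slice in HU (air, fat, soft tissue, bone-like values).
toy_slice = [
    [-1000, -100, 40],
    [-80, -50, 300],
    [0, -200, -30],
]

mask = fat_mask(toy_slice)
area = fat_area_mm2(mask, pixel_spacing_mm=(0.5, 0.5))
print(mask, area)
```

Separating visceral from subcutaneous fat needs additional anatomical information (for example, the abdominal wall boundary), which this sketch does not attempt.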

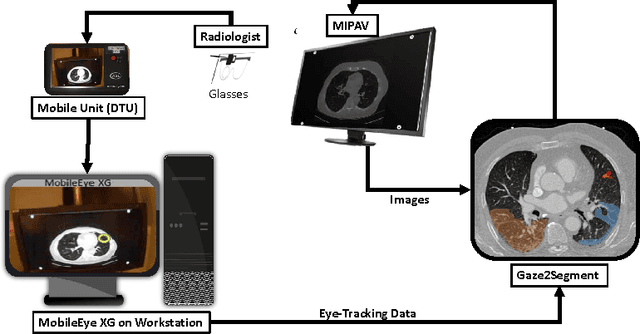

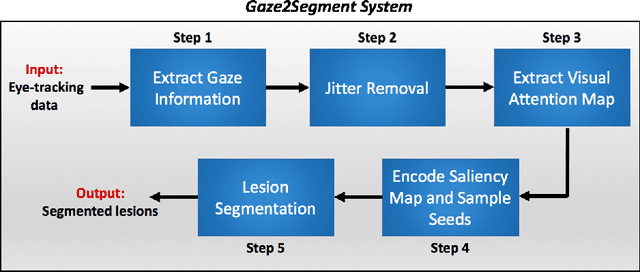

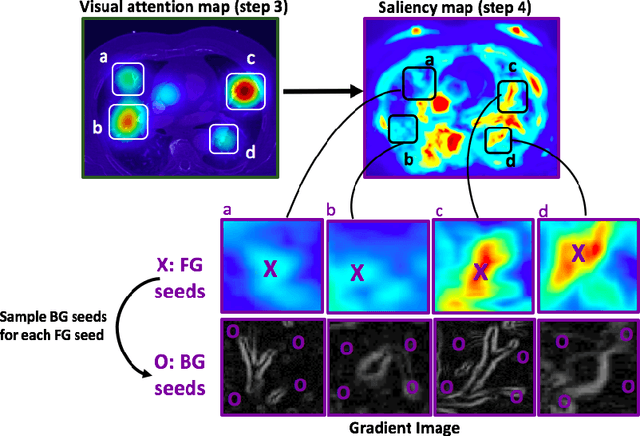

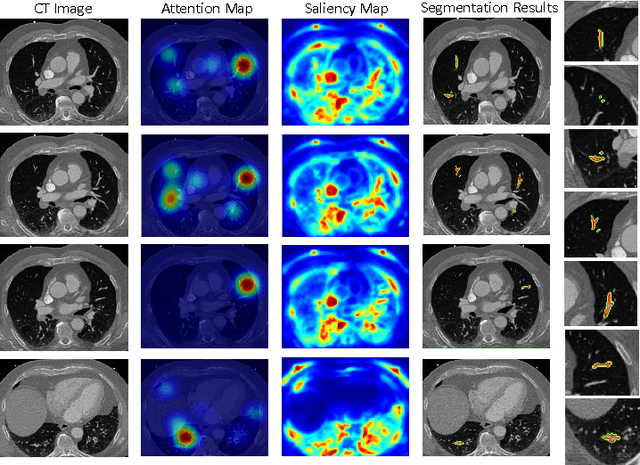

Gaze2Segment: A Pilot Study for Integrating Eye-Tracking Technology into Medical Image Segmentation

Aug 10, 2016

Abstract: This study introduced a novel system, called Gaze2Segment, integrating biological and computer vision techniques to support radiologists' reading experience with an automatic image segmentation task. During diagnostic assessment of lung CT scans, the radiologists' gaze information was used to create a visual attention map. This map was then combined with a computer-derived saliency map, extracted from the gray-scale CT images. The visual attention map was used as an input indicating the rough location of an object of interest. With the computer-derived saliency information, on the other hand, we aimed at finding foreground and background cues for the object of interest. In the final step, these cues were used to initiate a seed-based delineation process. The segmentation accuracy of the proposed Gaze2Segment was found to be 86% in terms of Dice similarity coefficient and 1.45 mm in terms of Hausdorff distance. To the best of our knowledge, Gaze2Segment is the first true integration of eye-tracking technology into a medical image segmentation task without the need for any further user interaction.
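For readers unfamiliar with the two reported metrics, the sketch below computes a Dice similarity coefficient and a symmetric Hausdorff distance on toy pixel sets. It uses the standard textbook definitions; it is not the authors' evaluation code, the coordinates are made up, and distances are in pixel units rather than millimetres. Evaluations of this kind usually compute Hausdorff distance on segmentation boundaries, whereas this sketch uses every pixel of each region for brevity.

```python
import math


def dice(a, b):
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|) for two pixel sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # convention: two empty segmentations agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


def directed_hausdorff(a, b):
    """Largest distance from a point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy segmentations as (row, col) pixel coordinates.
pred = [(0, 0), (0, 1), (1, 0), (1, 1)]
truth = [(0, 1), (1, 1), (0, 2), (1, 2)]

print(dice(pred, truth))       # overlap of 2 pixels out of 4 + 4
print(hausdorff(pred, truth))  # worst-case nearest-neighbour distance
```

To report the distance in millimetres, the pixel coordinates would first be scaled by the scan's pixel spacing.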

Unsupervised deconvolution of dynamic imaging reveals intratumor vascular heterogeneity

Sep 04, 2014

Abstract: Intratumor heterogeneity is often manifested by vascular compartments with distinct pharmacokinetics that cannot be resolved directly by in vivo dynamic imaging. We developed tissue-specific compartment modeling (TSCM), an unsupervised computational method of deconvolving dynamic imaging series from heterogeneous tumors that can improve vascular phenotyping in many biological contexts. Applying TSCM to dynamic contrast-enhanced MRI of breast cancers revealed characteristic intratumor vascular heterogeneity and therapeutic responses that were otherwise undetectable.