Brad J. Wood

Location-based Radiology Report-Guided Semi-supervised Learning for Prostate Cancer Detection

Jun 18, 2024

Abstract:Prostate cancer is one of the most prevalent malignancies in the world. While deep learning has potential to further improve computer-aided prostate cancer detection on MRI, its efficacy hinges on the exhaustive curation of manually annotated images. We propose a novel methodology of semi-supervised learning (SSL) guided by automatically extracted clinical information, specifically the lesion locations in radiology reports, allowing for use of unannotated images to reduce the annotation burden. By leveraging lesion locations, we refined pseudo labels, which were then used to train our location-based SSL model. We show that our SSL method can improve prostate lesion detection by utilizing unannotated images, with more substantial impacts being observed when larger proportions of unannotated images are used.

Learning Deep Similarity Metric for 3D MR-TRUS Registration

Oct 15, 2018

Abstract:Purpose: The fusion of transrectal ultrasound (TRUS) and magnetic resonance (MR) images for guiding targeted prostate biopsy has significantly improved the biopsy yield of aggressive cancers. A key component of MR-TRUS fusion is image registration. However, robust automatic MR-TRUS registration is very challenging to obtain because of the large appearance difference between the two imaging modalities. The work presented in this paper aims to tackle this problem by addressing two challenges: (i) the definition of a suitable similarity metric and (ii) the determination of a suitable optimization strategy. Methods: This work proposes the use of a deep convolutional neural network to learn a similarity metric for MR-TRUS registration. We also use a composite optimization strategy that explores the solution space to search for a suitable initialization for the second-order optimization of the learned metric. Further, a multi-pass approach is used to smooth the metric for optimization. Results: The learned similarity metric outperforms classical mutual information and also the state-of-the-art MIND feature-based methods. The results indicate that the overall registration framework has a large capture range. The proposed deep similarity metric-based approach obtained a mean target registration error (TRE) of 3.86 mm (with an initial TRE of 16 mm) for this challenging problem. Conclusion: A similarity metric that is learned using a deep neural network can be used to assess the quality of any given image registration and can be used in conjunction with the aforementioned optimization framework to perform automatic registration that is robust to poor initialization.
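For readers unfamiliar with the metric reported above, TRE is commonly computed as the mean Euclidean distance between corresponding landmarks after registration. The sketch below illustrates that general definition only; the abstract does not say how landmarks were selected or paired, and the function name and coordinates are hypothetical, not taken from the paper.

```python
import math


def target_registration_error(fixed_points, moved_points):
    """Mean Euclidean distance between paired landmarks.

    The result is in the same units as the inputs (e.g. mm).
    """
    if len(fixed_points) != len(moved_points) or not fixed_points:
        raise ValueError("need two non-empty landmark lists of equal length")
    distances = [math.dist(p, q) for p, q in zip(fixed_points, moved_points)]
    return sum(distances) / len(distances)


# Hypothetical landmark coordinates in mm (illustrative, not data from the paper).
mr_landmarks = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
trus_landmarks_after_registration = [(3.0, 4.0, 0.0), (10.0, 0.0, 5.0)]

mean_tre = target_registration_error(mr_landmarks, trus_landmarks_after_registration)
print(f"mean TRE: {mean_tre:.2f} mm")
```

A lower value after registration than before it (as with the 16 mm initial and 3.86 mm final figures quoted in the abstract) indicates that the registration moved the landmarks closer to their true correspondences.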

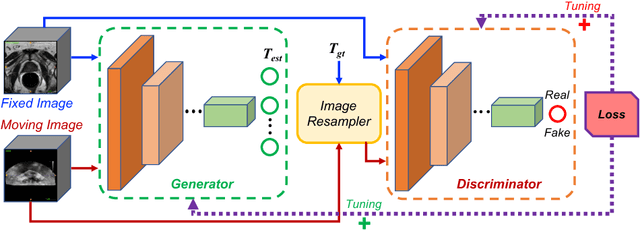

Adversarial Image Registration with Application for MR and TRUS Image Fusion

Oct 01, 2018

Abstract:Robust and accurate alignment of multimodal medical images is a very challenging task that is nonetheless very useful for many clinical applications. For example, magnetic resonance (MR) and transrectal ultrasound (TRUS) image registration is a critical component in MR-TRUS fusion-guided prostate interventions. However, due to the huge difference between the image appearances and the large variation in image correspondence, MR-TRUS image registration is a very challenging problem. In this paper, an adversarial image registration (AIR) framework is proposed. By training two deep neural networks simultaneously, one being a generator and the other being a discriminator, we can obtain not only a network for image registration, but also a metric network which can help evaluate the quality of image registration. The developed AIR-net is then evaluated using clinical datasets acquired through image-fusion-guided prostate biopsy procedures, and promising results are demonstrated.
