Syed M. Anwar

Zero-Shot Pediatric Tuberculosis Detection in Chest X-Rays using Self-Supervised Learning

Feb 22, 2024

Abstract: Tuberculosis (TB) remains a significant global health challenge, with pediatric cases posing a major concern. The World Health Organization (WHO) advocates for chest X-rays (CXRs) for TB screening. However, visual interpretation by radiologists can be subjective, time-consuming, and prone to error, especially in pediatric TB. Artificial intelligence (AI)-driven computer-aided detection (CAD) tools, especially those utilizing deep learning, show promise in enhancing lung disease detection. However, challenges include data scarcity and lack of generalizability. In this context, we propose a novel self-supervised paradigm leveraging Vision Transformers (ViT) for improved TB detection in CXR, enabling zero-shot pediatric TB detection. We demonstrate improvements in TB detection performance ($\sim$12.7% and $\sim$13.4% top AUC/AUPR gains in adults and children, respectively) with self-supervised pre-training compared to fully-supervised (i.e., non-pre-trained) ViT models, achieving top performances of 0.959 AUC and 0.962 AUPR in adult TB detection, and 0.697 AUC and 0.607 AUPR in zero-shot pediatric TB detection. As a result, this work demonstrates that self-supervised learning on adult CXRs effectively extends to challenging downstream tasks such as pediatric TB detection, where data are scarce.

Quantitative Metrics for Benchmarking Medical Image Harmonization

Feb 06, 2024

Abstract: Image harmonization is an important preprocessing strategy to address domain shifts arising from data acquired using different machines and scanning protocols in medical imaging. However, benchmarking the effectiveness of harmonization techniques has been a challenge due to the lack of widely available standardized datasets with ground truths. In this context, we propose three metrics: two intensity harmonization metrics and one anatomy preservation metric for medical images during harmonization, where no ground truths are required. Through extensive studies on a dataset with available harmonization ground truth, we demonstrate that our metrics are correlated with established image quality assessment metrics. We show how these novel metrics may be applied to real-world scenarios where no harmonization ground truth exists. Additionally, we provide insights into different interpretations of the metric values, shedding light on their significance in the context of the harmonization process. As a result of our findings, we advocate for the adoption of these quantitative harmonization metrics as a standard for benchmarking the performance of image harmonization techniques.

Classification of Arrhythmia by Using Deep Learning with 2-D ECG Spectral Image Representation

May 25, 2020

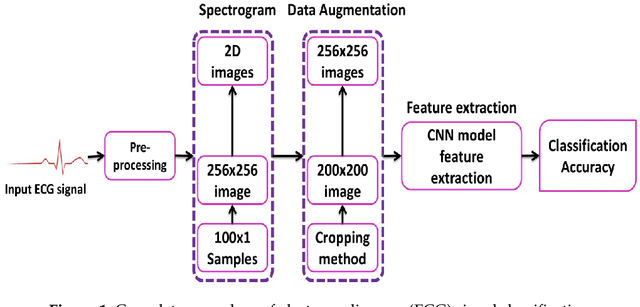

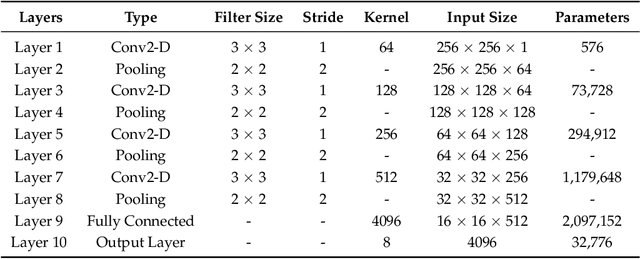

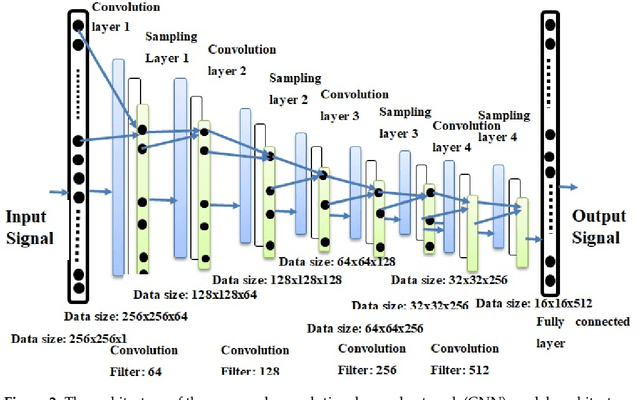

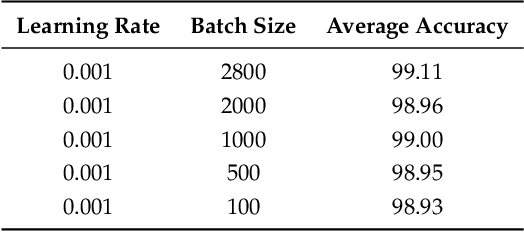

Abstract: The electrocardiogram (ECG) is one of the most extensively employed signals in the diagnosis and prediction of cardiovascular diseases (CVDs). ECG signals can capture the heart's rhythmic irregularities, commonly known as arrhythmias. A careful study of ECG signals is crucial for precise diagnoses of patients' acute and chronic heart conditions. In this study, we propose a two-dimensional (2-D) convolutional neural network (CNN) model for the classification of ECG signals into eight classes, namely, normal beat, premature ventricular contraction beat, paced beat, right bundle branch block beat, left bundle branch block beat, atrial premature contraction beat, ventricular flutter wave beat, and ventricular escape beat. The one-dimensional ECG time series signals are transformed into 2-D spectrograms through the short-time Fourier transform. The 2-D CNN model, consisting of four convolutional layers and four pooling layers, is designed for extracting robust features from the input spectrograms. Our proposed methodology is evaluated on the publicly available MIT-BIH arrhythmia dataset. We achieved a state-of-the-art average classification accuracy of 99.11%, which is better than recently reported results for classifying similar types of arrhythmias. The method also performs well on other indices, including sensitivity and specificity, which indicates the success of the proposed method.

* 14 pages, 5 figures, accepted for future publication in Remote Sensing MDPI Journal
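
The transformation of a one-dimensional ECG series into a 2-D spectrogram, as described in the abstract above, can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `stft_spectrogram`, the Hann window, the window length, the hop size, and the log scaling are all assumptions chosen for illustration, and a synthetic sine wave stands in for a real ECG segment.

```python
import numpy as np


def stft_spectrogram(signal, fs, win_len=64, hop=32):
    """Return (freqs, times, log-magnitude spectrogram) of a 1-D signal.

    win_len and hop are illustrative values, not taken from the paper.
    """
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack(
        [signal[i * hop : i * hop + win_len] * window for i in range(n_frames)]
    )
    # One FFT per frame; transpose so rows are frequencies, columns are time.
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, np.log1p(magnitude)


# Synthetic stand-in for an ECG segment: a 45 Hz tone, 720 samples.
fs = 360  # assumed sampling rate in Hz
t = np.arange(720) / fs
test_signal = np.sin(2 * np.pi * 45 * t)

freqs, times, spec = stft_spectrogram(test_signal, fs)
peak_freq = freqs[spec.mean(axis=1).argmax()]
```

The resulting 2-D array `spec` (frequency by time) is the kind of image-like input that a 2-D CNN could consume; resizing, normalization, and beat segmentation are left out of this sketch.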

Adipose Tissue Segmentation in Unlabeled Abdomen MRI using Cross Modality Domain Adaptation

May 11, 2020

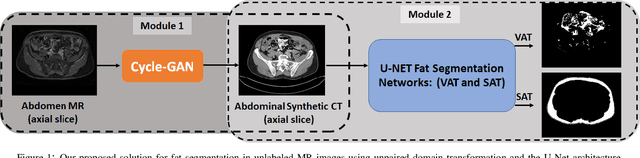

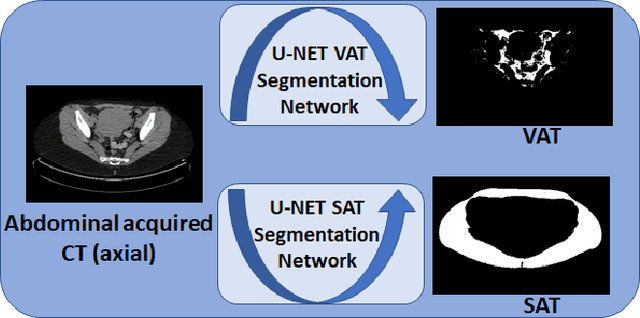

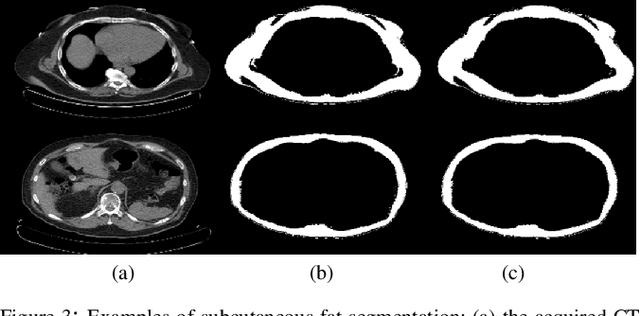

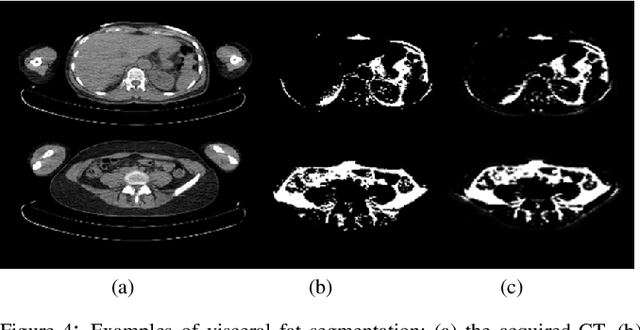

Abstract: Abdominal fat quantification is critical since multiple vital organs are located within this region. Although computed tomography (CT) is a highly sensitive modality for segmenting body fat, it involves ionizing radiation, which makes magnetic resonance imaging (MRI) a preferable alternative for this purpose. Additionally, the superior soft tissue contrast in MRI could lead to more accurate results. Yet, it is highly labor intensive to segment fat in MRI scans. In this study, we propose a deep learning-based algorithm to automatically quantify fat tissue from MR images through cross-modality adaptation. Our method does not require supervised labeling of MR scans; instead, we utilize a cycle generative adversarial network (C-GAN) to construct a pipeline that transforms existing MR scans into their equivalent synthetic CT (s-CT) images, where fat segmentation is relatively easier due to the descriptive nature of Hounsfield units (HU) in CT images. The fat segmentation results for MRI scans were evaluated by an expert radiologist. Qualitative evaluation of our segmentation results shows average success scores of 3.80/5 and 4.54/5 for visceral and subcutaneous fat segmentation in MR images, respectively.
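
To illustrate why fat segmentation is relatively easy once an image is expressed in Hounsfield units, the following minimal sketch thresholds a CT-like array on a fixed HU window. The window of -190 to -30 HU is a commonly cited range for adipose tissue and is an assumption here, not a value from the paper; the function names `fat_mask` and `fat_volume_ml` and the toy array are hypothetical.

```python
import numpy as np

# Assumed HU window for adipose tissue (commonly cited, not from the paper).
FAT_HU_MIN, FAT_HU_MAX = -190.0, -30.0


def fat_mask(ct_hu, lo=FAT_HU_MIN, hi=FAT_HU_MAX):
    """Boolean mask of voxels whose HU value falls inside the fat window."""
    ct_hu = np.asarray(ct_hu, dtype=float)
    return (ct_hu >= lo) & (ct_hu <= hi)


def fat_volume_ml(mask, voxel_spacing_mm):
    """Volume of masked voxels in millilitres, given voxel spacing in mm."""
    voxel_mm3 = float(np.prod(voxel_spacing_mm))
    return float(mask.sum()) * voxel_mm3 / 1000.0


# Toy values: air, fat, soft tissue / fat, bone, below the fat window.
toy_slice = np.array([[-1000.0, -100.0, 40.0],
                      [-50.0, 300.0, -200.0]])
mask = fat_mask(toy_slice)
volume = fat_volume_ml(mask, (1.0, 1.0, 5.0))
```

In a cross-modality pipeline such as the one described, a threshold of this kind would be applied to the synthetic CT rather than to the MR image directly; separating visceral from subcutaneous fat needs additional anatomical steps that this sketch does not attempt.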
