Elizabeth Jones

A Collaborative Computer Aided Diagnosis (C-CAD) System with Eye-Tracking, Sparse Attentional Model, and Deep Learning

Apr 28, 2018

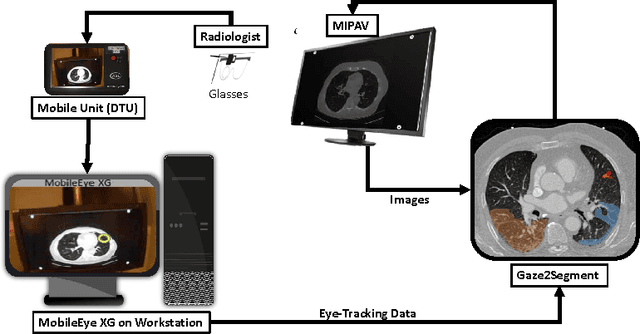

Abstract: There are at least two categories of errors in radiology screening that can lead to suboptimal diagnostic decisions and interventions: (i) human fallibility and (ii) the complexity of visual search. Computer-aided diagnosis (CAD) tools have been developed to help radiologists compensate for some of these errors. However, despite their significant improvements over conventional screening strategies, most CAD systems are used only as second-opinion tools because they produce a high number of false positives, which human interpreters must then correct. In parallel with efforts in the computerized analysis of radiology scans, several researchers have examined the behavior of radiologists while they screen medical images, to better understand how and why they miss tumors, how they interact with the information in an image, and how they search for unknown pathology. Eye-tracking tools have been instrumental in exploring answers to these fundamental questions. In this paper, we aim to develop a paradigm-shifting CAD system, called collaborative CAD (C-CAD), that unifies both of the above-mentioned research lines: CAD and eye-tracking. We design an eye-tracking interface that gives radiologists an experience close to that of a real radiology reading room. We then propose a novel algorithm that unifies eye-tracking data and a CAD system. Specifically, we present a new graph-based clustering and sparsification algorithm that transforms eye-tracking data (gaze) into a signal model, so that gaze patterns can be interpreted both quantitatively and qualitatively. The proposed C-CAD collaborates with radiologists via eye-tracking technology and helps them improve their diagnostic decisions. C-CAD learns radiologists' search efficiency by processing their gaze patterns. To do this, C-CAD uses a deep learning algorithm within a newly designed multi-task learning platform that segments and diagnoses cancers simultaneously.
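The abstract describes the graph-based clustering and sparsification of gaze only at a high level. As a rough illustration, and not the authors' algorithm, the Python sketch below treats each gaze sample as a graph node, links samples that lie within a hypothetical `radius` (in pixels), takes connected components as clusters, and then sparsifies the result by discarding clusters smaller than a hypothetical `min_size` and keeping one centroid per surviving cluster. The function name `cluster_gaze` and both parameters are assumptions made for this sketch.

```python
from collections import deque
from math import hypot


def cluster_gaze(points, radius=30.0, min_size=3):
    """Illustrative sketch (not the C-CAD algorithm): cluster gaze samples
    as connected components of a radius graph, then sparsify by keeping
    only clusters with at least `min_size` samples, each summarized by
    (centroid_x, centroid_y, sample_count).

    `radius` (pixels) and `min_size` are hypothetical parameters.
    """
    n = len(points)
    # Build adjacency lists: an edge joins two samples no farther than `radius`.
    adj = [[] for _ in range(n)]
    for i in range(n):
        xi, yi = points[i]
        for j in range(i + 1, n):
            xj, yj = points[j]
            if hypot(xi - xj, yi - yj) <= radius:
                adj[i].append(j)
                adj[j].append(i)

    # Breadth-first search collects each connected component.
    seen = [False] * n
    clusters = []
    for start in range(n):
        if seen[start]:
            continue
        seen[start] = True
        queue = deque([start])
        members = []
        while queue:
            u = queue.popleft()
            members.append(u)
            for v in adj[u]:
                if not seen[v]:
                    seen[v] = True
                    queue.append(v)
        clusters.append(members)

    # Sparsification: drop small components (treated here as noise)
    # and keep one centroid per surviving component.
    centroids = []
    for members in clusters:
        if len(members) >= min_size:
            cx = sum(points[k][0] for k in members) / len(members)
            cy = sum(points[k][1] for k in members) / len(members)
            centroids.append((cx, cy, len(members)))
    return centroids


gaze = [(100, 100), (105, 98), (110, 102),   # dwell near one spot
        (400, 300), (402, 305), (398, 295),  # dwell near another spot
        (700, 50)]                           # isolated sample
print(cluster_gaze(gaze))  # [(105.0, 100.0, 3), (400.0, 300.0, 3)]
```

The all-pairs edge construction is O(n²) and keeps the sketch short; a spatial index would be a more practical choice for long gaze recordings. Treating the radius as a fixation-dispersion limit is likewise an assumption of this sketch.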

Gaze2Segment: A Pilot Study for Integrating Eye-Tracking Technology into Medical Image Segmentation

Aug 10, 2016

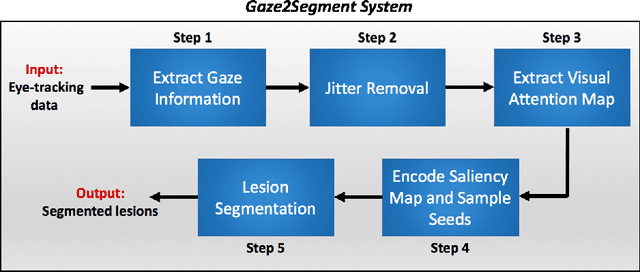

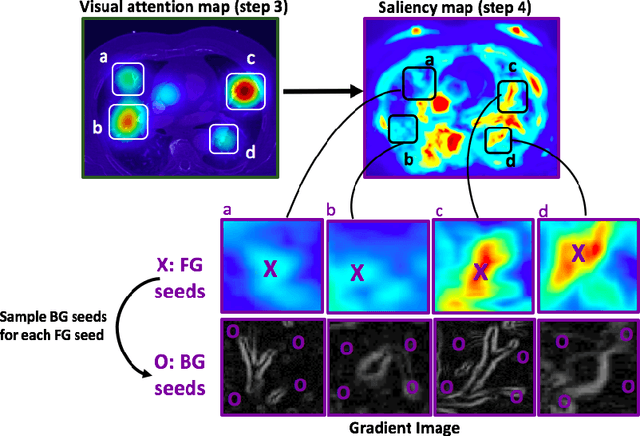

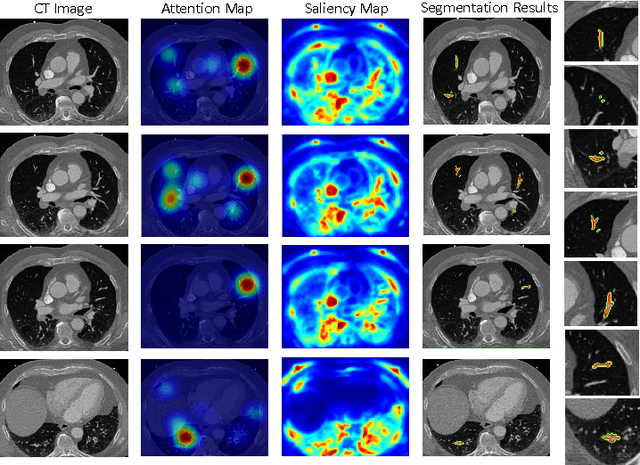

Abstract: This study introduced a novel system, called Gaze2Segment, that integrates biological and computer vision techniques to support radiologists' reading experience with an automatic image segmentation task. During diagnostic assessment of lung CT scans, the radiologists' gaze information was used to create a visual attention map. This map was then combined with a computer-derived saliency map extracted from the gray-scale CT images. The visual attention map served as an input indicating the rough location of an object of interest, while the computer-derived saliency information was used to find foreground and background cues for that object. In the final step, these cues were used to initialize a seed-based delineation process. Gaze2Segment achieved a Dice similarity coefficient of 86% and a Hausdorff distance of 1.45 mm. To the best of our knowledge, Gaze2Segment is the first true integration of eye-tracking technology into a medical image segmentation task that requires no further user interaction.
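The two reported metrics have standard definitions: for a predicted mask A and a reference mask B, the Dice similarity coefficient is 2|A ∩ B| / (|A| + |B|), and the Hausdorff distance is the largest distance from any point in one set to its nearest point in the other. The minimal Python sketch below computes both for masks given as sets of voxel indices. The function names, the `spacing` parameter (mm per voxel), and the toy masks are illustrative assumptions; the abstract does not say exactly how its Hausdorff distance was computed (for example, on boundary voxels only).

```python
from math import dist


def dice(mask_a, mask_b):
    """Dice similarity coefficient of two binary masks given as sets of
    voxel indices: 2|A ∩ B| / (|A| + |B|)."""
    if not mask_a and not mask_b:
        return 1.0  # two empty masks agree perfectly
    return 2 * len(mask_a & mask_b) / (len(mask_a) + len(mask_b))


def hausdorff(points_a, points_b, spacing=(1.0, 1.0, 1.0)):
    """Symmetric Hausdorff distance between two non-empty point sets.
    Voxel indices are scaled by `spacing` (hypothetical, mm per voxel),
    so the result is in mm."""
    a = [tuple(c * s for c, s in zip(p, spacing)) for p in points_a]
    b = [tuple(c * s for c, s in zip(p, spacing)) for p in points_b]
    # Directed distance: the worst nearest-neighbour distance from one set to the other.
    d_ab = max(min(dist(p, q) for q in b) for p in a)
    d_ba = max(min(dist(q, p) for p in a) for q in b)
    return max(d_ab, d_ba)


# Toy 3-D masks (voxel indices) that share three of their four voxels.
reference = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)}
predicted = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 2)}
print(dice(predicted, reference))  # 0.75
print(round(hausdorff(predicted, reference, spacing=(1.0, 0.7, 0.7)), 3))  # 0.7
```

The brute-force nearest-neighbour search is quadratic in the number of points, which is fine for small masks; computing the Hausdorff distance on surface voxels only is a common choice for larger volumes.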
