Pengying Wu

CoINS: Counterfactual Interactive Navigation via Skill-Aware VLM

Jan 07, 2026

Abstract:Recent Vision-Language Models (VLMs) have demonstrated significant potential in robotic planning. However, they typically function as semantic reasoners, lacking an intrinsic understanding of the specific robot's physical capabilities. This limitation is particularly critical in interactive navigation, where robots must actively modify cluttered environments to create traversable paths. Existing VLM-based navigators are predominantly confined to passive obstacle avoidance, failing to reason about when and how to interact with objects to clear blocked paths. To bridge this gap, we propose Counterfactual Interactive Navigation via Skill-aware VLM (CoINS), a hierarchical framework that integrates skill-aware reasoning and robust low-level execution. Specifically, we fine-tune a VLM, named InterNav-VLM, which incorporates skill affordance and concrete constraint parameters into the input context and grounds them into a metric-scale environmental representation. By internalizing the logic of counterfactual reasoning through fine-tuning on the proposed InterNav dataset, the model learns to implicitly evaluate the causal effects of object removal on navigation connectivity, thereby determining interaction necessity and target selection. To execute the generated high-level plans, we develop a comprehensive skill library through reinforcement learning, specifically introducing traversability-oriented strategies to manipulate diverse objects for path clearance. A systematic benchmark in Isaac Sim is proposed to evaluate both the reasoning and execution aspects of interactive navigation. Extensive simulations and real-world experiments demonstrate that CoINS significantly outperforms representative baselines, achieving a 17% higher overall success rate and over 80% improvement in complex long-horizon scenarios compared to the best-performing baseline.
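The counterfactual question in this abstract ("would removing this object reconnect the robot to the goal?") can be illustrated explicitly on a toy occupancy grid. The sketch below is only an illustration of that idea, not the CoINS method: the paper describes a fine-tuned VLM that learns this reasoning implicitly, whereas here the check is a plain breadth-first search. The grid encoding (0 = free, 1 = fixed wall, integers of 2 or more = movable object ids) and the function names are assumptions made for this example.

```python
from collections import deque


def reachable(grid, start, goal):
    """Breadth-first search over 4-connected free cells (cells equal to 0)."""
    rows, cols = len(grid), len(grid[0])
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


def counterfactual_targets(grid, start, goal, movable_ids):
    """Return the movable objects whose removal alone reconnects start and goal.

    An empty list means either no interaction is needed (already reachable)
    or no single removal is sufficient.
    """
    if reachable(grid, start, goal):
        return []
    targets = []
    for obj in movable_ids:
        # Counterfactual world: the same grid with this one object cleared.
        cleared = [[0 if cell == obj else cell for cell in row] for row in grid]
        if reachable(cleared, start, goal):
            targets.append(obj)
    return targets


# Hypothetical scene: object 2 blocks the only doorway, object 3 is irrelevant.
scene = [
    [0, 1, 0, 0],
    [0, 2, 0, 3],
    [0, 1, 0, 0],
]
targets = counterfactual_targets(scene, (1, 0), (1, 2), [2, 3])
```

In this toy scene only object 2 is worth interacting with, which mirrors the "interaction necessity and target selection" decision described above.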

SwarmDiff: Swarm Robotic Trajectory Planning in Cluttered Environments via Diffusion Transformer

May 21, 2025

Abstract:Swarm robotic trajectory planning faces challenges in computational efficiency, scalability, and safety, particularly in complex, obstacle-dense environments. To address these issues, we propose SwarmDiff, a hierarchical and scalable generative framework for swarm robots. We model the swarm's macroscopic state using Probability Density Functions (PDFs) and leverage conditional diffusion models to generate risk-aware macroscopic trajectory distributions, which then guide the generation of individual robot trajectories at the microscopic level. To ensure a balance between the swarm's optimal transportation and risk awareness, we integrate Wasserstein metrics and Conditional Value at Risk (CVaR). Additionally, we introduce a Diffusion Transformer (DiT) to improve sampling efficiency and generation quality by capturing long-range dependencies. Extensive simulations and real-world experiments demonstrate that SwarmDiff outperforms existing methods in computational efficiency, trajectory validity, and scalability, making it a reliable solution for swarm robotic trajectory planning.
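For readers unfamiliar with Conditional Value at Risk, the following is a minimal sample-based sketch of the quantity itself: the mean of the worst (1 - alpha) fraction of losses. How SwarmDiff defines its loss and combines CVaR with Wasserstein metrics is not stated in the abstract, so the loss values and the function name `empirical_cvar` are assumptions for illustration only.

```python
import math


def empirical_cvar(losses, alpha):
    """Mean of the worst (1 - alpha) fraction of sampled losses.

    This is one common empirical estimator; alpha is the confidence level,
    so alpha = 0 reduces to the ordinary mean.
    """
    if not losses:
        raise ValueError("losses must be non-empty")
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1)")
    ordered = sorted(losses, reverse=True)  # largest losses first
    # Rounding guards against floating-point noise such as 3.0000000000000004.
    tail_size = max(1, math.ceil(round((1.0 - alpha) * len(ordered), 9)))
    return sum(ordered[:tail_size]) / tail_size


# Hypothetical collision-risk samples along ten candidate trajectories.
risk_samples = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
tail_risk = empirical_cvar(risk_samples, alpha=0.8)  # mean of the two worst
mean_risk = empirical_cvar(risk_samples, alpha=0.0)  # plain average
```

Because CVaR averages over the tail rather than reporting a single quantile, it penalizes rare but severe outcomes, which is why it is a common choice for risk-aware planning.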

Adaptive Interactive Navigation of Quadruped Robots using Large Language Models

Mar 29, 2025

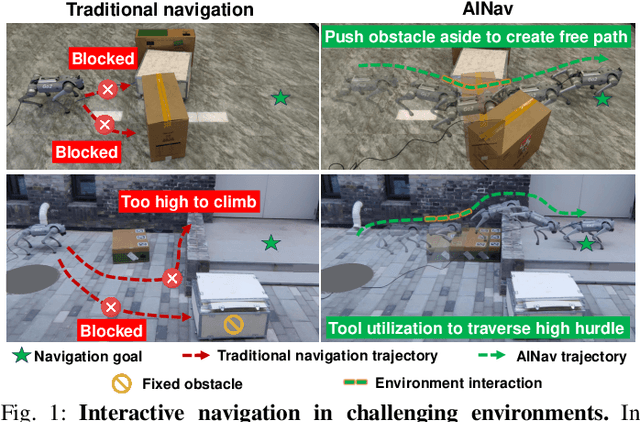

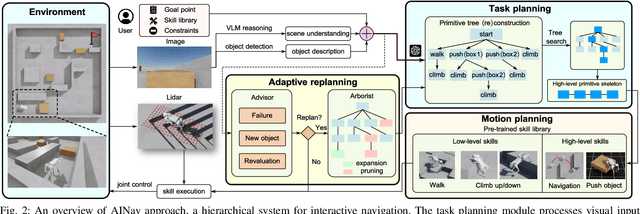

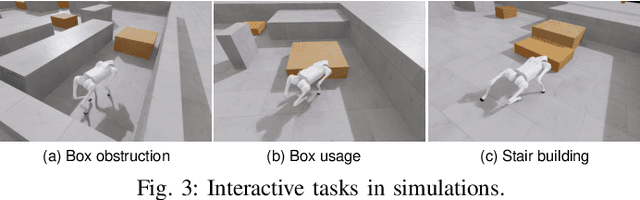

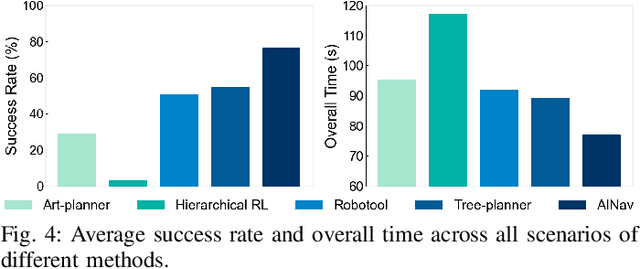

Abstract:Robotic navigation in complex environments remains a critical research challenge. Traditional navigation methods focus on optimal trajectory generation within free space, struggling in environments lacking viable paths to the goal, such as disaster zones or cluttered warehouses. To address this gap, we propose an adaptive interactive navigation approach that proactively interacts with environments to create feasible paths to reach originally unavailable goals. Specifically, we present a primitive tree for task planning with large language models (LLMs), facilitating effective reasoning to determine interaction objects and sequences. To ensure robust subtask execution, we adopt reinforcement learning to pre-train a comprehensive skill library containing versatile locomotion and interaction behaviors for motion planning. Furthermore, we introduce an adaptive replanning method featuring two LLM-based modules: an advisor serving as a flexible replanning trigger and an arborist for autonomous plan adjustment. Integrated with the tree structure, the replanning mechanism allows for convenient node addition and pruning, enabling rapid plan modification in unknown environments. Comprehensive simulations and experiments have demonstrated our method's effectiveness and adaptivity in diverse scenarios. The supplementary video is available at https://youtu.be/W5ttPnSap2g.

CAMON: Cooperative Agents for Multi-Object Navigation with LLM-based Conversations

Jun 30, 2024

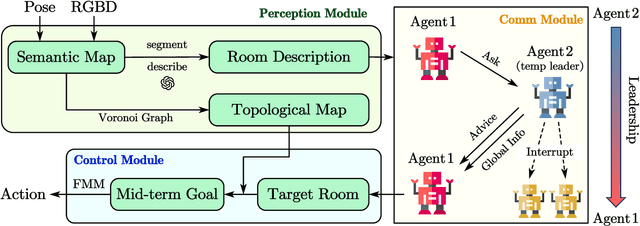

Abstract:Visual navigation tasks are critical for household service robots. As these tasks become increasingly complex, effective communication and collaboration among multiple robots become imperative to ensure successful completion. In recent years, large language models (LLMs) have exhibited remarkable comprehension and planning abilities in the context of embodied agents. However, their application in household scenarios, specifically in the use of multiple agents collaborating to complete complex navigation tasks through communication, remains unexplored. Therefore, this paper proposes a framework for decentralized multi-agent navigation, leveraging LLM-enabled communication and collaboration. By designing the communication-triggered dynamic leadership organization structure, we achieve faster team consensus with fewer communication instances, leading to better navigation effectiveness and collaborative exploration efficiency. With the proposed novel communication scheme, our framework promises to be conflict-free and robust in multi-object navigation tasks, even when there is a surge in team size.

ASPIRe: An Informative Trajectory Planner with Mutual Information Approximation for Target Search and Tracking

Mar 04, 2024

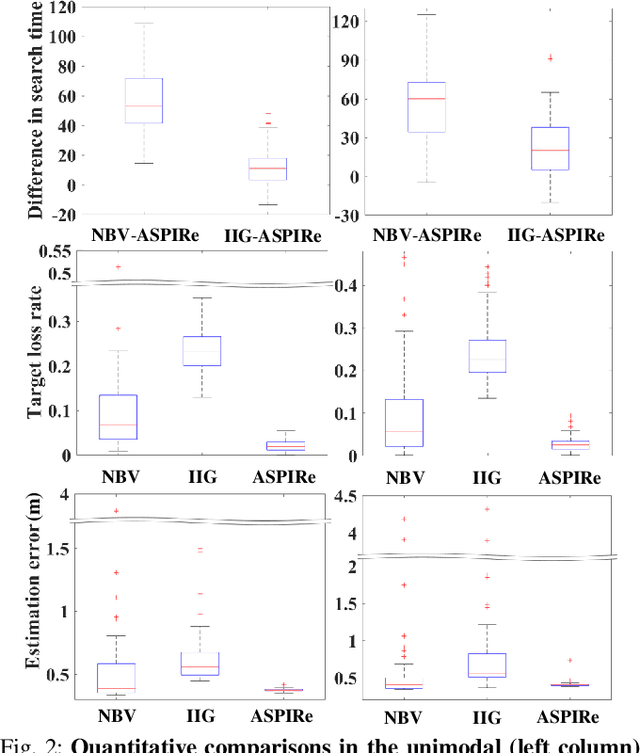

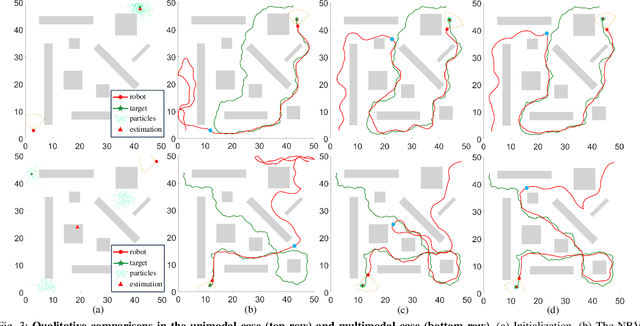

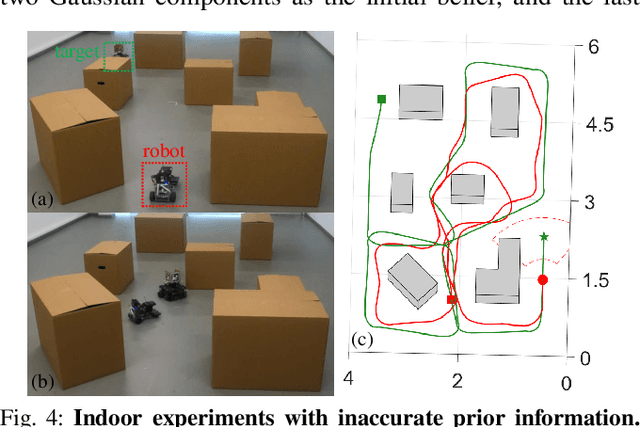

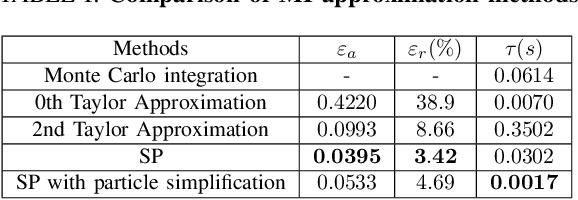

Abstract:This paper proposes an informative trajectory planning approach, namely, adaptive particle filter tree with sigma point-based mutual information reward approximation (ASPIRe), for mobile target search and tracking (SAT) in cluttered environments with limited sensing field of view. We develop a novel sigma point-based approximation to accurately estimate mutual information (MI) for general, non-Gaussian distributions utilizing particle representation of the belief state, while simultaneously maintaining high computational efficiency. Building upon the MI approximation, we develop the Adaptive Particle Filter Tree (APFT) approach with MI as the reward, which features belief state tree nodes for informative trajectory planning in continuous state and measurement spaces. An adaptive criterion is proposed in APFT to adjust the planning horizon based on the expected information gain. Simulations and physical experiments demonstrate that ASPIRe achieves real-time computation and outperforms benchmark methods in terms of both search efficiency and estimation accuracy.

DOZE: A Dataset for Open-Vocabulary Zero-Shot Object Navigation in Dynamic Environments

Feb 29, 2024

Abstract:Zero-Shot Object Navigation (ZSON) requires agents to autonomously locate and approach unseen objects in unfamiliar environments and has emerged as a particularly challenging task within the domain of Embodied AI. Existing datasets for developing ZSON algorithms lack consideration of dynamic obstacles, object attribute diversity, and scene texts, thus exhibiting noticeable discrepancy from real-world situations. To address these issues, we propose a Dataset for Open-Vocabulary Zero-Shot Object Navigation in Dynamic Environments (DOZE) that comprises ten high-fidelity 3D scenes with over 18k tasks, aiming to mimic complex, dynamic real-world scenarios. Specifically, DOZE scenes feature multiple moving humanoid obstacles, a wide array of open-vocabulary objects, diverse distinct-attribute objects, and valuable textual hints. Besides, different from existing datasets that only provide collision checking between the agent and static obstacles, we enhance DOZE by integrating capabilities for detecting collisions between the agent and moving obstacles. This novel functionality enables evaluation of the agents' collision avoidance abilities in dynamic environments. We test four representative ZSON methods on DOZE, revealing substantial room for improvement in existing approaches concerning navigation efficiency, safety, and object recognition accuracy. Our dataset can be found at https://DOZE-Dataset.github.io/.

VoroNav: Voronoi-based Zero-shot Object Navigation with Large Language Model

Jan 05, 2024

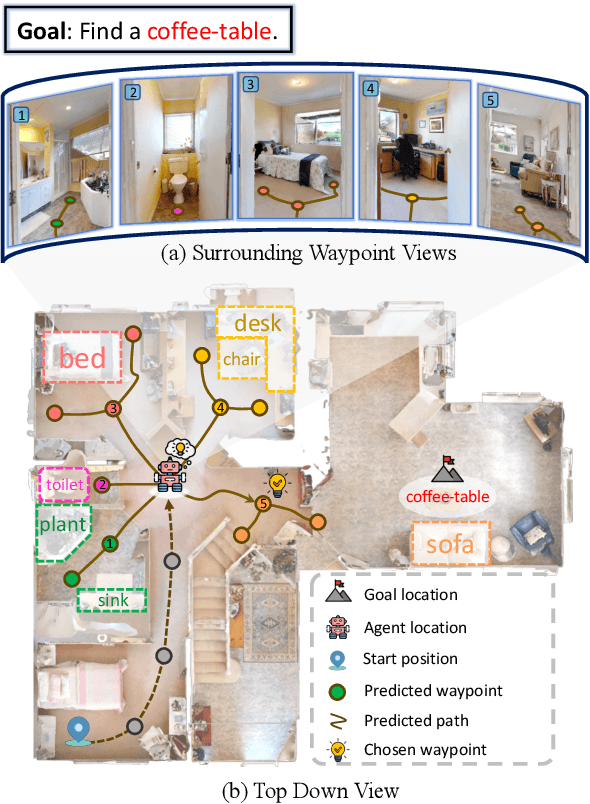

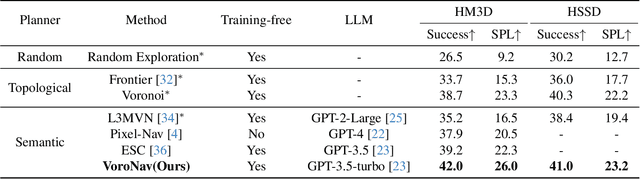

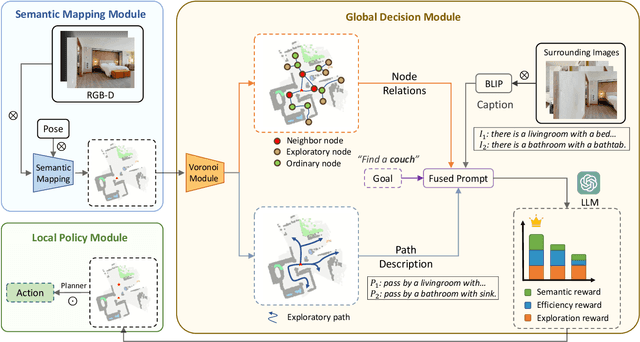

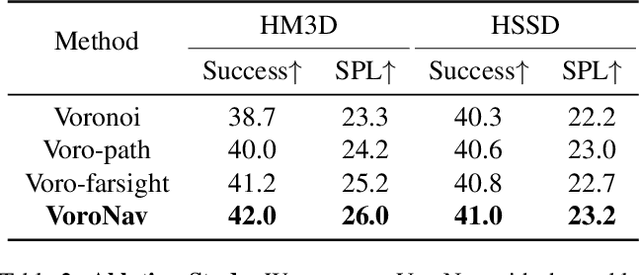

Abstract:In the realm of household robotics, the Zero-Shot Object Navigation (ZSON) task empowers agents to adeptly traverse unfamiliar environments and locate objects from novel categories without prior explicit training. This paper introduces VoroNav, a novel semantic exploration framework that proposes the Reduced Voronoi Graph to extract exploratory paths and planning nodes from a semantic map constructed in real time. By harnessing topological and semantic information, VoroNav designs text-based descriptions of paths and images that are readily interpretable by a large language model (LLM). Our approach presents a synergy of path and farsight descriptions to represent the environmental context, enabling the LLM to apply commonsense reasoning to ascertain the optimal waypoints for navigation. Extensive evaluation on the HM3D and HSSD datasets validates that VoroNav surpasses existing ZSON benchmarks in both success rates and exploration efficiency (+2.8% Success and +3.7% SPL on HM3D, +2.6% Success and +3.8% SPL on HSSD). Additionally introduced metrics that evaluate obstacle avoidance proficiency and perceptual efficiency further corroborate the enhancements achieved by our method in ZSON planning.
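The SPL figures quoted above refer to Success weighted by Path Length, a standard embodied-navigation metric: each successful episode contributes the ratio of the shortest-path length to the length actually travelled (capped at 1), and failed episodes contribute 0. The sketch below computes it under that standard definition; the episode tuples are made-up numbers, not results from the paper.

```python
def success_weighted_path_length(episodes):
    """Compute SPL over (success, shortest_len, path_len) episode tuples.

    shortest_len is the geodesic distance from start to goal and is assumed
    to be positive; path_len is the distance the agent actually travelled.
    """
    episodes = list(episodes)
    if not episodes:
        raise ValueError("at least one episode is required")
    total = 0.0
    for success, shortest_len, path_len in episodes:
        if success:
            # The max() caps the ratio at 1 when the logged path is shorter
            # than the reference shortest path (e.g. due to discretization).
            total += shortest_len / max(path_len, shortest_len)
    return total / len(episodes)


# Hypothetical episodes: optimal success, inefficient success, failure.
demo_episodes = [
    (True, 10.0, 10.0),
    (True, 10.0, 20.0),
    (False, 10.0, 5.0),
]
demo_spl = success_weighted_path_length(demo_episodes)
```

Here two of three episodes succeed (a success rate of about 0.67), but the detour in the second episode pulls SPL down to 0.5, which is why the two metrics are reported side by side.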

Probabilistic Visibility-Aware Trajectory Planning for Target Tracking in Cluttered Environments

Jun 10, 2023

Abstract:Target tracking with a mobile robot has numerous significant applications in both civilian and military domains. Practical challenges such as limited field-of-view, obstacle occlusion, and system uncertainty may all adversely affect tracking performance, yet few existing works can simultaneously tackle these limitations. To bridge the gap, we introduce the concept of belief-space probability of detection (BPOD) to measure the predictive visibility of the target under stochastic robot and target states. An Extended Kalman Filter variant incorporating BPOD is developed to predict target belief state under uncertain visibility within the planning horizon. Furthermore, we propose a computationally efficient algorithm to uniformly calculate both BPOD and the chance-constrained collision risk by utilizing linearized signed distance function (SDF), and then design a two-stage strategy for lightweight calculation of SDF in sequential convex programming. Building upon these treatments, we develop a real-time, non-myopic trajectory planner for visibility-aware and safe target tracking in the presence of system uncertainty. The effectiveness of the proposed approach is verified by both simulations and real-world experiments.
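To give a feel for why linearizing the signed distance function makes chance constraints cheap to evaluate, here is a minimal sketch of the textbook Gaussian case. It is not the paper's algorithm (the abstract does not give the BPOD or collision-risk formulas); it assumes the robot position is Gaussian with a known covariance and that the SDF is replaced by its first-order expansion around the mean, so the distance itself becomes a scalar Gaussian. The function name and arguments are hypothetical.

```python
import math


def linearized_collision_probability(sdf_at_mean, gradient, covariance):
    """Approximate P(sdf(x) <= 0) for x ~ N(mean, covariance).

    With sdf(x) ~= sdf(mean) + gradient . (x - mean), the distance is
    Gaussian with mean sdf(mean) and variance gradient^T covariance gradient.
    """
    n = len(gradient)
    variance = sum(
        gradient[i] * covariance[i][j] * gradient[j]
        for i in range(n)
        for j in range(n)
    )
    if variance <= 0.0:
        # No uncertainty along the gradient: the outcome is deterministic.
        return 1.0 if sdf_at_mean <= 0.0 else 0.0
    z = -sdf_at_mean / math.sqrt(variance)
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical case: 1 m clearance, unit position variance, gradient along x.
risk = linearized_collision_probability(1.0, [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
on_boundary = linearized_collision_probability(0.0, [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

A chance constraint of the form "collision probability at most delta" then reduces to a closed-form inequality on the mean clearance and its standard deviation, which is the kind of expression that fits into sequential convex programming.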
