Pengju Tian

RS-DFM: A Remote Sensing Distributed Foundation Model for Diverse Downstream Tasks

Jun 11, 2024

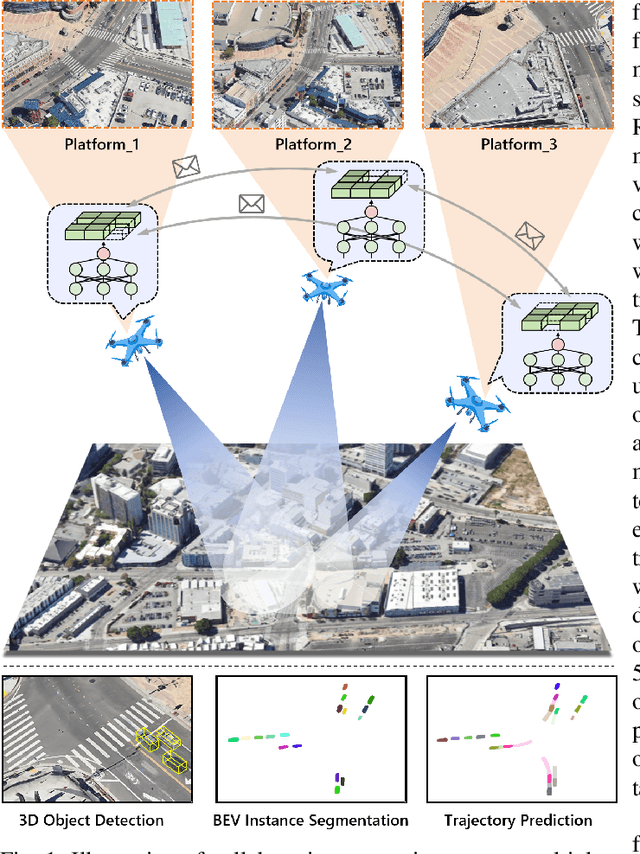

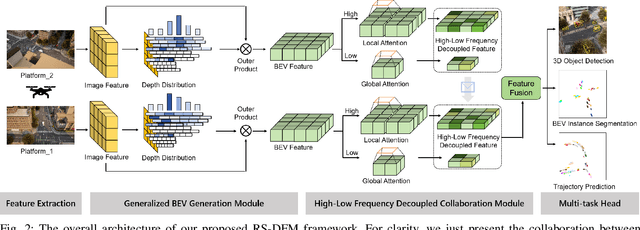

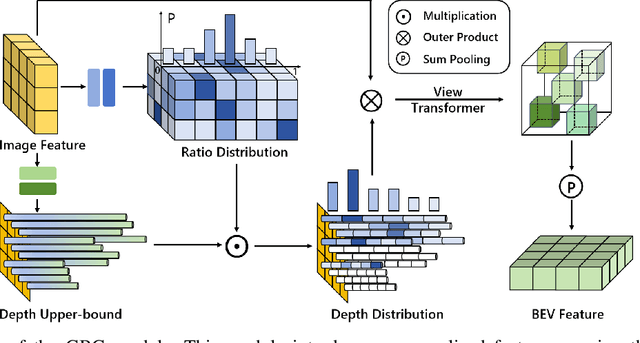

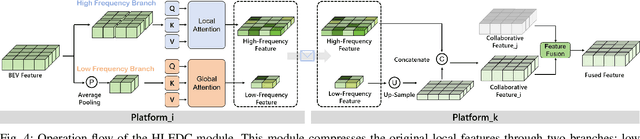

Abstract: Lightweight remote sensing foundation models have achieved notable success in online perception. However, they can perform online inference only from their own observations and models, and therefore lack a comprehensive understanding of large-scale remote sensing scenarios. To overcome this limitation, we propose a Remote Sensing Distributed Foundation Model (RS-DFM) based on generalized information mapping and interaction. The model enables online collaborative perception across multiple platforms and various downstream tasks by mapping observations into a unified space and applying a task-agnostic information interaction strategy. Specifically, we leverage the ground-based geometric prior of oblique remote sensing observations to transform the feature mapping from absolute depth estimation to relative depth estimation, thereby enhancing the model's ability to extract generalized features across diverse heights and perspectives. Additionally, we present a dual-branch information compression module that decouples high-frequency and low-frequency feature information, achieving feature-level compression while preserving essential task-agnostic details. In support of our research, we create a multi-task simulation dataset named AirCo-MultiTasks for multi-UAV collaborative observation. We also conduct extensive experiments on 3D object detection, instance segmentation, and trajectory prediction. The results demonstrate that RS-DFM achieves state-of-the-art performance across these downstream tasks.
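To make the idea of a ground-based geometric prior concrete, here is a minimal sketch of the flat-ground geometry that such a prior could rest on. It is not the RS-DFM implementation: the function name `ground_depth`, the pinhole camera model, the perfectly flat ground, and the restriction to pixels in the image's central column are all assumptions made for illustration. Given the camera height and downward pitch, each image row has a reference depth at which its viewing ray meets the ground, and a depth estimate could then be expressed relative to that reference rather than as an absolute value.

```python
import math


def ground_depth(v, height, pitch, focal, cy):
    """Depth along the optical axis where the ray through image row v hits flat ground.

    Hypothetical helper (not from the paper). Assumes a pinhole camera at
    `height` metres above a flat ground plane, tilted `pitch` radians below
    the horizontal, with focal length `focal` and principal-point row `cy`
    in pixels. Only the central image column is considered.
    """
    # Angle of the pixel ray below the optical axis (rows grow downward).
    offset = math.atan2(v - cy, focal)
    # Angle of the pixel ray below the horizontal.
    angle = pitch + offset
    if angle <= 0:
        # The ray points at or above the horizon and never reaches the ground.
        return math.inf
    ray_length = height / math.sin(angle)
    # Project the ray length onto the optical axis to obtain depth.
    return ray_length * math.cos(offset)


# A camera 100 m up and looking straight down sees the ground at 100 m depth.
nadir_depth = ground_depth(v=240, height=100.0, pitch=math.pi / 2, focal=500.0, cy=240)
```

Under these assumptions the reference depth depends only on known platform parameters, which is one plausible reason a ground prior helps across different flight heights and viewing angles.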

UVCPNet: A UAV-Vehicle Collaborative Perception Network for 3D Object Detection

Jun 07, 2024

Abstract: As collaborative perception advances, aerial-ground collaborative perception is becoming an increasingly important component of it, and the demand for collaboration across different perspectives to build more comprehensive perceptual information is growing. However, challenges arise from the disparities in field of view between cross-domain agents and from their varying sensitivity to information in images. Additionally, transforming image features into Bird's Eye View (BEV) features for collaboration requires accurate depth information. To address these issues, we propose a framework specifically designed for aerial-ground collaboration. First, to mitigate the lack of datasets for aerial-ground collaboration, we develop a virtual dataset named V2U-COO. Second, we design a Cross-Domain Cross-Adaptation (CDCA) module to align the target information obtained from different domains, thereby achieving more accurate perception results. Finally, we introduce a Collaborative Depth Optimization (CDO) module to obtain more precise depth estimates, leading to more accurate perception outcomes. We conduct extensive experiments on both our virtual dataset and a public dataset to validate the effectiveness of our framework. Experiments on the V2U-COO and DAIR-V2X datasets show that our method improves detection accuracy by 6.1% and 2.7%, respectively.

UCDNet: Multi-UAV Collaborative 3D Object Detection Network by Reliable Feature Mapping

Jun 07, 2024

Abstract: Multi-UAV collaborative 3D object detection can perceive and comprehend complex environments by integrating complementary information, with applications including traffic monitoring, delivery services, and agricultural management. However, the extremely broad observations in aerial remote sensing and the significant perspective differences across multiple UAVs make it challenging to achieve precise and consistent feature mapping from 2D images to 3D space in the multi-UAV collaborative 3D object detection paradigm. To address this problem, we propose an unparalleled camera-based multi-UAV collaborative 3D object detection paradigm called UCDNet. Specifically, the depth information from the UAVs to the ground is explicitly used as a strong prior that provides a reference for more accurate and generalizable feature mapping. Additionally, we design a homologous points geometric consistency loss as auxiliary self-supervision, which directly influences the feature mapping module and thereby strengthens the global consistency of multi-view perception. Experiments on the AeroCollab3D and CoPerception-UAVs datasets show that our method improves mAP by 4.7% and 10%, respectively, over the baseline, which demonstrates the superiority of UCDNet.
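As an illustration of what a geometric consistency term over homologous points might look like, the sketch below averages the Euclidean distance between matched points after each view has mapped them into a shared 3D frame. The abstract does not give the loss formula, so the function name `consistency_loss`, the use of mean Euclidean distance, and the assumption that matches are already known are all hypothetical choices, not UCDNet's definition.

```python
import math


def consistency_loss(points_a, points_b):
    """Mean Euclidean distance between matched 3D points from two views.

    Hypothetical sketch: points_a[i] and points_b[i] are assumed to be the
    same physical point, mapped into a shared world frame by two different
    UAV views. Perfectly consistent mappings give a loss of 0.
    """
    if len(points_a) != len(points_b):
        raise ValueError("matched point lists must have the same length")
    if not points_a:
        return 0.0
    total = sum(math.dist(p, q) for p, q in zip(points_a, points_b))
    return total / len(points_a)


# Two views agree on the first point and disagree by 5 m on the second.
view_a = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
view_b = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
loss = consistency_loss(view_a, view_b)  # 2.5
```

A term of this shape needs no labels beyond the point correspondences, which is consistent with the abstract's description of it as self-supervision.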

Drones Help Drones: A Collaborative Framework for Multi-Drone Object Trajectory Prediction and Beyond

May 23, 2024

Abstract: Collaborative trajectory prediction can comprehensively forecast the future motion of objects through multi-view complementary information. However, it encounters two main challenges in multi-drone collaboration settings. First, the expansive aerial observations make it difficult to generate precise Bird's Eye View (BEV) representations. Second, excessive interactions cannot meet real-time prediction requirements within the constrained drone-based communication bandwidth. To address these problems, we propose a novel framework named "Drones Help Drones" (DHD). Firstly, we incorporate the ground priors provided by the drone's inclined observation to estimate the distance between objects and drones, leading to more precise BEV generation. Secondly, we design a selective mechanism based on the local feature discrepancy to prioritize the critical information contributing to prediction tasks during inter-drone interactions. Additionally, we create the first dataset for multi-drone collaborative prediction, named "Air-Co-Pred", and conduct quantitative and qualitative experiments to validate the effectiveness of our DHD framework. The results demonstrate that, compared to state-of-the-art approaches, DHD reduces position deviation in BEV representations by over 20% and requires only a quarter of the transmission ratio for interactions while achieving comparable prediction performance. Moreover, DHD also shows promising generalization to collaborative 3D object detection on CoPerception-UAVs.
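The selective mechanism can be pictured with a small sketch that ranks BEV cells by a discrepancy score and transmits only the top fraction. The abstract does not define "local feature discrepancy", so the scoring rule used here (absolute difference between a cell and the mean of its 4-neighbours), the scalar-valued cells, and the name `select_cells` are assumptions for illustration only. The default ratio of 0.25 mirrors the "quarter of the transmission ratio" reported above.

```python
def select_cells(feature_map, ratio=0.25):
    """Pick the grid cells with the largest local discrepancy.

    Hypothetical sketch: feature_map is a 2D list of scalar features.
    A cell's discrepancy is the absolute difference between its value and
    the mean of its 4-connected neighbours. Returns the sorted (row, col)
    indices of the cells that fit in the transmission budget.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    scores = []
    for r in range(rows):
        for c in range(cols):
            neighbours = [
                feature_map[nr][nc]
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < rows and 0 <= nc < cols
            ]
            if neighbours:
                mean = sum(neighbours) / len(neighbours)
                discrepancy = abs(feature_map[r][c] - mean)
            else:
                discrepancy = 0.0  # a 1x1 map has no neighbours
            scores.append((discrepancy, r, c))
    # Keep at least one cell so that something is always transmitted.
    budget = max(1, int(rows * cols * ratio))
    scores.sort(key=lambda s: s[0], reverse=True)
    return sorted((r, c) for _, r, c in scores[:budget])


# A 4x4 map with one strong response: only 4 of 16 cells are sent.
bev = [[0.0] * 4 for _ in range(4)]
bev[1][1] = 10.0
selected = select_cells(bev)
```

Sending indices plus the selected features instead of the full map is what keeps the bandwidth proportional to `ratio` in this sketch.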
