Pedro Piacenza

SpikeATac: A Multimodal Tactile Finger with Taxelized Dynamic Sensing for Dexterous Manipulation

Oct 30, 2025

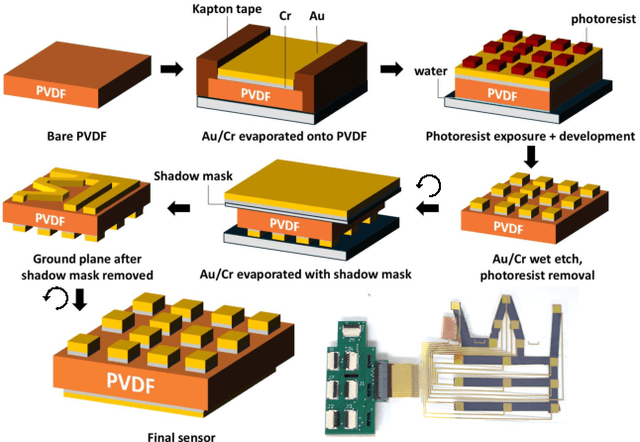

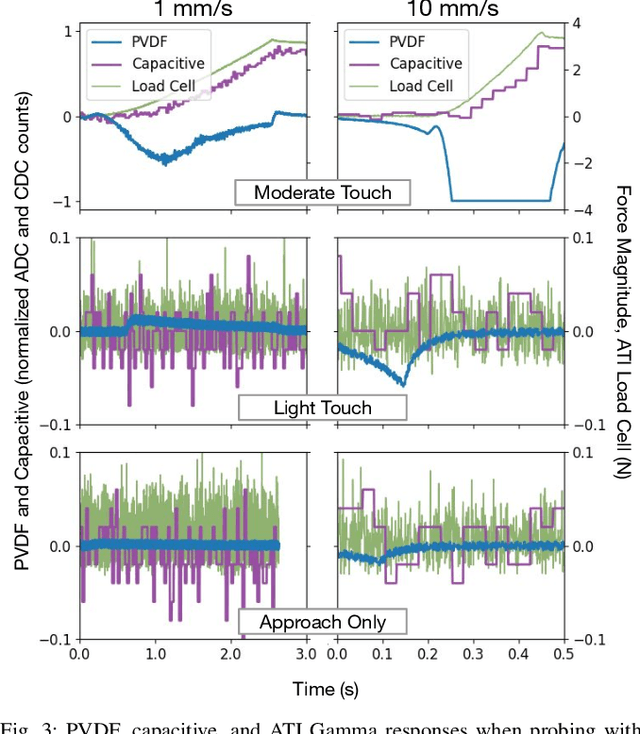

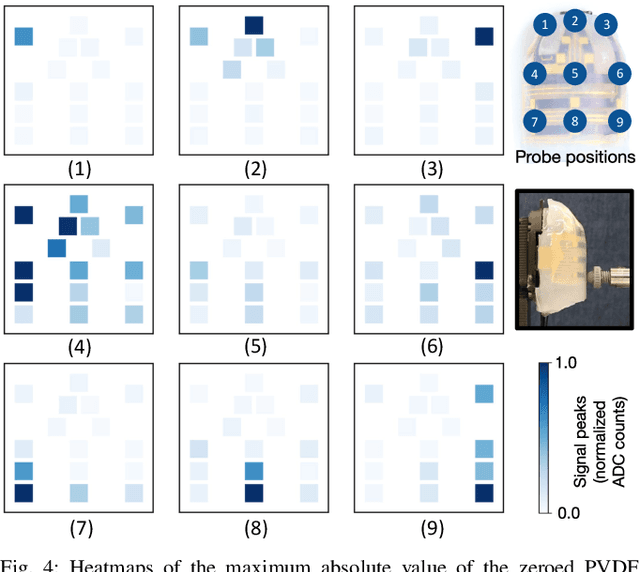

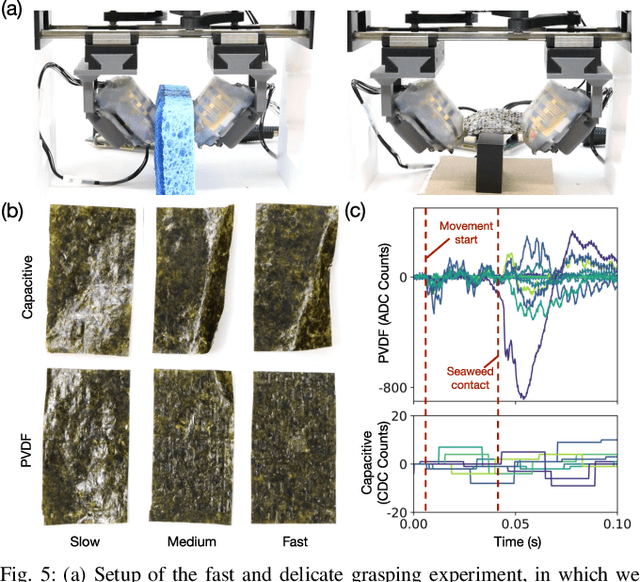

Abstract: In this work, we introduce SpikeATac, a multimodal tactile finger that combines a taxelized, highly sensitive dynamic response (PVDF) with a static transduction method (capacitive) for multimodal touch sensing. Named for its "spiky" response, SpikeATac's 16-taxel PVDF film, sampled at 4 kHz, provides fast, sensitive dynamic signals at the very onset and breaking of contact. We characterize the sensitivity of the different modalities, and show that SpikeATac provides the ability to stop quickly and delicately when grasping fragile, deformable objects. Beyond parallel grasping, we show that SpikeATac can be used in a learning-based framework to achieve new capabilities on a dexterous multifingered robot hand. We use a learning recipe that combines reinforcement learning from human feedback with tactile-based rewards to fine-tune the behavior of a policy to modulate force. Our hardware platform and learning pipeline together enable a difficult dexterous and contact-rich task that has not previously been achieved: in-hand manipulation of fragile objects. Videos are available at [roamlab.github.io/spikeatac](https://roamlab.github.io/spikeatac/).

MiniBEE: A New Form Factor for Compact Bimanual Dexterity

Oct 02, 2025

Abstract: Bimanual robot manipulators can achieve impressive dexterity, but typically rely on two full six- or seven-degree-of-freedom arms so that paired grippers can coordinate effectively. This traditional framework increases system complexity while only exploiting a fraction of the overall workspace for dexterous interaction. We introduce the MiniBEE (Miniature Bimanual End-effector), a compact system in which two reduced-mobility arms (3+ DOF each) are coupled into a kinematic chain that preserves full relative positioning between grippers. To guide our design, we formulate a kinematic dexterity metric that enlarges the dexterous workspace while keeping the mechanism lightweight and wearable. The resulting system supports two complementary modes: (i) wearable kinesthetic data collection with self-tracked gripper poses, and (ii) deployment on a standard robot arm, extending dexterity across its entire workspace. We present kinematic analysis and design optimization methods for maximizing dexterous range, and demonstrate an end-to-end pipeline in which wearable demonstrations train imitation learning policies that perform robust, real-world bimanual manipulation.

A Compact, Low-cost Force and Torque Sensor for Robot Fingers with LED-based Displacement Sensing

Oct 04, 2024

Abstract: Force/torque sensing is an important modality for robotic manipulation, but commodity solutions, typically developed with other applications in mind, often do not fit the needs of robot hands. This paper introduces a novel method for six-axis force/torque sensing, using LEDs to sense the displacement between two plates connected by a transparent elastomer. Our method allows for finger-size packaging with no amplification electronics, low-cost manufacturing, and easy integration into a complete hand. On test forces between 0 and 2 N, our prototype sensor exhibits a mean error between 0.05 and 0.07 N across the three force directions, suggesting future applicability to fine manipulation tasks.

Pouring by Feel: An Analysis of Tactile and Proprioceptive Sensing for Accurate Pouring

Oct 27, 2023

Abstract: As service robots begin to be deployed to assist humans, it is important for them to be able to perform a skill as ubiquitous as pouring. Specifically, we focus on the task of pouring an exact amount of water without any environmental instrumentation, that is, using only the robot's own sensors to perform this task robustly and in a general way. In our approach, we use a simple PID controller that uses the measured change in weight of the held container to supervise the pour. Unlike previous methods, which use specialized force-torque sensors at the robot wrist, we use our robot's joint torque sensors and investigate the added benefit of tactile sensors at the fingertips. We train three estimators from data that regress the weight poured out of the source container, and show that we can accurately pour within 10 ml of the target on average while being robust enough to pour at novel locations and with different grasps on the source container.
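The weight-supervised pour described in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the gains, the toy plant in which the controller output is treated directly as a flow rate in g/s, and the names `PID` and `simulate_pour` are hypothetical and not taken from the paper. The paper estimates poured weight from joint torque and tactile sensing, whereas this sketch reads the simulated weight directly. The integral gain is set to zero here because, in this toy plant, an overshoot cannot be undone once water has left the container.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete PID controller (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the error derivative.
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_pour(target_g: float, dt: float = 0.01, max_time: float = 60.0) -> float:
    """Toy pour: controller output is clamped and used as a flow rate in g/s."""
    pid = PID(kp=2.0, ki=0.0, kd=0.1)  # hypothetical gains, integral disabled
    max_flow = 50.0                    # hypothetical flow limit of the toy plant
    poured = 0.0
    t = 0.0
    # Stop once the remaining amount is within a 0.5 g tolerance band.
    while t < max_time and target_g - poured > 0.5:
        command = pid.step(target_g - poured, dt)
        flow = min(max(command, 0.0), max_flow)  # water cannot flow back
        poured += flow * dt
        t += dt
    return poured
```

Calling `simulate_pour(100.0)` runs the toy loop until the poured amount is within the 0.5 g tolerance of the target; on a real robot the error signal would come from the learned weight estimators rather than from the simulated state.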

VFAS-Grasp: Closed Loop Grasping with Visual Feedback and Adaptive Sampling

Oct 27, 2023

Abstract: We consider the problem of closed-loop robotic grasping and present a novel planner which uses Visual Feedback and an uncertainty-aware Adaptive Sampling strategy (VFAS) to close the loop. At each iteration, our method VFAS-Grasp builds a set of candidate grasps by generating random perturbations of a seed grasp. The candidates are then scored using a novel metric which combines a learned grasp-quality estimator, the uncertainty in the estimate, and the distance from the seed proposal to promote temporal consistency. Additionally, we present two mechanisms to improve the efficiency of our sampling strategy: we dynamically scale the sampling region size and the number of samples in it based on past grasp scores, and we leverage a motion vector field estimator to shift the center of our sampling region. We demonstrate that our algorithm can run in real time (20 Hz) and is capable of improving grasp performance for static scenes by refining the initial grasp proposal. We also show that it can enable grasping of slow-moving objects, such as those encountered during human-to-robot handover.
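As a rough illustration of how a candidate score might combine the three ingredients named in this abstract (estimated quality, uncertainty, and distance from the seed), here is a minimal sketch. The linear form, the weights `w_unc` and `w_dist`, and the names `Candidate`, `score`, and `best_candidate` are assumptions for illustration only; the abstract does not specify the actual metric.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Candidate:
    position: Tuple[float, float, float]  # grasp position only, for simplicity
    quality: float      # hypothetical estimator output, higher is better
    uncertainty: float  # hypothetical uncertainty of that estimate


def score(c: Candidate, seed_position: Sequence[float],
          w_unc: float = 0.5, w_dist: float = 2.0) -> float:
    """Assumed linear score: reward quality, penalize uncertainty and drift."""
    drift = math.dist(c.position, seed_position)
    return c.quality - w_unc * c.uncertainty - w_dist * drift


def best_candidate(candidates: Sequence[Candidate],
                   seed_position: Sequence[float]) -> Candidate:
    # Pick the candidate with the highest combined score.
    return max(candidates, key=lambda c: score(c, seed_position))
```

With this form, a candidate far from the seed must be substantially better in estimated quality to win, which is one simple way to encourage temporal consistency between iterations.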

RAMP: Hierarchical Reactive Motion Planning for Manipulation Tasks Using Implicit Signed Distance Functions

May 17, 2023

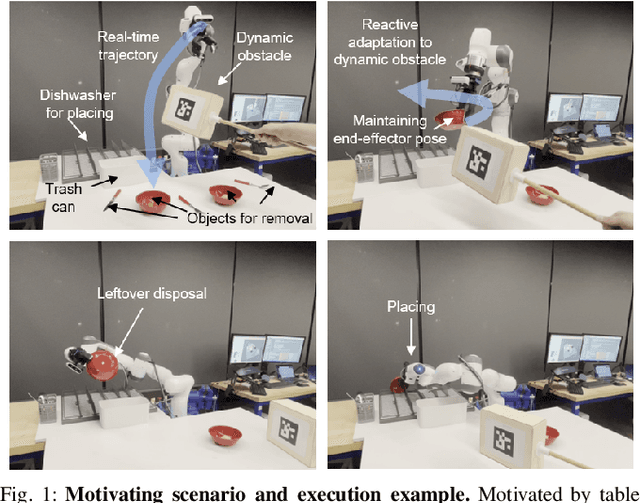

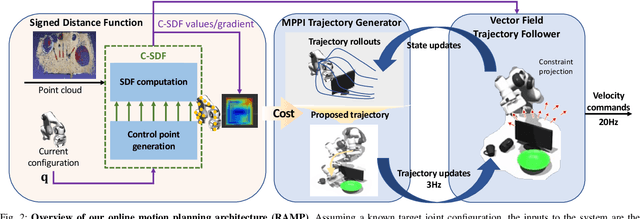

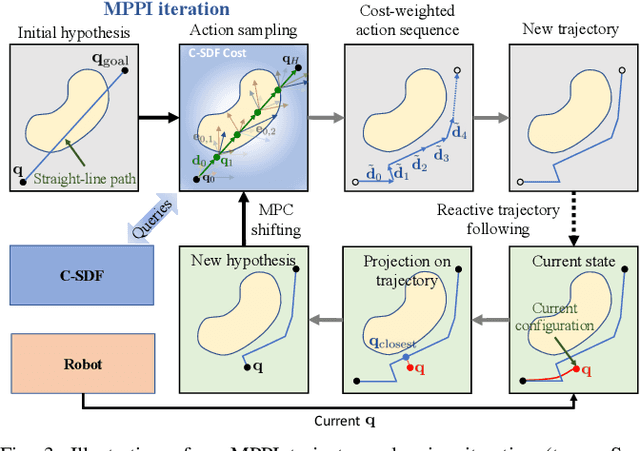

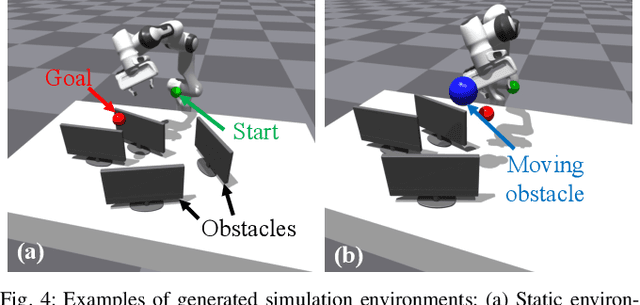

Abstract: We introduce Reactive Action and Motion Planner (RAMP), which combines the strengths of search-based and reactive approaches for motion planning. In essence, RAMP is a hierarchical approach where a novel variant of a Model Predictive Path Integral (MPPI) controller is used to generate trajectories which are then followed asynchronously by a local vector field controller. We demonstrate, in the context of a table clearing application, that RAMP can rapidly find paths in the robot's configuration space, satisfy task and robot-specific constraints, and provide safety by reacting to static or dynamically moving obstacles. RAMP achieves superior performance through a number of key innovations: we use Signed Distance Function (SDF) representations directly from the robot configuration space, both for collision checking and reactive control. The use of SDFs allows for a smoother definition of collision cost when planning for a trajectory, and is critical in ensuring safety while following trajectories. In addition, we introduce a novel variant of MPPI which, combined with the safety guarantees of the vector field trajectory follower, performs incremental real-time global trajectory planning. Simulation results establish that our method can generate paths that are comparable to traditional and state-of-the-art approaches in terms of total trajectory length while being up to 30 times faster. Real-world experiments demonstrate the safety and effectiveness of our approach in challenging table clearing scenarios.
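For readers unfamiliar with MPPI, the sketch below shows the standard exponential cost weighting with an SDF-based collision penalty. It is not the novel variant described in the abstract: it uses a single-step horizon, a 2D point robot, and a circular obstacle in the workspace rather than an SDF over the robot configuration space. All names and parameters (`circle_sdf`, `mppi_weights`, `mppi_step`, `sigma`, `temperature`, `margin`, the penalty factor 10.0) are assumptions for illustration.

```python
import math
import random


def circle_sdf(p, center, radius):
    """Signed distance to a circle: negative inside, positive outside."""
    return math.dist(p, center) - radius


def mppi_weights(costs, temperature):
    """Standard MPPI weights: softmax of negative cost, shifted for stability."""
    c_min = min(costs)
    unnormalized = [math.exp(-(c - c_min) / temperature) for c in costs]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def mppi_step(position, goal, obstacle_center, obstacle_radius,
              num_samples=256, sigma=0.2, temperature=0.05,
              margin=0.1, rng=None):
    """One-step MPPI update returning a displacement for a 2D point robot."""
    rng = rng or random.Random(0)
    noises, costs = [], []
    for _ in range(num_samples):
        eps = (rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        nxt = (position[0] + eps[0], position[1] + eps[1])
        goal_cost = math.dist(nxt, goal)
        # Smooth penalty that grows as the sample enters the safety margin.
        sdf = circle_sdf(nxt, obstacle_center, obstacle_radius)
        collision_cost = 10.0 * max(0.0, margin - sdf)
        noises.append(eps)
        costs.append(goal_cost + collision_cost)
    weights = mppi_weights(costs, temperature)
    # The control is the cost-weighted average of the sampled perturbations.
    dx = sum(w * e[0] for w, e in zip(weights, noises))
    dy = sum(w * e[1] for w, e in zip(weights, noises))
    return (dx, dy)
```

Because the SDF varies continuously with distance to the obstacle, the collision term gives a graded cost near obstacles instead of a binary in-collision flag, which is the property the abstract highlights.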

A Sensorized Multicurved Robot Finger with Data-driven Touch Sensing via Overlapping Light Signals

Apr 01, 2020

Abstract: Despite significant advances in touch and force transduction, tactile sensing is still far from ubiquitous in robotic manipulation. Existing methods for building touch sensors have proven difficult to integrate into robot fingers due to multiple challenges, including difficulty in covering multicurved surfaces, high wire count, or packaging constraints preventing their use in dexterous hands. In this paper, we present a multicurved robotic finger with accurate touch localization and normal force detection over complex, three-dimensional surfaces. The key to our approach is the novel use of overlapping signals from light emitters and receivers embedded in a transparent waveguide layer that covers the functional areas of the finger. By measuring light transport between every emitter and receiver, we show that we can obtain a very rich signal set that changes in response to deformation of the finger due to touch. We then show that purely data-driven deep learning methods are able to extract useful information from such data, such as contact location and applied normal force, without the need for analytical models. The final result is a fully integrated, sensorized robot finger, with a low wire count and using easily accessible manufacturing methods, designed for easy integration into dexterous manipulators.

Data-driven Super-resolution on a Tactile Dome

Feb 26, 2018

Abstract: While tactile sensor technology has made great strides over the past decades, applications in robotic manipulation are limited by aspects such as blind spots, difficult integration into hands, and low spatial resolution. We present a method for localizing contact with high accuracy over curved, three-dimensional surfaces, with a low wire count and reduced integration complexity. To achieve this, we build a volume of soft material embedded with individual off-the-shelf pressure sensors. Using data-driven techniques, we map the raw signals from these pressure sensors to known surface locations and indentation depths. Additionally, we show that a finite element model can be used to improve the placement of the pressure sensors inside the volume and to explore the design space in simulation. We validate our approach on physically implemented tactile domes which achieve high contact localization accuracy ($1.1\,\mathrm{mm}$ in the best case) over a large, curved sensing area ($1{,}300\,\mathrm{mm}^2$ hemisphere). We believe this approach can be used to deploy tactile sensing capabilities over three-dimensional surfaces such as a robotic finger or palm.

* 8 pages, 9 figures

Data-driven Tactile Sensing using Spatially Overlapping Signals

Feb 22, 2018

Abstract: Traditional methods for achieving high localization accuracy on tactile sensors usually involve a matrix of miniaturized individual sensors distributed on the area of interest. This approach usually comes at a price of increased complexity in fabrication and circuitry, and can be hard to adapt to non-planar geometries. We propose a method where sensing terminals are embedded in a volume of soft material. Mechanical strain in this material results in a measurable signal between any two given terminals. By having multiple terminals and pairing them against each other in all possible combinations, we obtain a rich signal set using few wires. We mine this data to learn the mapping between the signals we extract and the contact parameters of interest. Our approach is general enough that it can be applied with different transduction methods, and achieves high accuracy in identifying indentation location and depth. Moreover, this method lends itself to simple fabrication techniques and makes no assumption about the underlying geometry, potentially simplifying future integration in robot hands.
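The "few wires, rich signal set" argument in this abstract comes down to counting pairs. The sketch below enumerates all terminal pairs; it assumes each unordered pair yields one signal (for directional transduction such as emitter-to-receiver light transport, ordered pairs may be the relevant count), and the function name `terminal_pairs` is hypothetical.

```python
from itertools import combinations
from typing import List, Tuple


def terminal_pairs(num_terminals: int) -> List[Tuple[int, int]]:
    """All unordered pairs of terminal indices: n * (n - 1) / 2 signals."""
    return list(combinations(range(num_terminals), 2))


pairs = terminal_pairs(8)
print(len(pairs))  # 8 terminals give 28 pairwise signals
```

The number of signals grows quadratically with the number of terminals, while the wiring grows only linearly, which is why the pairing scheme keeps the wire count low.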

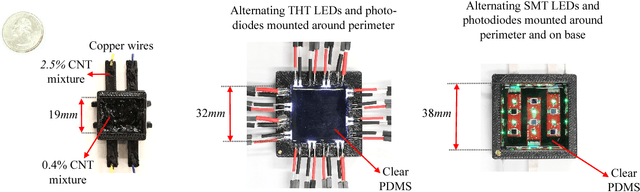

Accurate Contact Localization and Indentation Depth Prediction With an Optics-based Tactile Sensor

Feb 19, 2018

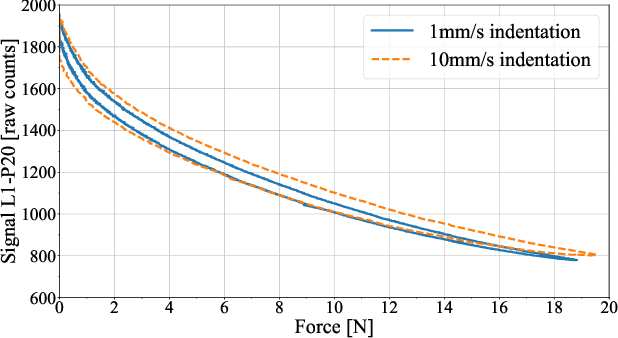

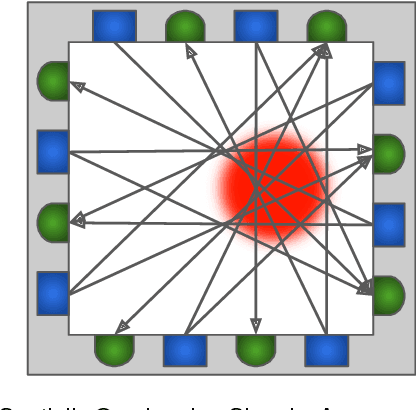

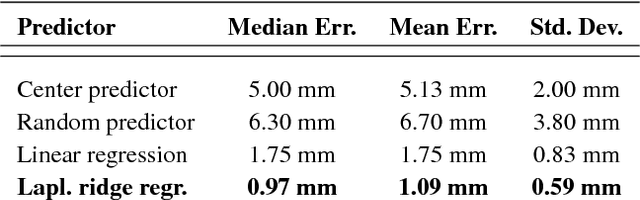

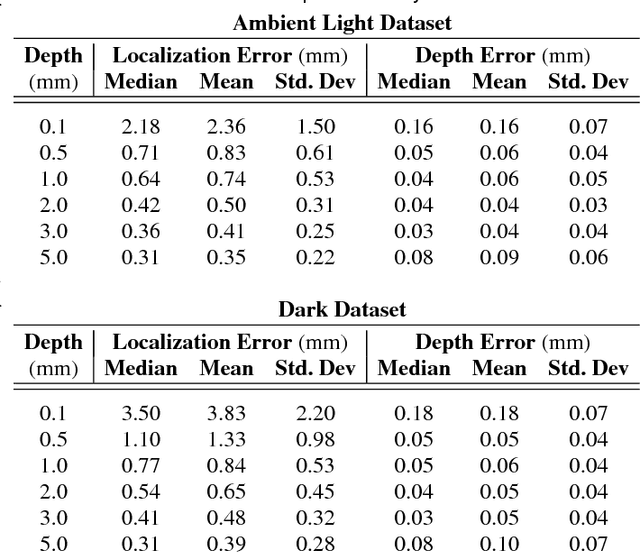

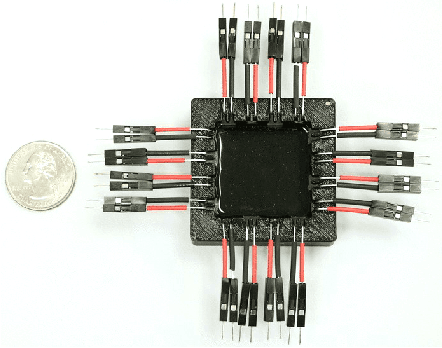

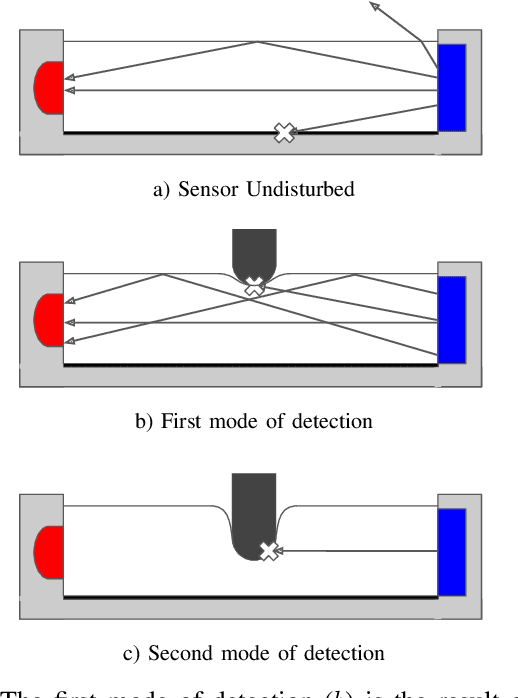

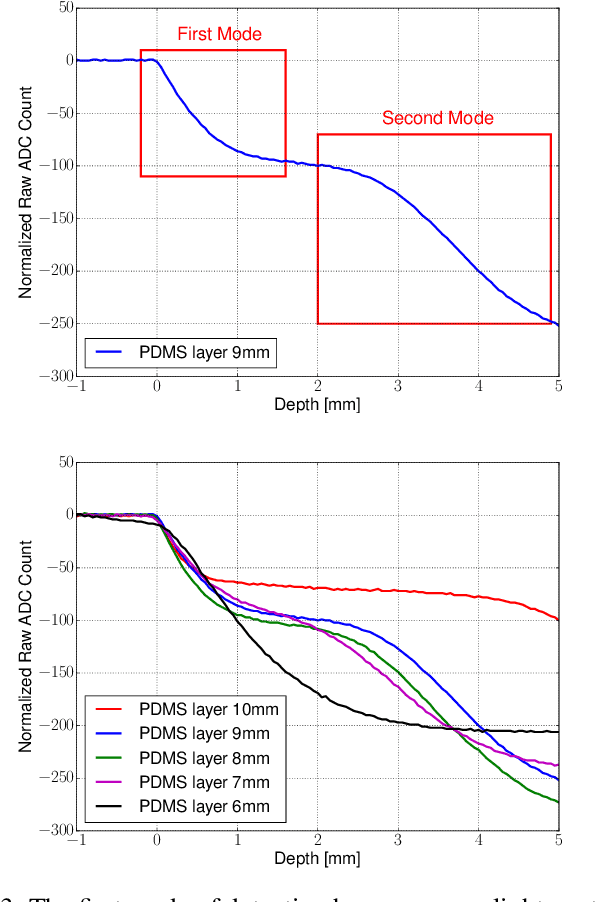

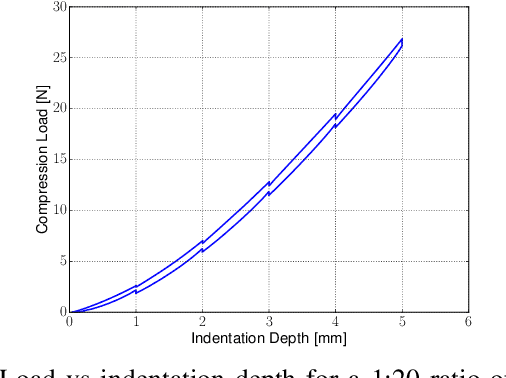

Abstract: Traditional methods to achieve high localization accuracy with tactile sensors usually use a matrix of miniaturized individual sensors distributed on the area of interest. This approach usually comes at a price of increased complexity in fabrication and circuitry, and can be hard to adapt to non-planar geometries. We propose to use low-cost optical components mounted on the edges of the sensing area to measure how light traveling through an elastomer is affected by touch. Multiple light emitters and receivers provide us with a rich signal set that contains the necessary information to pinpoint both the location and depth of an indentation with high accuracy. We demonstrate sub-millimeter accuracy on location and depth on a 20 mm by 20 mm active sensing area. Our sensor provides high depth sensitivity as a result of two different modalities in how light is guided through our elastomer. This method results in a low-cost, easy-to-manufacture sensor. We believe this approach can be adapted to cover non-planar surfaces, simplifying future integration in robot skin applications.
