Keith Behrman

A Sensorized Multicurved Robot Finger with Data-driven Touch Sensing via Overlapping Light Signals

Apr 01, 2020

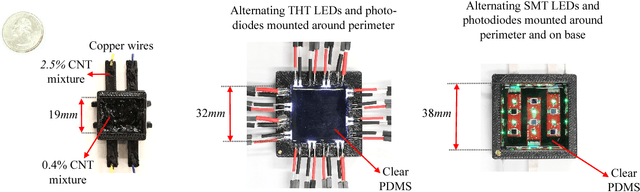

Abstract: Despite significant advances in touch and force transduction, tactile sensing is still far from ubiquitous in robotic manipulation. Existing methods for building touch sensors have proven difficult to integrate into robot fingers due to multiple challenges, including difficulty in covering multicurved surfaces, high wire count, or packaging constraints preventing their use in dexterous hands. In this paper, we present a multicurved robotic finger with accurate touch localization and normal force detection over complex, three-dimensional surfaces. The key to our approach is the novel use of overlapping signals from light emitters and receivers embedded in a transparent waveguide layer that covers the functional areas of the finger. By measuring light transport between every emitter and receiver, we show that we can obtain a very rich signal set that changes in response to deformation of the finger due to touch. We then show that purely data-driven deep learning methods are able to extract useful information from such data, such as contact location and applied normal force, without the need for analytical models. The final result is a fully integrated, sensorized robot finger, with a low wire count and using easily accessible manufacturing methods, designed for easy integration into dexterous manipulators.
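
To make the emitter-receiver idea concrete, here is a minimal sketch, not the authors' pipeline. It assumes one light reading per emitter-receiver pair, subtracts an untouched baseline, and looks up the closest labeled training sample. The nearest-neighbor lookup is a deliberately simple stand-in for the deep learning models the abstract mentions, and the function names, the 2x2 grid, and the `"tip"`/`"side"` labels are all hypothetical.

```python
import math

def feature_vector(readings, baseline):
    """Flatten an emitters x receivers grid of light readings into a single
    baseline-subtracted vector (one entry per emitter-receiver pair)."""
    return [
        r - b
        for row, base_row in zip(readings, baseline)
        for r, b in zip(row, base_row)
    ]

def nearest_contact(sample, training_set):
    """Return the label of the training vector closest to `sample`.

    `training_set` is a list of (feature_vector, label) tuples. This
    nearest-neighbor rule is an illustrative substitute for a learned model.
    """
    return min(training_set, key=lambda item: math.dist(sample, item[0]))[1]

# Hypothetical 2-emitter x 2-receiver sensor, untouched baseline of 1.0.
baseline = [[1.0, 1.0], [1.0, 1.0]]

# Hypothetical labeled examples collected by indenting known locations.
training_set = [
    ([0.0, -0.5, 0.0, 0.0], "tip"),
    ([0.0, 0.0, -0.5, 0.0], "side"),
]

sample = feature_vector([[1.0, 0.5], [0.75, 1.0]], baseline)
print(nearest_contact(sample, training_set))
```

With E emitters and R receivers the vector has E x R entries, which is how a small number of components can yield a comparatively rich signal set.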

Data-driven Tactile Sensing using Spatially Overlapping Signals

Feb 22, 2018

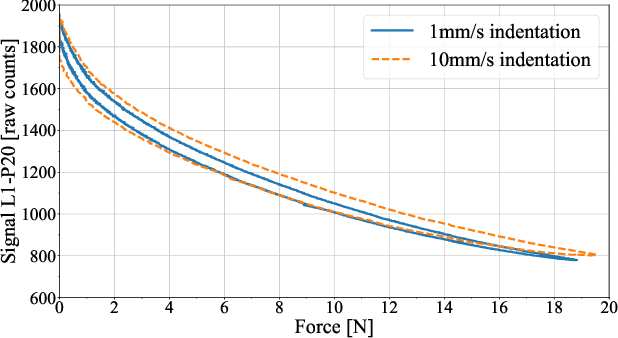

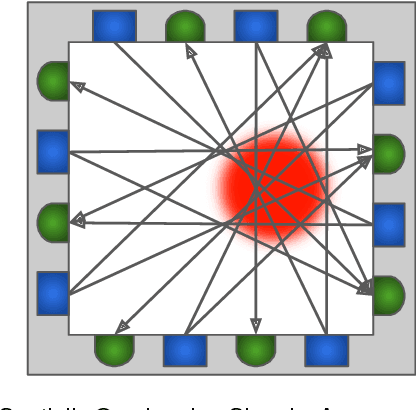

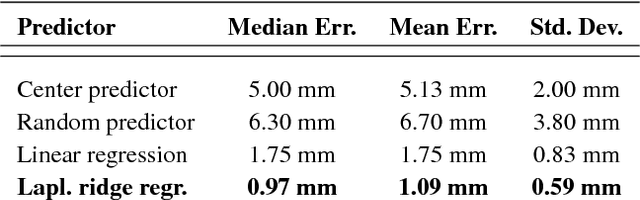

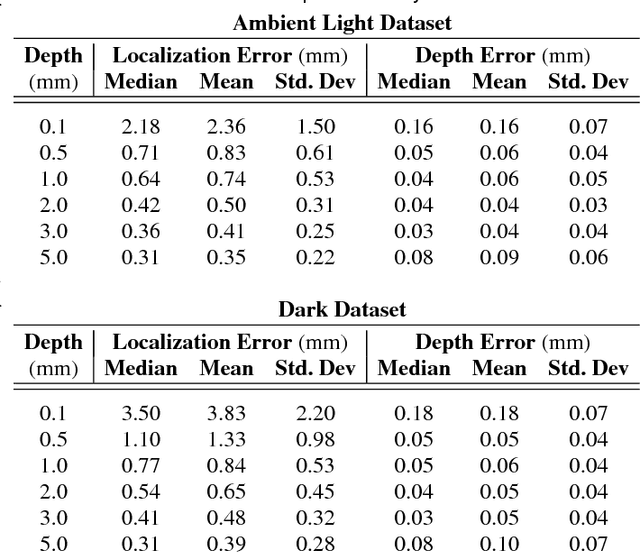

Abstract: Traditional methods for achieving high localization accuracy on tactile sensors usually involve a matrix of miniaturized individual sensors distributed on the area of interest. This approach usually comes at the price of increased complexity in fabrication and circuitry, and can be hard to adapt to non-planar geometries. We propose a method where sensing terminals are embedded in a volume of soft material. Mechanical strain in this material results in a measurable signal between any two given terminals. By having multiple terminals and pairing them against each other in all possible combinations, we obtain a rich signal set using few wires. We mine this data to learn the mapping between the signals we extract and the contact parameters of interest. Our approach is general enough that it can be applied with different transduction methods, and achieves high accuracy in identifying indentation location and depth. Moreover, this method lends itself to simple fabrication techniques and makes no assumption about the underlying geometry, potentially simplifying future integration in robot hands.
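
The "all possible combinations" pairing can be sketched as follows. This is a minimal illustration, assuming each unordered pair of terminals yields one signal. The abstract does not say whether measurements are directional, so that is an assumption, and the function names are hypothetical.

```python
from itertools import combinations

def terminal_pairs(num_terminals):
    """Return every unordered pair of terminal indices (assumes the signal
    between terminals i and j is the same in both directions)."""
    return list(combinations(range(num_terminals), 2))

def signal_count(num_terminals):
    """Number of unordered pairs: n * (n - 1) / 2."""
    return num_terminals * (num_terminals - 1) // 2

pairs = terminal_pairs(6)
print(len(pairs), signal_count(6))
```

Because the number of pairs grows quadratically while the wire count grows only linearly with the number of terminals, a handful of terminals can produce many distinct signals.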
