Patrizio Pelliccione

A Research Roadmap for Augmenting Software Engineering Processes and Software Products with Generative AI

Oct 30, 2025

Abstract:Generative AI (GenAI) is rapidly transforming software engineering (SE) practices, influencing how SE processes are executed, as well as how software systems are developed, operated, and evolved. This paper applies design science research to build a roadmap for GenAI-augmented SE. The process consists of three cycles that incrementally integrate multiple sources of evidence, including collaborative discussions from the FSE 2025 "Software Engineering 2030" workshop, rapid literature reviews, and external feedback sessions involving peers. McLuhan's tetrads were used as a conceptual instrument to systematically capture the transforming effects of GenAI on SE processes and software products. The resulting roadmap identifies four fundamental forms of GenAI augmentation in SE and systematically characterizes their related research challenges and opportunities. These insights are then consolidated into a set of future research directions. By grounding the roadmap in a rigorous multi-cycle process and cross-validating it among independent author teams and peers, the study provides a transparent and reproducible foundation for analyzing how GenAI affects SE processes, methods and tools, and for framing future research within this rapidly evolving area. Based on these findings, the article finally makes ten predictions for SE in the year 2030.

Runtime Verification and Field Testing for ROS-Based Robotic Systems

Apr 17, 2024

Abstract:Robotic systems are becoming pervasive and are being adopted in an increasing number of domains, such as manufacturing, healthcare, and space exploration. To this end, engineering software has emerged as a crucial discipline for building maintainable and reusable robotic systems. Robotics software engineering research has received increasing attention, fostering autonomy as a fundamental goal. However, robotics developers still struggle to achieve this goal, given that simulation cannot realistically emulate real-world phenomena. Robots also need to operate in unpredictable and uncontrollable environments, which require safe and trustworthy self-adaptation capabilities implemented in software. Typical techniques to address these challenges are runtime verification, field-based testing, and mitigation techniques that enable fail-safe solutions. However, there is no clear guidance on how to architect ROS-based systems to enable and facilitate runtime verification and field-based testing. This paper aims to fill this gap by providing guidelines that can help developers and QA teams when developing, verifying, or testing their robots in the field. These guidelines are carefully tailored to address the challenges and requirements of testing robotic systems in real-world scenarios. We conducted a literature review on studies addressing runtime verification and field-based testing for robotic systems, mined ROS-based application repositories, and validated the applicability, clarity, and usefulness of the guidelines via two questionnaires with 55 answers. We contribute 20 guidelines formulated for researchers and practitioners in robotic software engineering. Finally, we map our guidelines to open challenges in runtime verification and field-based testing for ROS-based systems, and we outline promising research directions in the field.

Social, Legal, Ethical, Empathetic, and Cultural Rules: Compilation and Reasoning (Extended Version)

Dec 15, 2023

Abstract:The rise of AI-based and autonomous systems is raising concerns and apprehension due to potential negative repercussions stemming from their behavior or decisions. These systems must be designed to comply with the human contexts in which they will operate. To this end, Townsend et al. (2022) introduce the concept of SLEEC (social, legal, ethical, empathetic, or cultural) rules that aim to facilitate the formulation, verification, and enforcement of the rules AI-based and autonomous systems should obey. They lay out a methodology to elicit these rules and to let philosophers, lawyers, domain experts, and others formulate them in natural language. To enable their effective use in AI systems, it is necessary to translate these rules systematically into a formal language that supports automated reasoning. In this study, we first conduct a linguistic analysis of the SLEEC rules pattern, which justifies the translation of SLEEC rules into classical logic. Then we investigate the computational complexity of reasoning about SLEEC rules and show how logical programming frameworks can be employed to implement SLEEC rules in practical scenarios. The result is a readily applicable strategy for implementing AI systems that conform to norms expressed as SLEEC rules.

Software Reconfiguration in Robotics

Oct 02, 2023

Abstract:Since it has often been claimed by academics that reconfiguration is essential, many approaches to reconfiguration, especially of robotic systems, have been developed. Accordingly, the literature on robotics is rich in techniques for reconfiguring robotic systems. However, when talking to researchers in the domain, there seems to be no common understanding of what exactly reconfiguration is and how it relates to other concepts such as adaptation. Beyond this academic perspective, robotics frameworks provide mechanisms for dynamically loading and unloading parts of robotics applications. While we have a fuzzy picture of the state-of-the-art in robotic reconfiguration from an academic perspective, we lack a picture of the state-of-practice from a practitioner perspective. To fill this gap, we survey the literature on reconfiguration in robotic systems by identifying and analyzing 98 relevant papers, review how four major robotics frameworks support reconfiguration, and finally investigate the realization of reconfiguration in 48 robotics applications. When comparing the state-of-the-art with the state-of-practice, we observed a significant discrepancy between them. In particular, the scientific community focuses on complex structural reconfiguration, while in practice only parameter reconfiguration is widely used. Based on our observations, we discuss possible reasons for this discrepancy and conclude with a takeaway message for academics and practitioners interested in robotics.

Correct-by-Construction Design of Contextual Robotic Missions Using Contracts

Jun 13, 2023

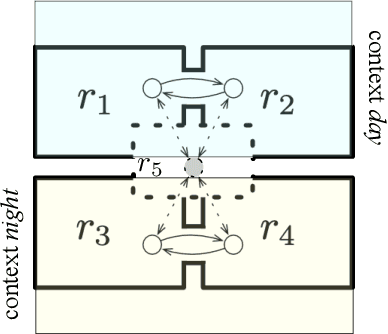

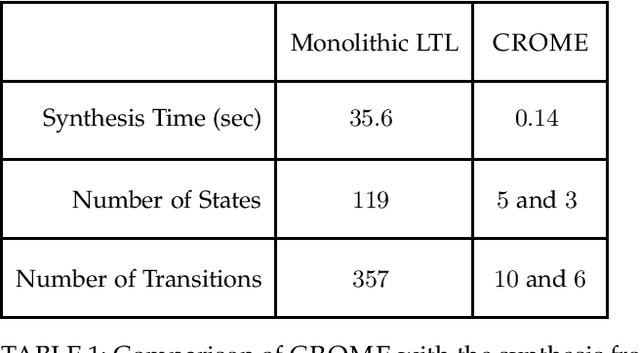

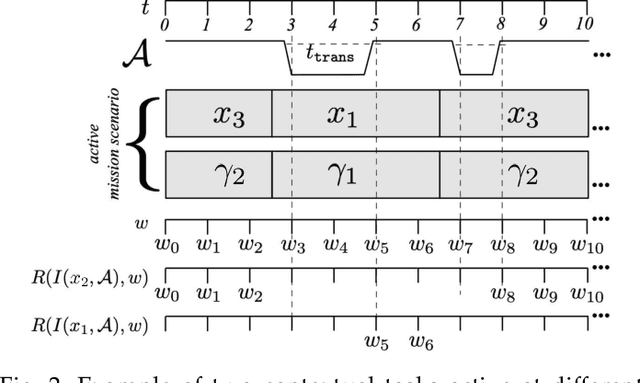

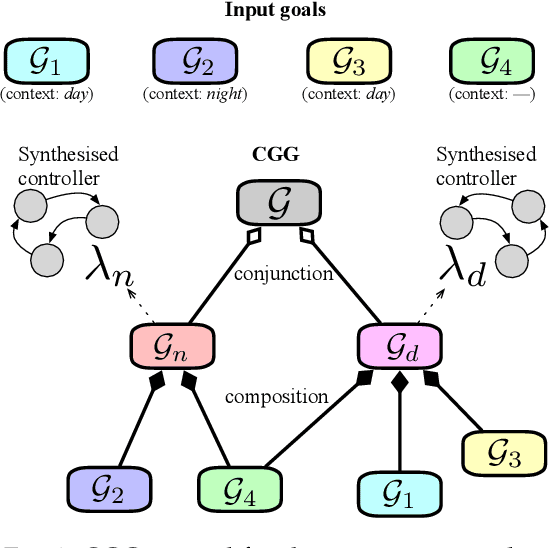

Abstract:Effectively specifying and implementing robotic missions pose a set of challenges to software engineering for robotic systems, since they require formalizing and executing a robot's high-level tasks while considering various application scenarios and conditions, also known as contexts, in real-world operational environments. Writing correct mission specifications that explicitly account for multiple contexts can be a tedious and error-prone task. Moreover, as the number of contexts grows, and with it the complexity of the specification, generating a correct-by-construction implementation, e.g., by using synthesis methods, can become intractable. A viable approach to address these issues is to decompose the mission specification into smaller sub-missions, with each sub-mission corresponding to a specific context. However, such a compositional approach would still pose challenges in ensuring the overall mission correctness. In this paper, we propose a new, compositional framework for the specification and implementation of contextual robotic missions using assume-guarantee contracts. The mission specification is captured in a hierarchical and modular way and each sub-mission is synthesized as a robot controller. We address the problem of dynamically switching between sub-mission controllers while ensuring correctness under certain conditions.

A Compositional Approach to Creating Architecture Frameworks with an Application to Distributed AI Systems

Dec 27, 2022

Abstract:Artificial intelligence (AI) in its various forms is increasingly finding its way into complex distributed systems. For instance, it is used locally, as part of a sensor system, on the edge for low-latency high-performance inference, or in the cloud, e.g. for data mining. Modern complex systems, such as connected vehicles, are often part of an Internet of Things (IoT). To manage complexity, architectures are described with architecture frameworks, which are composed of a number of architectural views connected through correspondence rules. Despite some attempts, the definition of a mathematical foundation for architecture frameworks that are suitable for the development of distributed AI systems still requires investigation and study. In this paper, we propose to extend the state of the art on architecture frameworks by providing a mathematical model for system architectures, which is scalable and supports co-evolution of different aspects, for example of an AI system. Based on Design Science Research, this study starts by identifying the challenges with architectural frameworks. Then, we derive four rules from the identified challenges and formulate them by exploiting concepts from category theory. We show how compositional thinking can provide rules for the creation and management of architectural frameworks for complex systems, for example distributed systems with AI. The aim of the paper is not to provide viewpoints or architecture models specific to AI systems, but instead to provide guidelines based on a mathematical formulation on how a consistent framework can be built up with existing, or newly created, viewpoints. To put the approach into practice and test it, the identified and formulated rules are applied to derive an architectural framework for the EU Horizon 2020 project "Very efficient deep learning in the IoT" (VEDLIoT) in the form of a case study.

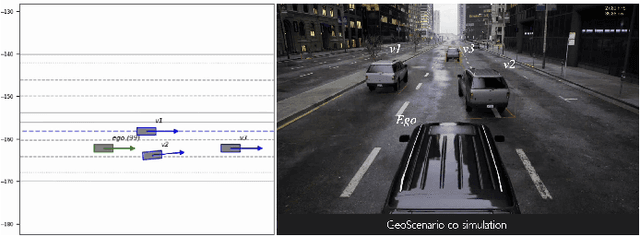

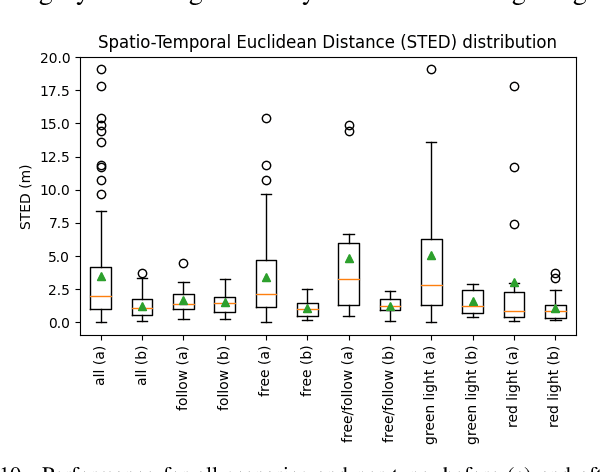

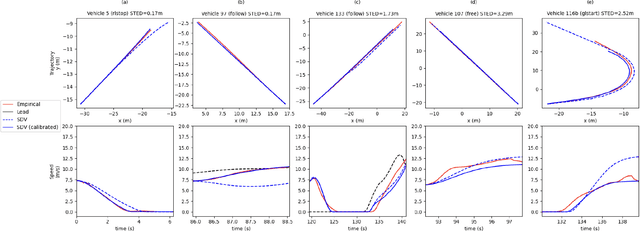

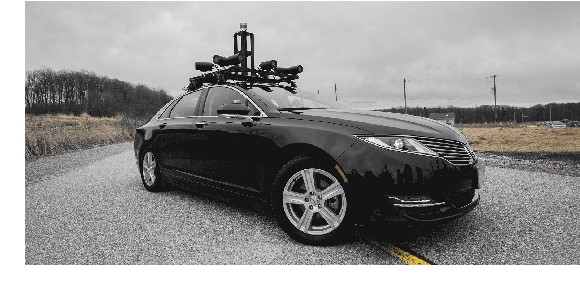

A Driver-Vehicle Model for ADS Scenario-based Testing

May 05, 2022

Abstract:Scenario-based testing for automated driving systems (ADS) must be able to simulate traffic scenarios that rely on interactions with other vehicles. Although many languages for high-level scenario modelling have been proposed, they lack the features to precisely and reliably control the required micro-simulation, while also supporting behavior reuse and test reproducibility for a wide range of interactive scenarios. To fill this gap between scenario design and execution, we propose the Simulated Driver-Vehicle Model (SDV) to represent and simulate vehicles as dynamic entities with their behavior being constrained by scenario design and goals set by testers. The model combines driver and vehicle as a single entity. It is based on human-like driving and the mechanical limitations of real vehicles for realistic simulation. The layered architecture of the model leverages behavior trees to express high-level behaviors in terms of lower-level maneuvers, affording multiple driving styles and reuse. Further, an optimization-based maneuver planner guides the simulated vehicles towards the desired behavior. Our extensive evaluation shows the model's design effectiveness using NHTSA pre-crash scenarios, its motion realism in comparison to naturalistic urban traffic, and its scalability with traffic density. Finally, we show the applicability of the SDV model to test a real ADS and to identify crash scenarios, which are impractical to represent using predefined vehicle trajectories. The SDV model instances can be injected into existing simulation environments via co-simulation.

Future Intelligent Autonomous Robots, Ethical by Design. Learning from Autonomous Cars Ethics

Jul 16, 2021

Abstract:Development of intelligent autonomous robot technology presupposes its anticipated beneficial effect on individuals and societies. In the case of such a disruptive emergent technology, not only questions of how to build, but also why to build and with what consequences are important. The field of ethics of intelligent autonomous robotic cars is a good example of research with actionable practical value, where a variety of stakeholders, including the legal system and other societal and governmental actors, as well as companies and businesses, collaborate to bring about a shared view of the ethics and societal aspects of technology. It could be used as a starting platform for the approaches to the development of intelligent autonomous robots in general, considering human-machine interfaces in different phases of the life cycle of technology: development, implementation, testing, use, and disposal. Drawing from our work on ethics of autonomous intelligent robocars, and the existing literature on ethics of robotics, our contribution consists of a set of values and ethical principles with identified challenges and proposed approaches for meeting them. This may help stakeholders in the field of intelligent autonomous robotics to connect ethical principles with their applications. Our recommendations of ethical requirements for autonomous cars can be used for other types of intelligent autonomous robots, with the caveat that social robots require more research regarding interactions with the users. We emphasize that existing ethical frameworks need to be applied in a context-sensitive way, by assessments in interdisciplinary, multi-competent teams through multi-criteria analysis. Furthermore, we argue for the need for continuous development of ethical principles, guidelines, and regulations, informed by the progress of technologies and involving relevant stakeholders.

Robotics Software Engineering: A Perspective from the Service Robotics Domain

Jul 07, 2020

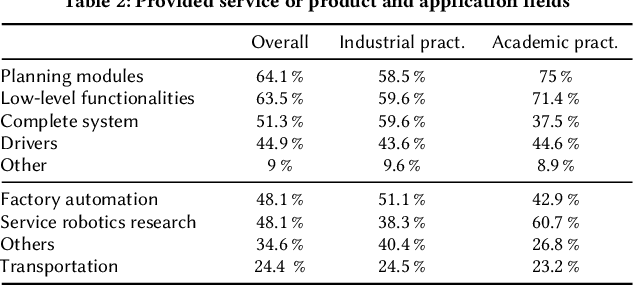

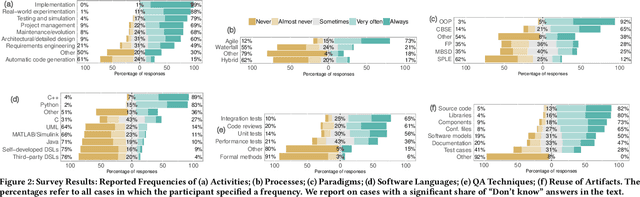

Abstract:Robots that support humans by performing useful tasks (a.k.a., service robots) are booming worldwide. In contrast to industrial robots, the development of service robots comes with severe software engineering challenges, since they require high levels of robustness and autonomy to operate in highly heterogeneous environments. As a domain with critical safety implications, service robotics faces a need for sound software development practices. In this paper, we present the first large-scale empirical study to assess the state of the art and practice of robotics software engineering. We conducted 18 semi-structured interviews with industrial practitioners working in 15 companies from 9 different countries and a survey with 156 respondents (from 26 countries) from the robotics domain. Our results provide a comprehensive picture of (i) the practices applied by robotics industrial and academic practitioners, including processes, paradigms, languages, tools, frameworks, and reuse practices, (ii) the distinguishing characteristics of robotics software engineering, and (iii) recurrent challenges usually faced, together with adopted solutions. The paper concludes by discussing observations, derived hypotheses, and proposed actions for researchers and practitioners.

CAGFuzz: Coverage-Guided Adversarial Generative Fuzzing Testing of Deep Learning Systems

Nov 14, 2019

Abstract:Deep Learning systems (DL) based on Deep Neural Networks (DNNs) are more and more used in various aspects of our life, including unmanned vehicles, speech processing, and robotics. However, due to the limited dataset and the dependence on manual labeling data, DNNs often fail to detect their erroneous behaviors, which may lead to serious problems. Several approaches have been proposed to enhance the input examples for testing DL systems. However, they have the following limitations. First, they design and generate adversarial examples from the perspective of model, which may cause low generalization ability when they are applied to other models. Second, they only use surface feature constraints to judge the difference between the adversarial example generated and the original example. The deep feature constraints, which contain high-level semantic information, such as image object category and scene semantics are completely neglected. To address these two problems, in this paper, we propose CAGFuzz, a Coverage-guided Adversarial Generative Fuzzing testing approach, which generates adversarial examples for a targeted DNN to discover its potential defects. First, we train an adversarial case generator (AEG) from the perspective of general data set. Second, we extract the depth features of the original and adversarial examples, and constrain the adversarial examples by cosine similarity to ensure that the semantic information of adversarial examples remains unchanged. Finally, we retrain effective adversarial examples to improve neuron testing coverage rate. Based on several popular data sets, we design a set of dedicated experiments to evaluate CAGFuzz. The experimental results show that CAGFuzz can improve the neuron coverage rate, detect hidden errors, and also improve the accuracy of the target DNN.
