Omid Semiari

Graph Reinforcement Learning for QoS-Aware Load Balancing in Open Radio Access Networks

Apr 28, 2025

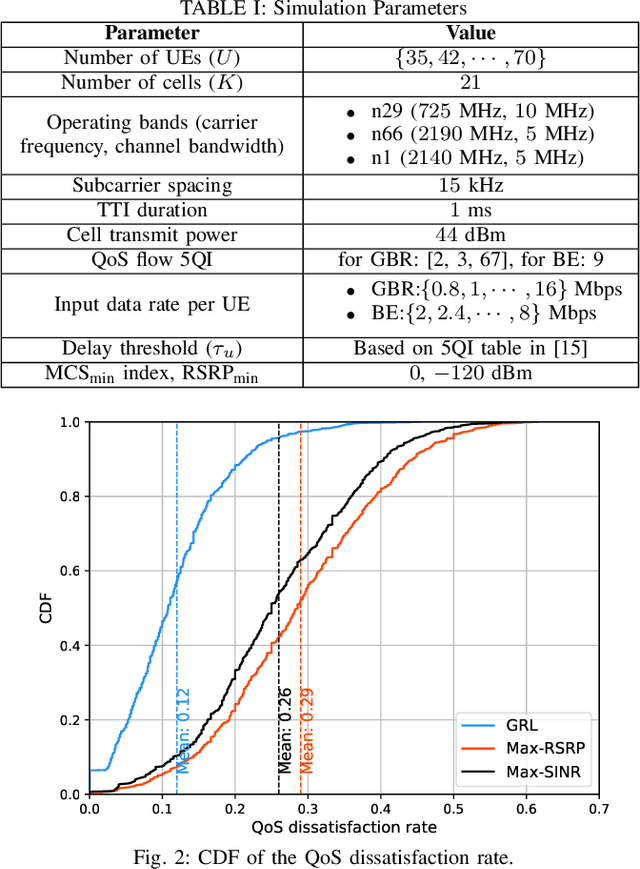

Abstract: Next-generation wireless cellular networks are expected to provide unparalleled Quality-of-Service (QoS) for emerging wireless applications, necessitating strict performance guarantees, e.g., in terms of link-level data rates. A critical challenge in meeting these QoS requirements is the prevention of cell congestion, which involves balancing the load to ensure sufficient radio resources are available for each cell to serve its designated User Equipments (UEs). In this work, a novel QoS-aware Load Balancing (LB) approach is developed to optimize the performance of Guaranteed Bit Rate (GBR) and Best Effort (BE) traffic in a multi-band Open Radio Access Network (O-RAN) under QoS and resource constraints. The proposed solution builds on Graph Reinforcement Learning (GRL), a powerful framework at the intersection of Graph Neural Networks (GNNs) and Reinforcement Learning (RL). The QoS-aware LB is modeled as a Markov Decision Process, with states represented as graphs. QoS considerations are integrated into both the state representations and the reward signal design. The LB agent is then trained using an off-policy dueling Deep Q Network (DQN) that leverages a GNN-based architecture. This design ensures the LB policy is invariant to the ordering of nodes (UE or cell), flexible in handling various network sizes, and capable of accounting for spatial node dependencies in LB decisions. Performance of the GRL-based solution is compared with two baseline methods. Results show substantial performance gains, including a $53\%$ reduction in QoS violations and a fourfold increase in the 5th percentile rate for BE traffic.
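
As a rough illustration of two ideas named in this abstract, the sketch below shows (i) a mean aggregation over node features, which does not depend on node ordering, and (ii) the standard dueling combination Q(s, a) = V(s) + A(s, a) - mean(A). The function names and toy numbers are assumptions made for illustration only; this is not the paper's GNN architecture or training procedure.

```python
from statistics import fmean

def mean_aggregate(node_features):
    """Average feature vectors over nodes; the result ignores node ordering."""
    dim = len(node_features[0])
    return [fmean(f[i] for f in node_features) for i in range(dim)]

def dueling_q_values(state_value, advantages):
    """Standard dueling head: Q(s, a) = V(s) + A(s, a) - mean over a of A(s, a)."""
    mean_adv = fmean(advantages)
    return [state_value + a - mean_adv for a in advantages]

# Toy inputs (hypothetical): three nodes with two features each.
nodes = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
pooled = mean_aggregate(nodes)
pooled_reordered = mean_aggregate(list(reversed(nodes)))

# Toy value and advantage estimates for three candidate LB actions.
q_values = dueling_q_values(2.0, [1.0, 0.0, -1.0])
print(pooled, pooled_reordered, q_values)
```

Subtracting the mean advantage keeps the value and advantage streams identifiable, so the average of the Q-values equals the state value.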

Reliability-Optimized User Admission Control for URLLC Traffic: A Neural Contextual Bandit Approach

Jan 05, 2024

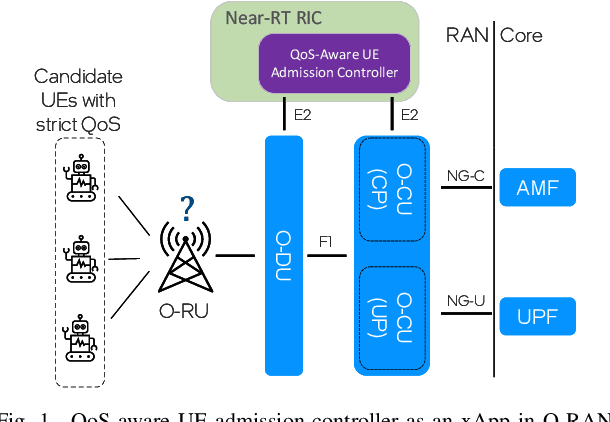

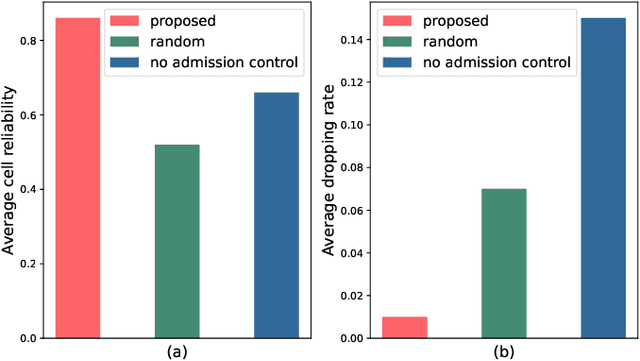

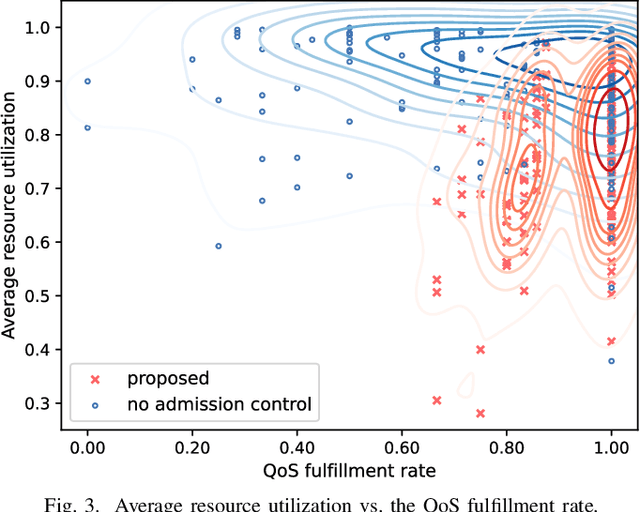

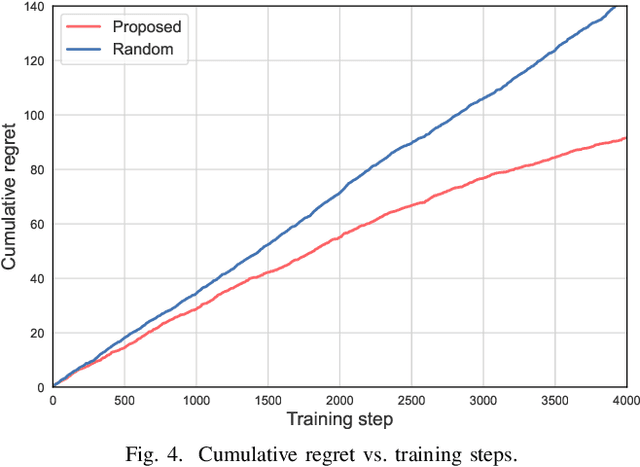

Abstract: Ultra-reliable low-latency communication (URLLC) is the cornerstone for a broad range of emerging services in next-generation wireless networks. URLLC fundamentally relies on the network's ability to proactively determine whether sufficient resources are available to support the URLLC traffic, and thus, prevent so-called cell overloads. Nonetheless, achieving accurate quality-of-service (QoS) predictions for URLLC user equipment (UEs) and preventing cell overloads are very challenging tasks. This is due to the dependency of the QoS metrics (latency and reliability) on traffic and channel statistics, users' mobility, and interdependent performance across UEs. In this paper, a new QoS-aware UE admission control approach is developed to proactively estimate QoS for URLLC UEs, prior to associating them with a cell, and accordingly, admit only a subset of UEs that do not lead to a cell overload. To this end, an optimization problem is formulated to find an efficient UE admission control policy, cognizant of UEs' QoS requirements and cell-level load dynamics. To solve this problem, a new machine-learning-based method is proposed that builds on (deep) neural contextual bandits, a suitable framework for dealing with nonlinear bandit problems. Specifically, the UE admission controller is treated as a bandit agent that observes a set of network measurements (context) and makes admission control decisions based on context-dependent QoS (reward) predictions. The simulation results show that the proposed scheme can achieve near-optimal performance and yield substantial gains in terms of cell-level service reliability and efficient resource utilization.

Convergence of Communications, Control, and Machine Learning for Secure and Autonomous Vehicle Navigation

Jul 05, 2023

Abstract: Connected and autonomous vehicles (CAVs) can reduce human errors in traffic accidents, increase road efficiency, and execute various tasks ranging from delivery to smart city surveillance. Reaping these benefits requires CAVs to autonomously navigate to target destinations. To this end, each CAV's navigation controller must leverage the information collected by sensors and wireless systems for decision-making on longitudinal and lateral movements. However, enabling autonomous navigation for CAVs requires a convergent integration of communication, control, and learning systems. The goal of this article is to explicitly expose the challenges related to this convergence and propose solutions to address them in two major use cases: uncoordinated and coordinated CAVs. In particular, challenges related to the navigation of uncoordinated CAVs include stable path tracking, robust control against cyber-physical attacks, and adaptive navigation controller design. Meanwhile, when multiple CAVs coordinate their movements during navigation, fundamental problems such as stable formation, fast collaborative learning, and distributed intrusion detection are analyzed. For both cases, solutions using the convergence of communication theory, control theory, and machine learning are proposed to enable effective and secure CAV navigation. Preliminary simulation results are provided to show the merits of the proposed solutions.

Evolutionary Deep Reinforcement Learning for Dynamic Slice Management in O-RAN

Aug 30, 2022

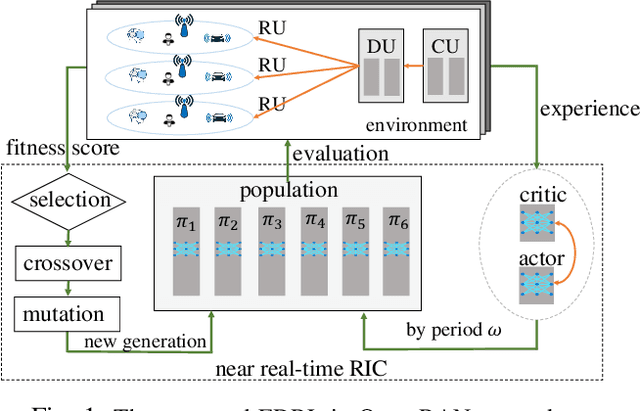

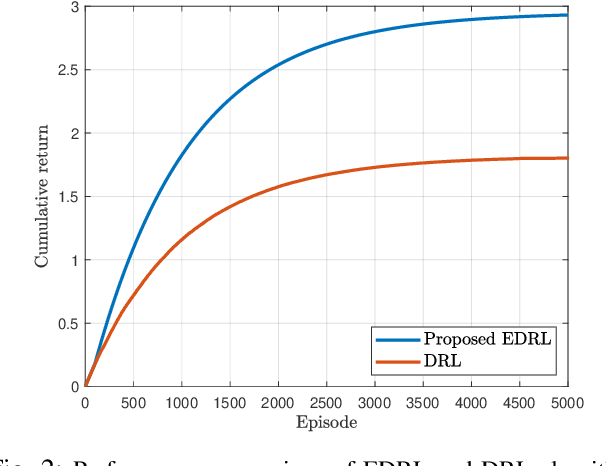

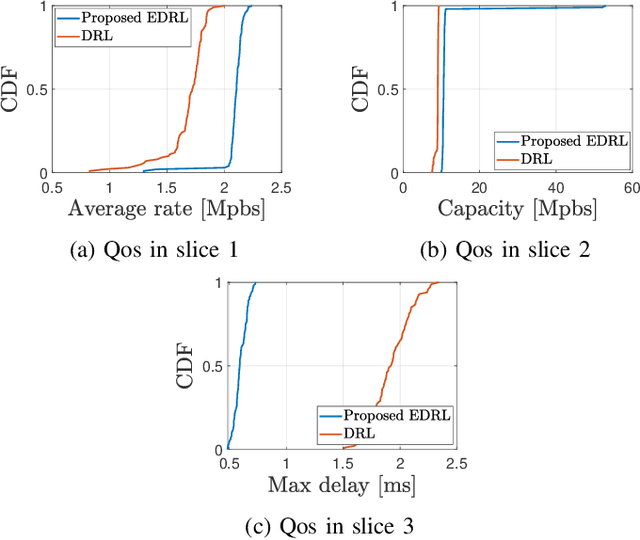

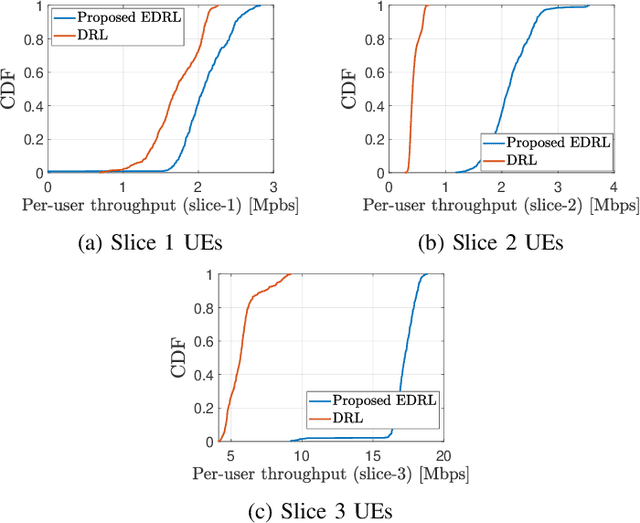

Abstract: Next-generation wireless networks are required to satisfy a variety of services and criteria concurrently. To address upcoming strict criteria, a new open radio access network (O-RAN) with distinguishing features such as flexible design, disaggregated virtual and programmable components, and intelligent closed-loop control was developed. O-RAN slicing is being investigated as a critical strategy for ensuring network quality of service (QoS) in the face of changing circumstances. However, distinct network slices must be dynamically controlled to avoid service level agreement (SLA) variation caused by rapid changes in the environment. Therefore, this paper introduces a novel framework able to manage the network slices through provisioned resources intelligently. Due to diverse heterogeneous environments, intelligent machine learning approaches require sufficient exploration to handle the harshest situations in a wireless network and accelerate convergence. To solve this problem, a new solution is proposed based on evolutionary-based deep reinforcement learning (EDRL) to accelerate and optimize the slice management learning process in the RAN intelligent controller (RIC) modules. To this end, the O-RAN slicing is represented as a Markov decision process (MDP) which is then solved optimally for resource allocation to meet service demand using the EDRL approach. In terms of reaching service demands, simulation results show that the proposed approach outperforms the DRL baseline by 62.2%.

Variational Autoencoders for Reliability Optimization in Multi-Access Edge Computing Networks

Jan 25, 2022

Abstract: Multi-access edge computing (MEC) is viewed as an integral part of future wireless networks to support new applications with stringent service reliability and latency requirements. However, guaranteeing ultra-reliable and low-latency MEC (URLL MEC) is very challenging due to uncertainties of wireless links, limited communications and computing resources, as well as dynamic network traffic. Enabling URLL MEC mandates taking into account the statistics of the end-to-end (E2E) latency and reliability across the wireless and edge computing systems. In this paper, a novel framework is proposed to optimize the reliability of MEC networks by considering the distribution of E2E service delay, encompassing over-the-air transmission and edge computing latency. The proposed framework builds on correlated variational autoencoders (VAEs) to estimate the full distribution of the E2E service delay. Using this result, a new optimization problem based on risk theory is formulated to maximize the network reliability by minimizing the Conditional Value at Risk (CVaR) as a risk measure of the E2E service delay. To solve this problem, a new algorithm is developed to efficiently allocate users' processing tasks to edge computing servers across the MEC network, while considering the statistics of the E2E service delay learned by VAEs. The simulation results show that the proposed scheme outperforms several baselines that do not account for the risk analyses or statistics of the E2E service delay.
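
To make the CVaR risk measure mentioned above concrete, here is a minimal sketch of a simple empirical estimator: the average of the worst (1 - alpha) fraction of delay samples. The function name, the tail-averaging estimator, and the sample values are assumptions for illustration; the paper estimates the delay distribution with VAEs, which is not reproduced here.

```python
import math

def empirical_cvar(delays, alpha):
    """Average of the largest (1 - alpha) fraction of samples (tail-mean estimator)."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    ordered = sorted(delays)
    # Round before ceil to absorb floating-point error in (1 - alpha) * n.
    n_tail = max(1, math.ceil(round((1.0 - alpha) * len(ordered), 9)))
    tail = ordered[-n_tail:]
    return sum(tail) / len(tail)

# Hypothetical E2E delay samples in milliseconds.
delay_samples_ms = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
cvar_80 = empirical_cvar(delay_samples_ms, alpha=0.8)
print(cvar_80)
```

Unlike a plain percentile, this tail average reacts to how bad the worst delays are, which is why it is a natural objective when reliability depends on rare, large delays.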

Wireless-Enabled Asynchronous Federated Fourier Neural Network for Turbulence Prediction in Urban Air Mobility (UAM)

Dec 26, 2021

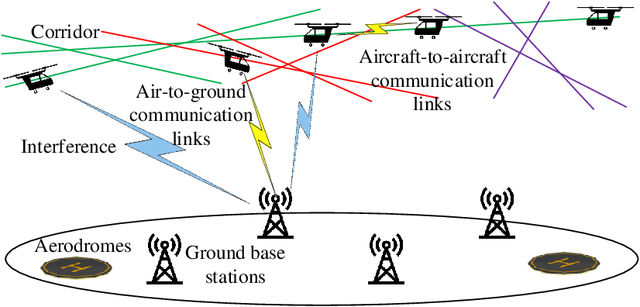

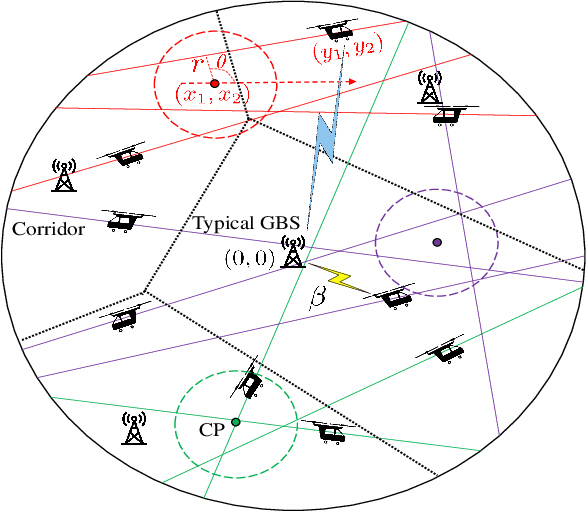

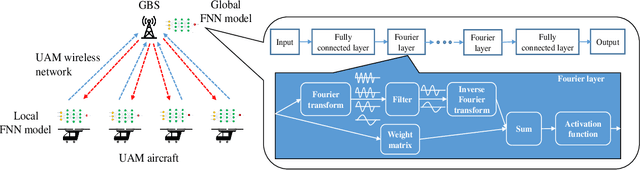

Abstract: To meet the growing mobility needs in intra-city transportation, the concept of urban air mobility (UAM) has been proposed in which vertical takeoff and landing (VTOL) aircraft are used to provide a ride-hailing service. In UAM, aircraft can operate in designated air spaces, known as corridors, that link the aerodromes. A reliable communication network between GBSs and aircraft enables UAM to adequately utilize the airspace and create a fast, efficient, and safe transportation system. In this paper, to characterize the wireless connectivity performance for UAM, a spatial model is proposed. For this setup, the distribution of the distance between an arbitrarily selected GBS and its associated aircraft and the Laplace transform of the interference experienced by the GBS are derived. Using these results, the signal-to-interference ratio (SIR)-based connectivity probability is determined to capture the connectivity performance of the UAM aircraft-to-ground communication network. Then, leveraging these connectivity results, a wireless-enabled asynchronous federated learning (AFL) framework that uses a Fourier neural network is proposed to tackle the challenging problem of turbulence prediction during UAM operations. For this AFL scheme, a staleness-aware global aggregation scheme is introduced to expedite the convergence to the optimal turbulence prediction model used by UAM aircraft. Simulation results validate the theoretical derivations for the UAM wireless connectivity. The results also demonstrate that the proposed AFL framework converges to the optimal turbulence prediction model faster than the synchronous federated learning baselines and a staleness-free AFL approach. Furthermore, the results characterize the performance of wireless connectivity and convergence of the aircraft's turbulence model under different parameter settings, offering useful UAM design guidelines.
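
The general idea of staleness-aware aggregation can be sketched as a weighted average in which older local updates count less. The weighting rule 1 / (1 + decay * staleness), the function names, and the toy parameters below are assumptions chosen for illustration; the abstract does not specify the paper's actual aggregation rule.

```python
def staleness_weight(staleness, decay=1.0):
    """Hypothetical weight that shrinks as an update gets older."""
    return 1.0 / (1.0 + decay * staleness)

def aggregate(updates, decay=1.0):
    """Weighted average of local parameter vectors.

    updates: list of (parameters, staleness) pairs, where staleness counts
    how many global rounds old the local model is.
    """
    weights = [staleness_weight(s, decay) for _, s in updates]
    total = sum(weights)
    dim = len(updates[0][0])
    return [
        sum(w * params[i] for w, (params, _) in zip(weights, updates)) / total
        for i in range(dim)
    ]

# Two hypothetical aircraft: one fresh update, one that is a round old.
local_updates = [([1.0, 1.0], 0), ([4.0, 4.0], 1)]
global_model = aggregate(local_updates)
print(global_model)
```

With decay set to 0 every update gets the same weight, which corresponds to a staleness-free average.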

Joint Sensing and Communication for Situational Awareness in Wireless THz Systems

Nov 28, 2021

Abstract: Next-generation wireless systems are rapidly evolving from communication-only systems to multi-modal systems with integrated sensing and communications. In this paper, a novel joint sensing and communication framework is proposed for enabling wireless extended reality (XR) at terahertz (THz) bands. To gather rich sensing information and a higher line-of-sight (LoS) availability, THz-operated reconfigurable intelligent surfaces (RISs) acting as base stations are deployed. The sensing parameters are extracted by leveraging THz's quasi-opticality and opportunistically utilizing uplink communication waveforms. This enables the use of the same waveform, spectrum, and hardware for both sensing and communication purposes. The environmental sensing parameters are then derived by exploiting the sparsity of THz channels via tensor decomposition. Hence, a high-resolution indoor mapping is derived so as to characterize the spatial availability of communications and the mobility of users. Simulation results show that in the proposed framework, the resolution and data rate of the overall system are positively correlated, thus allowing a joint optimization between these metrics with no tradeoffs. Results also show that the proposed framework improves the system reliability in static and mobile systems. In particular, the highest reliability gain, 10%, is achieved in a walking-speed mobile environment compared to communication-only systems with beam tracking.

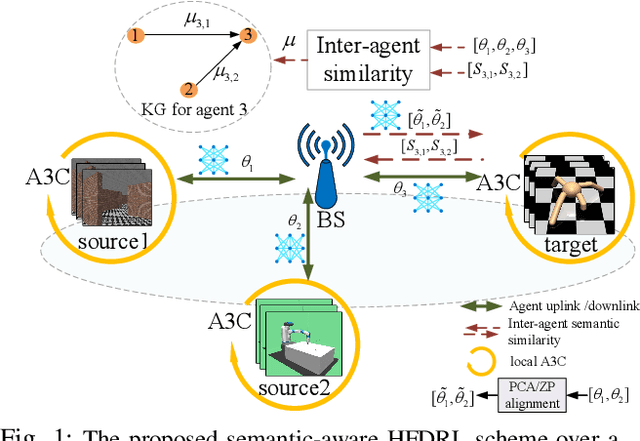

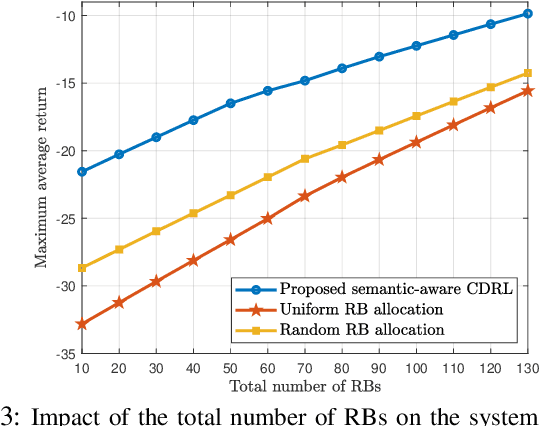

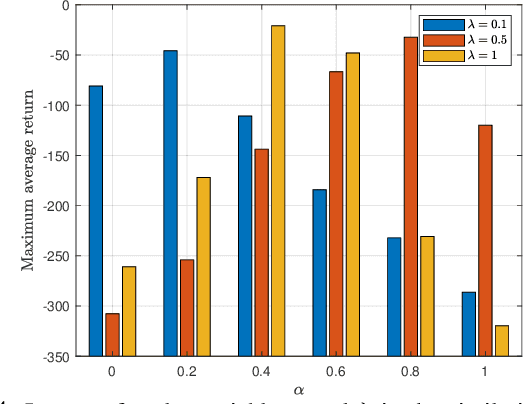

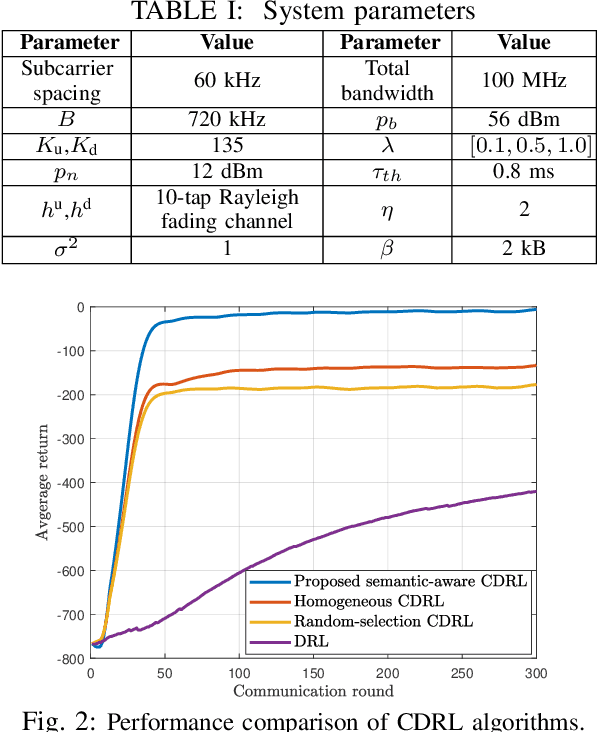

Semantic-Aware Collaborative Deep Reinforcement Learning Over Wireless Cellular Networks

Nov 23, 2021

Abstract: Collaborative deep reinforcement learning (CDRL) algorithms, in which multiple agents can coordinate over a wireless network, are a promising approach to enable future intelligent and autonomous systems that rely on real-time decision-making in complex dynamic environments. Nonetheless, in practical scenarios, CDRL faces many challenges due to the heterogeneity of agents and their learning tasks, different environments, time constraints of the learning, and resource limitations of wireless networks. To address these challenges, in this paper, a novel semantic-aware CDRL method is proposed to enable a group of heterogeneous untrained agents with semantically-linked DRL tasks to collaborate efficiently across a resource-constrained wireless cellular network. To this end, a new heterogeneous federated DRL (HFDRL) algorithm is proposed to select the best subset of semantically relevant DRL agents for collaboration. The proposed approach then jointly optimizes the training loss and wireless bandwidth allocation for the cooperating selected agents in order to train each agent within the time limit of its real-time task. Simulation results show the superior performance of the proposed algorithm compared to state-of-the-art baselines.

Performance Analysis and Optimization of Uplink Cellular Networks with Flexible Frame Structure

Mar 10, 2021

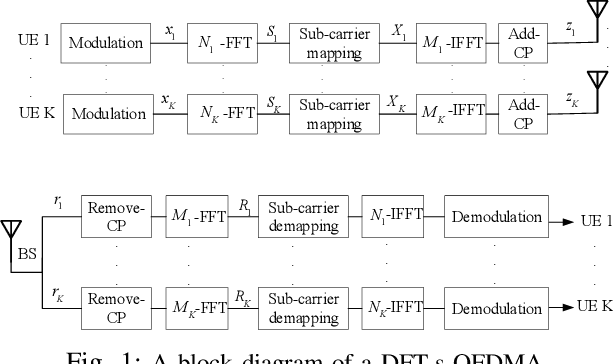

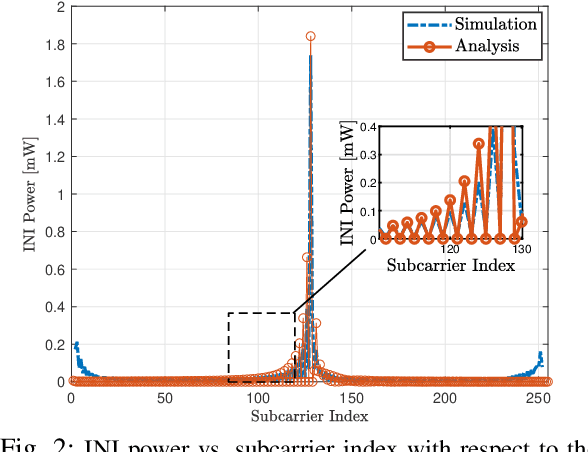

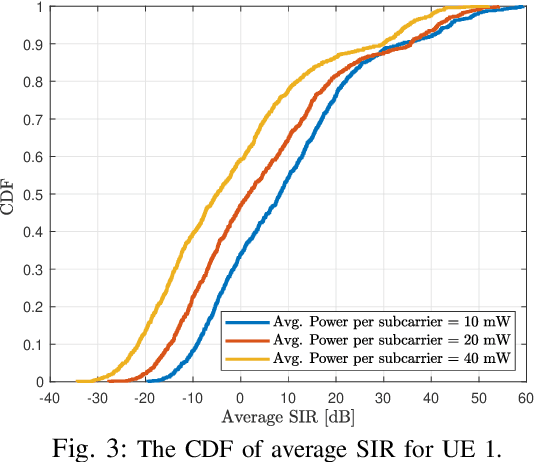

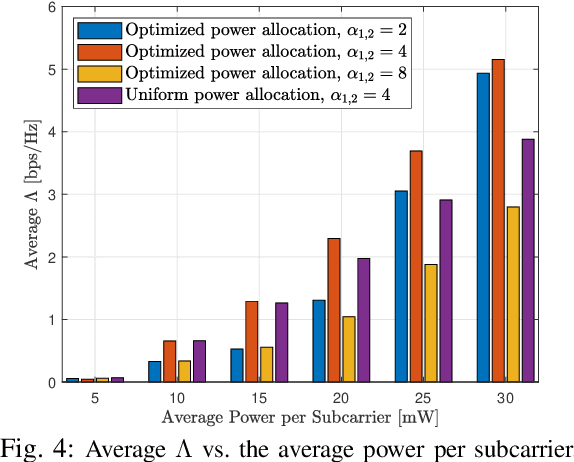

Abstract: Future wireless cellular networks must support both enhanced mobile broadband (eMBB) and ultra-reliable low-latency communication (URLLC) to manage heterogeneous data traffic for emerging wireless services. To achieve this goal, a promising technique is to enable a flexible frame structure by dynamically changing the data frame's numerology according to the channel information as well as traffic quality-of-service requirements. However, due to nonorthogonal subcarriers, this technique can result in interference, known as inter-numerology interference (INI), thus degrading the network performance. In this work, a novel framework is proposed to analyze the INI in uplink cellular communications. In particular, a closed-form expression is derived for the INI power in the uplink with a flexible frame structure, and a new resource allocation problem is formulated to maximize the network spectral efficiency (SE) by jointly optimizing the power allocation and numerology selection in a multi-user uplink scenario. The simulation results validate the derived theoretical INI analyses and provide guidelines for power allocation and numerology selection.
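
For readers unfamiliar with the term, the sketch below shows what changing the numerology means, assuming the 5G NR convention in which numerology index mu gives a subcarrier spacing of 15 * 2^mu kHz and a slot duration of 1 / 2^mu ms. The abstract does not state which numerology set the paper uses, so this convention and the function name are assumptions.

```python
def numerology(mu):
    """Return (subcarrier spacing in kHz, slot duration in ms) for index mu,
    following the 5G NR scaling rule (assumed here, not taken from the paper)."""
    if mu < 0:
        raise ValueError("numerology index must be non-negative")
    subcarrier_spacing_khz = 15 * 2 ** mu
    slot_duration_ms = 1 / 2 ** mu
    return subcarrier_spacing_khz, slot_duration_ms

# Wider subcarriers mean shorter slots, which suits low-latency traffic.
for mu in range(4):
    spacing, slot = numerology(mu)
    print(f"mu={mu}: {spacing} kHz spacing, {slot} ms slot")
```

Users with different mu values no longer share a common subcarrier grid, which is the origin of the inter-numerology interference analyzed in the paper.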

Reinforcement Learning for Optimized Beam Training in Multi-Hop Terahertz Communications

Feb 10, 2021

Abstract: Communication at terahertz (THz) frequency bands is a promising solution for achieving extremely high data rates in next-generation wireless networks. While THz communication is conventionally envisioned for short-range wireless applications due to the high atmospheric absorption at THz frequencies, multi-hop directional transmissions can be enabled to extend the communication range. However, to realize multi-hop THz communications, conventional beam training schemes, such as exhaustive search or hierarchical methods with a fixed number of training levels, can lead to a very large time overhead. To address this challenge, in this paper, a novel hierarchical beam training scheme with dynamic training levels is proposed to optimize the performance of multi-hop THz links. Specifically, an optimization problem is formulated to maximize the overall spectral efficiency of the multi-hop THz link by dynamically and jointly selecting the number of beam training levels across all the constituent single-hop links. To solve this problem in the presence of unknown channel state information, noise, and path loss, a new reinforcement learning solution based on the multi-armed bandit (MAB) is developed. Simulation results show the fast convergence of the proposed scheme in the presence of random channels and noise. The results also show that the proposed scheme can yield up to 75% performance gain, in terms of spectral efficiency, compared to the conventional hierarchical beam training with a fixed number of training levels.
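
The bandit view of this problem can be sketched with the textbook UCB1 algorithm, where each arm stands for one candidate number of beam training levels and the reward stands for the observed spectral efficiency. UCB1, the reward values, and all names below are assumptions for illustration; the abstract only states that the solution is MAB-based and does not say which bandit algorithm is used.

```python
import math
import random

def ucb1(reward_fn, n_arms, n_rounds):
    """Textbook UCB1: play each arm once, then pick the arm with the largest
    empirical mean plus exploration bonus sqrt(2 ln t / pulls)."""
    counts = [0] * n_arms
    means = [0.0] * n_arms
    for t in range(1, n_rounds + 1):
        if t <= n_arms:
            arm = t - 1
        else:
            arm = max(
                range(n_arms),
                key=lambda a: means[a] + math.sqrt(2.0 * math.log(t) / counts[a]),
            )
        reward = reward_fn(arm)
        counts[arm] += 1
        means[arm] += (reward - means[arm]) / counts[arm]  # running average
    return counts, means

# Hypothetical normalized spectral efficiency for 1, 2, or 3 training levels.
TRUE_MEAN_REWARD = [0.2, 0.8, 0.5]
rng = random.Random(0)

def noisy_reward(arm):
    """Mean reward plus bounded noise, clipped to [0, 1]."""
    return min(1.0, max(0.0, TRUE_MEAN_REWARD[arm] + rng.uniform(-0.1, 0.1)))

pull_counts, estimates = ucb1(noisy_reward, n_arms=3, n_rounds=2000)
print(pull_counts, estimates)
```

The exploration bonus shrinks as an arm is pulled more often, so the agent gradually concentrates its pulls on the arm with the best observed reward without knowing the channel statistics in advance.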
