Fatemeh Lotfi

Meta Hierarchical Reinforcement Learning for Scalable Resource Management in O-RAN

Dec 08, 2025

Abstract: The increasing complexity of modern applications demands wireless networks capable of real-time adaptability and efficient resource management. The Open Radio Access Network (O-RAN) architecture, with its RAN Intelligent Controller (RIC) modules, has emerged as a pivotal solution for dynamic resource management and network slicing. While artificial intelligence (AI)-driven methods have shown promise, most approaches struggle to maintain performance under unpredictable and highly dynamic conditions. This paper proposes an adaptive Meta Hierarchical Reinforcement Learning (Meta-HRL) framework, inspired by Model-Agnostic Meta-Learning (MAML), to jointly optimize resource allocation and network slicing in O-RAN. The framework integrates hierarchical control with meta-learning to enable both global and local adaptation: a high-level controller allocates resources across slices, while low-level agents perform intra-slice scheduling. The adaptive meta-update mechanism weights tasks by temporal-difference (TD) error variance, improving stability and prioritizing complex network scenarios. Theoretical analysis establishes sublinear convergence and regret guarantees for the two-level learning process. Simulation results demonstrate a 19.8% improvement in network management efficiency compared with baseline RL and meta-RL approaches, along with faster adaptation and higher QoS satisfaction across eMBB, URLLC, and mMTC slices. Additional ablation and scalability studies confirm the method's robustness, achieving up to 40% faster adaptation and consistent fairness, latency, and throughput performance as network scale increases.
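As a rough illustration of weighting tasks by TD-error variance in a meta-update, the sketch below normalizes per-task variances into weights and applies one weighted gradient step. The abstract does not give the exact weighting function, so the proportional normalization, the function names `td_variance_weights` and `weighted_meta_update`, and the toy gradients are all assumptions rather than the paper's method.

```python
from statistics import pvariance


def td_variance_weights(td_errors_per_task, eps=1e-8):
    """Turn per-task TD-error variances into weights that sum to 1.

    Assumption: weights are proportional to variance, so tasks with
    noisier TD errors (harder scenarios) receive more weight.
    """
    variances = [pvariance(errors) for errors in td_errors_per_task]
    total = sum(variances) + eps * len(variances)
    return [(v + eps) / total for v in variances]


def weighted_meta_update(meta_params, task_grads, weights, meta_lr=0.1):
    """Apply one gradient-descent meta-step using the weighted task gradients."""
    updated = []
    for j, theta in enumerate(meta_params):
        grad = sum(w * g[j] for w, g in zip(weights, task_grads))
        updated.append(theta - meta_lr * grad)
    return updated


# Toy data (hypothetical): task 0 has constant TD errors, task 1 oscillates.
td_errors = [[0.1, 0.1, 0.1, 0.1], [1.0, -1.0, 1.0, -1.0]]
weights = td_variance_weights(td_errors)
task_grads = [[1.0, 0.0], [0.0, 1.0]]
new_params = weighted_meta_update([0.0, 0.0], task_grads, weights)
```

In this toy case nearly all of the weight goes to the second task, so the meta-step moves almost entirely along that task's gradient.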

Task Specific Sharpness Aware O-RAN Resource Management using Multi Agent Reinforcement Learning

Nov 19, 2025

Abstract: Next-generation networks utilize the Open Radio Access Network (O-RAN) architecture to enable dynamic resource management, facilitated by the RAN Intelligent Controller (RIC). While deep reinforcement learning (DRL) models show promise in optimizing network resources, they often struggle with robustness and generalizability in dynamic environments. This paper introduces a novel resource management approach that enhances the Soft Actor-Critic (SAC) algorithm with Sharpness-Aware Minimization (SAM) in a distributed Multi-Agent RL (MARL) framework. Our method introduces an adaptive and selective SAM mechanism, where regularization is explicitly driven by temporal-difference (TD) error variance, ensuring that only agents facing high environmental complexity are regularized. This targeted strategy reduces unnecessary overhead, improves training stability, and enhances generalization without sacrificing learning efficiency. We further incorporate a dynamic $ρ$ scheduling scheme to refine the exploration-exploitation trade-off across agents. Experimental results show that our method significantly outperforms conventional DRL approaches, yielding up to a $22\%$ improvement in resource allocation efficiency and ensuring superior QoS satisfaction across diverse O-RAN slices.
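The selective SAM idea can be sketched on a toy problem: take a standard SAM step (perturb the weights along the normalized gradient by radius ρ, then descend using the gradient at the perturbed point) only when the TD-error variance exceeds a threshold, and fall back to a plain gradient step otherwise. This is a minimal sketch under stated assumptions: it uses plain gradient descent on a quadratic loss instead of SAC, and the threshold `var_threshold`, the fixed `rho`, and all function names are hypothetical, not taken from the paper.

```python
import math
from statistics import pvariance


def sgd_step(w, grad_fn, lr=0.1):
    """Plain gradient-descent step."""
    return [wi - lr * gi for wi, gi in zip(w, grad_fn(w))]


def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """SAM step: descend using the gradient taken at w + rho * g / ||g||."""
    g = grad_fn(w)
    norm = math.sqrt(sum(x * x for x in g)) or 1.0  # avoid division by zero
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    g_adv = grad_fn(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


def selective_step(w, grad_fn, td_errors, var_threshold=0.5, lr=0.1, rho=0.05):
    """Use SAM only when TD-error variance signals a complex environment."""
    if pvariance(td_errors) > var_threshold:
        return sam_step(w, grad_fn, lr=lr, rho=rho)
    return sgd_step(w, grad_fn, lr=lr)


def quad_grad(w):
    """Gradient of the toy loss L(w) = sum(w_i ** 2)."""
    return [2.0 * x for x in w]


calm = selective_step([1.0, 0.0], quad_grad, td_errors=[0.1, 0.1, 0.1])
noisy = selective_step([1.0, 0.0], quad_grad, td_errors=[2.0, -2.0, 2.0, -2.0])
```

With low-variance TD errors the agent takes the cheaper plain step; with high-variance TD errors it pays for the second gradient evaluation that SAM requires, which is the overhead the selective scheme aims to avoid where it is not needed.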

Covertness in the Near Field: Maximizing the Covert Region with FDA

Nov 22, 2024

Abstract: Covert communication in wireless networks ensures that transmissions remain undetectable to adversaries, making it a potential enabler for privacy and security in sensitive applications. However, to meet the high performance and connectivity demands of sixth-generation (6G) networks, future wireless systems will require larger antenna arrays, higher operating frequencies, and advanced antenna architectures. This shift changes the propagation model from far-field planar-wave to near-field spherical-wave, which necessitates a redesign of existing covert communication systems. Unlike far-field beamforming, which relies only on direction, near-field beamforming depends on both distance and direction, providing additional degrees of freedom for system design. In this paper, we exploit these degrees of freedom by proposing near-field Frequency Diverse Array (FDA)-based transmission strategies that manipulate the beampattern in both distance and angle, thereby establishing a non-covert region around the legitimate user. Our approach takes advantage of near-field properties and FDA technology to significantly reduce the area vulnerable to detection by adversaries while maintaining covert communication with the legitimate receiver. Numerical simulations show that our methods outperform conventional phased arrays by shrinking the non-covert region and allowing the covert region to expand as the number of antennas increases.

Open RAN LSTM Traffic Prediction and Slice Management using Deep Reinforcement Learning

Jan 12, 2024

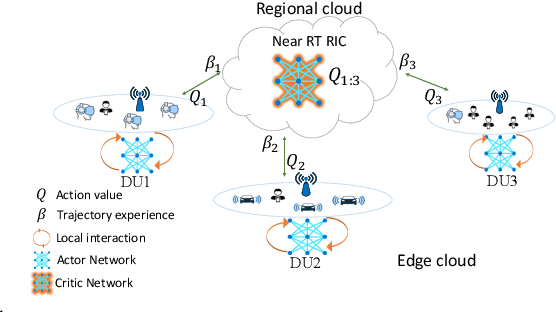

Abstract: With emerging applications such as autonomous driving, smart cities, and smart factories, network slicing has become an essential component of 5G and beyond networks as a means of catering to a service-aware network. However, managing different network slices while maintaining quality of service (QoS) is a challenge in a dynamic environment. To address this issue, this paper leverages the heterogeneous experiences of distributed units (DUs) in ORAN systems and introduces a novel approach to an ORAN slicing xApp using distributed deep reinforcement learning (DDRL). Additionally, to enhance the decision-making performance of the RL agent, a prediction rApp based on long short-term memory (LSTM) is incorporated to provide additional information from the dynamic environment to the xApp. Simulation results demonstrate significant improvements in network performance, particularly in reducing QoS violations. This emphasizes the importance of jointly using the prediction rApp and the distributed actors' information as part of a dynamic xApp.

Joint Path planning and Power Allocation of a Cellular-Connected UAV using Apprenticeship Learning via Deep Inverse Reinforcement Learning

Jun 15, 2023

Abstract: This paper investigates an interference-aware joint path planning and power allocation mechanism for a cellular-connected unmanned aerial vehicle (UAV) in a sparse suburban environment. The UAV's goal is to fly from an initial point to a destination point by moving along the cells while guaranteeing the required quality of service (QoS). In particular, the UAV aims to maximize its uplink throughput and minimize the level of interference to the ground user equipment (UEs) connected to the neighboring cellular base stations (BSs), considering the shortest path and flight resource limitations. Expert knowledge is used to experience the scenario and define the desired behavior for training the agent (i.e., the UAV). To solve the problem, an apprenticeship learning method is utilized via inverse reinforcement learning (IRL) based on both Q-learning and deep reinforcement learning (DRL). The performance of this method is compared to that of behavioral cloning (BC), a learning-from-demonstration technique that uses a supervised learning approach. Simulation and numerical results show that the proposed approach can achieve expert-level performance. We also demonstrate that, unlike the BC technique, the performance of our proposed approach does not degrade in unseen situations.

Attention-based Open RAN Slice Management using Deep Reinforcement Learning

Jun 15, 2023

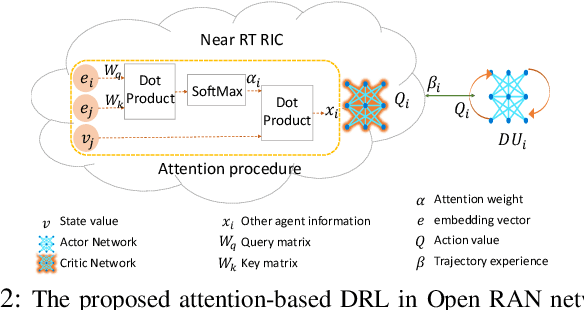

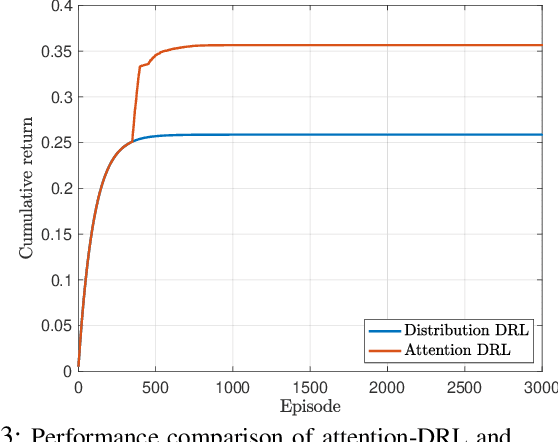

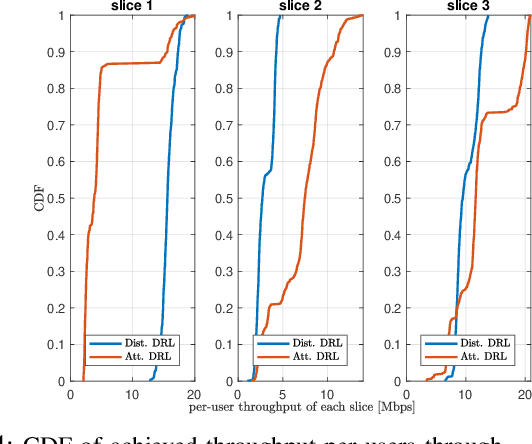

Abstract: As emerging networks such as Open Radio Access Networks (O-RAN) and 5G continue to grow, the demand for various services with different requirements is increasing. Network slicing has emerged as a potential solution to address the different service requirements. However, managing network slices while maintaining quality of service (QoS) in dynamic environments is a challenging task. Utilizing machine learning (ML) approaches for optimal control of dynamic networks can enhance network performance by preventing Service Level Agreement (SLA) violations. This is critical for dependable decision-making and satisfying the needs of emerging networks. Although RL-based control methods are effective for real-time monitoring and control of network QoS, generalization is necessary to improve decision-making reliability. This paper introduces an innovative attention-based deep RL (ADRL) technique that leverages the O-RAN disaggregated modules and distributed agent cooperation to achieve better performance through effective information extraction and improved generalization. The proposed method introduces a value-attention network between distributed agents to enable reliable and optimal decision-making. Simulation results demonstrate significant improvements in network performance compared to other DRL baseline methods.

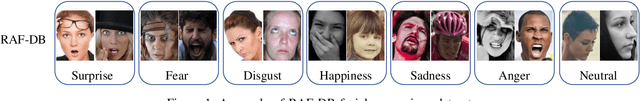

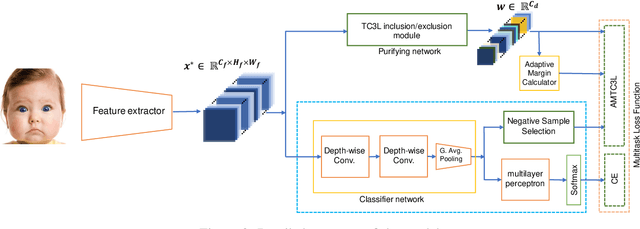

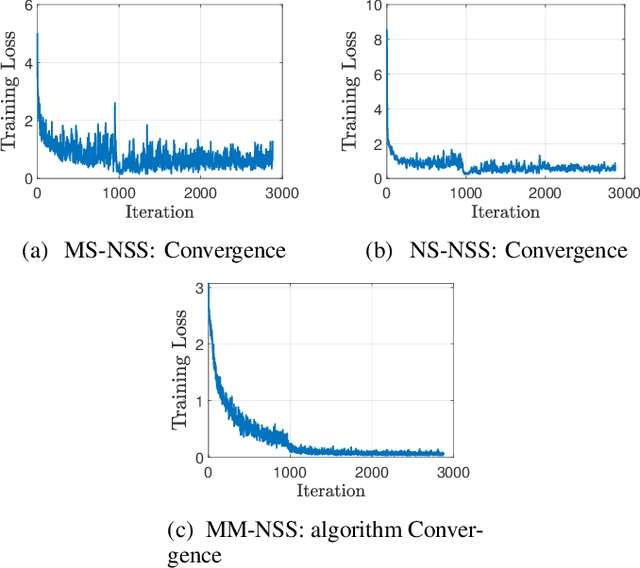

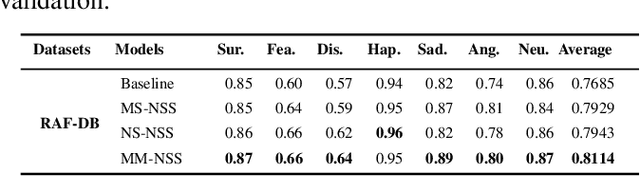

Triplet Loss-less Center Loss Sampling Strategies in Facial Expression Recognition Scenarios

Feb 08, 2023

Abstract: Facial expressions convey a wealth of information and play a crucial role in emotional expression. Deep neural networks (DNNs) accompanied by deep metric learning (DML) techniques boost the discriminative ability of the model in facial expression recognition (FER) applications. A DNN equipped only with a classification loss function such as cross-entropy cannot reduce intra-class feature variation or increase inter-class feature distance as well as a DNN reinforced by a supporting DML loss term. The triplet center loss (TCL) function is applied on all dimensions of the sample's embedding in the embedding space. In our work, we developed three negative sample selection strategies: fully-synthesized, semi-synthesized, and prediction-based. To achieve better results, we introduce a selective attention module that provides a combination of pixel-wise and element-wise attention coefficients using high-semantic deep features of input samples. We evaluated the proposed method on RAF-DB, a highly imbalanced dataset. The experimental results reveal significant improvements in comparison to the baseline for all three negative sample selection strategies.

Evolutionary Deep Reinforcement Learning for Dynamic Slice Management in O-RAN

Aug 30, 2022

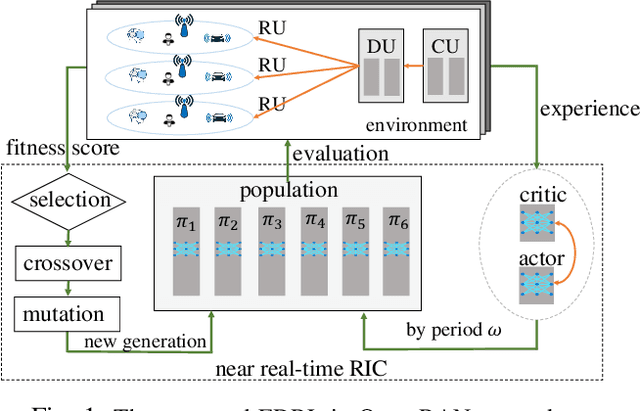

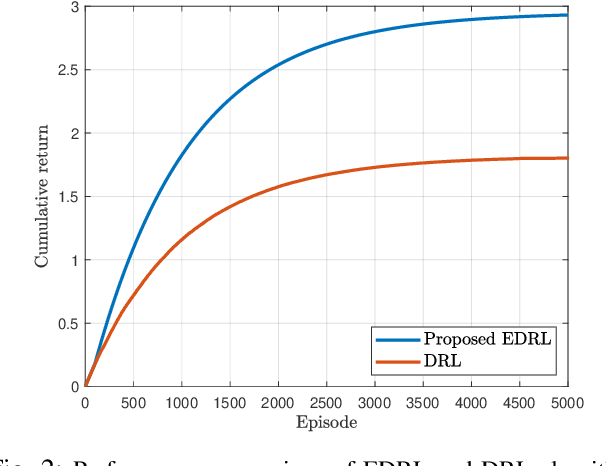

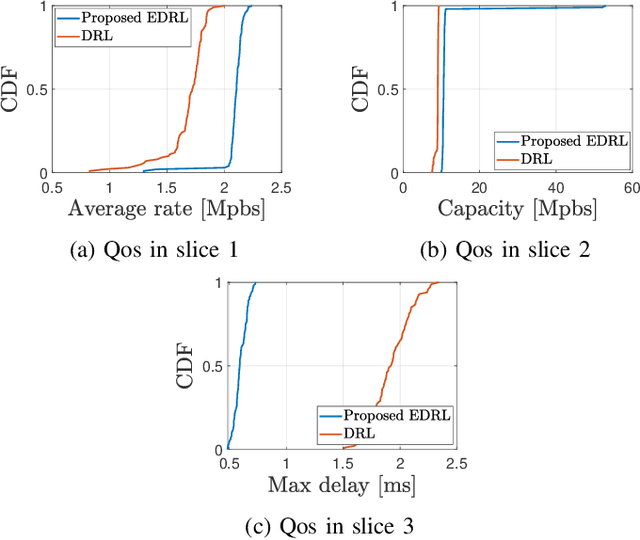

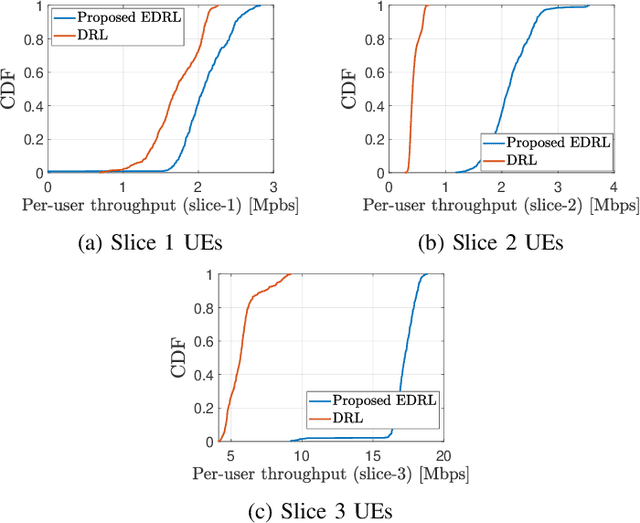

Abstract: Next-generation wireless networks are required to satisfy a variety of services and criteria concurrently. To address upcoming strict criteria, a new open radio access network (O-RAN) with distinguishing features such as flexible design, disaggregated virtual and programmable components, and intelligent closed-loop control was developed. O-RAN slicing is being investigated as a critical strategy for ensuring network quality of service (QoS) in the face of changing circumstances. However, distinct network slices must be dynamically controlled to avoid service level agreement (SLA) variation caused by rapid changes in the environment. Therefore, this paper introduces a novel framework able to manage the network slices intelligently through provisioned resources. Due to diverse heterogeneous environments, intelligent machine learning approaches require sufficient exploration to handle the harshest situations in a wireless network and accelerate convergence. To solve this problem, a new solution is proposed based on evolutionary-based deep reinforcement learning (EDRL) to accelerate and optimize the slice management learning process in the RAN intelligent controller (RIC) modules. To this end, O-RAN slicing is represented as a Markov decision process (MDP), which is then solved optimally for resource allocation to meet service demand using the EDRL approach. Simulation results show that, in terms of meeting service demands, the proposed approach outperforms the DRL baseline by 62.2%.

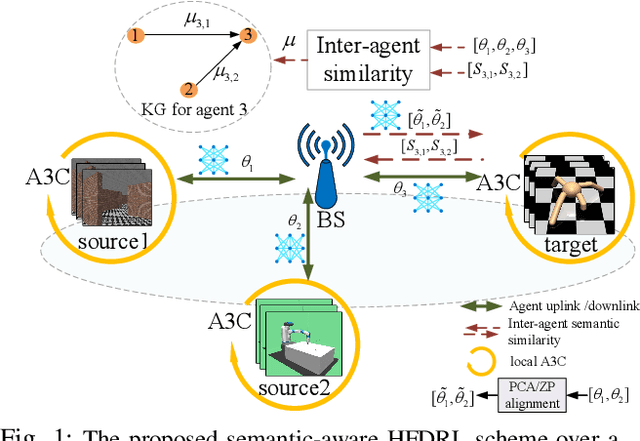

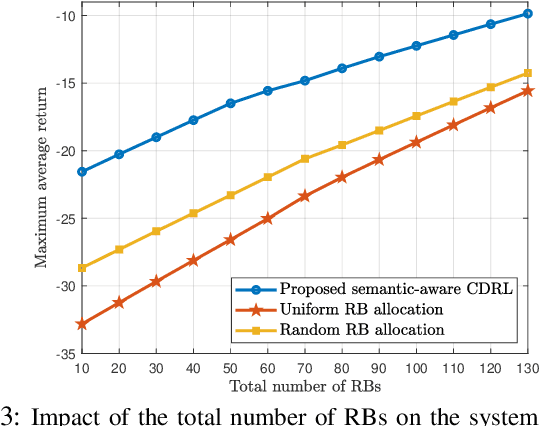

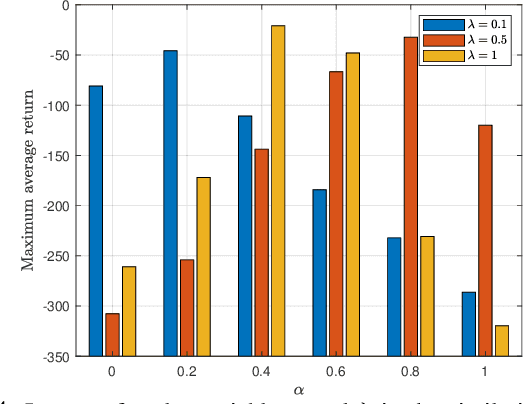

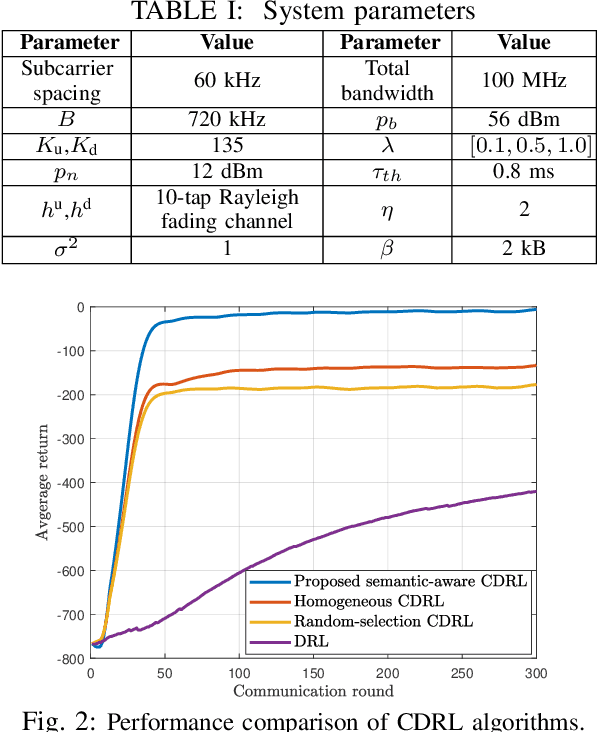

Semantic-Aware Collaborative Deep Reinforcement Learning Over Wireless Cellular Networks

Nov 23, 2021

Abstract: Collaborative deep reinforcement learning (CDRL) algorithms, in which multiple agents can coordinate over a wireless network, are a promising approach to enable future intelligent and autonomous systems that rely on real-time decision-making in complex dynamic environments. Nonetheless, in practical scenarios, CDRL faces many challenges due to the heterogeneity of agents and their learning tasks, different environments, time constraints on learning, and resource limitations of wireless networks. To address these challenges, in this paper, a novel semantic-aware CDRL method is proposed to enable a group of heterogeneous untrained agents with semantically-linked DRL tasks to collaborate efficiently across a resource-constrained wireless cellular network. To this end, a new heterogeneous federated DRL (HFDRL) algorithm is proposed to select the best subset of semantically relevant DRL agents for collaboration. The proposed approach then jointly optimizes the training loss and wireless bandwidth allocation for the selected cooperating agents in order to train each agent within the time limit of its real-time task. Simulation results show the superior performance of the proposed algorithm compared to state-of-the-art baselines.

Performance Analysis and Optimization of Uplink Cellular Networks with Flexible Frame Structure

Mar 10, 2021

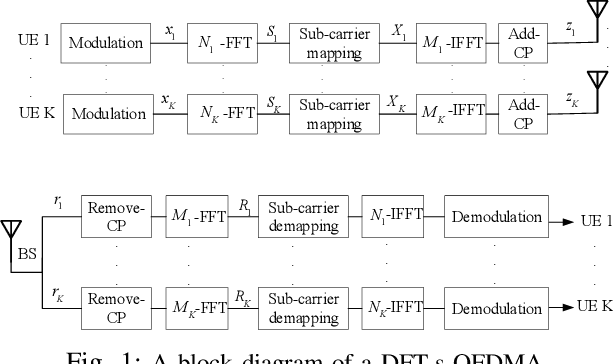

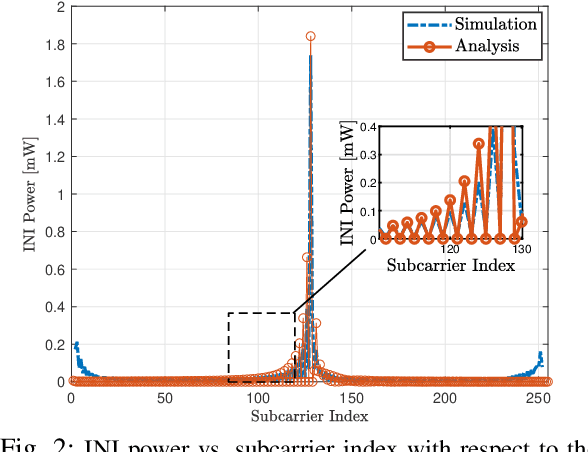

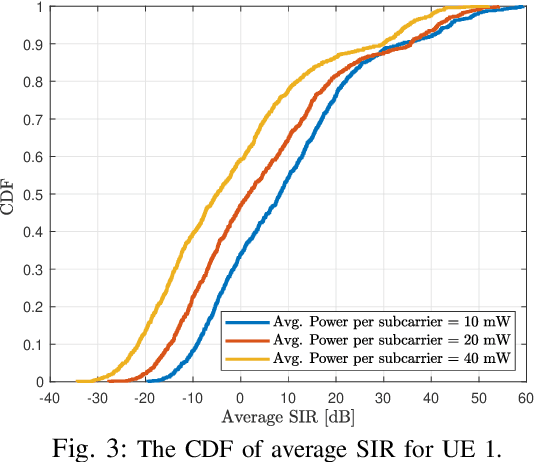

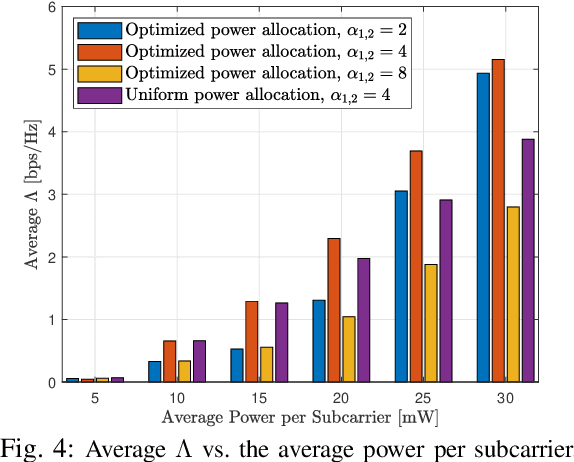

Abstract: Future wireless cellular networks must support both enhanced mobile broadband (eMBB) and ultra-reliable low-latency communication (URLLC) to manage heterogeneous data traffic for emerging wireless services. To achieve this goal, a promising technique is to enable a flexible frame structure by dynamically changing the data frame's numerology according to the channel information as well as traffic quality-of-service requirements. However, due to non-orthogonal subcarriers, this technique can result in interference, known as inter-numerology interference (INI), which degrades network performance. In this work, a novel framework is proposed to analyze the INI in uplink cellular communications. In particular, a closed-form expression is derived for the INI power in the uplink with a flexible frame structure, and a new resource allocation problem is formulated to maximize the network spectral efficiency (SE) by jointly optimizing the power allocation and numerology selection in a multi-user uplink scenario. The simulation results validate the derived theoretical INI analysis and provide guidelines for power allocation and numerology selection.
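To make "numerology" concrete, the sketch below assumes the 5G NR convention, in which numerology index μ sets the subcarrier spacing to 15·2^μ kHz and the slot duration to 1/2^μ ms. The abstract does not state which numerology set the paper uses, so this mapping and the helper name `numerology` are assumptions for illustration only.

```python
def numerology(mu):
    """Return (subcarrier spacing in kHz, slot duration in ms) for index mu.

    Assumes 5G NR-style scaling: spacing doubles and slot length halves
    each time mu increases by one.
    """
    scs_khz = 15 * 2 ** mu
    slot_ms = 1.0 / 2 ** mu
    return scs_khz, slot_ms


# Numerologies 0..3: wider spacing gives shorter slots (lower latency).
table = {mu: numerology(mu) for mu in range(4)}
for mu, (scs_khz, slot_ms) in table.items():
    print(f"mu={mu}: {scs_khz} kHz spacing, {slot_ms} ms slot")
```

Under this assumption, a latency-sensitive URLLC user would favor a larger μ for its shorter slots, while users with different μ sharing a band no longer have mutually orthogonal subcarriers, which is the source of the INI analyzed above.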
