Nicholas C. Landolfi

Unsupervised language models for disease variant prediction

Dec 07, 2022

Abstract: There is considerable interest in predicting the pathogenicity of protein variants in human genes. Due to the sparsity of high-quality labels, recent approaches turn to unsupervised learning, using Multiple Sequence Alignments (MSAs) to train generative models of natural sequence variation within each gene. These generative models then predict variant likelihood as a proxy for evolutionary fitness. In this work we instead combine this evolutionary principle with pretrained protein language models (LMs), which have already shown promising results in predicting protein structure and function. Instead of training separate models per gene, we find that a single protein LM trained on broad sequence datasets can score pathogenicity for any gene variant zero-shot, without MSAs or finetuning. We call this unsupervised approach VELM (Variant Effect via Language Models), and show that it achieves scoring performance comparable to the state of the art when evaluated on clinically labeled variants of disease-related genes.
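
As a rough illustration of using model likelihood as a fitness proxy, the sketch below scores a single substitution by the log-odds of the mutant versus the wild-type residue under a per-position distribution. The abstract does not state VELM's exact scoring rule, so the log-odds form, the function name `variant_log_odds`, and the toy probability table (a stand-in for real protein LM output) are all assumptions.

```python
import math

def variant_log_odds(position_probs, position, wild_type, mutant):
    """Log-odds of the mutant vs. the wild-type residue at one position.

    position_probs: list of dicts mapping residue -> probability, standing in
    for a language model's per-position output (hypothetical toy data here).
    More negative scores mean the model finds the variant less plausible.
    """
    probs = position_probs[position]
    return math.log(probs[mutant]) - math.log(probs[wild_type])

# Toy distribution for a two-residue sequence "MK" (values are made up).
toy_probs = [
    {"M": 0.90, "K": 0.05, "L": 0.05},
    {"M": 0.10, "K": 0.60, "L": 0.30},
]

score_m1l = variant_log_odds(toy_probs, 0, "M", "L")  # strongly disfavoured
score_k2l = variant_log_odds(toy_probs, 1, "K", "L")  # mildly disfavoured
```

Under this assumed rule, variants can be ranked by score without any labels or per-gene training, which is the sense in which such scoring is zero-shot.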

Learning Reward Functions from Diverse Sources of Human Feedback: Optimally Integrating Demonstrations and Preferences

Jun 24, 2020

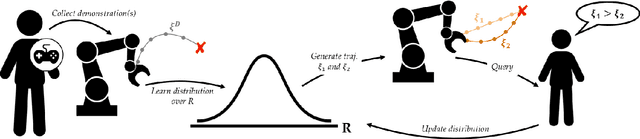

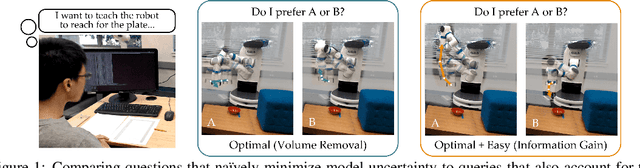

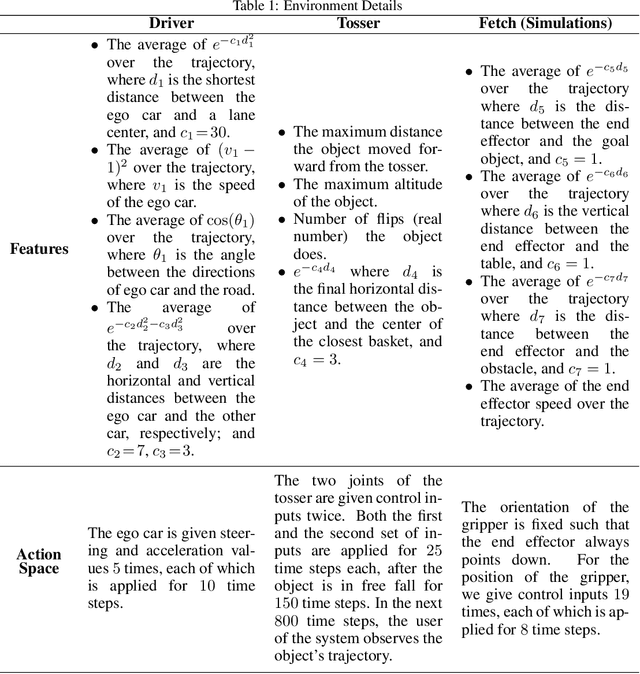

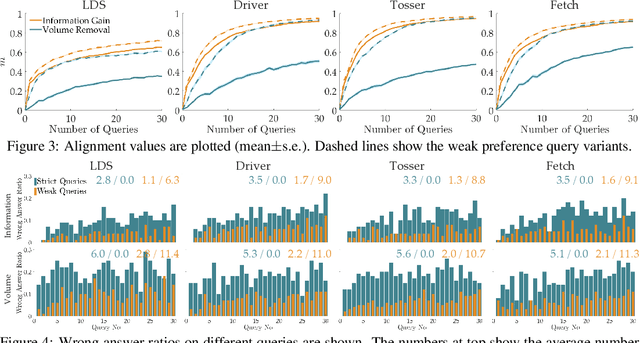

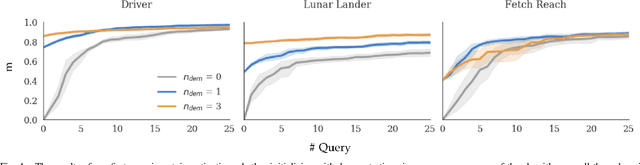

Abstract: Reward functions are a common way to specify the objective of a robot. As designing reward functions can be extremely challenging, a more promising approach is to directly learn reward functions from human teachers. Importantly, humans provide data in a variety of forms: these include instructions (e.g., natural language), demonstrations (e.g., kinesthetic guidance), and preferences (e.g., comparative rankings). Prior research has independently applied reward learning to each of these different data sources. However, there exist many domains where some of these information sources are inapplicable or inefficient, whereas multiple sources together are complementary and expressive. Motivated by this general problem, we present a framework to integrate multiple sources of information, which are either passively or actively collected from human users. In particular, we present an algorithm that first utilizes user demonstrations to initialize a belief about the reward function, and then proactively probes the user with preference queries to zero in on their true reward. This algorithm not only enables us to combine multiple data sources, but it also informs the robot when it should leverage each type of information. Further, our approach accounts for the human's ability to provide data, yielding user-friendly preference queries that are also theoretically optimal. Our extensive simulated experiments and user studies on a Fetch mobile manipulator demonstrate the superiority and the usability of our integrated framework.

Asking Easy Questions: A User-Friendly Approach to Active Reward Learning

Oct 10, 2019

Abstract: Robots can learn the right reward function by querying a human expert. Existing approaches attempt to choose questions where the robot is most uncertain about the human's response; however, they do not consider how easy it will be for the human to answer! In this paper we explore an information gain formulation for optimally selecting questions that naturally account for the human's ability to answer. Our approach identifies questions that optimize the trade-off between robot and human uncertainty, and determines when these questions become redundant or costly. Simulations and a user study show our method not only produces easy questions, but also ultimately results in faster reward learning.
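
The trade-off between robot and human uncertainty can be sketched as a mutual-information estimate over sampled reward weights. This is a minimal sketch, not the paper's implementation: the softmax response model, the sample-based belief, and every name below (`response_prob`, `information_gain`, `best_query`) are assumptions made for illustration.

```python
import math

def response_prob(w, phi_a, phi_b):
    """P(human picks A | reward weights w), under an assumed softmax model."""
    ra = sum(wi * x for wi, x in zip(w, phi_a))
    rb = sum(wi * x for wi, x in zip(w, phi_b))
    return 1.0 / (1.0 + math.exp(rb - ra))

def binary_entropy(p):
    """Entropy in bits of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def information_gain(w_samples, phi_a, phi_b):
    """Mutual information between the answer and w, estimated from samples.

    First term: the robot's uncertainty about the answer.
    Second term: the human's average uncertainty given w. Hard questions
    have a large second term, so they score lower.
    """
    probs = [response_prob(w, phi_a, phi_b) for w in w_samples]
    mean_p = sum(probs) / len(probs)
    return binary_entropy(mean_p) - sum(binary_entropy(p) for p in probs) / len(probs)

def best_query(w_samples, queries):
    """Pick the (phi_a, phi_b) pair with the largest estimated information gain."""
    return max(queries, key=lambda q: information_gain(w_samples, q[0], q[1]))

# Hypothetical belief: two equally likely weight vectors.
belief = [(1.0, 0.0), (-1.0, 0.0)]
easy = ((3.0, 0.0), (-3.0, 0.0))     # options differ clearly along feature 0
hard = ((0.1, 0.0), (-0.1, 0.0))     # options are nearly indistinguishable
useless = ((0.0, 1.0), (0.0, -1.0))  # both hypotheses predict the same answer

ig_easy = information_gain(belief, *easy)
ig_hard = information_gain(belief, *hard)
ig_useless = information_gain(belief, *useless)
chosen = best_query(belief, [hard, useless, easy])
```

In this toy setup the robot is equally unsure about the answer to all three queries, yet only the easy one scores highly, because the human could answer it reliably under either hypothesis.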

A Model-based Approach for Sample-efficient Multi-task Reinforcement Learning

Jul 15, 2019

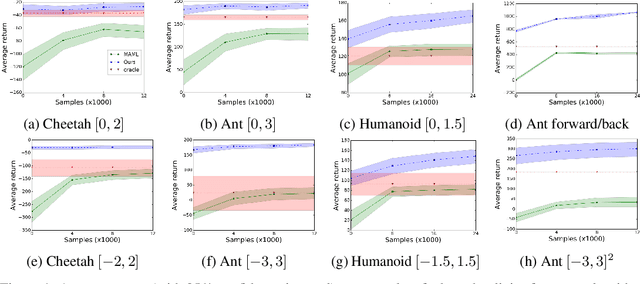

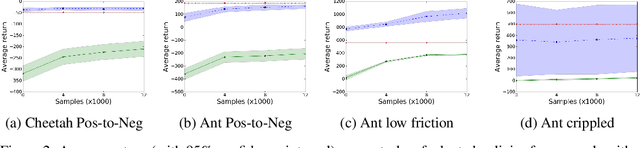

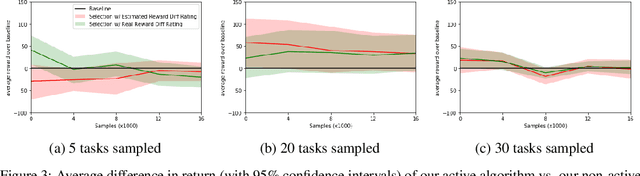

Abstract: The aim of multi-task reinforcement learning is two-fold: (1) efficiently learn by training against multiple tasks and (2) quickly adapt, using limited samples, to a variety of new tasks. In this work, the tasks correspond to reward functions for environments with the same (or similar) dynamical models. We propose to learn a dynamical model during the training process and use this model to perform sample-efficient adaptation to new tasks at test time. We use significantly fewer samples by performing policy optimization only in a "virtual" environment whose transitions are given by our learned dynamical model. Our algorithm sequentially trains against several tasks. Upon encountering a new task, we first warm up a policy on our learned dynamical model, which requires no new samples from the environment. We then adapt the dynamical model with samples from this policy in the real environment. We evaluate our approach on several continuous control benchmarks and demonstrate its efficacy over MAML, a state-of-the-art meta-learning algorithm, on these tasks.
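
The warm-up step can be illustrated on a toy problem. The sketch below assumes one-dimensional linear dynamics, a proportional policy, and a grid search over gains in place of real policy optimization; none of these choices come from the paper, and all names (`fit_linear_dynamics`, `rollout_cost`, `true_step`) are hypothetical.

```python
def fit_linear_dynamics(transitions):
    """Least-squares fit of s_next = a * s + b * u from (s, u, s_next) tuples."""
    sss = sum(s * s for s, u, _ in transitions)
    suu = sum(u * u for s, u, _ in transitions)
    ssu = sum(s * u for s, u, _ in transitions)
    ssn = sum(s * n for s, _, n in transitions)
    sun = sum(u * n for _, u, n in transitions)
    det = sss * suu - ssu * ssu  # determinant of the 2x2 normal equations
    a = (ssn * suu - sun * ssu) / det
    b = (sun * sss - ssn * ssu) / det
    return a, b

def rollout_cost(step, gain, goal, horizon=20):
    """Sum of squared distances to goal under the policy u = gain * (goal - s)."""
    s, cost = 0.0, 0.0
    for _ in range(horizon):
        s = step(s, gain * (goal - s))
        cost += (goal - s) ** 2
    return cost

def true_step(s, u):
    """Real environment (unknown to the learner): s_next = s + 0.5 * u."""
    return s + 0.5 * u

# Training phase: transitions gathered while solving earlier tasks.
data = [(s, u, true_step(s, u)) for s in (-1.0, 0.0, 2.0) for u in (-1.0, 1.0)]
a_hat, b_hat = fit_linear_dynamics(data)

def model_step(s, u):
    """Virtual environment built from the learned dynamics."""
    return a_hat * s + b_hat * u

# New task (goal = 3.0): warm up the policy using only the learned model,
# so no new samples from the real environment are needed at this stage.
candidate_gains = [0.5, 1.0, 1.5, 2.0, 2.5]
warm_gain = min(candidate_gains, key=lambda k: rollout_cost(model_step, k, 3.0))

# Only now is the warmed-up policy run in the real environment.
real_cost = rollout_cost(true_step, warm_gain, 3.0)
```

The subsequent adaptation step would append the transitions observed during the real rollout to `data` and refit the model; it is omitted here to keep the sketch short.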

Learning Reward Functions by Integrating Human Demonstrations and Preferences

Jun 21, 2019

Abstract: Our goal is to accurately and efficiently learn reward functions for autonomous robots. Current approaches to this problem include inverse reinforcement learning (IRL), which uses expert demonstrations, and preference-based learning, which iteratively queries the user for her preferences between trajectories. In robotics, however, IRL often struggles because it is difficult to get high-quality demonstrations; conversely, preference-based learning is very inefficient since it attempts to learn a continuous, high-dimensional function from binary feedback. We propose a new framework for reward learning, DemPref, that uses both demonstrations and preference queries to learn a reward function. Specifically, we (1) use the demonstrations to learn a coarse prior over the space of reward functions, to reduce the effective size of the space from which queries are generated; and (2) use the demonstrations to ground the (active) query generation process, to improve the quality of the generated queries. Our method alleviates the efficiency issues faced by standard preference-based learning methods and does not exclusively depend on (possibly low-quality) demonstrations. In numerical experiments, we find that DemPref is significantly more efficient than a standard active preference-based learning method. In a user study, we compare our method to a standard IRL method; we find that users rated the robot trained with DemPref as being more successful at learning their desired behavior, and preferred to use the DemPref system (over IRL) to train the robot.
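
Step (1), followed by a single preference update, can be sketched as two Bayesian reweightings of a discrete belief. This is a simplified sketch under stated assumptions: the softmax choice model, the finite set of candidate trajectories used to normalize the demonstration likelihood, the `beta` values, and all feature vectors are hypothetical. Step (2), grounding query generation in the demonstrations, is not shown.

```python
import math

def softmax_choice_prob(w, chosen, options, beta=1.0):
    """P(chosen | w) when the human picks among options with softmax noise."""
    def reward(phi):
        return sum(wi * x for wi, x in zip(w, phi))
    z = sum(math.exp(beta * reward(phi)) for phi in options)
    return math.exp(beta * reward(chosen)) / z

def bayes_update(belief, chosen, options, beta=1.0):
    """Reweight a discrete belief {w: prob} by the likelihood of one choice."""
    posterior = {w: p * softmax_choice_prob(w, chosen, options, beta)
                 for w, p in belief.items()}
    total = sum(posterior.values())
    return {w: p / total for w, p in posterior.items()}

# Hypothetical reward hypotheses over two trajectory features.
uniform = {(1.0, 0.0): 0.25, (0.0, 1.0): 0.25,
           (-1.0, 0.0): 0.25, (0.0, -1.0): 0.25}

# A demonstration, treated as a noisy choice among a few candidate
# trajectories, yields a coarse prior (low beta: demonstrations may be poor).
candidates = [(1.0, 0.5), (-1.0, 0.0), (0.0, -1.0)]
prior = bayes_update(uniform, candidates[0], candidates, beta=1.0)

# A pairwise preference answer then sharpens the belief.
query = [(1.0, 0.0), (0.0, 1.0)]
posterior = bayes_update(prior, query[0], query, beta=5.0)
```

The demonstration alone leaves two hypotheses roughly tied, and the preference answer separates them, which mirrors the division of labour the abstract describes.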

Social Cohesion in Autonomous Driving

Aug 27, 2018

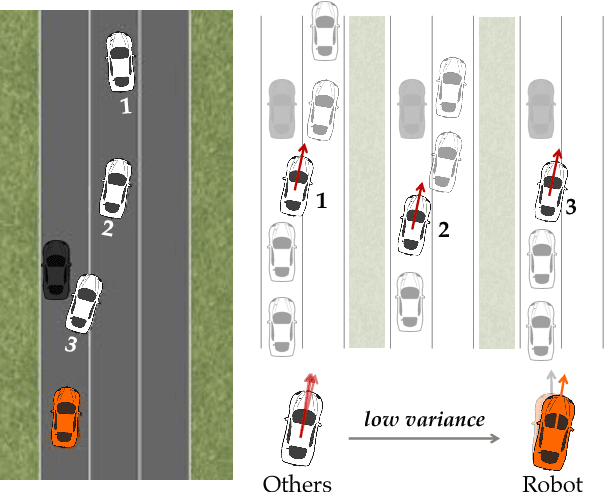

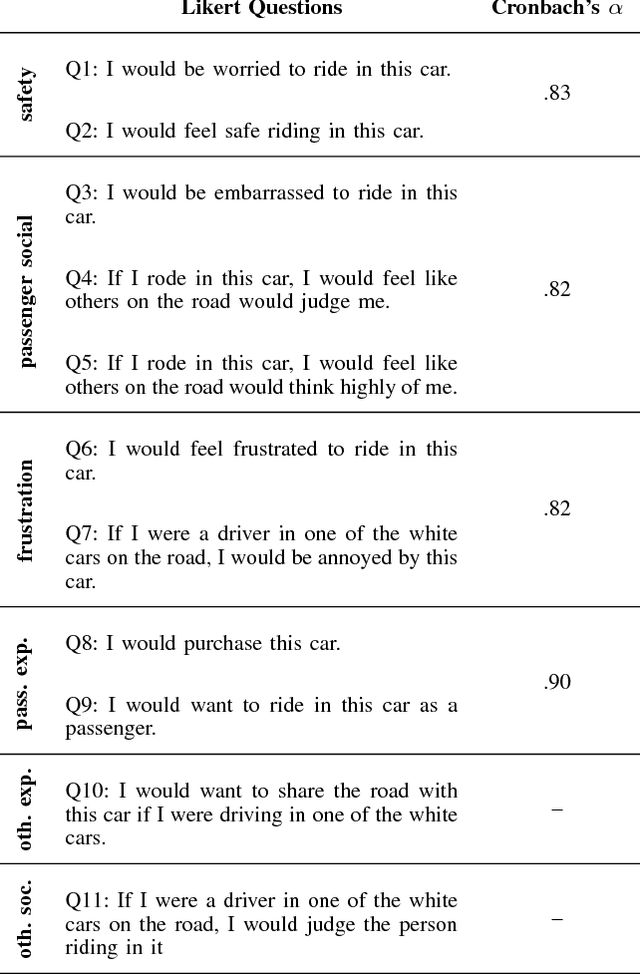

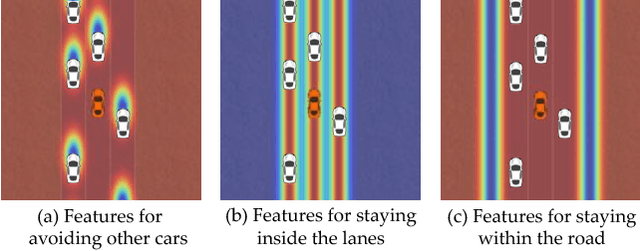

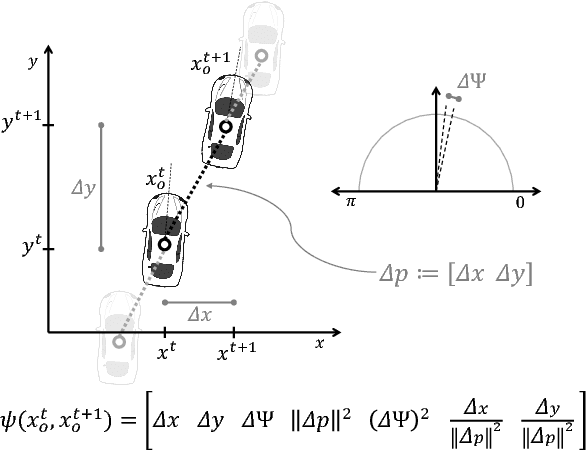

Abstract: Autonomous cars can perform poorly for many reasons. They may have perception issues, incorrect dynamics models, be unaware of obscure rules of human traffic systems, or follow certain rules too conservatively. Regardless of the exact failure mode of the car, the human drivers around the car are often behaving correctly. For example, even if the car does not know that it should pull over when an ambulance races by, other humans on the road will know and will pull over. We propose to make socially cohesive cars that leverage the behavior of nearby human drivers to act in ways that are safer and more socially acceptable. The simple intuition behind our algorithm is that if all the humans are consistently behaving in a particular way, then the autonomous car probably should too. We analyze the performance of our algorithm in a variety of scenarios and conduct a user study to assess people's attitudes towards socially cohesive cars. We find that people are surprisingly tolerant of mistakes that cohesive cars might make in order to get the benefits of driving in a car with safer, or even just more socially acceptable, behavior.
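
The intuition that the car should follow consistent human behavior can be written as a simple consensus rule. The abstract does not describe the actual algorithm, so this is only a sketch of the stated intuition; the function name `cohesive_action`, the discrete action labels, and both thresholds are assumptions.

```python
from collections import Counter

def cohesive_action(planned_action, observed_actions,
                    min_agreement=0.8, min_drivers=3):
    """Follow the crowd only when enough nearby drivers agree on one action.

    planned_action: what the car's own planner would do.
    observed_actions: labels for what nearby human drivers are doing.
    The thresholds are illustrative assumptions, not values from the paper.
    """
    if len(observed_actions) < min_drivers:
        return planned_action  # too little evidence to override the planner
    action, count = Counter(observed_actions).most_common(1)[0]
    if count / len(observed_actions) >= min_agreement:
        return action  # humans are consistent, so defer to them
    return planned_action

# Ambulance scenario: the planner would keep its lane, but every human pulls over.
decision = cohesive_action("keep_lane", ["pull_over"] * 5)
mixed = cohesive_action("keep_lane", ["pull_over", "keep_lane", "slow_down", "keep_lane"])
sparse = cohesive_action("keep_lane", ["pull_over"])
```

Requiring both a minimum number of drivers and a high agreement rate keeps the car from copying a single erratic driver.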
