Neil Fendley

A Systematic Review of Poisoning Attacks Against Large Language Models

Jun 06, 2025

Abstract:With the widespread availability of pretrained Large Language Models (LLMs) and their training datasets, concerns about the security risks associated with their usage have increased significantly. One of these security risks is the threat of LLM poisoning attacks, where an attacker modifies some part of the LLM training process to cause the LLM to behave in a malicious way. As an emerging area of research, the current frameworks and terminology for LLM poisoning attacks are derived from earlier classification poisoning literature and are not fully equipped for generative LLM settings. We conduct a systematic review of published LLM poisoning attacks to clarify the security implications and address inconsistencies in terminology across the literature. We propose a comprehensive poisoning threat model that can be used to categorize a wide range of LLM poisoning attacks. The poisoning threat model includes four poisoning attack specifications that define the logistics and manipulation strategies of an attack as well as six poisoning metrics used to measure key characteristics of an attack. Under our proposed framework, we organize our discussion of published LLM poisoning literature along four critical dimensions of LLM poisoning attacks: concept poisons, stealthy poisons, persistent poisons, and poisons for unique tasks, to better understand the current landscape of security risks.

Mirror Mirror on the Wall, Have I Forgotten it All? A New Framework for Evaluating Machine Unlearning

May 13, 2025

Abstract:Machine unlearning methods take a model trained on a dataset and a forget set, then attempt to produce a model as if it had only been trained on the examples not in the forget set. We empirically show that an adversary is able to distinguish between a mirror model (a control model produced by retraining without the data to forget) and a model produced by an unlearning method across representative unlearning methods from the literature. We build distinguishing algorithms based on evaluation scores in the literature (i.e. membership inference scores) and Kullback-Leibler divergence. We propose a strong formal definition for machine unlearning called computational unlearning. Computational unlearning is defined as the inability for an adversary to distinguish between a mirror model and a model produced by an unlearning method. If the adversary cannot guess better than random (except with negligible probability), then we say that an unlearning method achieves computational unlearning. Our computational unlearning definition provides theoretical structure to prove unlearning feasibility results. For example, our computational unlearning definition immediately implies that there are no deterministic computational unlearning methods for entropic learning algorithms. We also explore the relationship between differential privacy (DP)-based unlearning methods and computational unlearning, showing that DP-based approaches can satisfy computational unlearning at the cost of an extreme utility collapse. These results demonstrate that current methodology in the literature fundamentally falls short of achieving computational unlearning. We conclude by identifying several open questions for future work.
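The distinguishing game described in this abstract can be illustrated with a small sketch. This is not the authors' algorithm: the function names, the fixed threshold, and the use of plain output distributions as lists are all assumptions made for illustration. It shows a KL-divergence-based guess and the adversary's advantage over random guessing.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as equal-length lists."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )

def guess_is_unlearned(candidate, reference, threshold):
    """Hypothetical distinguisher: guess 'unlearned' when the candidate's
    output distribution diverges from a reference mirror model's output
    by more than the (assumed) threshold."""
    return kl_divergence(candidate, reference) > threshold

def advantage(guesses, truths):
    """Distance of the adversary's accuracy from random guessing (1/2).
    An advantage near zero is what computational unlearning asks for."""
    correct = sum(g == t for g, t in zip(guesses, truths))
    return abs(correct / len(truths) - 0.5)
```

Under these assumptions, an unlearning method would be judged by how close `advantage` stays to zero over many trials, rather than by any single divergence value.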

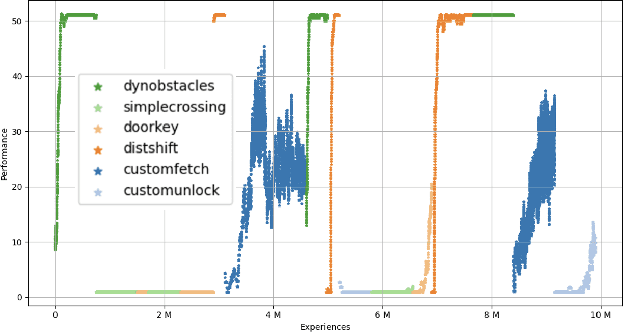

Continual Reinforcement Learning with TELLA

Aug 08, 2022

Abstract:Training reinforcement learning agents that continually learn across multiple environments is a challenging problem. This is made more difficult by a lack of reproducible experiments and standard metrics for comparing different continual learning approaches. To address this, we present TELLA, a tool for the Test and Evaluation of Lifelong Learning Agents. TELLA provides specified, reproducible curricula to lifelong learning agents while logging detailed data for evaluation and standardized analysis. Researchers can define and share their own curricula over various learning environments or run against a curriculum created under the DARPA Lifelong Learning Machines (L2M) Program.

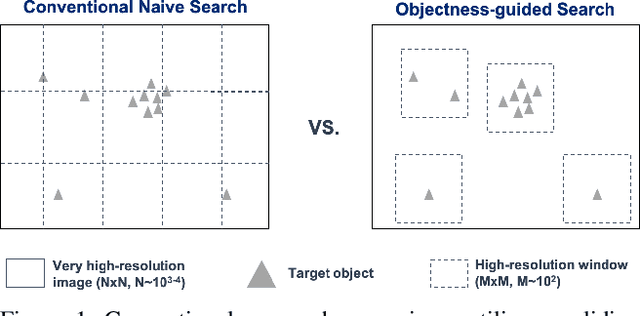

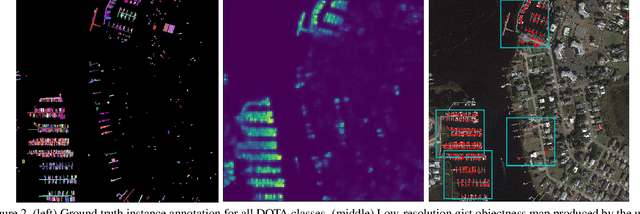

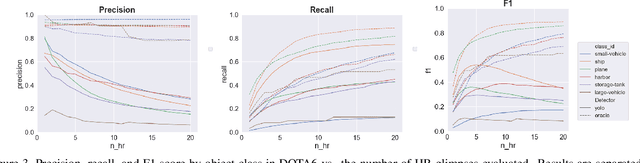

Objectness-Guided Open Set Visual Search and Closed Set Detection

Dec 11, 2020

Abstract:Searching for small objects in large images is currently challenging for deep learning systems, but is a task with numerous applications including remote sensing and medical imaging. Thorough scanning of very large images is computationally expensive, particularly at resolutions sufficient to capture small objects. The smaller an object of interest, the more likely it is to be obscured by clutter or otherwise deemed insignificant. We examine these issues in the context of two complementary problems: closed-set object detection and open-set target search. First, we present a method for predicting pixel-level objectness from a low resolution gist image, which we then use to select regions for subsequent evaluation at high resolution. This approach has the benefit of not being fixed to a predetermined grid, allowing fewer costly high-resolution glimpses than existing methods. Second, we propose a novel strategy for open-set visual search that seeks to find all objects in an image of the same class as a given target reference image. We interpret both detection problems through a probabilistic, Bayesian lens, whereby the objectness maps produced by our method serve as priors in a maximum-a-posteriori approach to the detection step. We evaluate the end-to-end performance of both the combination of our patch selection strategy with this target search approach and the combination of our patch selection strategy with standard object detection methods. Both our patch selection and target search approaches are seen to significantly outperform baseline strategies.

Random Projections for Adversarial Attack Detection

Dec 11, 2020

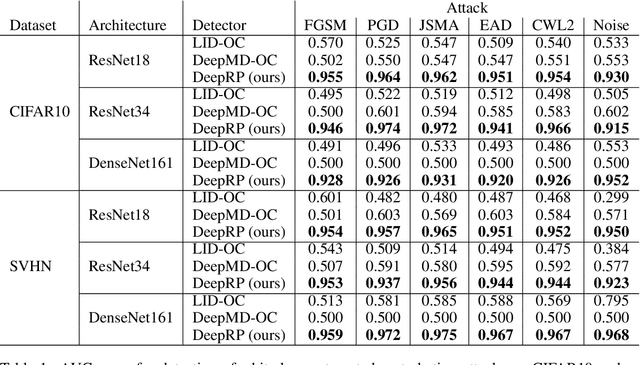

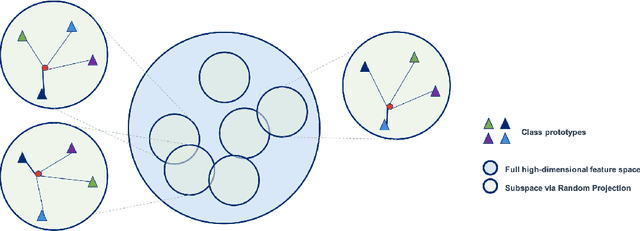

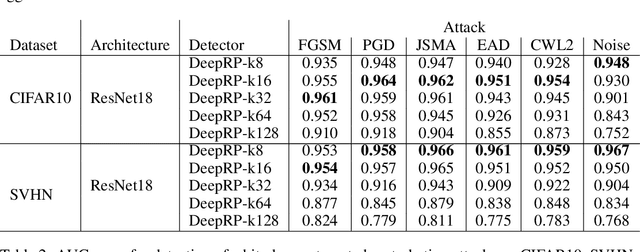

Abstract:Whilst adversarial attack detection has received considerable attention, it remains a fundamentally challenging problem from two perspectives. First, while threat models can be well-defined, attacker strategies may still vary widely within those constraints. Therefore, detection should be considered as an open-set problem, standing in contrast to most current detection strategies. These methods take a closed-set view and train binary detectors, thus biasing detection toward attacks seen during detector training. Second, information is limited at test time and confounded by nuisance factors including the label and underlying content of the image. Many of the current high-performing techniques use training sets for dealing with some of these issues, but can be limited by the overall size and diversity of those sets during the detection step. We address these challenges via a novel strategy based on random subspace analysis. We present a technique that makes use of special properties of random projections, whereby we can characterize the behavior of clean and adversarial examples across a diverse set of subspaces. We then leverage the self-consistency (or inconsistency) of model activations to discern clean from adversarial examples. Performance evaluation demonstrates that our technique outperforms ($>0.92$ AUC) competing state of the art (SOTA) attack strategies, while remaining truly agnostic to the attack method itself. It also requires significantly less training data, composed only of clean examples, when compared to competing SOTA methods, which achieve only chance performance, when evaluated in a more rigorous testing scenario.
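As a rough illustration of measuring consistency across random subspaces, the sketch below projects an activation vector into several random Gaussian subspaces and reports how often the nearest class centroid agrees. It is not the method from the paper: the nearest-centroid vote, the `centroids` dictionary, and all parameter values are assumptions chosen to keep the example small.

```python
import random

def random_projection(dim_in, dim_out, rng):
    """Gaussian random projection matrix with dim_out rows and dim_in columns."""
    scale = 1.0 / dim_out ** 0.5
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(dim_in)]
            for _ in range(dim_out)]

def project(matrix, vec):
    """Matrix-vector product using plain lists."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def nearest_label(point, centroids):
    """Label of the centroid closest to point (squared Euclidean distance)."""
    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sqdist(point, centroids[label]))

def consistency_score(vec, centroids, n_subspaces=20, dim_out=4, seed=0):
    """Fraction of random subspaces that agree on the most common label.
    Low agreement is treated here as a (hypothetical) sign of an
    adversarial input."""
    rng = random.Random(seed)
    votes = []
    for _ in range(n_subspaces):
        m = random_projection(len(vec), dim_out, rng)
        projected = {label: project(m, c) for label, c in centroids.items()}
        votes.append(nearest_label(project(m, vec), projected))
    top = max(set(votes), key=votes.count)
    return votes.count(top) / len(votes)
```

Only clean examples are needed to build the centroids in this sketch, which mirrors the abstract's point that the detector does not have to see attacks during training.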

Jacks of All Trades, Masters Of None: Addressing Distributional Shift and Obtrusiveness via Transparent Patch Attacks

May 01, 2020

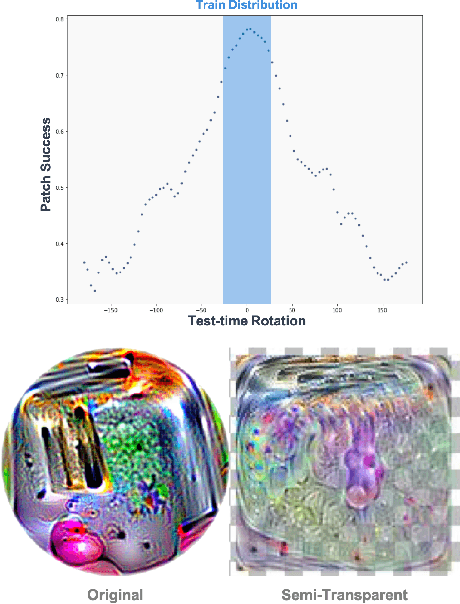

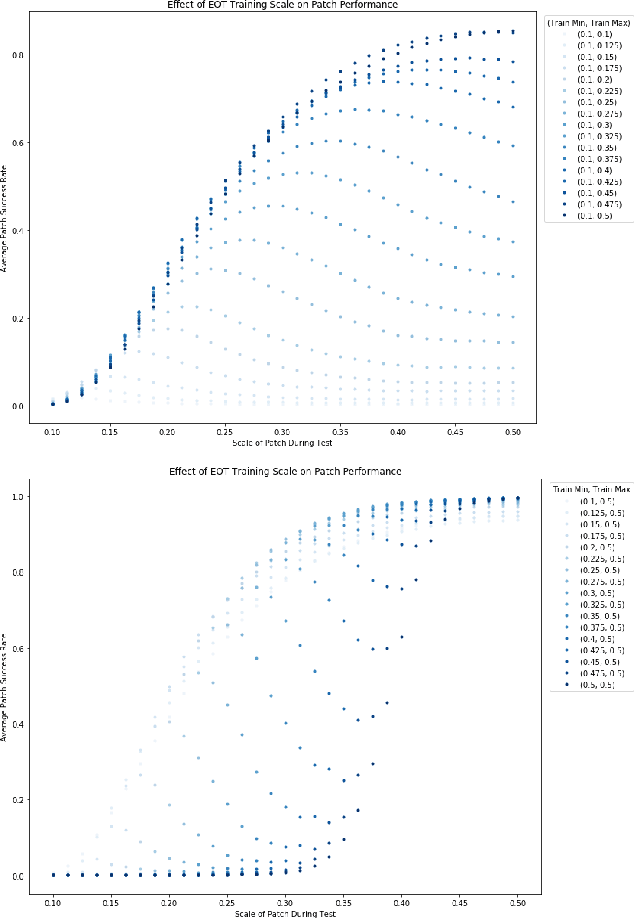

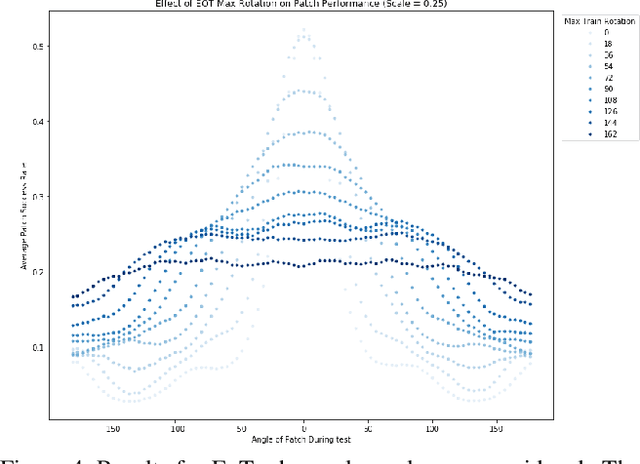

Abstract:We focus on the development of effective adversarial patch attacks and -- for the first time -- jointly address the antagonistic objectives of attack success and obtrusiveness via the design of novel semi-transparent patches. This work is motivated by our pursuit of a systematic performance analysis of patch attack robustness with regard to geometric transformations. Specifically, we first elucidate a) key factors underpinning patch attack success and b) the impact of distributional shift between training and testing/deployment when cast under the Expectation over Transformation (EoT) formalism. By focusing our analysis on three principal classes of transformations (rotation, scale, and location), our findings provide quantifiable insights into the design of effective patch attacks and demonstrate that scale, among all factors, significantly impacts patch attack success. Working from these findings, we then focus on addressing how to overcome the principal limitations of scale for the deployment of attacks in real physical settings: namely the obtrusiveness of large patches. Our strategy is to turn to the novel design of irregularly-shaped, semi-transparent partial patches which we construct via a new optimization process that jointly addresses the antagonistic goals of mitigating obtrusiveness and maximizing effectiveness. Our study -- we hope -- will help encourage more focus in the community on the issues of obtrusiveness, scale, and success in patch attacks.
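For reference, the Expectation over Transformation objective for a patch attack is commonly written as an expectation over sampled images, transformations, and locations. The notation below follows the usual adversarial patch formulation and is an assumption for illustration, not taken from this abstract: $p$ is the patch, $A$ is the operator that applies the patch to image $x$ at location $l$ under transformation $t$, and $\hat{y}$ is the target class.

$$\hat{p} = \arg\max_{p} \; \mathbb{E}_{x \sim X,\, t \sim T,\, l \sim L} \left[ \log \Pr\left(\hat{y} \mid A(p, x, l, t)\right) \right]$$

In this notation, the distributional shift discussed in the abstract corresponds to the transformation distribution $T$ (rotation, scale) and location distribution $L$ at deployment differing from the ones sampled during patch optimization.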

The TrojAI Software Framework: An OpenSource tool for Embedding Trojans into Deep Learning Models

Mar 13, 2020

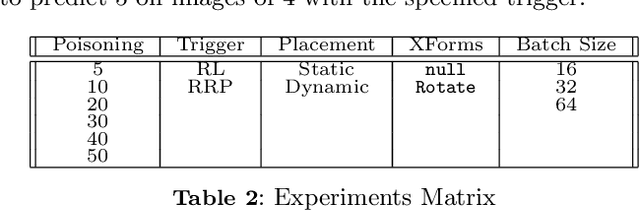

Abstract:In this paper, we introduce the TrojAI software framework, an open source set of Python tools capable of generating triggered (poisoned) datasets and associated deep learning (DL) models with trojans at scale. We utilize the developed framework to generate a large set of trojaned MNIST classifiers, as well as demonstrate the capability to produce a trojaned reinforcement-learning model using vector observations. Results on MNIST show that the nature of the trigger, training batch size, and dataset poisoning percentage all affect successful embedding of trojans. We test Neural Cleanse against the trojaned MNIST models and successfully detect anomalies in the trained models approximately $18\%$ of the time. Our experiments and workflow indicate that the TrojAI software framework will enable researchers to easily understand the effects of various configurations of the dataset and training hyperparameters on the generated trojaned deep learning model, and can be used to rapidly and comprehensively test new trojan detection methods.
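The idea of a triggered (poisoned) dataset can be sketched in a few lines. The code below does not use the TrojAI API; the function names, the corner-patch trigger, and the parameters are hypothetical and stand in for the general technique: stamp a trigger on a chosen fraction of images and relabel them to an attacker-chosen class.

```python
import random

def stamp_trigger(image, size=2, value=255):
    """Return a copy of a 2D image (list of rows) with a square trigger
    of the given size written into the bottom-right corner."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped

def poison_dataset(images, labels, fraction, target_label, seed=0):
    """Stamp the trigger on a random fraction of the images and relabel
    those examples to target_label. Returns new images, new labels, and
    the set of poisoned indices; the inputs are left unmodified."""
    rng = random.Random(seed)
    n_poison = round(fraction * len(images))
    chosen = set(rng.sample(range(len(images)), n_poison))
    out_images, out_labels = [], []
    for i, (img, lab) in enumerate(zip(images, labels)):
        if i in chosen:
            out_images.append(stamp_trigger(img))
            out_labels.append(target_label)
        else:
            out_images.append([row[:] for row in img])
            out_labels.append(lab)
    return out_images, out_labels, chosen
```

The `fraction` argument plays the role of the dataset poisoning percentage that the abstract reports as one of the factors affecting whether a trojan embeds successfully.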

Adversarial Examples in Remote Sensing

May 28, 2018

Abstract:This paper considers attacks against machine learning algorithms used in remote sensing applications, a domain that presents a suite of challenges that are not fully addressed by current research focused on natural image data such as ImageNet. In particular, we present a new study of adversarial examples in the context of satellite image classification problems. Using a recently curated data set and associated classifier, we provide a preliminary analysis of adversarial examples in settings where the targeted classifier is permitted multiple observations of the same location over time. While our experiments to date are purely digital, our problem setup explicitly incorporates a number of practical considerations that a real-world attacker would need to take into account when mounting a physical attack. We hope this work provides a useful starting point for future studies of potential vulnerabilities in this setting.

Functional Map of the World

Apr 13, 2018

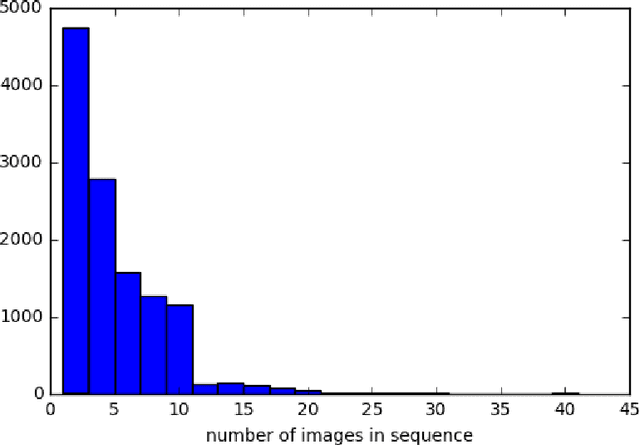

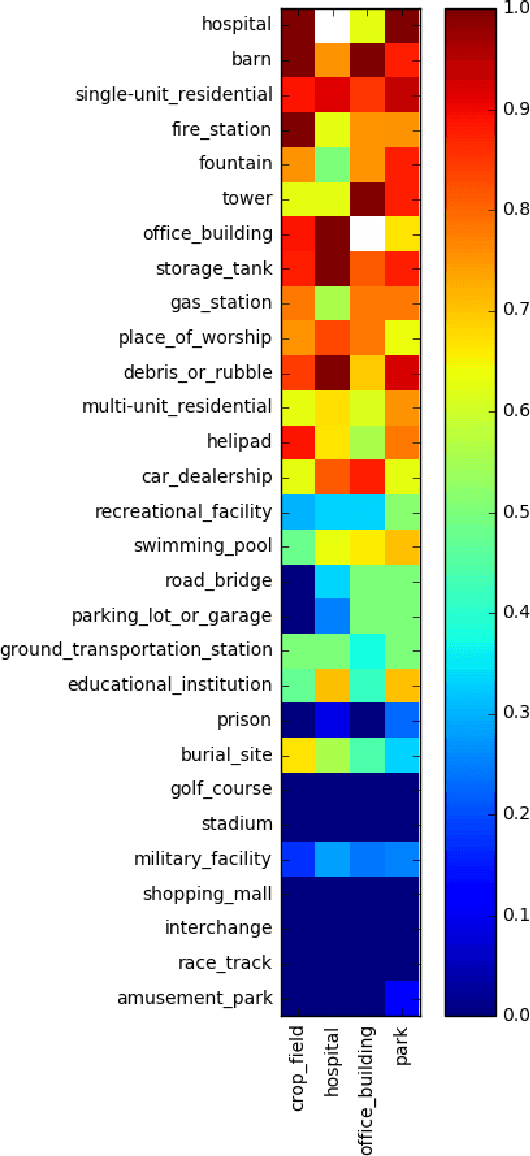

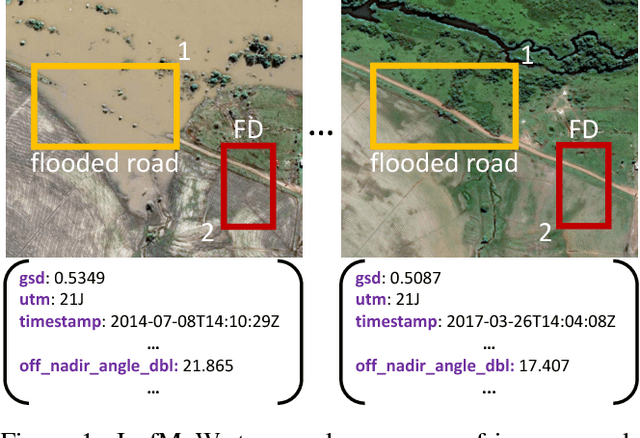

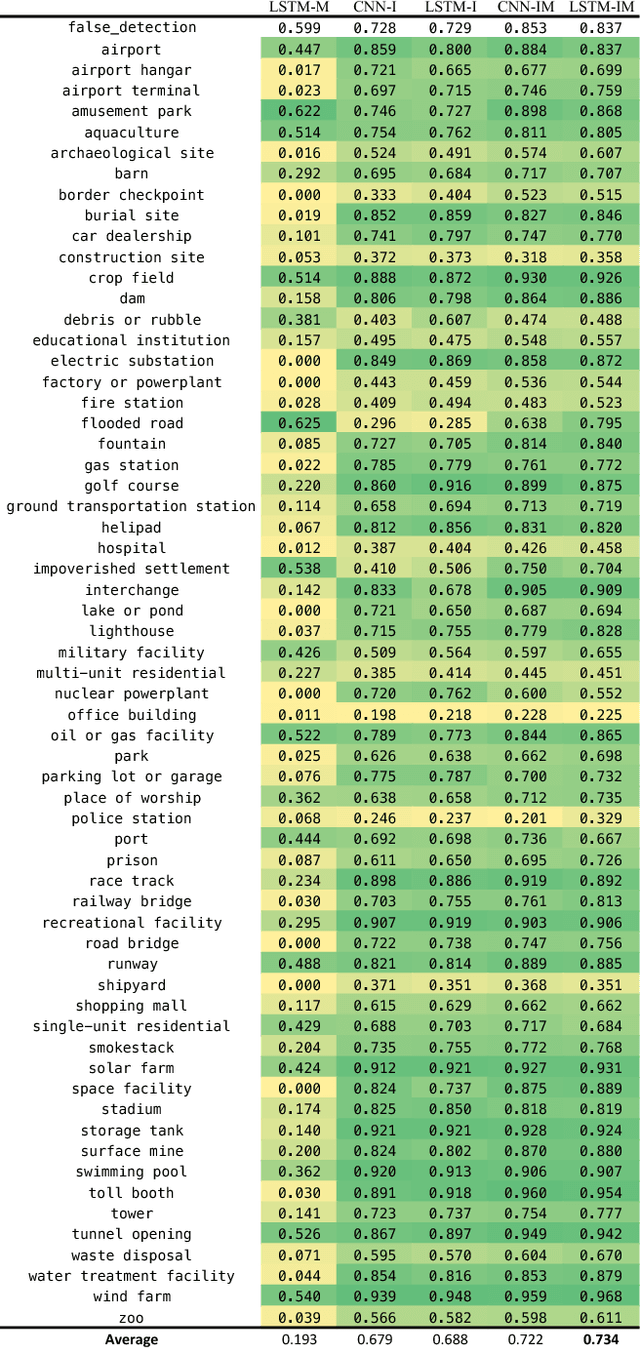

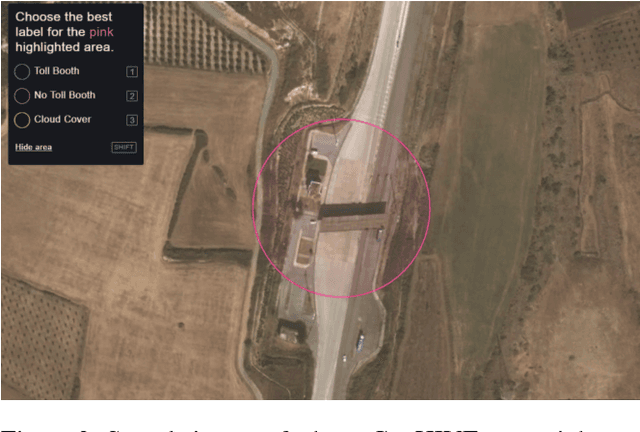

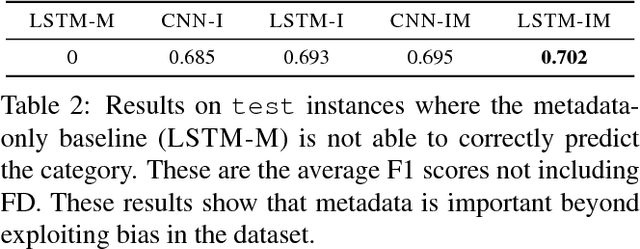

Abstract:We present a new dataset, Functional Map of the World (fMoW), which aims to inspire the development of machine learning models capable of predicting the functional purpose of buildings and land use from temporal sequences of satellite images and a rich set of metadata features. The metadata provided with each image enables reasoning about location, time, sun angles, physical sizes, and other features when making predictions about objects in the image. Our dataset consists of over 1 million images from over 200 countries. For each image, we provide at least one bounding box annotation containing one of 63 categories, including a "false detection" category. We present an analysis of the dataset along with baseline approaches that reason about metadata and temporal views. Our data, code, and pretrained models have been made publicly available.
