Natsuki Yamanobe

AIST

Component Selection for Craft Assembly Tasks

Jul 19, 2024

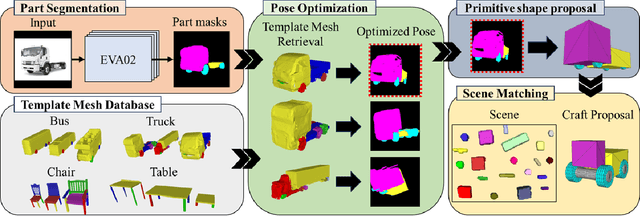

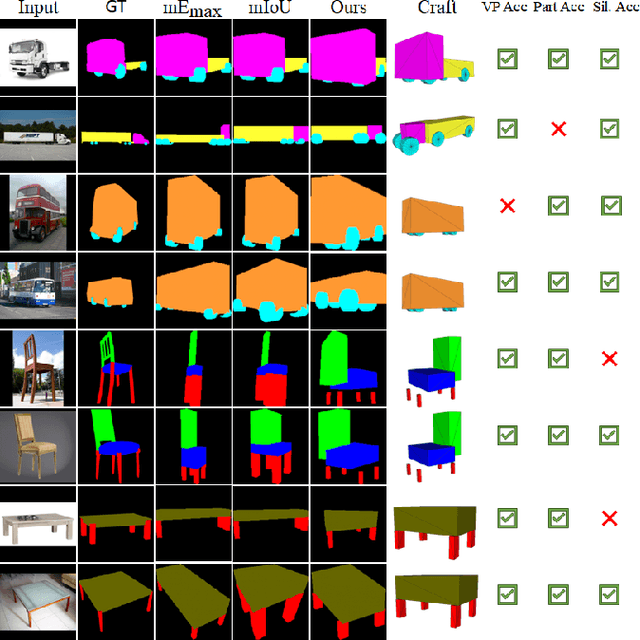

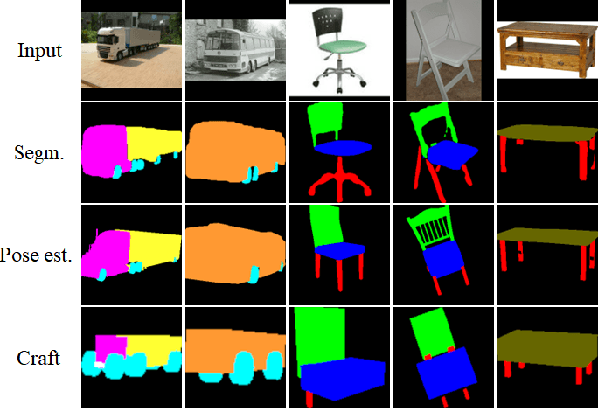

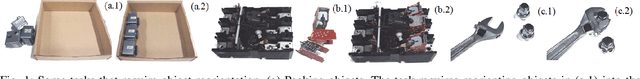

Abstract: Inspired by traditional handmade crafts, where a person improvises assemblies based on the available objects, we formally introduce the Craft Assembly Task. It is a robotic assembly task that involves building an accurate representation of a given target object using the available objects, which do not directly correspond to its parts. In this work, we focus on selecting the subset of available objects for the final craft, when the given input is an RGB image of the target in the wild. We use a mask segmentation neural network to identify visible parts, followed by retrieving labelled template meshes. These meshes undergo pose optimization to determine the most suitable template. Then, we propose to simplify the parts of the transformed template mesh to primitive shapes like cuboids or cylinders. Finally, we design a search algorithm to find correspondences in the scene based on local and global proportions. We develop baselines for comparison that consider all possible combinations, and choose the highest scoring combination for common metrics used in foreground maps and mask accuracy. Our approach achieves comparable results to the baselines for two different scenes, and we show qualitative results for an implementation in a real-world scenario.

Assembly Planning by Recognizing a Graphical Instruction Manual

Jun 01, 2021

Abstract: This paper proposes a robot assembly planning method that automatically reads graphical instruction manuals designed for humans. Essentially, the method generates an Assembly Task Sequence Graph (ATSG) by recognizing a graphical instruction manual. An ATSG is a graph that describes the assembly task procedure; it is built by detecting the types of parts included in the instruction images, automatically completing missing information, and automatically correcting detection errors. To build an ATSG, the proposed method first extracts the information of the parts contained in each image of the graphical instruction manual. Then, by using the extracted part information, it estimates the proper work motions and tools for the assembly task. After that, the method builds an ATSG by considering the relationship between the previous and following images, which makes it possible to estimate the undetected parts caused by occlusion using the information of the entire image series. Finally, by collating the total number of each part with the generated ATSG, the excess or deficiency of parts is investigated, and task procedures are removed or added according to those parts. In the experiment section, we build an ATSG by applying the proposed method to a graphical instruction manual for a chair and demonstrate that the action sequences found in the ATSG can be executed by a dual-arm robot. The results show that the proposed method is effective and simplifies robot teaching in automatic assembly.

Deep Learning Scooping Motion using Bilateral Teleoperations

Oct 24, 2018

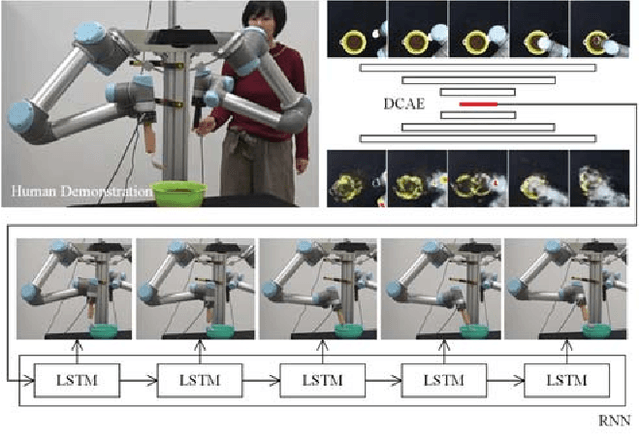

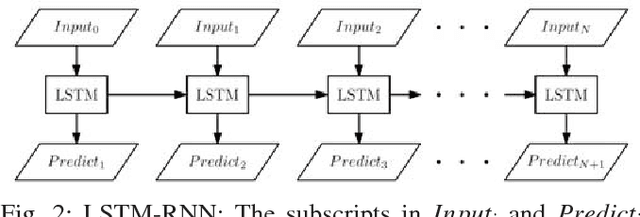

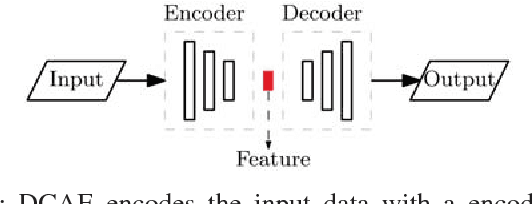

Abstract: We present a bilateral teleoperation system for task learning and robot motion generation. Our system includes a bilateral teleoperation platform and deep learning software. The deep learning software refers to human demonstration using the bilateral teleoperation platform to collect visual images and robotic encoder values. It leverages the datasets of images and robotic encoder information to learn about the inter-modal correspondence between visual images and robot motion. In detail, the deep learning software uses a combination of Deep Convolutional Auto-Encoders (DCAE) over image regions, and Recurrent Neural Network with Long Short-Term Memory units (LSTM-RNN) over robot motor angles, to learn motion taught by human teleoperation. The learnt models are used to predict new motion trajectories for similar tasks. Experimental results show that our system has the adaptivity to generate motion for similar scooping tasks. Detailed analysis is performed based on failure cases of the experimental results. Some insights into what the system can and cannot do are summarized.

Tool Exchangeable Grasp/Assembly Planner

May 23, 2018

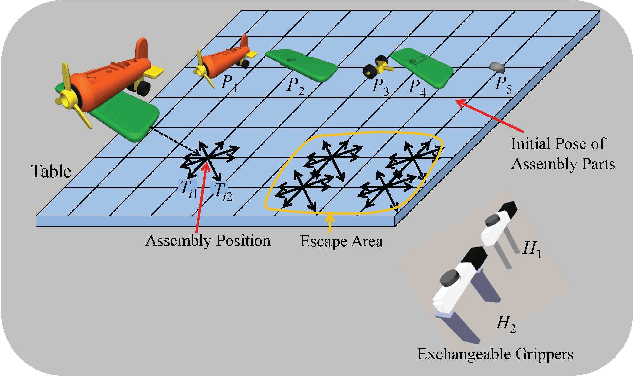

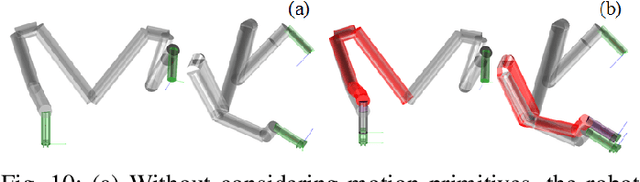

Abstract: This paper proposes a novel assembly planner for a manipulator which can simultaneously plan assembly sequence, robot motion, grasping configuration, and exchange of grippers. Our assembly planner assumes multiple grippers and can automatically select a feasible one to assemble a part. For a given AND/OR graph of an assembly task, we consider generating the assembly graph from which assembly motion of a robot can be planned. The edges of the assembly graph are composed of three kinds of paths, i.e., transfer/assembly paths, transit paths and tool exchange paths. In this paper, we first explain the proposed method for planning assembly motion sequence including the function of gripper exchange. Finally, the effectiveness of the proposed method is confirmed through some numerical examples and a physical experiment.

* This is to appear in Int. Conf. on Intelligent Autonomous Systems

A Mid-level Planning System for Object Reorientation

Aug 10, 2016

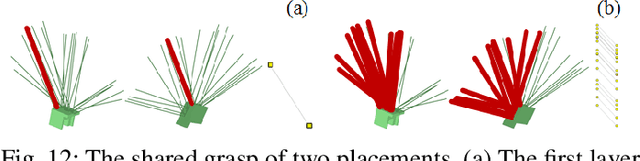

Abstract: This paper presents a mid-level planning system for object reorientation. It includes a grasp planner, a placement planner, and a regrasp sequence solver. Given the initial and goal poses of an object, the mid-level planning system finds a sequence of hand configurations that reorient the object from the initial pose to the goal pose. This mid-level planning system is open to low-level motion planning algorithms by providing two end-effector poses as the input. It is also open to high-level symbolic planners by providing interface functions like placing an object to a given position at a given rotation. The planning system is demonstrated with several simulation examples and real-robot executions using a Kawada Hiro robot and Robotiq 85 grippers.
